Billion Triples Challenge

- NxParser is a nice tool that's easy to use and is faster than using awk to extract portions of N-QUADS files.

- Our earlier notes on characterizing a list of RDF node URIs come in handy in our exploration.

This page describes my first foray into the Billion Triples Challenge crawls. These are preliminaries for comparing Prizms' results to the rest of the Linked Data that was created using other methods.

Processing preliminaries:

- Data i.e. "givens" from Andreas Harth, with a peek at the n-quads provided.

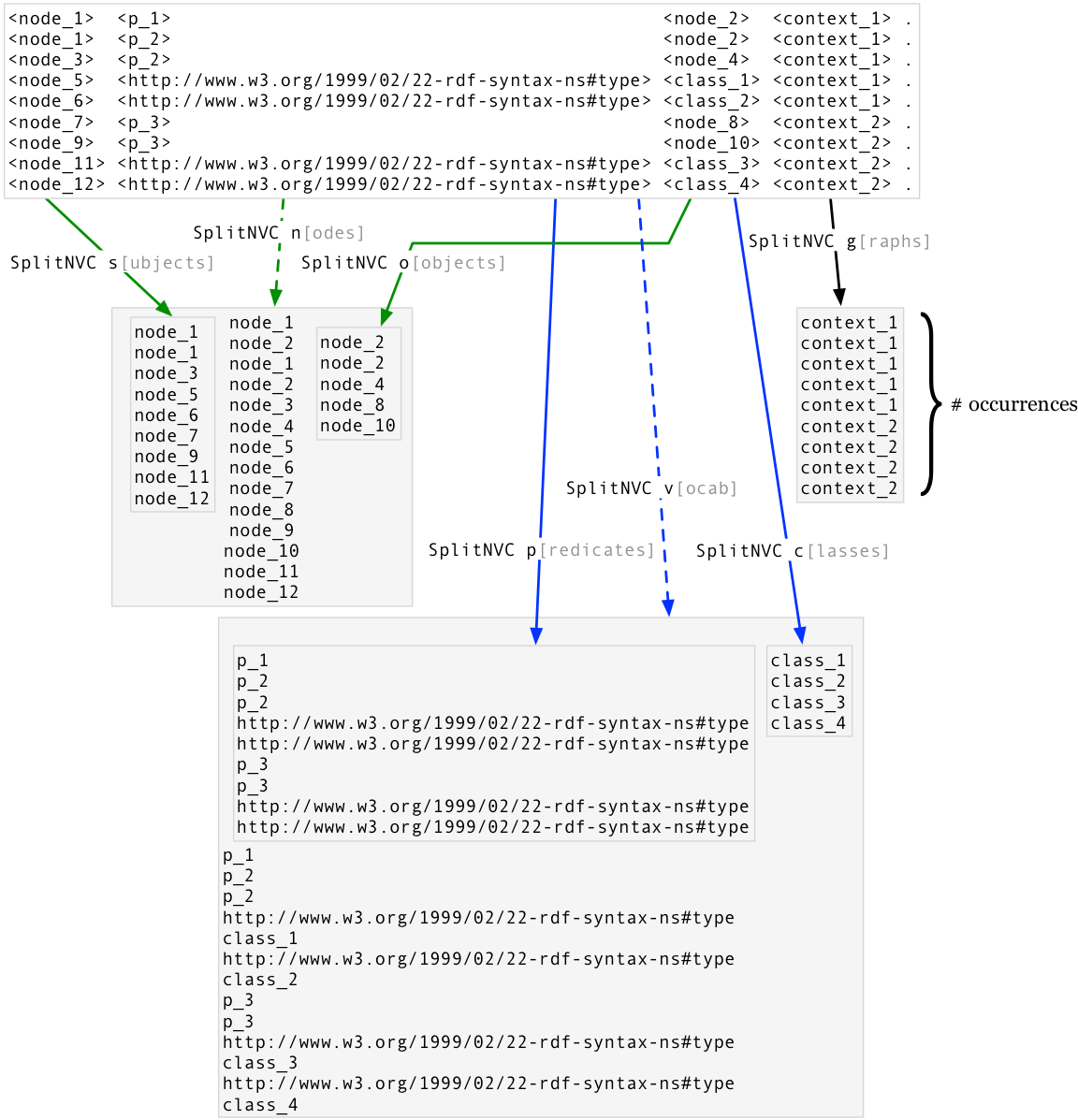

- Splitting N-Quads into different URI "occurrence-sets" with SplitNVC.

- Partitioning URI "occurrence-sets" by domain and Pay Level Domain.

- URI Treemap applies to an occurrence-set, whether partitioned by domain/PLD or not.

We break down URI occurrences in the N-Quads into sets:

- Contexts

- Vocabulary (both properties and classes)

- Nodes (subjects and non-class, URI objects)

Holistic analyses:

Data (i.e. 'givens')

Four crawls are available; a fifth is under way as of March 2014.

- Crawled annually back to 2003, but those crawls are not available.

- 2008, 450 MQ / 160GB (uncompressed)

- As reported in Andreas' thesis; not readily available.

- 2009, 1.4BQ / 17GB (page)

- btc-2009-chunk-000.gz: 202MB => 2.4GB (x 116 chunks = 275GB)

- 2010, 3.2BQ / 27GB (page)

- also has 302/303 redirects

- 2011, 2BQ / 20GB (page)

- also has squid access log (format)

- also has 302/303 redirects

- 2012, 1.4BQ / 17GB (page)

- be careful, file names collide!

- also has squid access log

- also has 302/303 redirects

- 2013 - (There was no 2013 crawl, confirmed by Andreas Harth via email on 22 March 2014)

- 2014 - (announcement, page, challenge)

- 2015 - winners, but no crawl?

btc-2009-chunk-000.gz starts out as S P O C:

<Date:1988> <http://www.aktors.org/ontology/portal#year-of> "1988" <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .

<Date:1988> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.aktors.org/ontology/portal#Calendar-Date> <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .

<Date:1991> <http://www.aktors.org/ontology/portal#year-of> "1991" <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .

<Date:1991> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.aktors.org/ontology/portal#Calendar-Date> <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .

<Date:1997> <http://www.aktors.org/ontology/portal#year-of> "1997" <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .

<Date:1997> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.aktors.org/ontology/portal#Calendar-Date> <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .

<Date:2000> <http://www.aktors.org/ontology/portal#year-of> "2000" <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .

<Date:2000> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.aktors.org/ontology/portal#Calendar-Date> <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .

<Date:Apr2001> <http://www.aktors.org/ontology/portal#month-of> "4" <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications7.rdf> .

<Date:Apr2001> <http://www.aktors.org/ontology/portal#year-of> "2001" <http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications7.rdf> .

Datatyped literals look like S P O^^D C:

<http://140.203.154.158:8081/browse/PLUGIN-9> <http://baetle.googlecode.com/svn/ns/#due_date> "2008-06-01T23:00:00Z"^^<http://www.w3.org/2001/XMLSchema#dateTime> <http://140.203.154.158:8081/RDFDump/RDFDump.rdf> .

<http://140.203.154.158:8081/browse/PLUGIN-9> <http://baetle.googlecode.com/svn/ns/#updated> "2008-05-30T11:44:17Z"^^<http://www.w3.org/2001/XMLSchema#dateTime> <http://140.203.154.158:8081/RDFDump/RDFDump.rdf> .

<http://140.203.154.158:8081/browse/PLUGIN-9> <http://purl.org/dc/terms/created> "2008-05-30T11:44:17Z"^^<http://www.w3.org/2001/XMLSchema#dateTime> <http://140.203.154.158:8081/RDFDump/RDFDump.rdf> .

<http://140.203.154.158:8083/browse/RDFBUG-6> <http://baetle.googlecode.com/svn/ns/#created> "2008-06-16T16:01:51Z"^^<http://www.w3.org/2001/XMLSchema#dateTime> <http://140.203.154.158:8083/RDFDump/RDFDump.rdf> .
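The two layouts above (S P O C and S P O^^D C) can be told apart mechanically. Here is a minimal Python sketch, not NxParser itself. It assumes one quad per line, no blank nodes, no language tags, and no escaped quotes inside literals; `parse_quad` is a hypothetical name used only for illustration.

```python
import re

# Matches "<s> <p> <o-or-literal> <c> ." for the simple cases shown above.
QUAD = re.compile(
    r'^<([^>]*)>\s+'                                   # subject URI
    r'<([^>]*)>\s+'                                    # predicate URI
    r'(?:<([^>]*)>|"([^"]*)"(?:\^\^<([^>]*)>)?)\s+'    # URI object, or literal with optional datatype
    r'<([^>]*)>\s*\.\s*$'                              # context URI and the closing dot
)

def parse_quad(line):
    """Return a dict describing the quad, or None if the line does not match."""
    m = QUAD.match(line)
    if m is None:
        return None
    s, p, o_uri, o_lit, dtype, c = m.groups()
    return {
        "s": s,
        "p": p,
        "o": o_uri if o_uri is not None else o_lit,
        "o_is_uri": o_uri is not None,
        "datatype": dtype,   # None unless the literal carried ^^<...>
        "c": c,
    }

if __name__ == "__main__":
    sample = ('<Date:1988> <http://www.aktors.org/ontology/portal#year-of> "1988" '
              '<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .')
    print(parse_quad(sample))
```

For the real crawls a proper parser such as NxParser is the safer choice, since literals in the wild contain escapes and language tags that this pattern ignores.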

The fourth element of an N-Quad is the context: the URI that was dereferenced to obtain the S P O. Here's a summary of the contexts in each crawl; after it, we describe how we got it.

- 2009, 1.4BQ / 17GB (page)

- 3.2 GB gz context occurrences (i.e. duplicates)

- Crawled 1,151,383,272 quad statements from 50,606,616 contexts within 685,630 domains within 129,500 PLDs

- Top PLD: dbpedia.org with 251,672,363 triples

- 2010, 3.2BQ / 27GB (page)

- 820 MB gz context occurrences (i.e. duplicates)

- Crawled 3,171,793,030 quad statements from 8,123,855 contexts within 443,530 domains within 18,596 PLDs

- Top PLD: kaufkauf.net with 1,616,059,815 triples

- 2011, 2BQ / 20GB (page)

- 648 MB gz context occurrences (i.e. duplicates)

- Crawled 2,168,395,469 quad statements from 7,377,163 contexts within 240,053 domains within 790 PLDs

- Top PLD: hi5.com with 1,367,024,669 triples

- 2012, 1.4BQ / 17GB (page) -- be careful, file names collide!

- 431 MB gz context occurrences (i.e. duplicates)

- Crawled 1,436,545,545 quad statements from 9,258,355 contexts within 108,010 domains within 837 PLDs

- Top PLD: data.gov.uk with 751,352,061 triples

- 2013 - (There was no 2013 crawl)

- 2014, (in progress)

We can get the context occurrences by stripping away the SPO with gunzip -c ../source/*.gz | awk '{print $(NF-1)}' | gzip > c.gz:

$ gunzip -c manual/c.gz | head

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf>

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf>

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf>

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf>

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf>

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf>

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf>

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf>

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications7.rdf>

<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications7.rdf>
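The awk one-liner works because every quad ends in ` .`, so the context is always the second-to-last whitespace-separated field, even when a literal contains spaces. The same idea as a minimal Python sketch follows; the function names and file paths are hypothetical, and it assumes well-formed lines.

```python
import gzip

def context_of(line):
    """Return the second-to-last whitespace-separated field, like awk's $(NF-1)."""
    fields = line.split()
    return fields[-2] if len(fields) >= 2 else None

def write_contexts(src_path, dst_path):
    """Stream a gzipped N-Quads file and write one context per line, gzipped."""
    with gzip.open(src_path, "rt", encoding="utf-8") as src, \
         gzip.open(dst_path, "wt", encoding="utf-8") as dst:
        for line in src:
            context = context_of(line)
            if context is not None:   # skip blank or truncated lines
                dst.write(context + "\n")
```

Like the awk version, this keeps the angle brackets and every duplicate, so the output is a list of context occurrences rather than distinct contexts.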

gunzip -c ../c.gz | sed 's/^<//;s/>$//' | java edu.rpi.tw.string.uri.TldManager + > counts.csv 2> /dev/null gives unsorted counts:

$ head -3 counts.csv

deambulando.com 9

hodgepodgekitchen.blogspot.com 210

omaralarabi.blogspot.com 225

cat counts.csv | sort -u | sort -k 2 -n -r > counts-sorted.csv sorts by number of occurrences:

$ head counts-sorted.csv

dbpedia.org 251672363

livejournal.com 133266746

rkbexplorer.com 94739389

geonames.org 84896760

mybloglog.com 61339034

sioc-project.org 51552171

qdos.com 23970898

hi5.com 23745914

kanzaki.com 22843734

rdfabout.com 17691303
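The count-then-sort step can be sketched in Python as well. This is not how TldManager works internally: a real Pay Level Domain lookup needs the Public Suffix List, and the tiny `TWO_LABEL_SUFFIXES` set below is a hand-picked stand-in for it, so treat `pld_of` and `count_by_pld` as hypothetical helpers for small experiments.

```python
from collections import Counter
from urllib.parse import urlsplit

# ASSUMPTION: a tiny stand-in for the Public Suffix List, not the real thing.
TWO_LABEL_SUFFIXES = {"co.uk", "gov.uk", "ac.uk", "ne.jp", "com.pt"}

def pld_of(uri):
    """Naively guess the Pay Level Domain of a URI, or None if it has no host."""
    host = urlsplit(uri).hostname
    if not host or "." not in host:
        return None
    if host.replace(".", "").isdigit():
        return host                      # leave IPv4 addresses untouched
    labels = host.split(".")
    keep = 3 if ".".join(labels[-2:]) in TWO_LABEL_SUFFIXES else 2
    return ".".join(labels[-keep:])

def count_by_pld(uris):
    """Count URI occurrences per PLD, highest count first (like sort -k 2 -n -r)."""
    counts = Counter()
    for uri in uris:
        pld = pld_of(uri)
        if pld is not None:
            counts[pld] += 1
    return counts.most_common()

if __name__ == "__main__":
    for pld, n in count_by_pld(["http://www.hyphen.info/rdf/30.xml",
                                "http://www.hyphen.info/rdf/26.xml",
                                "http://www.christiansurfers.net/feed.aspx"]):
        print(pld, n)
```

Because the suffix set is incomplete, hosts under suffixes that are missing from it will be grouped too coarsely; that is a limit of the sketch, not of the numbers reported on this page.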

Find out how many triples come from each URL request with vsr's gunzip -c ../c.gz | sed 's/^<//;s/>$//' | java $BTE_PACKAGE.CharTreeEntry - > char_tree.csv

$ cat char_tree.csv | sort -k 2 -n -r

http://www.hyphen.info/rdf/30.xml 182

http://www.hyphen.info/rdf/26.xml 126

http://www.hyphen.info/rdf/20.xml 86

http://www.hyphen.info/rdf/40.xml 52

http://www.christiansurfers.net/feed.aspx 48

http://www.hyphen.info/rdf/35.xml 38

http://www.hyphen.info/rdf/18.xml 37

Break out into a tree with gunzip -c ../c.gz | sed 's/^<//;s/>$//' | java $BTE_PACKAGE.CharTreeEntry + > char_tree.pretty

$ cat source/contexts.csv

ftp://darthmouth.edu/files/blah1.csv

ftp://darthmouth.edu/files/blah2.csv

mailto:[email protected]

mailto:[email protected]

http://www.hyphen.info/rdf/30.xml

http://www.hyphen.info/rdf/26.xml

http://www.hyphen.info/rdf/20.xml

http://www.hyphen.info/rdf/20.xml

http://www.hyphen.info/rdf/40.xml

http://www.hyphen.info/rdf/40.xml

http://www.hyphen.info/rdf/40.xml

http://www.hyphen.info/rdf/40

http://www.hyphen.info/rdf/40

http://www.christiansurfers.net/feed.aspx

http://www.hyphen.info/rdf/35.xml

http://www.hyphen.info/rdf/18.xml

$ cat source/contexts.csv | java $BTE_PACKAGE.CharTreeEntry +

ftp://darthmouth.edu/files/blah _/2

1.csv 1

2.csv 1

mailto: _/2

[email protected] 1

[email protected] 1

http://www. _/12

hyphen.info/rdf/ _/11

3 _/2

0.xml 1

5.xml 1

2 _/3

6.xml 1

0.xml 2

40 2/3

.xml 3

18.xml 1

christiansurfers.net/feed.aspx 1
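To make the tree output easier to read, here is a minimal Python sketch of a collapsed character trie that prints in a similar shape. It is only modeled on the output above, not on CharTreeEntry's source: we assume that `own/below` means "occurrences ending exactly here / occurrences ending further down", with `_` for zero, and `build_trie` and `render` are hypothetical names.

```python
def new_node():
    return {"own": 0, "through": 0, "kids": {}}

def build_trie(strings):
    """Build a character trie with per-node occurrence counts."""
    root = new_node()
    for s in strings:
        node = root
        for ch in s:
            node = node["kids"].setdefault(ch, new_node())
            node["through"] += 1      # s passes through, or ends at, this node
        node["own"] += 1              # s ends exactly here
    return root

def render(node, depth=0, out=None):
    """Collapse single-child chains and emit one indented line per branch point."""
    if out is None:
        out = []
    for ch, kid in node["kids"].items():
        label = ch
        # Keep walking while nothing ends here and there is only one way on.
        while kid["own"] == 0 and len(kid["kids"]) == 1:
            next_ch, kid = next(iter(kid["kids"].items()))
            label += next_ch
        below = kid["through"] - kid["own"]
        if below == 0:
            count = str(kid["own"])
        else:
            count = "%s/%d" % (kid["own"] or "_", below)
        out.append("  " * depth + label + " " + count)
        render(kid, depth + 1, out)
    return out

if __name__ == "__main__":
    uris = ["http://www.hyphen.info/rdf/40.xml"] * 3 + ["http://www.hyphen.info/rdf/40"] * 2
    print("\n".join(render(build_trie(uris))))
```

The sketch holds the whole trie in memory, one node per character, so it is only meant for small samples like `source/contexts.csv`.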

We can divvy up the URIs by PLD and domains.

cat my-uris | java edu.rpi.tw.string.NameFactory --domain-of -

http://www.w3.org

http://www.w3.org

http://www.w3.org

http://www.w3.org

With input http://www.hyphen.info/rdf/40.xml, produce the following description in N-TRIPLES (extends the Between The Edges vocab):

<http://www.hyphen.info/rdf/40.xml> bte:root <http://www.hyphen.info> .

<http://www.hyphen.info> bte:pld <http://hyphen.info> .

<http://hyphen.info> a bte:PayLevelDomain .

bte:pld rdfs:subPropertyOf bte:broader .
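The per-URL description above can be sketched in a few lines of Python. This is an illustration rather than TldManager's implementation: `describe` and `naive_pld` are hypothetical names, the PLD is guessed by keeping the last two host labels (wrong for suffixes such as gov.uk), and skipping non-http(s) URIs is our assumption.

```python
from urllib.parse import urlsplit

BTE = "http://purl.org/twc/vocab/between-the-edges/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

def naive_pld(host):
    """ASSUMPTION: keep the last two labels; a real lookup needs the Public Suffix List."""
    return ".".join(host.split(".")[-2:])

def describe(url):
    """Return the root, pld, and type statements for one URL as N-Triples lines."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return []                                   # assumption: skip ftp:, mailto:, etc.
    root = "%s://%s" % (parts.scheme, parts.netloc)
    pld = "%s://%s" % (parts.scheme, naive_pld(parts.hostname))
    return [
        "<%s> <%sroot> <%s> ." % (url, BTE, root),
        "<%s> <%spld> <%s> ." % (root, BTE, pld),
        "<%s> <%s> <%sPayLevelDomain> ." % (pld, RDF_TYPE, BTE),
    ]

if __name__ == "__main__":
    print("\n".join(describe("http://www.hyphen.info/rdf/40.xml")))
```

Emitting three short statements per URL keeps the generator stateless; deduplication is left to the triple store that loads the output.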

Lean on TDB for disk-backed storage once the data outgrows memory. tdbloader accepts N-Triples on stdin (e.g. rapper -g -o ntriples manual/contexts.ttl | tdbloader --loc=b -).

gunzip -c ../c.gz | sed 's/^<//;s/>$//' | head | java edu.rpi.tw.string.uri.TldManager +3

$ cat source/contexts.csv

ftp://darthmouth.edu/files/blah1.csv

ftp://darthmouth.edu/files/blah2.csv

mailto:[email protected]

mailto:[email protected]

http://www.hyphen.info/rdf/30.xml

http://www.hyphen.info/rdf/26.xml

http://www.hyphen.info/rdf/20.xml

http://www.hyphen.info/rdf/20.xml

http://www.hyphen.info/rdf/40.xml

http://www.hyphen.info/rdf/40.xml

http://www.hyphen.info/rdf/40.xml

http://www.hyphen.info/rdf/40

http://www.hyphen.info/rdf/40

http://www.christiansurfers.net/feed.aspx

http://www.hyphen.info/rdf/35.xml

http://www.hyphen.info/rdf/18.xml

$ cat source/contexts.csv | java edu.rpi.tw.string.uri.TldManager -=3 | head -7

<http://www.hyphen.info/rdf/30.xml> <http://purl.org/twc/vocab/between-the-edges/root> <http://www.hyphen.info> .

<http://www.hyphen.info> <http://purl.org/twc/vocab/between-the-edges/pld> <http://hyphen.info> .

<http://hyphen.info> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://purl.org/twc/vocab/between-the-edges/PayLevelDomain> .

<http://www.christiansurfers.net/feed.aspx> <http://purl.org/twc/vocab/between-the-edges/root> <http://www.christiansurfers.net> .

<http://www.christiansurfers.net> <http://purl.org/twc/vocab/between-the-edges/pld> <http://christiansurfers.net> .

<http://christiansurfers.net> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://purl.org/twc/vocab/between-the-edges/PayLevelDomain> .

$ cat source/contexts.csv | java edu.rpi.tw.string.uri.TldManager -=3 | tdbloader --loc=manual/contexts.tdb -

$ cat manual/plds.rq

prefix bte: <http://purl.org/twc/vocab/between-the-edges/>

select distinct ?domain ?pld

where {

?domain bte:pld ?pld

}

$ tdbquery --loc=manual/contexts.tdb --query=manual/plds.rq

---------------------------------------------------------------------

| domain | pld |

=====================================================================

| <http://www.hyphen.info> | <http://hyphen.info> |

| <http://www.christiansurfers.net> | <http://christiansurfers.net> |

---------------------------------------------------------------------

$ cat source/contexts.csv | java edu.rpi.tw.string.uri.TldManager -=3 | tdbloader --loc=manual/contexts.tdb -

$ cat manual/pld-counts.rq

prefix bte: <http://purl.org/twc/vocab/between-the-edges/>

select ?pld (count(distinct *) as ?count)

where {

?url bte:root ?domain .

?domain bte:pld ?pld

}

group by ?pld

$ cat source/contexts.csv | sort -u

ftp://darthmouth.edu/files/blah1.csv

ftp://darthmouth.edu/files/blah2.csv

http://www.christiansurfers.net/feed.aspx

http://www.hyphen.info/rdf/18.xml

http://www.hyphen.info/rdf/20.xml

http://www.hyphen.info/rdf/26.xml

http://www.hyphen.info/rdf/30.xml

http://www.hyphen.info/rdf/35.xml

http://www.hyphen.info/rdf/40

http://www.hyphen.info/rdf/40.xml

mailto:[email protected]

mailto:[email protected]

$ tdbquery --loc=manual/contexts.tdb --query=manual/pld-counts.rq

-----------------------------------------

| pld | count |

=========================================

| <http://christiansurfers.net> | 1 |

| <http://hyphen.info> | 7 |

-----------------------------------------

The three triples per context emitted by edu.rpi.tw.string.uri.TldManager, loaded into TDB:

- 2009, 1.4BQ / 17GB (page)

- Completed: 3,358,556,031 triples loaded in 29,998.14 seconds [Rate: 111,958.81 per second] (11GB) - 46,889,837 contexts from 685,057 domains from 129,400 Pay Level Domains.

- 2010, 3.2BQ / 27GB (page)

- Completed: 9,513,046,755 triples loaded in 54,151.56 seconds [Rate: 175,674.47 per second] (2.1GB) - 8,106,236 contexts from 443,530 domains from 18,575 Pay Level Domains.

- 2011, 2BQ / 20GB (page)

- Completed: 6,505,105,773 triples loaded in 42,869.01 seconds [Rate: 151,743.80 per second] (1.9GB) - 7,403,255 contexts from 240,053 domains from 793 Pay Level Domains.

- 2012, 1.4BQ / 17GB (page) -- be careful, file names collide!

- Completed: 4,203,389,676 triples loaded in 35,219.66 seconds [Rate: 119,347.80 per second] (2.3GB) - 8,959,126 contexts from 108,007 domains from 829 Pay Level Domains.

- 2013 - (There was no 2013 crawl)

- 2014, (in progress)

2012 PLDs and number of domains (pld-sizes.rq):

| <http://soton.ac.uk> | 7 |

| <http://daisycha.in> | 13 |

| <http://sourceforge.net> | 18 |

| <http://weblog.com.pt> | 75 |

| <http://status.net> | 262 |

| <http://livejournal.com> | 106675 |

2011 PLDs and number of domains (pld-sizes.rq):

| <http://data.gov.uk> | 7 |

| <http://blogiem.lv> | 11 |

| <http://cz.cc> | 20 |

| <http://daisycha.in> | 26 |

| <http://sapo.cv> | 36 |

| <http://status.net> | 800 |

| <http://sapo.pt> | 5486 |

| <http://livejournal.com> | 232764 |

2010 PLDs and number of domains (pld-sizes.rq):

| <http://sub.jp> | 49 |

| <http://covblogs.com> | 54 |

| <http://libdems.org.uk> | 54 |

| <http://php.net> | 56 |

| <http://sfr.fr> | 58 |

| <http://indymedia.org> | 61 |

| <http://kataweb.it> | 62 |

| <http://craigslist.org> | 63 |

| <http://chattablogs.com> | 65 |

| <http://a-thera.jp> | 69 |

| <http://so-net.ne.jp> | 76 |

| <http://weblogs.jp> | 76 |

| <http://over-blog.net> | 80 |

| <http://main.jp> | 84 |

| <http://virginradioblog.fr> | 84 |

| <http://269g.net> | 86 |

| <http://sakura.ne.jp> | 86 |

| <http://antville.org> | 88 |

| <http://blogalia.com> | 88 |

| <http://psychologies.com> | 91 |

| <http://sourceforge.net> | 91 |

| <http://typepad.jp> | 91 |

| <http://dreamlog.jp> | 98 |

| <http://mu.nu> | 100 |

| <http://weblogs.us> | 111 |

| <http://typepad.fr> | 131 |

| <http://xrea.com> | 131 |

| <http://sblo.jp> | 146 |

| <http://jugem.jp> | 149 |

| <http://blogbus.com> | 172 |

| <http://mo-blog.jp> | 204 |

| <http://no-blog.jp> | 208 |

| <http://googlecode.com> | 221 |

| <http://moe-nifty.com> | 257 |

| <http://webry.info> | 288 |

| <http://txt-nifty.com> | 299 |

| <http://noblog.net> | 328 |

| <http://de-blog.jp> | 367 |

| <http://blogger.de> | 377 |

| <http://web-log.nl> | 443 |

| <http://twoday.net> | 454 |

| <http://tea-nifty.com> | 542 |

| <http://way-nifty.com> | 554 |

| <http://weblog.com.pt> | 680 |

| <http://blogzine.jp> | 842 |

| <http://blogiem.lv> | 855 |

| <http://air-nifty.com> | 876 |

| <http://livedoor.biz> | 1029 |

| <http://blogs.com> | 1149 |

| <http://over-blog.com> | 1159 |

| <http://ocn.ne.jp> | 1472 |

| <http://nikki-k.jp> | 1533 |

| <http://deai.com> | 2067 |

| <http://drecom.jp> | 2522 |

| <http://joueb.com> | 3281 |

| <http://cocolog-nifty.com> | 3480 |

| <http://seesaa.net> | 4402 |

| <http://typepad.com> | 6053 |

| <http://sapo.pt> | 6822 |

| <http://deadjournal.com> | 75696 |

| <http://livejournal.com> | 122343 |

| <http://vox.com> | 176828 |

2009 PLDs and number of domains (pld-sizes.rq):

| <http://shinobi.jp> | 310 |

| <http://txt-nifty.com> | 325 |

| <http://naturum.ne.jp> | 354 |

| <http://drecom.jp> | 369 |

| <http://craigslist.org> | 391 |

| <http://269g.net> | 392 |

| <http://photobucket.com> | 394 |

| <http://blogger.de> | 395 |

| <http://free.fr> | 444 |

| <http://blogspirit.com> | 458 |

| <http://virginradioblog.fr> | 461 |

| <http://weblog.com.pt> | 486 |

| <http://blogbus.com> | 489 |

| <http://zenfolio.com> | 494 |

| <http://multiply.com> | 518 |

| <http://sblo.jp> | 530 |

| <http://blogzine.jp> | 575 |

| <http://tea-nifty.com> | 582 |

| <http://way-nifty.com> | 588 |

| <http://ti-da.net> | 678 |

| <http://hautetfort.com> | 681 |

| <http://imiaru.net> | 827 |

| <http://jaiku.com> | 841 |

| <http://tumblr.com> | 846 |

| <http://blogiem.lv> | 852 |

| <http://ocn.ne.jp> | 958 |

| <http://air-nifty.com> | 984 |

| <http://blogstream.com> | 986 |

| <http://livedoor.biz> | 1008 |

| <http://nikki-k.jp> | 1248 |

| <http://mindsay.com> | 1331 |

| <http://carate.org> | 1606 |

| <http://blogonline.ru> | 1780 |

| <http://blogs.com> | 1961 |

| <http://twoday.net> | 2339 |

| <http://over-blog.com> | 2423 |

| <http://webry.info> | 2529 |

| <http://wordpress.com> | 2534 |

| <http://joueb.com> | 3300 |

| <http://cocolog-nifty.com> | 4227 |

| <http://dotnode.com> | 4800 |

| <http://web-log.nl> | 5264 |

| <http://seesaa.net> | 5924 |

| <http://sapo.pt> | 7624 |

| <http://typepad.com> | 10358 |

| <http://live.com> | 13708 |

| <http://deadjournal.com> | 26195 |

| <http://ya.ru> | 44815 |

| <http://vox.com> | 118682 |

| <http://livejournal.com> | 249020 |

(number of URIs per domain queries ran out of memory)

(number of URIs queries ran out of memory)

tdbquery --loc=c.tdb --results CSV --query=../../../../btc-2009/src/uris-in-domain.rq | awk '{if(NR>1){print}}' | java $BTE_PACKAGE.CharTreeEntry +

- 2009, 1.4BQ / 17GB (page)

- 1,294,664,049 term occurrences of 395,448 terms from 1,360 domains within 970 Pay Level Domains.

- Completed: 3,882,742,407 triples loaded in 16,545.61 seconds [Rate: 234,669.00 per second]

- 2010, 3.2BQ / 27GB (page)

- 3,729,056,540 term occurrences of 261,877 terms from 1,424 domains within 984 Pay Level Domains.

- Completed: 11,185,401,378 triples loaded in 51,584.10 seconds [Rate: 216,838.16 per second]

- 2011, 2BQ / 20GB (page)

- 2,607,749,504 term occurrences of 264,693 terms from 449 domains within 369 Pay Level Domains.

- Completed: 7,823,235,765 triples loaded in 38,776.07 seconds [Rate: 201,754.20 per second]

- 2012, 1.4BQ / 17GB (page) -- be careful, file names collide!

- 1,687,203,330 term occurrences of 363,330 terms from 363 domains within 282 Pay Level Domains.

- Completed: 5,060,828,772 triples loaded in 27,811.35 seconds [Rate: 181,969.89 per second]

- 2013 - (There was no 2013 crawl)

- 2014, (in progress)

The following will get a list of properties and classes appearing in the crawl.

$ find ../source -name "btc-*-chunk*.gz" | xargs -n 1 p-and-c.sh -u | gzip > v.gz

$ gunzip -c v.gz | sort -u | gzip > v-u.gz;

$ mv v-u.gz v.gz

But it's a bit slow, so we use our extension of NxParser to extract the vocabulary terms (properties and classes):

gunzip -c ../source/*-data-*.gz | java edu.rpi.tw.data.rdf.io.nquads.SplitNVC - v | gzip > v.gz
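The split into nodes, vocabulary, and contexts follows the occurrence-set definitions given earlier on this page. Here is a minimal Python sketch of that classification for a single line. It is not SplitNVC's code: `split_nvc` is a hypothetical name, lines are assumed well formed, and datatype URIs on literals are ignored because the page does not say which set they belong to.

```python
RDF_TYPE = "<http://www.w3.org/1999/02/22-rdf-syntax-ns#type>"

def split_nvc(line):
    """Return (nodes, vocabulary, contexts) URI occurrences for one N-Quads line."""
    fields = line.split()
    if len(fields) < 5 or fields[-1] != ".":
        return [], [], []                 # not a complete quad
    s, p, c = fields[0], fields[1], fields[-2]
    o = " ".join(fields[2:-2])            # literals may contain spaces
    nodes, vocab = [], [p]                # every predicate is a vocabulary term
    if s.startswith("<"):
        nodes.append(s)
    if o.startswith("<"):
        # Objects of rdf:type are classes; any other URI object is a node.
        (vocab if p == RDF_TYPE else nodes).append(o)
    return nodes, vocab, [c]

if __name__ == "__main__":
    quad = ('<Date:1988> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> '
            '<http://www.aktors.org/ontology/portal#Calendar-Date> '
            '<http://www.hyphen.info/rdf/csukData/edinburgh/EdinburghPublications4.rdf> .')
    print(split_nvc(quad))
```

Keeping occurrences rather than distinct terms matches the counts reported here, where duplicates are removed later with `sort -u`.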

- 2009, 1.4BQ / 17GB (page)

- 1,151,383,272 property occurrences (triples) of 137,219 properties from 1,173 domains within 863 Pay Level Domains.

- 2010, 3.2BQ / 27GB (page)

- 3,171,793,030 property occurrences (triples) of 95,071 properties from 1,218 domains within 858 Pay Level Domains.

- 2011, 2BQ / 20GB (page)

- 2,168,395,469 property occurrences (triples) of 47,980 properties from 403 domains within 328 Pay Level Domains.

- 2012, 1.4BQ / 17GB (page) -- be careful, file names collide!

- 1,436,545,545 property occurrences (triples) of 57,854 properties from 335 domains within 261 Pay Level Domains.

- 2013 - (There was no 2013 crawl)

- 2014, (in progress)

- 2009, 1.4BQ / 17GB (page)

- 143,280,777 class occurrences of 278,210 classes from 1,039 domains within 754 Pay Level Domains.

- 2010, 3.2BQ / 27GB (page)

- 557,263,510 class occurrences of 166,663 classes from 1,084 domains within 767 Pay Level Domains.

- 2011, 2BQ / 20GB (page)

- 439,354,035 class occurrences of 215,927 classes from 348 domains within 288 Pay Level Domains.

- 2012, 1.4BQ / 17GB (page) -- be careful, file names collide!

- 250,657,785 class occurrences of 305,021 classes from 254 domains within 193 Pay Level Domains.

- 2013 - (There was no 2013 crawl)

- 2014, (in progress)

- 2009, 1.4BQ / 17GB (page)

- 1,415,452,155 URI node occurrences in 4.2 GB gz

- Completed: 4,081,066,527 triples loaded in 31,625.84 seconds [Rate: 129,042.13 per second]

- 2010, 3.2BQ / 27GB (page)

- 3,544,468,281 URI node occurrences in 7.0 GB gz

- 590 MB stringtree gz

- 2011, 2BQ / 20GB (page)

- 1,171,513,984 URI node occurrences in 4.7 GB gz

- 500 MB stringtree gz

- Completed: 3,513,684,279 triples loaded in 27,394.40 seconds [Rate: 128,262.89 per second]

- 2012, 1.4BQ / 17GB (page) -- be careful, file names collide!

- 1,578,702,577 URI node occurrences in 3.4 GB gz

- 450 MB stringtree gz

- 2013 - (There was no 2013 crawl)

- 2014, (in progress)

If a PLD doesn't reference W3C (w3.org), it isn't worth considering: that would mean no rdf:type properties occur, so its data isn't RDF enough.

- 2009, 1.4BQ / 17GB (page)

- 38,755 edges of the 1,822,809 edges in the PLD-based authority matrix have occurrences > 1,000.

- 2010, 3.2BQ / 27GB (page)

- 9,567 edges of the 699,757 edges in the PLD-based authority matrix have occurrences > 1,000.

- 2011, 2BQ / 20GB (page)

- 1,249 edges of the 367,075 edges in the PLD-based authority matrix have occurrences > 1,000.

- 2012, 1.4BQ / 17GB (page) -- be careful, file names collide!

- 2,616 edges of the 2,014,273 edges in the PLD-based authority matrix have occurrences > 1,000.

- 2013 - (There was no 2013 crawl)

- 2014, (in progress)

- Sindice's 2011 crawl, ~250GB compressed: http://data.sindice.com/trec2011/