Exercise the GA4GH data-object-schemas

- As a researcher, in order to maximize the amount of data I can process from disparate repositories, I can use DOS to harmonize those repositories

- As a researcher, in order to minimize cloud costs and processing time, I can use DOS's harmonized data to decide which platform/region I should download from or where my code should execute.

- As an informatician, in order to ingest from disparate repositories, I need to ingest an existing repository into DOS

- As an informatician, in order to keep DOS up to date, I need to observe changes to the repository and automatically update DOS

- As a developer, in order to enable DOS, I need to integrate DOS into my backend stack

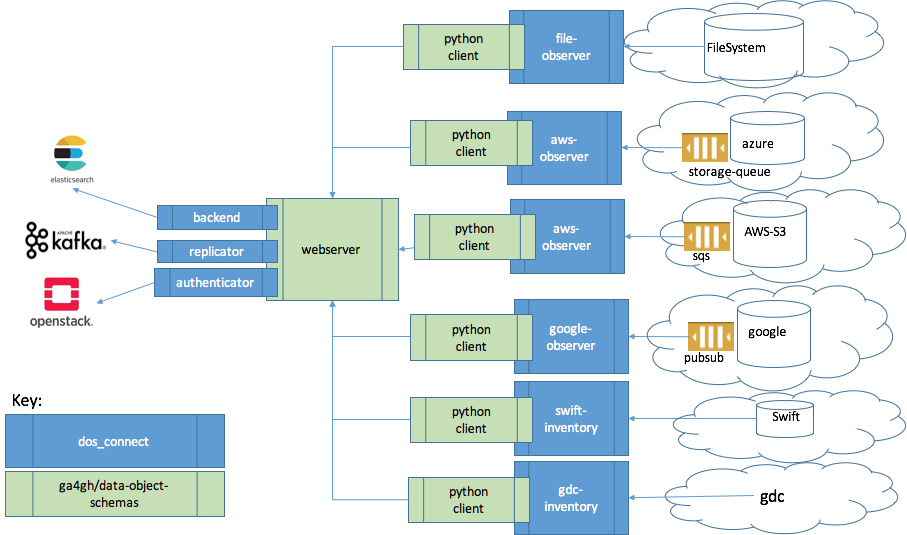

This project provides two high-level capabilities:

- observation: long-lived services that observe the object store and populate a webserver with data-object-schema records. These observers catch adds, moves, and deletes in the object store.

- inventory: on-demand commands that capture a snapshot of the object store as data-object-schema records.

The data-object-schema is 'unopinionated' in several areas:

- authentication and authorization are unspecified.

- no specific backend is specified.

- 'system-of-record' for id, if unspecified, is driven by the client.

dos_connect addresses these on the server and client by using duck-typed plugins.

Server plugins:

- BACKEND: for storage. Implementations: in-memory and elasticsearch. e.g. `BACKEND=dos_connect.server.elastic_backend`

- AUTHORIZER: for AA. noop, keystone, and basic. e.g. `AUTHORIZER=dos_connect.server.keystone_api_key_authorizer`

- REPLICATOR: for downstream consumers. noop, keystone e.g. `REPLICATOR=dos_connect.server.kafka_replicator`
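Selecting a plugin through an environment variable can be pictured as a dynamic import followed by a duck-typing check. The following is a minimal sketch, not dos_connect's actual loader: `load_plugin` and `require_functions` are hypothetical helpers, and the standard-library `json` module stands in for a real backend so the snippet runs on its own.

```python
import importlib
import os


def load_plugin(env_var, default):
    """Import the module named by an environment variable.

    Hypothetical helper: dos_connect's real loader may differ.
    """
    module_name = os.environ.get(env_var, default)
    return importlib.import_module(module_name)


def require_functions(module, names):
    """Duck-typing check: return the required callables the module lacks."""
    return [name for name in names if not callable(getattr(module, name, None))]


if __name__ == "__main__":
    # `json` is only a stand-in for a module such as
    # dos_connect.server.elastic_backend, so the example runs anywhere.
    os.environ["BACKEND"] = "json"
    backend = load_plugin("BACKEND", default="json")
    print(backend.__name__, require_functions(backend, ["dumps", "loads"]))
```

Because the check looks only at which functions a module exposes, any module with the right callables can act as a plugin without inheriting from a shared base class.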

Client plugins:

All observers and inventory tasks leverage a middleware plugin capability.

- user_metadata(): customize the collection of metadata

- before_store(): modify the data_object before persisting

- md5sum(): calculate the md5 of the file

- id(): customize id

To specify your own customizer, set the CUSTOMIZER environment variable, e.g. `CUSTOMIZER=dos_connect.apps.aws_customizer`.

For example, AWS S3 returns a special hash for multipart files, so the aws_customizer uses a lambda to calculate the true md5 hash of those files. Other client customizers include noop, url_as_id, and smmart (obfuscates paths and associates user metadata).

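A customizer is a module that exposes the hooks listed above. The sketch below is a hypothetical example: the argument lists, the `urls` layout of `data_object`, and the `source` metadata key are all assumptions made for illustration, not dos_connect's documented signatures.

```python
import hashlib


def md5sum(path, chunk_size=1024 * 1024):
    """Return the hex md5 of a local file, read in chunks to bound memory."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def user_metadata(path):
    """Collect extra metadata for a file (assumed signature)."""
    return {"source": "example-customizer"}


def id(data_object):  # name taken from the hook list; shadows the builtin
    """Derive an id from the object's first URL (assumed dict layout)."""
    url = data_object["urls"][0]["url"]
    return hashlib.sha256(url.encode("utf-8")).hexdigest()


def before_store(data_object):
    """Return a copy of the record with its id filled in before persisting."""
    data_object = dict(data_object)
    data_object["id"] = id(data_object)
    return data_object
```

Saved as a module on the Python path, a file like this would be selected by pointing CUSTOMIZER at its dotted module name.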

Setup: .env file

    # ******* webserver
    # http port
    DOS_CONNECT_WEBSERVER_PORT=<port-number>

    # configure backend
    BACKEND=dos_connect.server.elasticsearch_backend
    ELASTIC_URL=<url>

    # configure authorizer
    AUTHORIZER=dos_connect.server.keystone_api_key_authorizer
    # (/v3)
    DOS_SERVER_OS_AUTH_URL=<url>
    AUTHORIZER_PROJECTS=<project_name>

    # replicator
    REPLICATOR=dos_connect.server.kafka_replicator
    KAFKA_BOOTSTRAP_SERVERS=<url>
    KAFKA_DOS_TOPIC=<topic-name>
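Before starting the webserver it can help to confirm that the `.env` values are actually present in the environment. The following is a minimal sketch; the `missing_settings` helper and the decision to treat every listed variable as required are assumptions for illustration, not something dos_connect is known to enforce.

```python
import os

# Variable names come from the .env example; treating them all as
# required is an assumption of this sketch.
EXPECTED_SETTINGS = [
    "DOS_CONNECT_WEBSERVER_PORT",
    "BACKEND",
    "ELASTIC_URL",
    "AUTHORIZER",
    "DOS_SERVER_OS_AUTH_URL",
    "AUTHORIZER_PROJECTS",
    "REPLICATOR",
    "KAFKA_BOOTSTRAP_SERVERS",
    "KAFKA_DOS_TOPIC",
]


def missing_settings(env=None, expected=EXPECTED_SETTINGS):
    """Return the expected variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in expected if not env.get(name)]


if __name__ == "__main__":
    missing = missing_settings()
    if missing:
        print("missing settings: " + ", ".join(missing))
    else:
        print("all expected settings are present")
```

If a deployment uses the in-memory backend or the noop replicator, the corresponding entries could simply be dropped from the list.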

Server Startup:

    $ alias web='docker-compose -f docker-compose-webserver.yml'
    $ web build ; web up -d

Client Startup:

Note: run `source <openstack-openrc.sh>` first.

    # webserver endpoint
    export DOS_SERVER=<url>

    # sleep in between inventory runs
    export SLEEP=<seconds-to-sleep>

    # bucket to monitor
    export BUCKET_NAME=<existing-bucket-name>

    $ alias client='docker-compose -f docker-compose-swift.yml'
    $ client build; client up -d

-

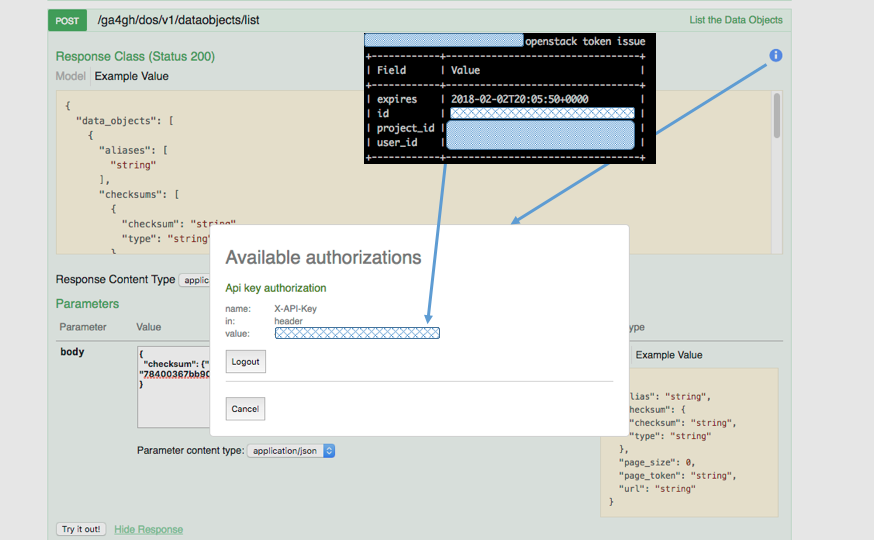

see swagger

-

note: you will need to belong to openstack and provide a token from

openstack token issue

-

see kafak topic 'dos-events' for stream

-

the kafka queue is populated with

{'method': method, 'doc': doc}where

docis a data_object andmethodis one of ['CREATE', 'UPDATE', 'DELETE']

- testing

- evangelization

- swagger improvements (403, 401 status codes)
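The replication messages described above (`{'method': method, 'doc': doc}`) can be applied to a downstream store with a small dispatcher. This is a minimal sketch: the `apply_event` helper, the plain-dict store, and the assumption that each `doc` carries an `id` key are illustrative, and no Kafka client is involved.

```python
def apply_event(store, message):
    """Apply one {'method': ..., 'doc': ...} message to a dict keyed by id."""
    method = message["method"]
    doc = message["doc"]
    key = doc["id"]  # assumes every data_object has an 'id'
    if method in ("CREATE", "UPDATE"):
        store[key] = doc
    elif method == "DELETE":
        store.pop(key, None)  # deleting an unknown id is a no-op
    else:
        raise ValueError("unexpected method: %r" % (method,))
    return store


if __name__ == "__main__":
    store = {}
    apply_event(store, {"method": "CREATE", "doc": {"id": "a", "size": 1}})
    apply_event(store, {"method": "UPDATE", "doc": {"id": "a", "size": 2}})
    apply_event(store, {"method": "DELETE", "doc": {"id": "a"}})
    print(store)  # {}
```

A real consumer would read these messages from the topic with a Kafka client library and pass each decoded message to a function like this one.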