Non-determinism due to incorrect parameter caching #2708

Comments

|

Thanks for your report. From the error message it seems that the transaction somehow causes non-deterministic execution (e.g., the nodes that crashed diverged from the majority). The transaction payload includes an extra |

|

My current hypothesis is that the issue is not caused by the actual transaction spam directly, rather it just triggers a bug in nodes that restarted at any point since genesis and (due to a bug) didn't initialize the Since this (incorrectly) only happens in This is also the reason why wiping state fixed it (unless -- I assume -- you again restarted the node before you processed the oversized transactions). |
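To make the hypothesis above concrete, here is a minimal Go sketch of how a cached parameter that is only set at genesis can make a restarted node disagree with the rest of the network. Everything in it is hypothetical: the `app` type, the `maxTxSize` field and the method names are illustrative and not taken from the Oasis codebase, and the assumption that the affected parameter is a maximum transaction size is a guess for the sake of the example. The sizes 32768 and 301552 are the ones quoted elsewhere in this thread.

```go
package main

import "fmt"

// app is a hypothetical stand-in for a consensus application.
// None of these names come from the Oasis codebase.
type app struct {
	maxTxSize int // cached consensus parameter; 0 means "not initialized"
}

// initChain runs only at genesis and caches the parameter.
func (a *app) initChain(genesisMaxTxSize int) { a.maxTxSize = genesisMaxTxSize }

// restart models the assumed bug: the cache comes back empty and is
// never reloaded from persisted state.
func (a *app) restart() { a.maxTxSize = 0 }

// restartFixed reloads the parameter from (hypothetical) persisted state.
func (a *app) restartFixed(persistedMaxTxSize int) { a.maxTxSize = persistedMaxTxSize }

// accepts reports whether a transaction of the given size passes the check.
func (a *app) accepts(txSize int) bool {
	if a.maxTxSize == 0 {
		return true // uninitialized limit: the size check is silently skipped
	}
	return txSize <= a.maxTxSize
}

func main() {
	const limit, oversized = 32768, 301552

	fresh := &app{}
	fresh.initChain(limit)

	restarted := &app{}
	restarted.initChain(limit)
	restarted.restart()

	// The two nodes disagree on the same transaction, i.e. they diverge.
	fmt.Println("fresh accepts:", fresh.accepts(oversized))
	fmt.Println("restarted accepts:", restarted.accepts(oversized))
}
```

In this sketch the nodes only disagree once an oversized transaction shows up, which would match the observation that valid transactions just under the limit did not crash anything.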

|

@sebastianj You rock. |

|

@kostko Hey - yeah, I wouldn't really call what I did "spam" since Tendermint's way of dealing with nonces effectively kinda stops spam (it immediately cancels the ensuing transactions - other chains queue them up and process them according to the nonce order). Compared to when I've done these attacks on Harmony and Elrond, the actual tx spamming was significantly less effective on your chain since Tendermint's way of dealing with nonces effectively acts as a rate limiter.

I also presumed that it was the actual tx spamming of valid txs that caused this - which doesn't seem to be the case (just like you also concluded). I left the "spammer" running yesterday for several hours with txs just being shy of 32768 bytes and that didn't crash my node.

I then remembered that I initially ran the spammer with invalid / large payloads when my node + other nodes started crashing. So I just switched the tx payload over to https://gist.github.com/SebastianJ/70fbf825b0a98e420b35a0ca64c78c8d (301,552 bytes) (i.e. oversized tx payload) and after sending a couple of those txs my node went into the So yeah, seems you've definitely nailed down what's going on - and I have to resync from block 0 again 🤣 |

|

@psabilla Thanks :) |

|

Successful reproduction in long-term E2E tests in #2709. |

|

@sebastianj , The Breaker of Chains ! 👍 |

|

Fixed in master and backported to 20.3.x branch (will be in the next 20.3.2 release). Thanks again! |

Reporting an attack as a part of the ongoing "The Quest" challenge, and I'm guessing this is the place to do it.

SUMMARY

I wrote a tx spammer in Go that spams the network with bogus transactions (with each tx having a payload as close as possible to the max tx size of 32768 bytes).

Initially this forced roughly 30% of the nodes on the network to crash and stop syncing, ending up with the error:

(Multiple people on Slack whose nodes got terminated also confirmed that they received the same error message).

The only way to recover from this error was to remove all *.db and *.wal files, restart the node and effectively resync from block 0. This didn't work every time though, so frustratingly enough you'd have to repeat it a couple of times to get the node to sync the chain properly again.

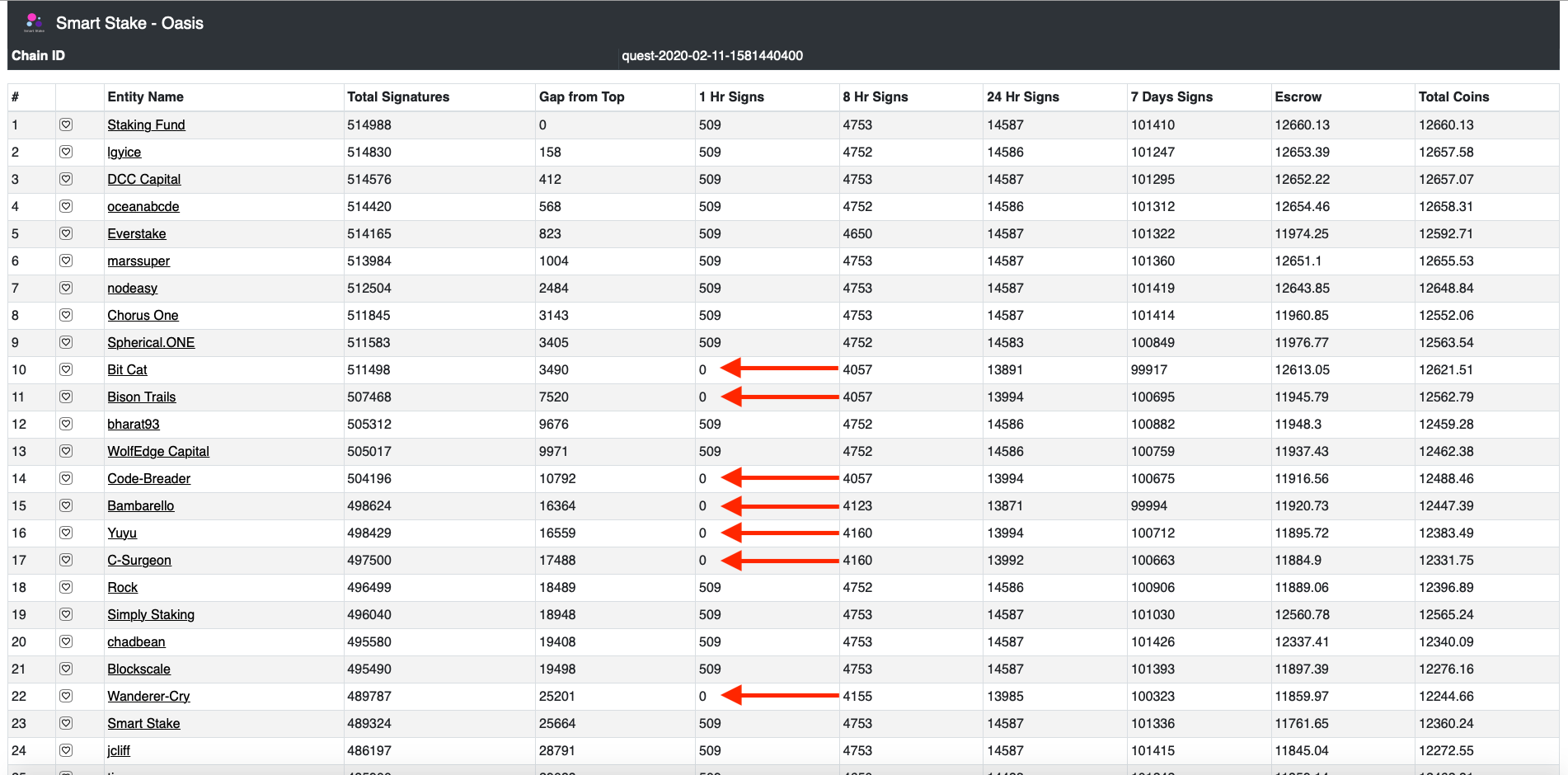

Here's how the top 25 leaderboard (using SmartStake's dashboard) looked after the initial wave of the attack:

Outside of the top 25 plenty of other nodes were also affected. A lot of the nodes have since recovered (after performing full resyncs from block 0) but some are still offline since the start of the attack.

ISSUE TYPE

COMPONENT NAME

go/consensus/tendermint

OASIS NODE VERSION

OS / ENVIRONMENT

STEPS TO REPRODUCE

The steps above will download the statically compiled go binary (for Linux), download the list of receiver addresses (to randomly send txs to) and also download the tx payload, a Base64 encoded picture of the one and only - Mr Bubz:

ACTUAL RESULTS

Several nodes in "The Quest" network were terminated and couldn't get back to syncing/joining consensus unless they removed all databases (*.db & *.wal), restarted their nodes and resynced from block 0.

The following error message (or similar alterations of it) was displayed:

EXPECTED RESULTS

Nodes should be able to withstand an attack like this. They shouldn't get terminated or have to resync from block 0 to be able to participate in the consensus process again.
