
Multistream rendering and instancing

In this lesson we learn how to use multistream rendering to implement GPU instancing.

For these tutorial lessons, we've been providing a single stream of vertex data to the input assembler. Generally the most efficient rendering is a single vertex buffer with a stride of 16, 32, or 64 bytes, but there are times when arranging the vertex data in such a layout is expensive. The Direct3D Input Assembler can therefore pull vertex information from up to 32 vertex buffers. This provides a lot of freedom in managing your vertex buffers.
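To make the stride figures concrete, here is a minimal standalone sketch (plain C++, no Direct3D headers). `InterleavedVertex` and the helper functions are hypothetical names for illustration only; they mirror a position/normal/texture-coordinate vertex and assume 4-byte floats.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for a position + normal + texcoord vertex: 3 + 3 + 2 floats.
struct InterleavedVertex
{
    float position[3];
    float normal[3];
    float texcoord[2];
};

// Stride when all three elements live in a single vertex buffer.
constexpr std::size_t InterleavedStride() { return sizeof(InterleavedVertex); }

// Strides when each element is moved into its own vertex buffer.
constexpr std::size_t PositionStride() { return sizeof(float) * 3; }
constexpr std::size_t NormalStride()   { return sizeof(float) * 3; }
constexpr std::size_t TexcoordStride() { return sizeof(float) * 2; }

// Assumes 4-byte floats, which holds on the platforms this lesson targets.
static_assert(InterleavedStride() == 32, "expected a 32-byte interleaved vertex");
```

The per-stream strides (12, 12, and 8 bytes) add up to the same 32 bytes; splitting the data changes where it lives, not how much of it there is.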

For example, if we return to a case from Simple rendering, here is the 'stock' vertex input layout for VertexPositionNormalTexture:

const D3D12_INPUT_ELEMENT_DESC c_InputElements[] =

{

{ "SV_Position", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "NORMAL", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "TEXCOORD", 0, DXGI_FORMAT_R32G32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

};

This describes a single vertex stream with three elements. We could instead arrange this into three VBs as follows:

// Position in VB#0, NORMAL in VB#1, TEXCOORD in VB#2

const D3D12_INPUT_ELEMENT_DESC c_InputElements[] =

{

{ "SV_Position", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "NORMAL", 0, DXGI_FORMAT_R32G32B32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "TEXCOORD", 0, DXGI_FORMAT_R32G32_FLOAT, 2, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

};

To render, we'd need to create a Pipeline State Object (PSO) for this input layout, and then bind a vertex buffer to each slot:

D3D12_VERTEX_BUFFER_VIEW vbViews[3] = {};

vbViews[0].BufferLocation = ...;

vbViews[0].StrideInBytes = sizeof(float) * 3;

vbViews[0].SizeInBytes = ...;

vbViews[1].BufferLocation = ...;

vbViews[1].StrideInBytes = sizeof(float) * 3;

vbViews[1].SizeInBytes = ...;

vbViews[2].BufferLocation = ...;

vbViews[2].StrideInBytes = sizeof(float) * 2;

vbViews[2].SizeInBytes = ...;

commandList->IASetVertexBuffers(0, 3, vbViews);

Note that if we are using DrawIndexed, the same index value is used to retrieve the i-th element from each vertex buffer (i.e. there is only one index per vertex, and every VB must contain an element for the highest index value used).
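The shared-index behavior can be modeled on the CPU. The sketch below is only an illustration of the idea, not how the hardware or Direct3D implements it; `AssembleIndexed`, `AssembledVertex`, `Float3`, and `Float2` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Float3 { float x, y, z; };
struct Float2 { float u, v; };

// What a vertex shader would receive once one vertex has been gathered.
struct AssembledVertex
{
    Float3 position;
    Float3 normal;
    Float2 texcoord;
};

// CPU-side model of indexed fetch from three streams: a single index
// selects the same element from every stream.
inline std::vector<AssembledVertex> AssembleIndexed(
    const std::vector<Float3>& positions,   // stream 0
    const std::vector<Float3>& normals,     // stream 1
    const std::vector<Float2>& texcoords,   // stream 2
    const std::vector<std::uint16_t>& indices)
{
    std::vector<AssembledVertex> out;
    out.reserve(indices.size());
    for (std::uint16_t i : indices)
    {
        // Every stream must have an element for the highest index used.
        assert(i < positions.size() && i < normals.size() && i < texcoords.size());
        out.push_back({ positions[i], normals[i], texcoords[i] });
    }
    return out;
}
```

There is no way to give each stream its own index here, which is why data with mismatched sharing (for example, positions shared across faces but normals not) has to be duplicated before it can be split into streams.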

In addition to pulling vertex data from multiple streams, the input assembler can also 'loop' over some streams to implement a feature called "instancing". Here the same vertex data is drawn multiple times, with some per-vertex data changing "once per instance" as it loops over the other data. This allows you to efficiently render a large number of copies of the same object in many locations, such as grass or boulders.

The NormalMapEffect supports GPU instancing using a per-instance XMFLOAT3X4 matrix which can include translations, rotations, scales, etc. For example, if we were using VertexPositionNormalTexture vertex data with instancing, we'd create an input layout as follows:

// VertexPositionNormalTexture in VB#0, XMFLOAT3X4 in VB#1

const D3D12_INPUT_ELEMENT_DESC c_InputElements[] =

{

{ "SV_Position", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "NORMAL", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "TEXCOORD", 0, DXGI_FORMAT_R32G32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "InstMatrix", 0, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_INSTANCE_DATA, 1 },

{ "InstMatrix", 1, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_INSTANCE_DATA, 1 },

{ "InstMatrix", 2, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_INSTANCE_DATA, 1 },

};

Here the first vertex buffer has enough VertexPositionNormalTexture vertex data for one instance, and the second vertex buffer has as many XMFLOAT3X4 entries as instances.
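As a rough mental model of how the two streams are stepped, consider the CPU-side sketch below. `StepInstanced` and `Fetch` are hypothetical names, and the function only records which elements get paired; it assumes a non-zero step rate such as the value 1 used in the last field of the per-instance elements above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Which per-vertex element and which per-instance element were paired
// for one vertex shader invocation.
struct Fetch
{
    std::size_t vertexIndex;
    std::size_t instanceIndex;
};

// Models the stepping: the per-vertex stream restarts for every instance,
// while the per-instance stream advances once every 'instanceDataStepRate'
// instances.
inline std::vector<Fetch> StepInstanced(std::size_t vertexCount,
                                        std::size_t instanceCount,
                                        std::size_t instanceDataStepRate = 1)
{
    assert(instanceDataStepRate > 0); // this sketch does not model a rate of 0
    std::vector<Fetch> fetches;
    fetches.reserve(vertexCount * instanceCount);
    for (std::size_t inst = 0; inst < instanceCount; ++inst)
    {
        for (std::size_t v = 0; v < vertexCount; ++v)
        {
            fetches.push_back({ v, inst / instanceDataStepRate });
        }
    }
    return fetches;
}
```

With three vertices and two instances, the model produces six fetches: vertices 0, 1, 2 paired with instance element 0, then the same three vertices paired with instance element 1.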

First create a new project using the instructions from the earlier lessons: Using DeviceResources and Adding the DirectX Tool Kit which we will use for this lesson.

Start by saving spnza_bricks_a.dds, spnza_bricks_a_normal.dds, spnza_bricks_a_specular.dds into your new project's directory, and then from the top menu select Project / Add Existing Item.... Select "spnza_bricks_a.dds" and click "OK". Repeat for the two other files.

In the Game.h file, add the following variables to the bottom of the Game class's private declarations (right after where you added m_graphicsMemory as part of setup):

DirectX::SimpleMath::Matrix m_view;

DirectX::SimpleMath::Matrix m_proj;

std::unique_ptr<DirectX::DescriptorHeap> m_resourceDescriptors;

std::unique_ptr<DirectX::CommonStates> m_states;

std::unique_ptr<DirectX::NormalMapEffect> m_effect;

std::unique_ptr<DirectX::GeometricPrimitive> m_shape;

Microsoft::WRL::ComPtr<ID3D12Resource> m_brickDiffuse;

Microsoft::WRL::ComPtr<ID3D12Resource> m_brickNormal;

Microsoft::WRL::ComPtr<ID3D12Resource> m_brickSpecular;

UINT m_instanceCount;

std::unique_ptr<DirectX::XMFLOAT3X4[]> m_instanceTransforms;

enum Descriptors

{

BrickDiffuse,

BrickNormal,

BrickSpecular,

Count

};

In the Game.cpp file, modify the Game constructor to initialize the new variable:

Game::Game() noexcept(false) :

m_instanceCount(0)

{

m_deviceResources = std::make_unique<DX::DeviceResources>();

m_deviceResources->RegisterDeviceNotify(this);

}

In Game.cpp, add to the TODO of CreateDeviceDependentResources:

m_shape = GeometricPrimitive::CreateSphere();

RenderTargetState rtState(m_deviceResources->GetBackBufferFormat(),

m_deviceResources->GetDepthBufferFormat());

const D3D12_INPUT_ELEMENT_DESC c_InputElements[] =

{

{ "SV_Position", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "NORMAL", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "TEXCOORD", 0, DXGI_FORMAT_R32G32_FLOAT, 0, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 },

{ "InstMatrix", 0, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_INSTANCE_DATA, 1 },

{ "InstMatrix", 1, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_INSTANCE_DATA, 1 },

{ "InstMatrix", 2, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_INSTANCE_DATA, 1 },

};

const D3D12_INPUT_LAYOUT_DESC layout = { c_InputElements, static_cast<UINT>(std::size(c_InputElements)) };

EffectPipelineStateDescription pd(

&layout,

CommonStates::Opaque,

CommonStates::DepthDefault,

CommonStates::CullNone,

rtState);

m_effect = std::make_unique<NormalMapEffect>(device,

EffectFlags::Specular | EffectFlags::Instancing, pd);

m_effect->EnableDefaultLighting();

m_resourceDescriptors = std::make_unique<DescriptorHeap>(device,

Descriptors::Count);

m_states = std::make_unique<CommonStates>(device);

ResourceUploadBatch resourceUpload(device);

resourceUpload.Begin();

m_shape->LoadStaticBuffers(device, resourceUpload);

DX::ThrowIfFailed(CreateDDSTextureFromFile(device, resourceUpload,

L"spnza_bricks_a.DDS",

m_brickDiffuse.ReleaseAndGetAddressOf()));

CreateShaderResourceView(device, m_brickDiffuse.Get(),

m_resourceDescriptors->GetCpuHandle(Descriptors::BrickDiffuse));

DX::ThrowIfFailed(CreateDDSTextureFromFile(device, resourceUpload,

L"spnza_bricks_a_normal.DDS",

m_brickNormal.ReleaseAndGetAddressOf()));

CreateShaderResourceView(device, m_brickNormal.Get(),

m_resourceDescriptors->GetCpuHandle(Descriptors::BrickNormal));

DX::ThrowIfFailed(CreateDDSTextureFromFile(device, resourceUpload,

L"spnza_bricks_a_specular.DDS",

m_brickSpecular.ReleaseAndGetAddressOf()));

CreateShaderResourceView(device, m_brickSpecular.Get(),

m_resourceDescriptors->GetCpuHandle(Descriptors::BrickSpecular));

auto uploadResourcesFinished = resourceUpload.End(

m_deviceResources->GetCommandQueue());

uploadResourcesFinished.wait();

m_effect->SetTexture(m_resourceDescriptors->GetGpuHandle(Descriptors::BrickDiffuse),

m_states->LinearClamp());

m_effect->SetNormalTexture(m_resourceDescriptors->GetGpuHandle(Descriptors::BrickNormal));

m_effect->SetSpecularTexture(m_resourceDescriptors->GetGpuHandle(Descriptors::BrickSpecular));

// Create instance transforms.

{

size_t j = 0;

for (float y = -6.f; y < 6.f; y += 1.5f)

{

for (float x = -6.f; x < 6.f; x += 1.5f)

{

++j;

}

}

m_instanceCount = static_cast<UINT>(j);

m_instanceTransforms = std::make_unique<XMFLOAT3X4[]>(j);

constexpr XMFLOAT3X4 s_identity = { 1.f, 0.f, 0.f, 0.f, 0.f, 1.f, 0.f, 0.f, 0.f, 0.f, 1.f, 0.f };

j = 0;

for (float y = -6.f; y < 6.f; y += 1.5f)

{

for (float x = -6.f; x < 6.f; x += 1.5f)

{

m_instanceTransforms[j] = s_identity;

m_instanceTransforms[j]._14 = x;

m_instanceTransforms[j]._24 = y;

++j;

}

}

}

In Game.cpp, add to the TODO of CreateWindowSizeDependentResources:

auto size = m_deviceResources->GetOutputSize();

m_view = Matrix::CreateLookAt(Vector3(0.f, 0.f, 12.f),

Vector3::Zero, Vector3::UnitY);

m_proj = Matrix::CreatePerspectiveFieldOfView(XM_PI / 4.f,

float(size.right) / float(size.bottom), 0.1f, 25.f);

m_effect->SetView(m_view);

m_effect->SetProjection(m_proj);

In Game.cpp, add to the TODO of OnDeviceLost:

m_resourceDescriptors.reset();

m_states.reset();

m_effect.reset();

m_shape.reset();

m_brickDiffuse.Reset();

m_brickNormal.Reset();

m_brickSpecular.Reset();

In Game.cpp, add to the TODO of Render:

ID3D12DescriptorHeap* heaps[] = { m_resourceDescriptors->Heap(), m_states->Heap() };

commandList->SetDescriptorHeaps(static_cast<UINT>(std::size(heaps)), heaps);

const size_t instBytes = m_instanceCount * sizeof(XMFLOAT3X4);

GraphicsResource inst = m_graphicsMemory->Allocate(instBytes);

memcpy(inst.Memory(), m_instanceTransforms.get(), instBytes);

D3D12_VERTEX_BUFFER_VIEW vertexBufferInst = {};

vertexBufferInst.BufferLocation = inst.GpuAddress();

vertexBufferInst.SizeInBytes = static_cast<UINT>(instBytes);

vertexBufferInst.StrideInBytes = sizeof(XMFLOAT3X4);

commandList->IASetVertexBuffers(1, 1, &vertexBufferInst);

m_effect->Apply(commandList);

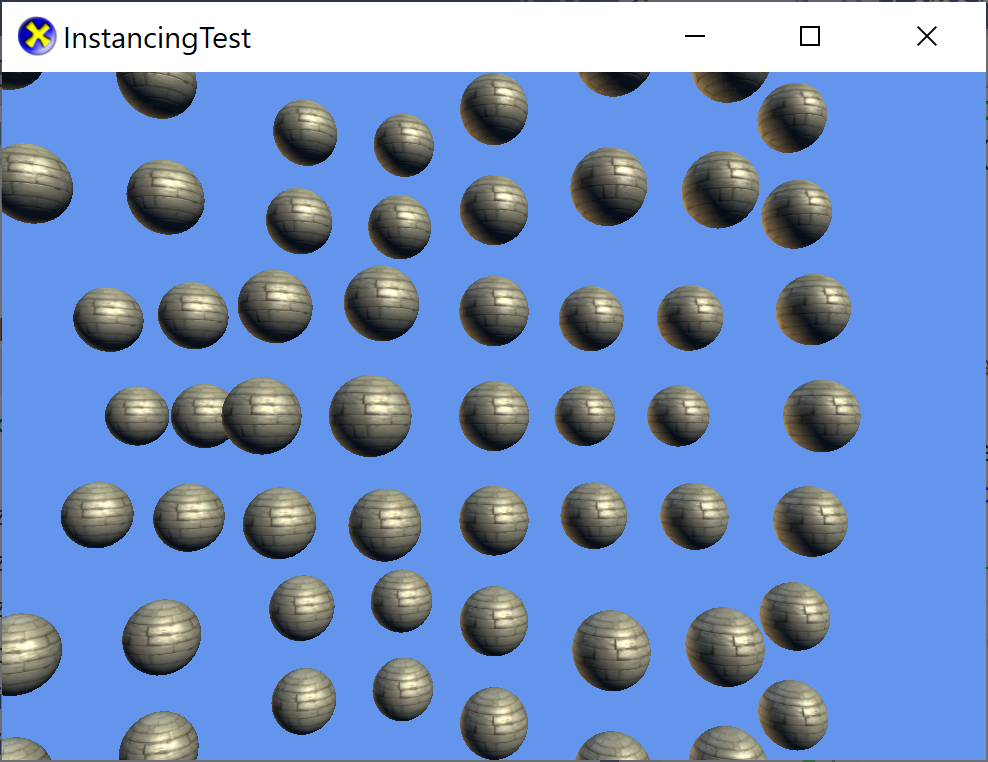

m_shape->DrawInstanced(commandList, m_instanceCount);Build and run to see 64 spheres rendered.
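The count of 64 follows directly from the nested loops used to build the instance transforms. The standalone sketch below reruns those loops and records each position; `GridPositions` is a hypothetical helper, not part of the lesson's code.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Reproduces the lesson's nested loops and records each (x, y) pair.
// The step of 1.5 is exactly representable as a float, so each axis
// visits -6, -4.5, -3, -1.5, 0, 1.5, 3, 4.5: eight values.
inline std::vector<std::pair<float, float>> GridPositions()
{
    std::vector<std::pair<float, float>> positions;
    for (float y = -6.f; y < 6.f; y += 1.5f)
    {
        for (float x = -6.f; x < 6.f; x += 1.5f)
        {
            positions.emplace_back(x, y);
        }
    }
    return positions;
}
```

Eight values per axis gives an 8 x 8 grid. If you change the bounds or step to values that are not exactly representable, counting with integer loop indices is a safer way to size the instance array.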

You can provide updated instance data per-frame to animate the locations, while leaving the original vertex data 'static' for best performance.

In Game.cpp, add to the TODO of Update:

auto time = static_cast<float>(m_timer.GetTotalSeconds());

size_t j = 0;

for (float y = -6.f; y < 6.f; y += 1.5f)

{

for (float x = -6.f; x < 6.f; x += 1.5f)

{

XMMATRIX m = XMMatrixTranslation(x,

y,

cos(time + float(x) * XM_PIDIV4)

* sin(time + float(y) * XM_PIDIV4)

* 2.f);

XMStoreFloat3x4(&m_instanceTransforms[j], m);

++j;

}

}

assert(j == m_instanceCount);

Build and run to see the spheres moving individually.
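The depth offset used in the Update loop can be pulled out into a small function to see its range. This is a sketch only: `WaveOffsetZ` and `kPiDiv4` are hypothetical names, with `kPiDiv4` standing in for DirectXMath's XM_PIDIV4.

```cpp
#include <cassert>
#include <cmath>

// Stand-in for XM_PIDIV4 (pi / 4) so the sketch needs no DirectXMath headers.
constexpr float kPiDiv4 = 0.785398163f;

// Z offset for the instance at grid position (x, y) at the given time,
// using the same expression as the Update loop.
inline float WaveOffsetZ(float x, float y, float time)
{
    return std::cos(time + x * kPiDiv4) * std::sin(time + y * kPiDiv4) * 2.f;
}
```

Because the result is a product of a cosine and a sine scaled by 2, every sphere stays within 2 units of the grid plane, which keeps the whole animation comfortably inside the near and far planes set up earlier.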

The XMFLOAT3X4 data type is a little different than the other DirectXMath / SimpleMath types used in DirectX Tool Kit. It's a column-major transformation matrix. Normally we use row-major in DirectX samples, and when moving to a shader we transpose it as we set it into the Constant Buffer to fit into HLSL's default of column-major.

The XMFLOAT3X4 data type was introduced in DirectXMath version 3.13 to support DirectX Raytracing, which uses this column-major form in its API. It's a compact way to encode a '4x3' matrix where the last column is 0, 0, 0, 1 (i.e. a matrix which can encode affine 3D transformations like translation, scale, and rotation but not perspective projection). Transposed to column-major, it fits neatly into three XMVECTOR values because the last row is implicitly 0 0 0 1. As shown above, we build a row-major XMMATRIX transformation matrix and then use XMStoreFloat3x4, which transposes it as it's written to the buffer.

If manipulating XMFLOAT3X4 directly, the translation is in _14, _24, _34.
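The transpose-on-store behavior can be illustrated without DirectXMath. In the sketch below, `RowMajor4x4` and `Packed3x4` are hypothetical stand-ins for XMMATRIX and XMFLOAT3X4, and `StoreTransposed3x4` mimics what the text describes XMStoreFloat3x4 as doing; it is not the library's implementation.

```cpp
#include <cassert>

// Hypothetical stand-ins for XMMATRIX and XMFLOAT3X4.
struct RowMajor4x4 { float m[4][4]; }; // row-vector convention: translation in the last row
struct Packed3x4   { float m[3][4]; }; // transposed: translation in the last column

// Builds a row-major translation matrix, like XMMatrixTranslation would.
inline RowMajor4x4 MakeTranslation(float x, float y, float z)
{
    return {{
        { 1.f, 0.f, 0.f, 0.f },
        { 0.f, 1.f, 0.f, 0.f },
        { 0.f, 0.f, 1.f, 0.f },
        {   x,   y,   z, 1.f },
    }};
}

// Transposes while storing. The source's last column (0, 0, 0, 1) would
// become the fourth row of the result, so it is simply dropped.
inline Packed3x4 StoreTransposed3x4(const RowMajor4x4& src)
{
    Packed3x4 dst{};
    for (int r = 0; r < 3; ++r)
    {
        for (int c = 0; c < 4; ++c)
        {
            dst.m[r][c] = src.m[c][r];
        }
    }
    return dst;
}
```

After the store, the translation sits at `m[0][3]`, `m[1][3]`, and `m[2][3]`, which correspond to the _14, _24, and _34 members mentioned above.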

The skinning shaders use the same trick of encoding the bone transformation matrices as three XMVECTOR values each in the column-major form to allow bones to be packed into smaller space (72 bones instead of 54 in the same amount of space), but the API deals with the transpose when you set the array of XMMATRIX values. Since the instancing data is driven by the application, it doesn't make sense to try to hide this detail.
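The 72-versus-54 figure is simple arithmetic on the number of four-float vectors each bone consumes. The sketch below spells it out; the constant and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

constexpr std::size_t kVectorsPer4x4 = 4; // a full 4x4 matrix: four float4 values
constexpr std::size_t kVectorsPer3x4 = 3; // the packed form: three float4 values

// How many bones fit in a budget of float4 values at a given cost per bone.
constexpr std::size_t BonesThatFit(std::size_t float4Budget, std::size_t vectorsPerBone)
{
    return float4Budget / vectorsPerBone;
}

// Budget implied by the text: room for 54 bones stored as full 4x4 matrices.
constexpr std::size_t kBudget = 54 * kVectorsPer4x4; // 216 float4 values

static_assert(BonesThatFit(kBudget, kVectorsPer3x4) == 72,
              "the same space holds 72 packed bones");
```

Dropping the implicit fourth vector saves a quarter of the space per bone, which is where the extra 18 bones come from.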

To render Model with instancing, you must create the effects with instancing enabled, create the PSOs with the instancing input layout, and call DrawInstanced for each ModelMeshPart.

Here's a basic outline of implementing this:

instancedModel = Model::CreateFromSDKMESH(device, L"mymodel.sdkmesh");

// mymodel.sdkmesh must have vertex normals, vertex texture coordinates, and

// define normal maps in the materials so it uses NormalMapEffect or PBREffect.

static const D3D12_INPUT_ELEMENT_DESC s_instElements[] =

{

// XMFLOAT3X4

{ "InstMatrix", 0, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_INSTANCE_DATA, 1 },

{ "InstMatrix", 1, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_INSTANCE_DATA, 1 },

{ "InstMatrix", 2, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, D3D12_APPEND_ALIGNED_ELEMENT, D3D12_INPUT_CLASSIFICATION_PER_INSTANCE_DATA, 1 },

};

for (auto mit = instancedModel->meshes.begin(); mit != instancedModel->meshes.end(); ++mit)

{

auto mesh = mit->get();

assert(mesh != 0);

for (auto it = mesh->opaqueMeshParts.begin(); it != mesh->opaqueMeshParts.end(); ++it)

{

auto part = it->get();

assert(part != 0);

auto il = part->vbDecl;

// Since vbDecl can be a shared pointer, make sure we don't add this multiple times.

bool foundInst = false;

for (const auto& fit : *il)

{

if (_stricmp(fit.SemanticName, "InstMatrix") == 0)

{

foundInst = true;

}

}

if (!foundInst)

{

il->push_back(s_instElements[0]);

il->push_back(s_instElements[1]);

il->push_back(s_instElements[2]);

}

}

// Skipping alphaMeshParts for this model since we know it's empty...

assert(mesh->alphaMeshParts.empty());

}

modelResources = instancedModel->LoadTextures(device, resourceUpload);

EffectPipelineStateDescription pd(

nullptr,

CommonStates::Opaque,

CommonStates::DepthDefault,

CommonStates::CullClockwise,

rtState);

fxFactory->EnableInstancing(true);

instancedModelEffects = instancedModel->CreateEffects(*fxFactory, pd, pd);

// Drawing the model with instancing

ID3D12DescriptorHeap* heaps[] = { modelResources->Heap(), m_states->Heap() };

commandList->SetDescriptorHeaps(static_cast<UINT>(std::size(heaps)), heaps);

const size_t instBytes = m_instanceCount * sizeof(XMFLOAT3X4);

GraphicsResource inst = m_graphicsMemory->Allocate(instBytes);

memcpy(inst.Memory(), m_instanceTransforms.get(), instBytes);

D3D12_VERTEX_BUFFER_VIEW vertexBufferInst = {};

vertexBufferInst.BufferLocation = inst.GpuAddress();

vertexBufferInst.SizeInBytes = static_cast<UINT>(instBytes);

vertexBufferInst.StrideInBytes = sizeof(XMFLOAT3X4);

commandList->IASetVertexBuffers(1, 1, &vertexBufferInst);

for (auto mit = instancedModel->meshes.cbegin(); mit != instancedModel->meshes.cend(); ++mit)

{

auto mesh = mit->get();

assert(mesh != 0);

for (auto it = mesh->opaqueMeshParts.cbegin(); it != mesh->opaqueMeshParts.cend(); ++it)

{

auto part = it->get();

assert(part != 0);

auto effect = instancedModelEffects[part->partIndex].get();

auto imatrices = dynamic_cast<IEffectMatrices*>(effect);

if (imatrices) imatrices->SetMatrices(Matrix::Identity, m_view, m_proj);

effect->Apply(commandList);

part->DrawInstanced(commandList, m_instanceCount);

}

// Skipping alphaMeshParts for this model since we know it's empty...

assert(mesh->alphaMeshParts.empty());

}

The drawing above assumes an opaque model. If you have alpha-blending, you will need to do the drawing loop twice. See ModelMesh for details.

- GPU instancing is also supported by DebugEffect and PBREffect.

- While BasicEffect does not support instancing, you can use NormalMapEffect to emulate BasicEffect by providing a default 1x1 white texture (i.e. RGBA32 value 0xFFFFFFFF) and/or a smooth 1x1 normal map texture (i.e. RGBA32 value 0x8080FFFF).
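To see why those two texel values behave as "no texture" and "no bump", the sketch below writes them out as bytes and decodes the normal. It assumes the hex values list the bytes in R, G, B, A order and that normals are decoded with the common "times two, minus one" mapping; `Rgba8`, `kWhiteTexel`, `kFlatNormalTexel`, and `DecodeNormalChannel` are hypothetical names, and the effect's actual shader may differ in detail.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// One 8-bit-per-channel texel, stored in R, G, B, A byte order (assumed).
using Rgba8 = std::array<std::uint8_t, 4>;

// Multiplying a color by opaque white leaves it unchanged.
constexpr Rgba8 kWhiteTexel      = { 0xFF, 0xFF, 0xFF, 0xFF };

// A "flat" tangent-space normal pointing straight out of the surface.
constexpr Rgba8 kFlatNormalTexel = { 0x80, 0x80, 0xFF, 0xFF };

// Common decode from an unsigned-normalized channel [0, 255]
// to a signed component in [-1, 1].
inline float DecodeNormalChannel(std::uint8_t c)
{
    return (static_cast<float>(c) / 255.f) * 2.f - 1.f;
}
```

Decoding the flat texel gives roughly (0, 0, 1): the X and Y components are about 0.004 rather than exactly zero because 0x80 is not the exact midpoint of an 8-bit range, which is close enough that the surface shades as smooth.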

Next lessons: Using HDR rendering

All content and source code for this package are subject to the terms of the MIT License.
