In this repository, we trained a feedforward neural network on the MNIST and Fashion-MNIST (FMNIST) datasets and obtained an accuracy of 90%. We also encoded the MNIST dataset using an encoder with 3 hidden layers and successfully decoded it with a loss of 2%.

The MNIST database (Modified National Institute of Standards and Technology database) is a large database of handwritten digits that is commonly used for training image processing systems. It contains 70,000 28x28 grayscale images of the digits 0 to 9 (60,000 for training and 10,000 for testing) and is also widely used for training and testing in machine learning.

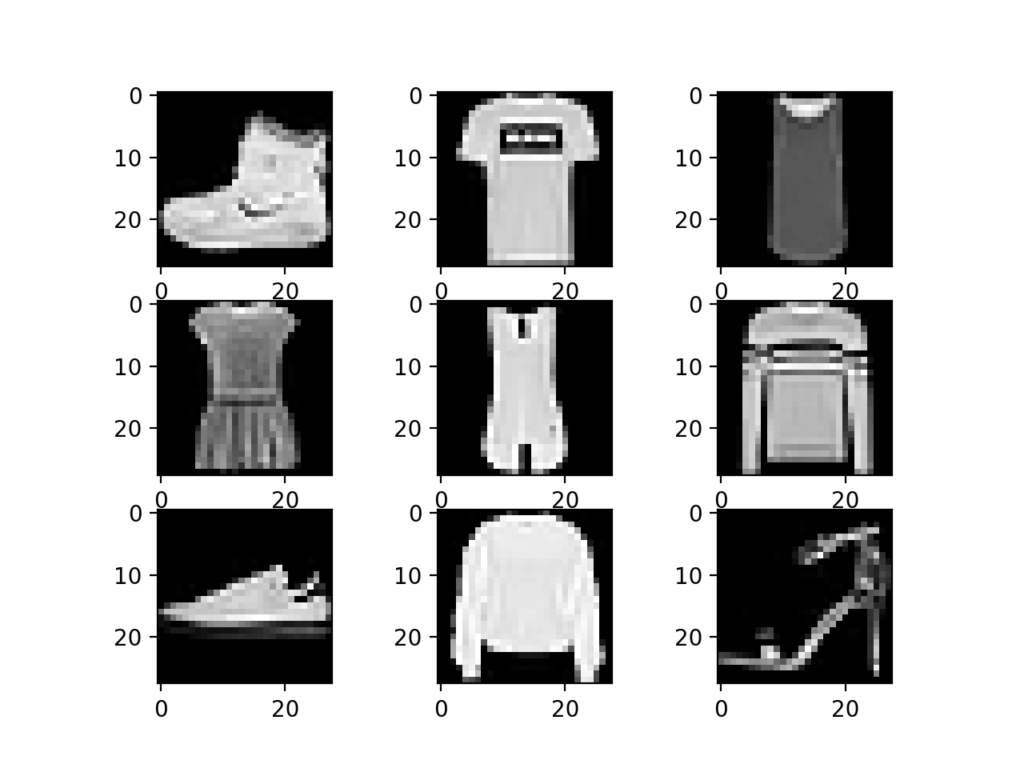

The Fashion-MNIST (FMNIST) dataset is a drop-in alternative to the standard MNIST dataset. Instead of handwritten digits, it contains 70,000 28x28 grayscale images of ten types of fashion items, with the same 60,000/10,000 training/test split.

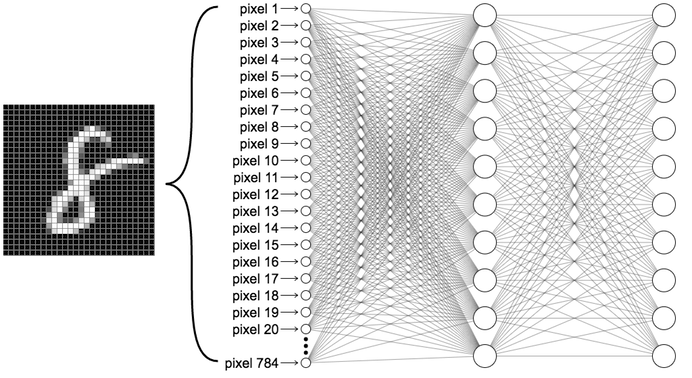
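Because a feedforward network takes a flat vector as input, each 28x28 image is typically flattened into 784 values before training. The sketch below shows one common way to do this, assuming raw pixel values in 0 to 255 scaled to [0, 1], together with the ten standard Fashion-MNIST class names and a one-hot target encoding. The helper names (`flatten_and_scale`, `one_hot`) are hypothetical and are not taken from this repository's code.

```python
# Hypothetical preprocessing helpers for 28x28 grayscale images (MNIST / Fashion-MNIST).

# Standard Fashion-MNIST label order: index i is the name of class i.
FASHION_MNIST_CLASSES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def flatten_and_scale(image):
    """Flatten a 28x28 grid of 0-255 pixel values into a 784-element list in [0, 1]."""
    if len(image) != 28 or any(len(row) != 28 for row in image):
        raise ValueError("expected a 28x28 image")
    return [pixel / 255.0 for row in image for pixel in row]


def one_hot(label, num_classes=10):
    """Encode an integer class label (0-9) as a one-hot target vector."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


# Usage: a blank image with a single white pixel in the top-left corner.
image = [[0] * 28 for _ in range(28)]
image[0][0] = 255
x = flatten_and_scale(image)
print(len(x), x[0], x[1])  # 784 1.0 0.0
print(FASHION_MNIST_CLASSES[9], one_hot(9))
```

The same flattening applies unchanged to MNIST, since both datasets share the 28x28 grayscale format.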

Deep feedforward networks, also called feedforward neural networks or multilayer perceptrons (MLPs), are the quintessential deep learning models. The goal of a feedforward network is to approximate some function $f^*$. For example, for a classifier, $y = f^*(x)$ maps an input $x$ to a category $y$. A feedforward network defines a mapping $y = f(x; \theta)$ and learns the values of the parameters $\theta$ that result in the best function approximation.
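To make the mapping $y = f(x; \theta)$ concrete, here is a minimal pure-Python sketch of the forward pass of a classifier MLP, where $\theta$ is a list of (weight, bias) pairs, hidden layers use ReLU and the output layer uses softmax over the ten classes. The layer sizes (784, 128, 10), the random initialisation scheme, and all function names are assumptions for illustration, not the configuration used in this repository. Learning $\theta$ (backpropagation with gradient descent) is omitted; the weights here are untrained.

```python
import math
import random


def dense(x, W, b):
    """Affine map W @ x + b, with W stored as a list of rows."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(W, b)]


def relu(v):
    return [max(0.0, z) for z in v]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in v]
    s = sum(exps)
    return [e / s for e in exps]


def init_params(sizes, seed=0):
    """theta: one (W, b) pair per layer; scaled Gaussian weights, zero biases (assumed scheme)."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        W = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)]
        params.append((W, [0.0] * n_out))
    return params


def forward(x, params):
    """f(x; theta): ReLU on every hidden layer, softmax on the output layer."""
    h = x
    for W, b in params[:-1]:
        h = relu(dense(h, W, b))
    W, b = params[-1]
    return softmax(dense(h, W, b))


def predict(x, params):
    """Return the index of the most probable class."""
    probs = forward(x, params)
    return max(range(len(probs)), key=probs.__getitem__)


def accuracy(xs, ys, params):
    """Fraction of inputs whose predicted class matches the label."""
    correct = sum(predict(x, params) == y for x, y in zip(xs, ys))
    return correct / len(ys)


params = init_params([784, 128, 10])  # hypothetical layer sizes
x = [0.0] * 784                       # a flattened, all-black 28x28 image
probs = forward(x, params)
print(len(probs), round(sum(probs), 6))  # 10 1.0
```

With zero biases and an all-zero input, every logit is 0, so this untrained network assigns each class probability 0.1; training adjusts $\theta$ so that `accuracy` on held-out data rises.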

Encoder-decoder architectures are widely used in deep learning, notably in natural language processing; in this repository, the same idea is applied to MNIST images. An encoder maps (sensory) input data to a different, often lower-dimensional and compressed, feature representation. A decoder maps that feature representation back into the input data space.
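The encoder/decoder pair described above can be sketched as an untrained autoencoder in pure Python. The layer sizes below are an assumption: the encoder uses three layers (784 to 128 to 64 to a 32-value code) to mirror the "3 hidden layers" mentioned at the top, and the decoder mirrors them back to 784 pixels with a sigmoid so outputs stay in [0, 1]. The reconstruction loss is mean squared error, which is one common choice but not necessarily the loss behind the reported 2%. All names and sizes are hypothetical, and training (minimising the loss by gradient descent) is omitted.

```python
import math
import random


def dense(x, W, b):
    """Affine map W @ x + b, with W stored as a list of rows."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def relu(v):
    return [max(0.0, z) for z in v]


def sigmoid(v):
    """Numerically stable logistic function, applied element-wise."""
    out = []
    for z in v:
        if z >= 0:
            out.append(1.0 / (1.0 + math.exp(-z)))
        else:
            e = math.exp(z)
            out.append(e / (1.0 + e))
    return out


def make_layers(sizes, rng):
    """One (W, b) pair per consecutive pair of sizes; scaled Gaussian weights, zero biases."""
    return [
        ([[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)], [0.0] * n_out)
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]


def run(x, layers, final_activation):
    """ReLU on every layer except the last, which uses final_activation."""
    h = x
    for i, (W, b) in enumerate(layers):
        z = dense(h, W, b)
        h = final_activation(z) if i == len(layers) - 1 else relu(z)
    return h


# Hypothetical sizes: three encoder layers ending in a 32-value code.
ENCODER_SIZES = [784, 128, 64, 32]
DECODER_SIZES = ENCODER_SIZES[::-1]  # mirror: 32 -> 64 -> 128 -> 784

rng = random.Random(0)
encoder = make_layers(ENCODER_SIZES, rng)
decoder = make_layers(DECODER_SIZES, rng)


def encode(x):
    return run(x, encoder, relu)     # compressed feature representation


def decode(code):
    return run(code, decoder, sigmoid)  # back to pixel space in [0, 1]


def mse(a, b):
    """Mean squared reconstruction error between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)


x = [rng.random() for _ in range(784)]  # stand-in for a flattened, scaled image
code = encode(x)
x_hat = decode(code)
print(len(code), len(x_hat))  # 32 784
print(mse(x, x_hat))
```

Because the weights are random, the printed loss only shows how reconstruction error is measured; training both halves jointly to minimise `mse(x, decode(encode(x)))` is what makes the 32-value code a useful summary of the image.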

To learn more, see: FMNIST dataset | ENCODER-DECODER