Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Add documentation for aisuite integration (#893)

1 parent

028659d

commit c191fa6

Showing 6 changed files with 247 additions and 0 deletions.

184 changes: 184 additions & 0 deletions

apps/opik-documentation/documentation/docs/cookbook/aisuite.ipynb

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,184 @@ | ||

| { | ||

| "cells": [ | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "# Using Opik with aisuite\n", | ||

| "\n", | ||

| "Opik integrates with aisuite to provide a simple way to log traces for all aisuite LLM calls.\n" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Creating an account on Comet.com\n", | ||

| "\n", | ||

| "[Comet](https://www.comet.com/site?from=llm&utm_source=opik&utm_medium=colab&utm_content=openai&utm_campaign=opik) provides a hosted version of the Opik platform. [Simply create an account](https://www.comet.com/signup?from=llm&utm_source=opik&utm_medium=colab&utm_content=aisuite&utm_campaign=opik) and grab your API key.\n", | ||

| "\n", | ||

| "> You can also run the Opik platform locally, see the [installation guide](https://www.comet.com/docs/opik/self-host/overview/?from=llm&utm_source=opik&utm_medium=colab&utm_content=aisuite&utm_campaign=opik) for more information." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "%pip install --upgrade opik \"aisuite[openai]\"" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "import opik\n", | ||

| "\n", | ||

| "opik.configure(use_local=False)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Preparing our environment\n", | ||

| "\n", | ||

| "First, we will set up our OpenAI API key." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "import os\n", | ||

| "import getpass\n", | ||

| "\n", | ||

| "if \"OPENAI_API_KEY\" not in os.environ:\n", | ||

| " os.environ[\"OPENAI_API_KEY\"] = getpass.getpass(\"Enter your OpenAI API key: \")" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Logging traces\n", | ||

| "\n", | ||

| "To log traces to Opik, we need to wrap our aisuite client with the `track_aisuite` function:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "from opik.integrations.aisuite import track_aisuite\n", | ||

| "import aisuite as ai\n", | ||

| "\n", | ||

| "client = track_aisuite(ai.Client(), project_name=\"aisuite-integration-demo\")\n", | ||

| "\n", | ||

| "messages = [\n", | ||

| " {\"role\": \"user\", \"content\": \"Write a short two sentence story about Opik.\"},\n", | ||

| "]\n", | ||

| "\n", | ||

| "response = client.chat.completions.create(\n", | ||

| " model=\"openai:gpt-4o\", messages=messages, temperature=0.75\n", | ||

| ")\n", | ||

| "print(response.choices[0].message.content)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

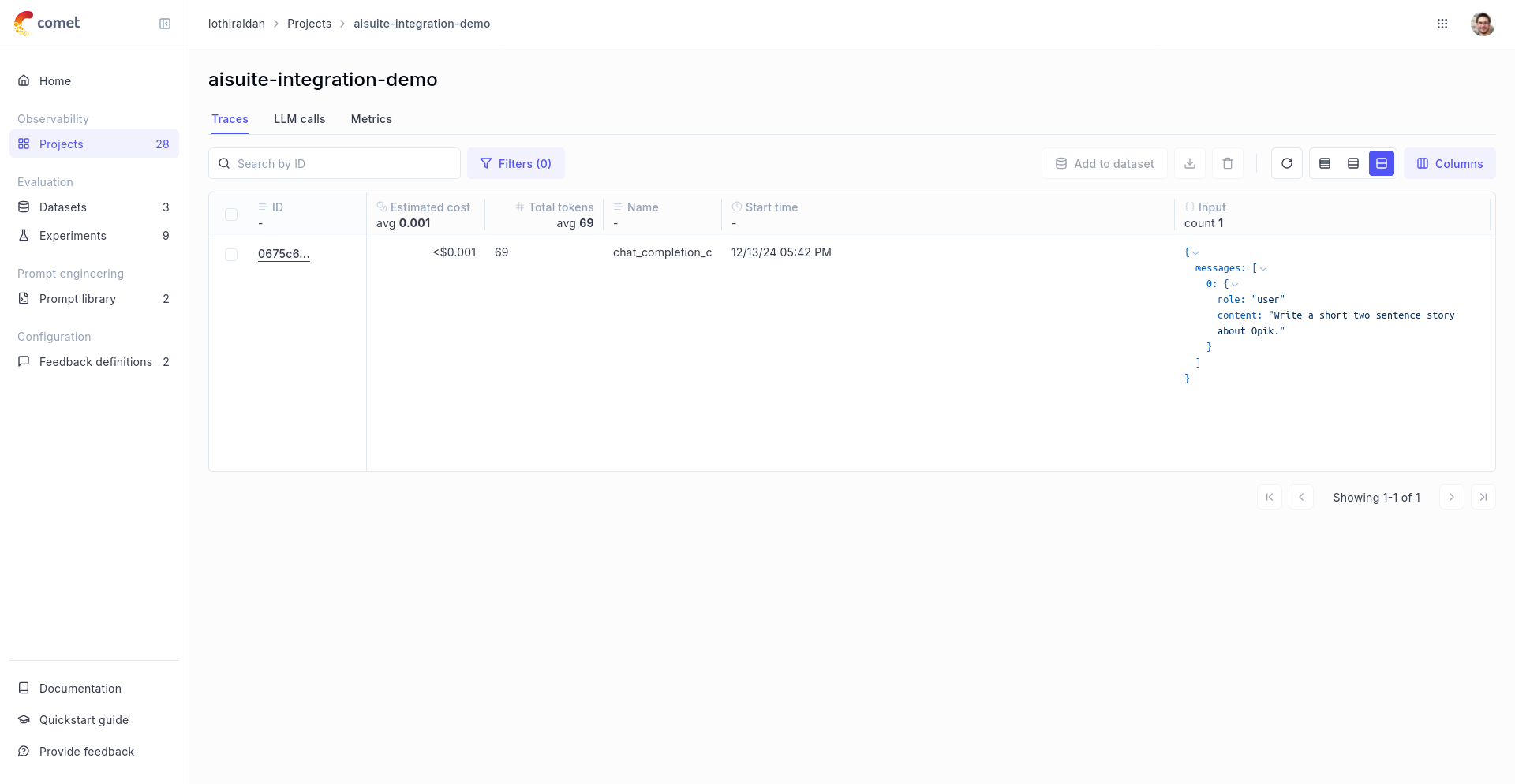

| "The prompt and response messages are automatically logged to Opik and can be viewed in the UI.\n", | ||

| "\n", | ||

| "" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Using it with the `track` decorator\n", | ||

| "\n", | ||

| "If you have multiple steps in your LLM pipeline, you can use the `track` decorator to log the traces for each step. If an aisuite call is made within one of these steps, the LLM call will be associated with that step:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "from opik import track\n", | ||

| "from opik.integrations.aisuite import track_aisuite\n", | ||

| "import aisuite as ai\n", | ||

| "\n", | ||

| "client = track_aisuite(ai.Client(), project_name=\"aisuite-integration-demo\")\n", | ||

| "\n", | ||

| "\n", | ||

| "@track\n", | ||

| "def generate_story(prompt):\n", | ||

| " res = client.chat.completions.create(\n", | ||

| " model=\"openai:gpt-3.5-turbo\", messages=[{\"role\": \"user\", \"content\": prompt}]\n", | ||

| " )\n", | ||

| " return res.choices[0].message.content\n", | ||

| "\n", | ||

| "\n", | ||

| "@track\n", | ||

| "def generate_topic():\n", | ||

| " prompt = \"Generate a topic for a story about Opik.\"\n", | ||

| " res = client.chat.completions.create(\n", | ||

| " model=\"openai:gpt-3.5-turbo\", messages=[{\"role\": \"user\", \"content\": prompt}]\n", | ||

| " )\n", | ||

| " return res.choices[0].message.content\n", | ||

| "\n", | ||

| "\n", | ||

| "@track(project_name=\"aisuite-integration-demo\")\n", | ||

| "def generate_opik_story():\n", | ||

| " topic = generate_topic()\n", | ||

| " story = generate_story(topic)\n", | ||

| " return story\n", | ||

| "\n", | ||

| "\n", | ||

| "generate_opik_story()" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "The trace can now be viewed in the UI:\n", | ||

| "\n", | ||

| "" | ||

| ] | ||

| } | ||

| ], | ||

| "metadata": { | ||

| "kernelspec": { | ||

| "display_name": "Python 3 (ipykernel)", | ||

| "language": "python", | ||

| "name": "python3" | ||

| }, | ||

| "language_info": { | ||

| "codemirror_mode": { | ||

| "name": "ipython", | ||

| "version": 3 | ||

| }, | ||

| "file_extension": ".py", | ||

| "mimetype": "text/x-python", | ||

| "name": "python", | ||

| "nbconvert_exporter": "python", | ||

| "pygments_lexer": "ipython3", | ||

| "version": "3.10.12" | ||

| } | ||

| }, | ||

| "nbformat": 4, | ||

| "nbformat_minor": 4 | ||

| } |

60 changes: 60 additions & 0 deletions

apps/opik-documentation/documentation/docs/tracing/integrations/aisuite.md

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,60 @@ | ||

| --- | ||

| sidebar_label: aisuite | ||

| --- | ||

|

|

||

| # aisuite | ||

|

|

||

| This guide explains how to integrate Opik with the aisuite Python SDK. By wrapping your aisuite client with the `track_aisuite` function provided by opik, you can track and evaluate your aisuite API calls within your Opik projects. Opik automatically logs the input prompt, model used, token usage, and response generated. | ||

|

|

||

| <div style="display: flex; align-items: center; flex-wrap: wrap; margin: 20px 0;"> | ||

| <span style="margin-right: 10px;">You can check out the Colab Notebook if you'd like to jump straight to the code:</span> | ||

| <a href="https://colab.research.google.com/github/comet-ml/opik/blob/main/apps/opik-documentation/documentation/docs/cookbook/aisuite.ipynb" target="_blank" rel="noopener noreferrer"> | ||

| <img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab" style="vertical-align: middle;"/> | ||

| </a> | ||

| </div> | ||

|

|

||

| ## Getting started | ||

|

|

||

| First, ensure you have both `opik` and `aisuite` packages installed: | ||

|

|

||

| ```bash | ||

| pip install opik "aisuite[openai]" | ||

| ``` | ||

|

|

||

| In addition, you can configure Opik using the `opik configure` command, which will prompt you for your local server address or, if you are using the Cloud platform, your API key: | ||

|

|

||

| ```bash | ||

| opik configure | ||

| ``` | ||

|

|

||

| ## Tracking aisuite API calls | ||

|

|

||

| ```python | ||

| from opik.integrations.aisuite import track_aisuite | ||

| import aisuite as ai | ||

|

|

||

| client = track_aisuite(ai.Client(), project_name="aisuite-integration-demo") | ||

|

|

||

| messages = [ | ||

| {"role": "user", "content": "Write a short two sentence story about Opik."}, | ||

| ] | ||

|

|

||

| response = client.chat.completions.create( | ||

| model="openai:gpt-4o", | ||

| messages=messages, | ||

| temperature=0.75 | ||

| ) | ||

| print(response.choices[0].message.content) | ||

| ``` | ||

|

|

||

| The `track_aisuite` wrapper will automatically track and log the API call, including the input prompt, model used, and response generated. You can view these logs in your Opik project dashboard. | ||

|

|

||

| By following these steps, you can seamlessly integrate Opik with the aisuite Python SDK and gain valuable insights into your model's performance and usage. | ||

|

|

||

| ## Supported aisuite methods | ||

|

|

||

| The `track_aisuite` wrapper supports the following aisuite methods: | ||

|

|

||

| - `aisuite.Client.chat.completions.create()` | ||

|

|

||

| If you would like to track another aisuite method, please let us know by opening an issue on [GitHub](https://github.com/comet-ml/opik/issues). |
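
To make the wrapping pattern described in this doc more concrete, the sketch below shows one way a wrapper could intercept `chat.completions.create` and record the prompt, model, and response. It uses only the standard library and a fake client, so `toy_track_client`, `fake_create`, and `LOGGED_CALLS` are all hypothetical names. It illustrates the general technique and makes no claim about how `track_aisuite` works internally.

```python
import functools
from types import SimpleNamespace

# Hypothetical in-memory log; Opik stores traces in its own backend.
LOGGED_CALLS = []


def fake_create(model, messages, **kwargs):
    """Stand-in for a chat completion call with an OpenAI-style response shape."""
    reply = SimpleNamespace(content=f"echo: {messages[-1]['content']}")
    return SimpleNamespace(choices=[SimpleNamespace(message=reply)])


def toy_track_client(client):
    """Replace client.chat.completions.create with a version that logs each call."""
    original = client.chat.completions.create

    @functools.wraps(original)
    def logged_create(*, model, messages, **kwargs):
        response = original(model=model, messages=messages, **kwargs)
        LOGGED_CALLS.append(
            {
                "model": model,
                "input": messages,
                "output": response.choices[0].message.content,
            }
        )
        return response

    client.chat.completions.create = logged_create
    return client


# Build a fake client with the same attribute path as the real one.
client = toy_track_client(
    SimpleNamespace(chat=SimpleNamespace(completions=SimpleNamespace(create=fake_create)))
)

response = client.chat.completions.create(
    model="openai:gpt-4o",
    messages=[{"role": "user", "content": "Hello"}],
    temperature=0.75,
)
print(response.choices[0].message.content)
print(LOGGED_CALLS[0]["model"])
```

The calling code is unchanged after wrapping, which is the property that lets a tracking wrapper be added to an existing client without touching the call sites.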

Binary file added

BIN

+140 KB

...opik-documentation/documentation/static/img/cookbook/aisuite_trace_cookbook.png

Binary file added

BIN

+302 KB

...entation/documentation/static/img/cookbook/aisuite_trace_decorator_cookbook.png
