Commit

* Draft Haystack integration
* Updated Haystack integration
* Fix linter
* Add Haystack integration docs
* Fix SyntaxError in Python 3.10
* Add Haystack integration to doc sidebar
* Remove redundant comments, fix OpikConnector.run method mistakes
* Refactor haystack
* Refactor the integration, add fake_backend_without_batching fixture
* Remove prints
* Refactor tracer, make the test stricter
* fix sort integration names in the docs
* Fix lint errors

---------

Co-authored-by: Boris Feld <[email protected]>
Co-authored-by: Aliaksandr Kuzmik <[email protected]>
Co-authored-by: Aliaksandr Kuzmik <[email protected]>

1 parent 909d93d, commit 19b6a82

Showing 18 changed files with 1,042 additions and 9 deletions.

231 changes: 231 additions & 0 deletions

apps/opik-documentation/documentation/docs/cookbook/haystack.ipynb

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,231 @@ | ||

| { | ||

| "cells": [ | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "# Using Opik with Haystack\n", | ||

| "\n", | ||

| "[Haystack](https://docs.haystack.deepset.ai/docs/intro) is an open-source framework for building production-ready LLM applications, retrieval-augmented generative pipelines and state-of-the-art search systems that work intelligently over large document collections.\n", | ||

| "\n", | ||

| "In this guide, we will showcase how to integrate Opik with Haystack so that all the Haystack calls are logged as traces in Opik." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Creating an account on Comet.com\n", | ||

| "\n", | ||

| "[Comet](https://www.comet.com/site?from=llm&utm_source=opik&utm_medium=colab&utm_content=haystack&utm_campaign=opik) provides a hosted version of the Opik platform. [Create an account](https://www.comet.com/signup?from=llm&utm_source=opik&utm_medium=colab&utm_content=haystack&utm_campaign=opik) and grab your API key.\n", | ||

| "\n", | ||

| "> You can also run the Opik platform locally, see the [installation guide](https://www.comet.com/docs/opik/self-host/overview/?from=llm&utm_source=opik&utm_medium=colab&utm_content=haystack&utm_campaign=opik) for more information." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "%pip install --upgrade --quiet opik haystack-ai" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "import opik\n", | ||

| "\n", | ||

| "opik.configure(use_local=False)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 2, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "import os\n", | ||

| "import getpass\n", | ||

| "\n", | ||

| "if \"OPENAI_API_KEY\" not in os.environ:\n", | ||

| " os.environ[\"OPENAI_API_KEY\"] = getpass.getpass(\"Enter your OpenAI API key: \")" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Creating the Haystack pipeline\n", | ||

| "\n", | ||

| "In this example, we will create a simple pipeline that uses a prompt template to translate text to German.\n", | ||

| "\n", | ||

| "To enable Opik tracing, we will:\n", | ||

| "1. Enable content tracing in Haystack by setting the environment variable `HAYSTACK_CONTENT_TRACING_ENABLED=true`\n", | ||

| "2. Add the `OpikConnector` component to the pipeline\n", | ||

| "\n", | ||

| "Note: The `OpikConnector` component is a special component that automatically logs the pipeline's traces as Opik traces. It should not be connected to any other component." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 5, | ||

| "metadata": {}, | ||

| "outputs": [ | ||

| { | ||

| "name": "stderr", | ||

| "output_type": "stream", | ||

| "text": [ | ||

| "OPIK: Traces will not be logged to Opik because Haystack tracing is disabled. To enable, set the HAYSTACK_CONTENT_TRACING_ENABLED environment variable to true before importing Haystack.\n", | ||

| "OPIK: Started logging traces to the \"Default Project\" project at https://www.comet.com/opik/jacques-comet/redirect/projects?name=Default%20Project.\n" | ||

| ] | ||

| }, | ||

| { | ||

| "name": "stdout", | ||

| "output_type": "stream", | ||

| "text": [ | ||

| "Trace ID: 0675969a-fd40-7fcb-8000-0287dec9559f\n", | ||

| "ChatMessage(content='Berlin ist die Hauptstadt Deutschlands und zugleich eine der aufregendsten und vielfältigsten Städte Europas. Die Metropole hat eine reiche Geschichte, die sich in ihren historischen Gebäuden, Museen und Denkmälern widerspiegelt. Berlin ist auch bekannt für seine lebendige Kunst- und Kulturszene, mit unzähligen Galerien, Theatern und Musikveranstaltungen.\\n\\nDie Stadt ist zudem ein Schmelztiegel der Kulturen, was sich in der vielfältigen Gastronomie, den lebhaften Märkten und den multikulturellen Vierteln widerspiegelt. Berlin bietet auch eine lebendige Nightlife-Szene, mit zahlreichen Bars, Clubs und Veranstaltungen für jeden Geschmack.\\n\\nNeben all dem kulturellen Reichtum hat Berlin auch eine grüne Seite, mit vielen Parks, Gärten und Seen, die zum Entspannen und Erholen einladen. Insgesamt ist Berlin eine Stadt, die für jeden etwas zu bieten hat und die Besucher mit ihrer Vielfalt und Offenheit begeistert.', role=<ChatRole.ASSISTANT: 'assistant'>, name=None, meta={'model': 'gpt-3.5-turbo-0125', 'index': 0, 'finish_reason': 'stop', 'usage': {'completion_tokens': 255, 'prompt_tokens': 29, 'total_tokens': 284, 'completion_tokens_details': CompletionTokensDetails(accepted_prediction_tokens=0, audio_tokens=0, reasoning_tokens=0, rejected_prediction_tokens=0), 'prompt_tokens_details': PromptTokensDetails(audio_tokens=0, cached_tokens=0)}})\n" | ||

| ] | ||

| } | ||

| ], | ||

| "source": [ | ||

| "import os\n", | ||

| "\n", | ||

| "os.environ[\"HAYSTACK_CONTENT_TRACING_ENABLED\"] = \"true\"\n", | ||

| "\n", | ||

| "from haystack import Pipeline\n", | ||

| "from haystack.components.builders import ChatPromptBuilder\n", | ||

| "from haystack.components.generators.chat import OpenAIChatGenerator\n", | ||

| "from haystack.dataclasses import ChatMessage\n", | ||

| "\n", | ||

| "from opik.integrations.haystack import OpikConnector\n", | ||

| "\n", | ||

| "\n", | ||

| "pipe = Pipeline()\n", | ||

| "\n", | ||

| "# Add the OpikConnector component to the pipeline\n", | ||

| "pipe.add_component(\"tracer\", OpikConnector(\"Chat example\"))\n", | ||

| "\n", | ||

| "# Continue building the pipeline\n", | ||

| "pipe.add_component(\"prompt_builder\", ChatPromptBuilder())\n", | ||

| "pipe.add_component(\"llm\", OpenAIChatGenerator(model=\"gpt-3.5-turbo\"))\n", | ||

| "\n", | ||

| "pipe.connect(\"prompt_builder.prompt\", \"llm.messages\")\n", | ||

| "\n", | ||

| "messages = [\n", | ||

| " ChatMessage.from_system(\n", | ||

| " \"Always respond in German even if some input data is in other languages.\"\n", | ||

| " ),\n", | ||

| " ChatMessage.from_user(\"Tell me about {{location}}\"),\n", | ||

| "]\n", | ||

| "\n", | ||

| "response = pipe.run(\n", | ||

| " data={\n", | ||

| " \"prompt_builder\": {\n", | ||

| " \"template_variables\": {\"location\": \"Berlin\"},\n", | ||

| " \"template\": messages,\n", | ||

| " }\n", | ||

| " }\n", | ||

| ")\n", | ||

| "\n", | ||

| "trace_id = response[\"tracer\"][\"trace_id\"]\n", | ||

| "print(f\"Trace ID: {trace_id}\")\n", | ||

| "print(response[\"llm\"][\"replies\"][0])" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

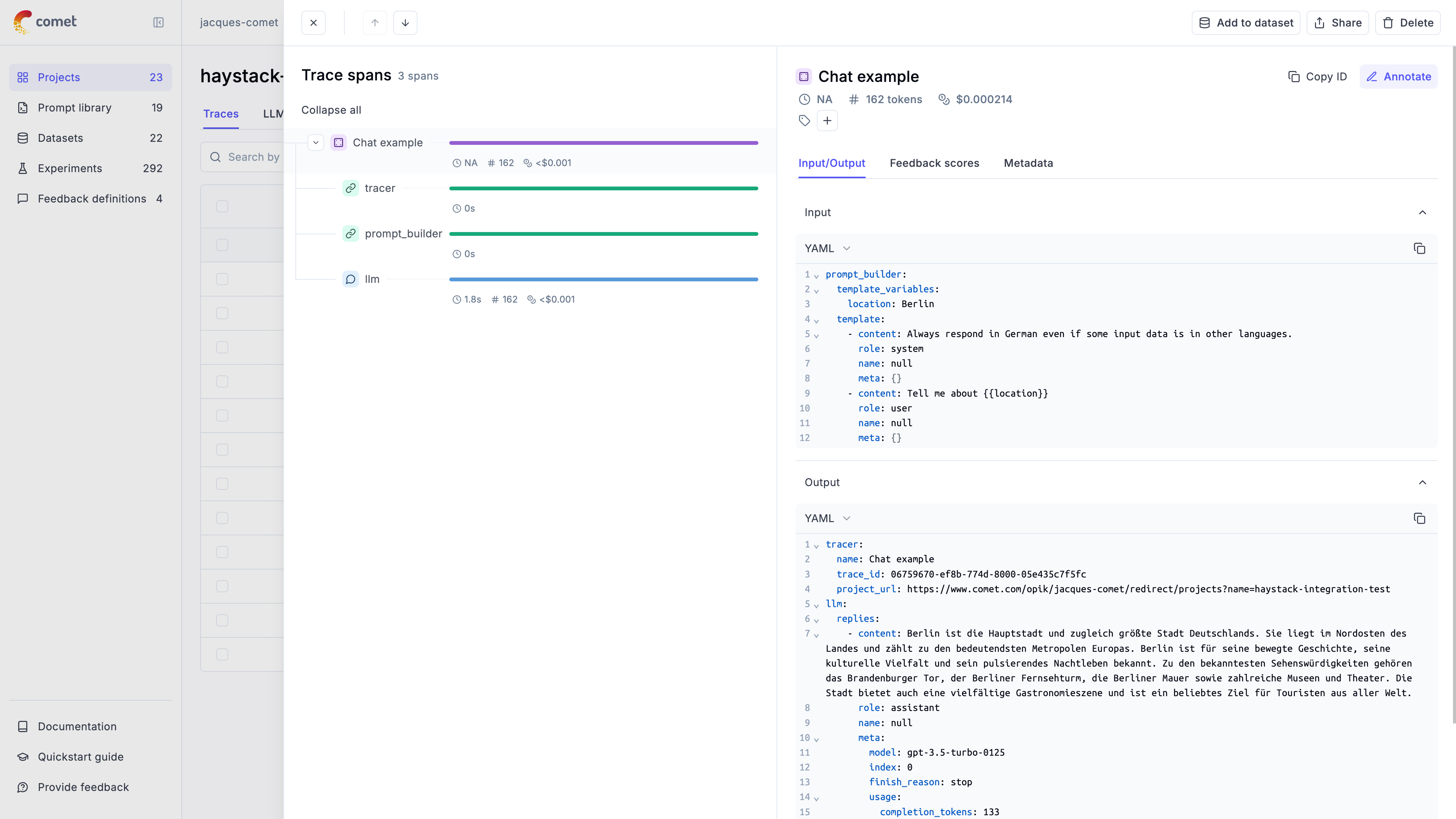

| "The trace is now logged to the Opik platform:\n", | ||

| "\n", | ||

| "" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Advanced usage\n", | ||

| "\n", | ||

| "### Ensuring the trace is logged\n", | ||

| "\n", | ||

| "By default, the `OpikConnector` flushes the trace to the Opik platform after each component, blocking the calling thread while it does so. To avoid this overhead, you can disable flushing after each component by setting the `HAYSTACK_OPIK_ENFORCE_FLUSH` environment variable to `false`.\n", | ||

| "\n", | ||

| "**Caution**: Disabling this feature may result in data loss if the program crashes before the data is sent to Opik. Make sure you call the `flush()` method explicitly before the program exits:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 6, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "from haystack.tracing import tracer\n", | ||

| "\n", | ||

| "tracer.actual_tracer.flush()" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Getting the trace ID\n", | ||

| "\n", | ||

| "If you would like to log additional information to the trace, you will need the trace ID. You can read it from the `tracer` key in the pipeline's response:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 7, | ||

| "metadata": {}, | ||

| "outputs": [ | ||

| { | ||

| "name": "stdout", | ||

| "output_type": "stream", | ||

| "text": [ | ||

| "Trace ID: 067596ab-da00-7c1f-8000-53f7af5fc3de\n" | ||

| ] | ||

| } | ||

| ], | ||

| "source": [ | ||

| "response = pipe.run(\n", | ||

| " data={\n", | ||

| " \"prompt_builder\": {\n", | ||

| " \"template_variables\": {\"location\": \"Berlin\"},\n", | ||

| " \"template\": messages,\n", | ||

| " }\n", | ||

| " }\n", | ||

| ")\n", | ||

| "\n", | ||

| "trace_id = response[\"tracer\"][\"trace_id\"]\n", | ||

| "print(f\"Trace ID: {trace_id}\")" | ||

| ] | ||

| } | ||

| ], | ||

| "metadata": { | ||

| "kernelspec": { | ||

| "display_name": "py312_llm_eval", | ||

| "language": "python", | ||

| "name": "python3" | ||

| }, | ||

| "language_info": { | ||

| "codemirror_mode": { | ||

| "name": "ipython", | ||

| "version": 3 | ||

| }, | ||

| "file_extension": ".py", | ||

| "mimetype": "text/x-python", | ||

| "name": "python", | ||

| "nbconvert_exporter": "python", | ||

| "pygments_lexer": "ipython3", | ||

| "version": "3.12.4" | ||

| } | ||

| }, | ||

| "nbformat": 4, | ||

| "nbformat_minor": 2 | ||

| } |
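The notebook's captured stderr shows what happens when `HAYSTACK_CONTENT_TRACING_ENABLED` is set too late: the variable has to be in place before Haystack is imported. Below is a minimal sketch of a setup step that sets both environment variables named in the notebook and registers a flush at exit. The helper names `configure_tracing_env` and `flush_haystack_tracer` are hypothetical and not part of `opik` or `haystack`; the only library surface relied on is `haystack.tracing.tracer.actual_tracer.flush()`, as used in the notebook, and it is looked up defensively so the sketch also runs when Haystack is absent.

```python
import atexit
import os
import sys

# Both variable names come from the notebook in this commit.
CONTENT_TRACING_VAR = "HAYSTACK_CONTENT_TRACING_ENABLED"
ENFORCE_FLUSH_VAR = "HAYSTACK_OPIK_ENFORCE_FLUSH"


def configure_tracing_env(enforce_flush: bool = True) -> bool:
    """Set the tracing variables (hypothetical helper).

    Returns True if Haystack had not been imported yet, i.e. the
    content-tracing variable was set early enough to take effect.
    """
    set_in_time = "haystack" not in sys.modules
    os.environ[CONTENT_TRACING_VAR] = "true"
    if not enforce_flush:
        # Only written when opting out of the per-component flush.
        os.environ[ENFORCE_FLUSH_VAR] = "false"
    return set_in_time


def flush_haystack_tracer() -> bool:
    """Flush the active Haystack tracer if one is loaded and exposes flush()."""
    tracing_module = sys.modules.get("haystack.tracing")
    if tracing_module is None:
        return False  # Haystack was never imported, nothing to flush.
    proxy = getattr(tracing_module, "tracer", None)
    flush = getattr(getattr(proxy, "actual_tracer", None), "flush", None)
    if not callable(flush):
        return False  # The active tracer has no flush() method.
    flush()
    return True


# Call this at the very top of the script, before `from haystack import ...`.
set_in_time = configure_tracing_env(enforce_flush=False)

# With per-component flushing disabled, flush once when the program exits.
atexit.register(flush_haystack_tracer)
```

Registering the flush with `atexit` covers normal interpreter shutdown only; a hard crash can still lose unsent traces, which is the trade-off the caution in the notebook describes.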

130 changes: 130 additions & 0 deletions

apps/opik-documentation/documentation/docs/tracing/integrations/haystack.md

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,130 @@ | ||

| --- | ||

| sidebar_label: Haystack | ||

| --- | ||

|

|

||

| # Haystack | ||

|

|

||

| [Haystack](https://docs.haystack.deepset.ai/docs/intro) is an open-source framework for building production-ready LLM applications, retrieval-augmented generative pipelines and state-of-the-art search systems that work intelligently over large document collections. | ||

|

|

||

| Opik integrates with Haystack to log traces for all Haystack pipelines. | ||

|

|

||

| ## Getting started | ||

|

|

||

| First, ensure you have both `opik` and `haystack-ai` installed: | ||

|

|

||

| ```bash | ||

| pip install opik haystack-ai | ||

| ``` | ||

|

|

||

| In addition, you can configure Opik using the `opik configure` command, which will prompt you for the local server address or, if you are using the Cloud platform, your API key: | ||

|

|

||

| ```bash | ||

| opik configure | ||

| ``` | ||

|

|

||

| ## Logging Haystack pipeline runs | ||

|

|

||

| To log a Haystack pipeline run, you can use the [`OpikConnector`](https://www.comet.com/docs/opik/python-sdk-reference/integrations/haystack/OpikConnector.html). This connector will log the pipeline run to the Opik platform and add a `tracer` key to the pipeline run response with the trace ID: | ||

|

|

||

| ```python | ||

| import os | ||

|

|

||

| os.environ["HAYSTACK_CONTENT_TRACING_ENABLED"] = "true" | ||

|

|

||

| from haystack import Pipeline | ||

| from haystack.components.builders import ChatPromptBuilder | ||

| from haystack.components.generators.chat import OpenAIChatGenerator | ||

| from haystack.dataclasses import ChatMessage | ||

|

|

||

| from opik.integrations.haystack import OpikConnector | ||

|

|

||

|

|

||

| pipe = Pipeline() | ||

|

|

||

| # Add the OpikConnector component to the pipeline | ||

| pipe.add_component( | ||

| "tracer", OpikConnector("Chat example") | ||

| ) | ||

|

|

||

| # Continue building the pipeline | ||

| pipe.add_component("prompt_builder", ChatPromptBuilder()) | ||

| pipe.add_component("llm", OpenAIChatGenerator(model="gpt-3.5-turbo")) | ||

|

|

||

| pipe.connect("prompt_builder.prompt", "llm.messages") | ||

|

|

||

| messages = [ | ||

| ChatMessage.from_system( | ||

| "Always respond in German even if some input data is in other languages." | ||

| ), | ||

| ChatMessage.from_user("Tell me about {{location}}"), | ||

| ] | ||

|

|

||

| response = pipe.run( | ||

| data={ | ||

| "prompt_builder": { | ||

| "template_variables": {"location": "Berlin"}, | ||

| "template": messages, | ||

| } | ||

| } | ||

| ) | ||

|

|

||

| print(response["llm"]["replies"][0]) | ||

| ``` | ||

|

|

||

| Each pipeline run will now be logged to the Opik platform: | ||

|

|

||

|  | ||

|

|

||

| :::tip | ||

|

|

||

| To ensure the traces are logged correctly, set the environment variable `HAYSTACK_CONTENT_TRACING_ENABLED` to `true` before importing Haystack. | ||

|

|

||

| ::: | ||

|

|

||

| ## Advanced usage | ||

|

|

||

| ### Disabling automatic flushing of traces | ||

|

|

||

| By default, the `OpikConnector` flushes the trace to the Opik platform after each component, blocking the calling thread while it does so. To avoid this overhead, you may want to disable flushing after each component by setting the `HAYSTACK_OPIK_ENFORCE_FLUSH` environment variable to `false`. | ||

|

|

||

| In order to make sure that all traces are logged to the Opik platform before you exit a script, you can use the `flush` method: | ||

|

|

||

| ```python | ||

| from haystack.tracing import tracer | ||

|

|

||

| # Pipeline definition | ||

|

|

||

| tracer.actual_tracer.flush() | ||

| ``` | ||

|

|

||

| :::warning | ||

|

|

||

| Disabling this feature may result in data loss if the program crashes before the data is sent to Opik. Make sure you call the `flush()` method explicitly before the program exits. | ||

|

|

||

| ::: | ||

|

|

||

| ### Updating logged traces | ||

|

|

||

| The `OpikConnector` returns the logged trace ID in the pipeline run response. You can use this ID to update the trace with feedback scores or other metadata: | ||

|

|

||

| ```python | ||

| import opik | ||

|

|

||

| response = pipe.run( | ||

| data={ | ||

| "prompt_builder": { | ||

| "template_variables": {"location": "Berlin"}, | ||

| "template": messages, | ||

| } | ||

| } | ||

| ) | ||

|

|

||

| # Get the trace ID from the pipeline run response | ||

| trace_id = response["tracer"]["trace_id"] | ||

|

|

||

| # Log the feedback score | ||

| opik_client = opik.Opik() | ||

| opik_client.log_traces_feedback_scores([ | ||

| {"id": trace_id, "name": "user-feedback", "value": 0.5} | ||

| ]) | ||

| ``` |
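The last snippet in the docs assumes the `tracer` key is always present in the response. A minimal sketch of a more defensive variant follows; `extract_trace_id` and `build_feedback_scores` are hypothetical helpers, not part of the Opik SDK, and the `response` dict is a stand-in for the value returned by `pipe.run(...)`, reusing the trace ID printed in the notebook output. The score dicts use the same `id`, `name`, and `value` keys as the docs.

```python
from typing import Any, Dict, List, Optional


def extract_trace_id(
    response: Dict[str, Any], component: str = "tracer"
) -> Optional[str]:
    """Return the trace ID stored under the connector's key, or None."""
    return response.get(component, {}).get("trace_id")


def build_feedback_scores(
    trace_ids: List[str], name: str, value: float
) -> List[Dict[str, Any]]:
    """Build one score dict per trace, with the keys shown in the docs."""
    return [{"id": trace_id, "name": name, "value": value} for trace_id in trace_ids]


# Stand-in for the dict returned by pipe.run(...).
response = {"tracer": {"trace_id": "067596ab-da00-7c1f-8000-53f7af5fc3de"}}

trace_id = extract_trace_id(response)
scores = build_feedback_scores([trace_id] if trace_id else [], "user-feedback", 0.5)
print(scores)

# With a configured client, the list would be passed on as in the docs:
# opik.Opik().log_traces_feedback_scores(scores)
```

Keeping the payload construction separate from the client call makes it easy to batch scores for several pipeline runs into one request, and to skip the call entirely when no trace ID came back.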
