
Networked Replication #19


fixed links

Updating from Discord feedback.

pretty lazy, but it's something

…er" vs "connection"

"sparesets"

more neutral tone in the implementation details


Can't add anything useful at the moment, but I just want to thank you for the effort you've put into this RFC. I think this might turn into something quite unique among general-purpose engines and extremely valuable for multiplayer game developers. I've been developing my own prototype of a multiplayer game in Bevy. I'm by no means an expert in writing netcode, but so far this is my most successful attempt. I thought I'd share it; it might give some insight into what a multiplayer game needs, which patterns we could incorporate into the engine and make easier to use, and which patterns to avoid. :) vladbat00/muddle-run#7 (still a work in progress, but it already works on desktop and you can play around with it). I've implemented the following features:

I don't claim they're perfectly executed or bug-free, but at least you can control the spawned cubes with WASD, although the movement is a bit jittery.

implementation_details.md


## "Clock" Synchronization

Ideally, clients predict ahead by just enough to have their inputs reach the server right before they're needed. People often try to have clients estimate the clock time on the server (with some SNTP handshake) and use that to schedule the next simulation step, but that's overly complex.

What we really care about is: How much time passes between when the server receives my input and when that input is consumed? If the server simply tells clients how long their inputs are waiting in its buffer, the clients can use that information to converge on the correct lead.


Can't clients just estimate how far ahead they need to be from the RTT? They can usually guess the current frame number on the server (assuming it comes with updates), they know how long it takes on average for their packets to be acknowledged, and they can also estimate jitter and packet loss.

Or is it a good practice for servers to tell by how much a client has to be ahead explicitly?


IMO guessing is more work, not less.

What we're trying to control is the predict-ahead amount. It's simpler and more accurate to just do that directly.

RTT can only be approximated, whereas the server can measure the exact duration inputs spend in its buffer (more informative, too, since it "contains" both latency and jitter). Loss can be interpreted as a negative duration, so it doesn't need to be estimated either.

> Or is it a good practice for servers to tell by how much a client has to be ahead explicitly?

This can be an entirely client-sided process. In my example, the server only tells clients how much they are ahead (sent with the updates). Clients could decide for themselves how much to be ahead.
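A minimal sketch of the client-side half of this idea, assuming the server reports, with each update, how long the client's latest input waited in its buffer (negative if it arrived late or was lost). The names `LeadController` and `target_wait_ms`, the smoothing factor, the gain, and the ±10% clamp are hypothetical choices for illustration, not part of the RFC. The client runs its fixed timestep slightly faster or slower until the reported wait converges on a small target.

```rust
/// Hypothetical client-side controller that converges on the right prediction lead
/// using only the buffer wait time reported by the server.
struct LeadController {
    /// How long we would like our inputs to sit in the server's buffer (ms).
    target_wait_ms: f64,
    /// Exponential moving average of the reported wait times (ms).
    smoothed_wait_ms: f64,
    /// Smoothing factor in (0, 1]; higher reacts faster but is noisier.
    alpha: f64,
}

impl LeadController {
    fn new(target_wait_ms: f64, alpha: f64) -> Self {
        Self { target_wait_ms, smoothed_wait_ms: target_wait_ms, alpha }
    }

    /// Feed one report from the server. A late or lost input is reported as a
    /// negative wait, so it naturally pushes the client to lead by more.
    fn on_report(&mut self, reported_wait_ms: f64) {
        self.smoothed_wait_ms += self.alpha * (reported_wait_ms - self.smoothed_wait_ms);
    }

    /// Multiplier for the client's simulation rate. Inputs waiting too long means
    /// the client is too far ahead, so it slows down; too short (or negative) means
    /// it is not far enough ahead, so it speeds up.
    fn time_scale(&self) -> f64 {
        const GAIN: f64 = 0.01; // assumed: scale change per ms of error
        let error_ms = self.smoothed_wait_ms - self.target_wait_ms;
        (1.0 - GAIN * error_ms).clamp(0.9, 1.1)
    }
}
```

A client could, for example, multiply the delta fed into its fixed-timestep accumulator by `time_scale()` each frame. Nudging the tick rate instead of jumping the tick counter keeps the adjustment smooth and entirely client-side, as described above.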

implementation_details.md


3. Always roll back and re-simulate.

Now, if you're thinking that's wasteful, the "if mispredicted" gives you a false sense of security. If I make a game and claim it can roll back 250 ms, that should basically mean *any* 250 ms, with no stuttering. If clients *always* roll back and re-simulate, it'll be easier to profile and optimize for that. As a bonus, clients never need to store old predicted states.


Good point.

I just wanted to clarify this bit:

> As a bonus, clients never need to store old predicted states.

It means that we still store the history of authoritative updates, but clients can simply avoid adding predicted states on top of those, correct? (I.e. they can store just the latest one.) And we still need to store a buffer of local players' commands, to be able to re-sim the predicted states.


Yes, that's exactly it.
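A minimal sketch of the "always roll back and re-simulate" loop as clarified above: the client keeps only the latest authoritative state plus a buffer of its own unacknowledged inputs, and recomputes the prediction from scratch every time. `State`, `Input`, `step`, and `Predictor` are hypothetical stand-ins for the networked world, the per-tick player commands, and the user's fixed-update simulation.

```rust
use std::collections::VecDeque;

/// Hypothetical stand-in for the networked world state.
#[derive(Clone, Copy, Debug, PartialEq)]
struct State {
    pos: i32,
}

/// One local player command, tagged with the tick it was issued for.
#[derive(Clone, Copy, Debug)]
struct Input {
    tick: u32,
    dx: i32,
}

/// The user's deterministic simulation step (one fixed update).
fn step(state: State, input: Input) -> State {
    State { pos: state.pos + input.dx }
}

struct Predictor {
    /// Latest state confirmed by the server; no older predicted states are kept.
    authoritative: State,
    /// Local inputs the server has not yet confirmed consuming.
    pending: VecDeque<Input>,
}

impl Predictor {
    fn new(initial: State) -> Self {
        Self { authoritative: initial, pending: VecDeque::new() }
    }

    fn push_input(&mut self, input: Input) {
        self.pending.push_back(input);
    }

    /// Replace the authoritative state and drop inputs the server already consumed.
    fn on_server_update(&mut self, state: State, last_consumed_tick: u32) {
        self.authoritative = state;
        while matches!(self.pending.front(), Some(i) if i.tick <= last_consumed_tick) {
            self.pending.pop_front();
        }
    }

    /// Always roll back to the authoritative state and re-simulate every pending
    /// input, whether or not the previous prediction was wrong.
    fn predicted(&self) -> State {
        self.pending.iter().fold(self.authoritative, |s, &i| step(s, i))
    }
}
```

Because the cost of `predicted()` depends only on how many inputs are pending, the worst case (a full rollback window) is also the common case, which is what makes it easy to profile.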

…ils.md Worded more generically until I have a working implementation.

more nitpicky edits

networked_replication.md


## Unresolved Questions

- Can we provide lints for undefined behavior like mutating networked state outside of `NetworkFixedUpdate`?
- Do rollbacks break change detection or events?
- ~~Do rollbacks break change detection or events?~~ As long as we're careful to update the appropriate change ticks, it should be okay.


Events don't work off of change ticks at the moment sadly :( This will need to be explicitly tested; or we can try to work out an events design to use change ticks.


Ah, good point. I was tunnel-visioned on the change detection when I edited that.

I haven't invested much thought into events yet, but they will be tricky, I think. The ones contained inside the simulation loop might actually be the most straightforward.

Events that are sent out to be consumed by "non-networked" systems, UI, etc. will have weird requirements because of mispredictions.
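To illustrate the change-tick point from the unresolved questions, here is a minimal sketch assuming a simplified change-detection model: each value records the tick it last changed, and a system treats it as changed if that tick is newer than the system's own last run. `Tracked`, `restore`, and `is_changed_since` are hypothetical names loosely modeled on ECS change detection, not Bevy's actual API. The point is that restoring a rolled-back value must stamp it with the *current* tick; restoring the old tick alongside the old value would hide the correction from systems that already ran. Events, as noted above, are not covered by this.

```rust
/// Hypothetical value-plus-change-tick pair (not Bevy's real change detection API).
#[derive(Clone, Debug)]
struct Tracked<T> {
    value: T,
    changed_tick: u32,
}

impl<T: PartialEq + Clone> Tracked<T> {
    fn new(value: T, tick: u32) -> Self {
        Self { value, changed_tick: tick }
    }

    /// Normal mutation: only bump the tick if the value actually changed.
    fn set(&mut self, value: T, tick: u32) {
        if self.value != value {
            self.value = value;
            self.changed_tick = tick;
        }
    }

    /// Rollback restore: write the snapshot value but stamp it with the current
    /// tick, so systems that last ran before the rollback still see the change.
    fn restore(&mut self, snapshot: &T, current_tick: u32) {
        self.set(snapshot.clone(), current_tick);
    }

    fn is_changed_since(&self, last_run_tick: u32) -> bool {
        self.changed_tick > last_run_tick
    }
}
```

Restoring an identical value leaves the tick alone, so a rollback that reproduces the same state does not trigger spurious change notifications.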

Sorry to all my English teachers. Also moved the player vs. client thing back to the top. The distinction is important for interest management.

trying to be exact with terminology

Been working on a proof of concept for this and found some mistakes in the rushed pseudocode I wrote in the time sync section. Made some revisions, but realized some other stuff was out of date too.

Would it be worth separating this into two modules? The serialization aspect seems distinct from (but a dependency of) the replication aspect. Splitting off

So this doc is a bit outdated / missing details now that I've had some time to work on a plugin implementation. Having "serialization" here is honestly a mistake on my part, since my snapshot memory arena strategy does not (de)serialize anything (it's just a copy). But yes, you still make a good point, and I will most likely put the underlying allocator in its own crate.

I wanna clarify some stuff, though. Prediction comes for free with my strategy. Nothing is blocked upfront, so users will actually have to explicitly opt out using a new

How state is synced is where my impl gets very opinionated. My versions of full and interest-managed state transfer both depend on the contiguous memory arena (mostly for compression). I still need to flesh out an API for users to change the relevance of component values for each client at run time. I'm open to suggestions there. My approach to prioritization does not involve user-defined priorities and changing update frequencies, but those can maybe be added as an option later. My current plan is:


I think those are good models for many game types. I do believe that in any situation where the networking system has to be opinionated, there should be good API slice points for games that don't fit the typical mold to insert their own logic instead. It would be a shame to have things coupled in a way where you can't use something like the helpful serialization attributes for byte-packing component fields just because your game's networking model doesn't match how this system makes its sync/rollback and interest decisions. The networking model has a lot of influence on project cost, so if a custom implementation of part of the stack can save bandwidth by sacrificing features a game doesn't need, all the more power to its developers, and it would be nice to make that easy to insert as an alternative.

I understand where you're coming from, but you're making a lot of assumptions. Let me start by saying that my priorities are, in order:

I'm confident that the architecture I ended up with is minimally invasive and places the fewest restrictions on what users can do, without sacrificing any performance. I do not see a mold being enforced here. The only hard requirement is something every game can do: the game physics have to be a discrete simulation. Users are not going to have to fight against it to "get back" anything, because there's nothing happening behind the scenes that incurs extra cost. Rollback will be as fast as it could ever be, and there's nothing to turn off to make it faster. The performance of a client-side simulation step (and subsequent resims) will be solely up to the user.

I'm open to attribute suggestions here, but there are no other "serialization" attributes except decimal precision, because nothing is serialized. If you know that some integer field always lies between two values, store it as an offset from the minimum and add the minimum back in your systems. The only special case I have is for quaternions, so that decimal precision corresponds to angle precision. There is still compression on top of this, for both full and interest-managed state transfer, and I'm confident the default will pack a lot more data than most strategies users would think of. The only thing that may add waste is the padding bytes in a user's structs (depending on alignment). Storing packed versions of structs is likely worth exploring later, but I'm shelving that until someone demonstrates a need for it after I release my plugin.

I am open to exposing more control over interest management. I already want to leave room for users to swap in their own form of AOI (e.g. potentially visible sets). I just think the default prioritization will work very well. My main goal is a high-performing plugin that everyone can use (without grokking networking to the degree
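Two of the ideas above, sketched under stated assumptions. First, the bounded-integer trick: a hypothetical health value known to lie in 100..=355 fits in a `u8` when stored as an offset from its minimum, and systems add the minimum back when reading it. Second, the thread does not specify the compression scheme, so as an assumed stand-in the sketch XORs a snapshot against a baseline with the same layout (unchanged bytes become zero) and run-length encodes the zero runs. This is one common way to exploit contiguous snapshot memory, not necessarily the plugin's approach; `PackedHealth`, `xor_delta`, `rle_zeros`, and `unrle_zeros` are illustrative names.

```rust
/// Smallest value the (hypothetical) health field can take.
const HEALTH_MIN: i32 = 100;

/// Health stored as an offset from HEALTH_MIN, so 100..=355 fits in one byte.
#[derive(Clone, Copy, Debug, PartialEq)]
struct PackedHealth(u8);

impl PackedHealth {
    /// Returns None if the value is outside the representable range.
    fn encode(health: i32) -> Option<Self> {
        let offset = health - HEALTH_MIN;
        if (0..=255).contains(&offset) {
            Some(PackedHealth(offset as u8))
        } else {
            None
        }
    }

    fn decode(self) -> i32 {
        i32::from(self.0) + HEALTH_MIN
    }
}

/// XOR two equally sized snapshots; bytes that did not change become 0.
fn xor_delta(baseline: &[u8], current: &[u8]) -> Vec<u8> {
    assert_eq!(baseline.len(), current.len(), "snapshots must share a layout");
    baseline.iter().zip(current).map(|(b, c)| b ^ c).collect()
}

/// Encode each run of zero bytes as [0, run_length] (run_length 1..=255);
/// non-zero bytes are copied through unchanged.
fn rle_zeros(data: &[u8]) -> Vec<u8> {
    let mut out = Vec::new();
    let mut i = 0;
    while i < data.len() {
        if data[i] == 0 {
            let mut run: u8 = 0;
            while i < data.len() && data[i] == 0 && run < u8::MAX {
                run += 1;
                i += 1;
            }
            out.push(0);
            out.push(run);
        } else {
            out.push(data[i]);
            i += 1;
        }
    }
    out
}

/// Inverse of `rle_zeros` (assumes well-formed input).
fn unrle_zeros(data: &[u8]) -> Vec<u8> {
    let mut out = Vec::new();
    let mut i = 0;
    while i < data.len() {
        if data[i] == 0 {
            out.extend(std::iter::repeat(0u8).take(usize::from(data[i + 1])));
            i += 2;
        } else {
            out.push(data[i]);
            i += 1;
        }
    }
    out
}
```

Since the arena is a plain copy, the receiver can rebuild the current snapshot by XORing the decoded delta back onto the same baseline; no per-field deserialization is involved.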

I don't think saying that there are many idiosyncratic game netcode requirements, and that no single library can adequately serve them all, is much of an assumption. It's no coincidence that no single gamedev networking paradigm has really emerged victorious. There are divides between genres, at different degrees of scale, between platforms, and there's a rather significant divide between AAA and indie. Unreal works similarly to this on the surface, but there are good reasons to make an Unreal game without using Unreal networking, and in that situation Unreal networking can actually be a hurdle, since it gets in your way. That said, this seems like a good baseline that will serve many projects with its defaults, and Bevy's structure makes everything flexible to begin with. There are also parts of this that could be expanded to serve more paradigms than the ones presented directly here. I do think it would be useful to have the different parts of the stack be as individually accessible as possible, so that a project using something other than this rollback/prediction model can still be served by this plugin (at least if it's intended to be the Bevy networking solution). At the very least, a division between the things Bevy can do to make networking generally easier (fixed timesteps for network processing, serialization/memory arrangement of components, etc.) and the actual networking solution itself would be immensely useful.

If nothing else, I hope I conveyed that I think these are pretty big assumptions, and that challenging them is what led me to start this. I'll admit I don't plan on supporting everything (e.g. no peer-to-peer with more than 2 players). I'm not going to opine on it, but now that I know what I know, I don't think there's much natural division in multiplayer netcode at all. Deterministic vs. authoritative is the big one, but that doesn't translate to much under this architecture.

I mean, not to knock anyone, but it certainly feels like a coincidence to me. Developers have time and budget constraints and they usually aren't designing general-purpose engines. Even in the engine space, Unreal is the only one trying. IMO multiplayer programming just lacks the abundance of learning material that e.g. rendering has. That is changing, but there's still just not much concise, accurate information available (lots of misleading and incorrect information though). I know of only one multiplayer programming textbook. Ideally, some blog posts would expose the fact that multiplayer games are just reinventing distributed systems and databases with worse terminology.

I'll try to keep things as decoupled as I can.

snapshot time is the time stored in the snapshot, not the time when it was received


First of all, thanks for the write-up! I agree that having replication "early" on would be a big plus for Bevy. Not sure if this PR is still being worked on, but here are my two cents. For one of my projects in Bevy, I've implemented a really rough network replication feature that was meant more as a working prototype / example than a production-ready thing, so there are tons of missing features and often the shortest path was taken. But the most important lesson in the end was that the concept of replication works great, and building your server and client with it is as easy as it can get, so we are on a good path there.

But I think mixing replication and networking could be a bad idea. In the end the goal should be to have a replication feature that allows you to sync up two or more

The networking side is another huge part, and I feel like Bevy should care about it as little as possible. There are already amazing projects like quinn (https://github.com/quinn-rs/quinn) that implement the QUIC protocol (UDP) with both reliable and unreliable messages (through a QUIC extension). On top of the service / client itself, there are a lot of other networking features that you also mentioned: encryption, statistics, safety, reliability, scalability and many more. It's not too uncommon to have rather "simple and unsafe" server implementations with proxies on top of them. That applies both to large-scale HTTPS infrastructure and to game servers. We now even have Quilkin, a UDP proxy (https://github.com/googleforgames/quilkin), in the Rust ecosystem. No need to reinvent the wheel here. Looking even further, at typical infrastructure with Kubernetes for example, there is even more work needed.

tl;dr: Keep networking and replication separate concerns (while of course not forgetting that the two can be, but don't have to be, closely related to each other), and let Bevy focus more on the replication part than on networking.


Thank you for the feedback. I want to say upfront that I agree with a lot of what you've said. This RFC already advocates for keeping things modular. I do not want any coupling between the replication code (the save and restore) and whatever plumbing sends and receives the data.

A generalized API for saving and restoring the state of a world (or copying data from one world to another) is outside the scope of this RFC. I think a better place to discuss that would be the "multiple worlds" RFC #43. While I agree that their APIs should be modular, solving replication while ignoring the constraints imposed by networking won't produce a good solution for online multiplayer. That's why this is an RFC for "networked replication" and not "cloning worlds." We could definitely extend Those unique challenges have to be addressed and they heavily motivate storing data in ways that can be compressed really well, really quickly, and I described the strategy I think solves those challenges the best. Said strategy would work in offline contexts too (where compression is unnecessary), but those aren't my focus.

Sockets, messaging protocols, etc. are beyond the scope of the RFC. I know that Steam, Epic, Microsoft, Amazon, etc. all have their own socket and authentication libraries that you must use to access their infrastructure, so I agree to the extent that I think you mean keep things modular. If you're saying that Bevy shouldn't have some UDP thing of its own, I'm inclined to disagree. I think if we're gonna advertise "Bevy can do online multiplayer" at some point, there should at least be something simple built in for testing purposes. It just needs to be modular.
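A minimal sketch of the kind of seam this implies, assuming replication code only ever hands opaque byte payloads to a transport. The `Transport` trait, the in-memory `Loopback` backend (the sort of trivial built-in option that could serve for testing), and the tick-prefixed payload layout are all hypothetical; platform socket libraries, QUIC, or WebRTC would sit behind the same trait.

```rust
use std::collections::VecDeque;

/// Hypothetical transport seam: replication code sees only byte payloads.
trait Transport {
    fn send(&mut self, payload: &[u8]);
    fn recv(&mut self) -> Option<Vec<u8>>;
}

/// In-memory loopback backend; useful for tests, no real networking involved.
#[derive(Default)]
struct Loopback {
    queue: VecDeque<Vec<u8>>,
}

impl Transport for Loopback {
    fn send(&mut self, payload: &[u8]) {
        self.queue.push_back(payload.to_vec());
    }

    fn recv(&mut self) -> Option<Vec<u8>> {
        self.queue.pop_front()
    }
}

/// Replication-side code written against the trait, not a concrete socket.
/// Assumed layout: 4-byte little-endian tick followed by the raw snapshot bytes.
fn send_snapshot<T: Transport>(transport: &mut T, tick: u32, state: &[u8]) {
    let mut payload = tick.to_le_bytes().to_vec();
    payload.extend_from_slice(state);
    transport.send(&payload);
}

fn receive_snapshot<T: Transport>(transport: &mut T) -> Option<(u32, Vec<u8>)> {
    let payload = transport.recv()?;
    if payload.len() < 4 {
        return None; // malformed: too short to hold a tick
    }
    let tick = u32::from_le_bytes([payload[0], payload[1], payload[2], payload[3]]);
    Some((tick, payload[4..].to_vec()))
}
```

Swapping `Loopback` for a real backend changes nothing on the replication side, which is the modularity both comments are asking for.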

QUIC would be nice, but I'm not sure its unreliable extension is enough for games. QUIC datagrams are exclusively unreliable and unordered, while most realtime multiplayer games send messages that are unreliable but sequenced, where the receiving side rejects messages older than the latest received (stale data is useless). We'd have to implement this on top of QUIC. Likewise, QUIC multiplexing is only supported for its reliable streams. There is no equivalent channel abstraction for datagrams, so we'd have to implement that too. Implementing those on top of
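To make "unreliable but sequenced" concrete, here is a minimal sketch of the receiving side that would have to be layered over an unordered datagram transport such as QUIC datagrams. It assumes each datagram carries a 16-bit sequence number; the comparison treats a number less than half the range ahead as newer, a common way to handle wraparound. `SequencedReceiver` and `is_newer` are illustrative names, and per-channel multiplexing (e.g. one receiver per channel ID) is left out.

```rust
/// True if sequence number `a` is newer than `b`, accounting for u16 wraparound:
/// `a` is newer when it is ahead of `b` by less than half the number space.
fn is_newer(a: u16, b: u16) -> bool {
    a != b && a.wrapping_sub(b) < 0x8000
}

/// Receiver for an unreliable-but-sequenced channel: stale or duplicate
/// datagrams are dropped, only data newer than anything seen is accepted.
struct SequencedReceiver {
    latest: Option<u16>,
}

impl SequencedReceiver {
    fn new() -> Self {
        Self { latest: None }
    }

    /// Returns true if the datagram with `seq` should be delivered.
    fn accept(&mut self, seq: u16) -> bool {
        match self.latest {
            Some(latest) if !is_newer(seq, latest) => false,
            _ => {
                self.latest = Some(seq);
                true
            }
        }
    }
}
```

Dropping rather than buffering out-of-order datagrams is the design choice that distinguishes this from an ordered channel: a late snapshot is simply useless once a newer one has arrived.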


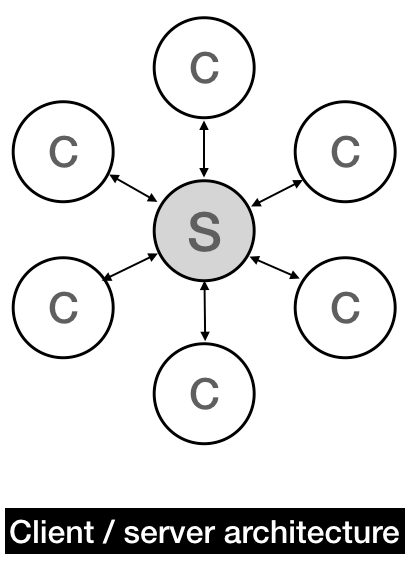

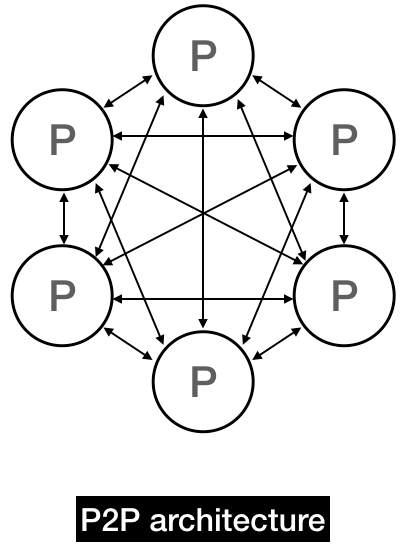

Which networking model should be the default, in your eyes? Peer-to-peer or client<->server? By looking at the, Bevy is used by indie developers with either no real budget at the time of writing or a really low one, which is a huge barrier to maintaining a client <-> server architecture for their game. Here comes Steam Network, a peer-to-peer abstraction that fits best for solo developers or small studios with low budgets. Steam handles lobbies and matchmaking for the game through its APIs, which is a huge help and offloads a lot of costs. If a studio does not have its own server infrastructure, renting many external servers comes with huge costs compared to the pretty much FREE peer-to-peer model, which sometimes requires a small relay server to reduce hiccups when the original host leaves the session. Azure PlayFab is there to help solo devs and small/medium studios maintain networking by providing a FREE client <-> server abstraction for up to 100k unique players, which is fine for the beginning; after that, costs start growing, but they're still much smaller than external servers or your own infrastructure. PlayFab provides an SDK that can be wrapped as an external or internal plugin. Have you considered adding a LAN option as In the early 2000s, many games had that option because it was surprisingly cheap to implement. That led to LAN parties, which still exist today; there are public places like "Gamer Pubs" that organize such events for players.

Yes, thanks for the explanation. That was important for some future work😊. I don't really know much about networking other than some backend dev and UDP-based file servers. About QUIC from above: I have tested it in a quick Unreal project with 10 different players in real time, and there was no difference from UDP, except that the connection is more secure.


QUIC having secure connections baked in is an enormous plus, for sure. That said, IMO the only thing that's relevant in this context is that it exposes an unreliable channel. At the moment, WebRTC is the only web API that exposes them, which is why there are crates like https://github.com/triplehex/webrtc-unreliable and https://github.com/naia-lib/webrtc-unreliable-client. Otherwise, you're stuck with WebSockets (built on TCP). There's a new WebTransport API (built on QUIC) that exposes an unreliable channel with no headaches. That would be the go-to choice, but it isn't available in all browsers yet.


Right, I forgot about its WebAssembly limitations. Good point.


Closing this as (1) it hasn't been my focus for quite some time and (2) a lot of the implementation details discussed (particularly the paragraphs about allocation/compression) would IMO be too hard to implement in a reasonable timeframe, either because they rely on Rust features that still haven't stabilized, or because there's already a queue of large ECS features with higher priority. Basically, implementing this RFC as envisioned would first need


Proposes an implementation of engine features for developing networked games. Main focus is replication with key interest in providing it transparently (i.e. minimal, if any, networking boilerplate).