Korektor

| Dataset name | Source |

|---|---|

| korektor-czech-130202 | The current Korektor model |

| syn2005 | Czech National Corpus (CNC) - http://hdl.handle.net/11858/00-097C-0000-0023-119E-8 |

| syn2010 | Czech National Corpus (CNC) - http://hdl.handle.net/11858/00-097C-0000-0023-119F-6 |

precision = TP / (TP + FP)

recall = TP / (TP + FN)

F1-score = 2 * (precision * recall) / (precision + recall)
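The three formulas above can be sketched in a few lines of Python. This is a minimal illustration, not the evaluation script used for these experiments; the function names are hypothetical, and returning 0.0 for an empty denominator is an assumption. The second helper recomputes F1 from percentage scores such as those reported in the result tables.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from raw counts.

    Assumption: a score with a zero denominator is reported as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def f1_from_percentages(precision_pct: float, recall_pct: float) -> float:
    """Harmonic mean of two percentage scores, rounded to one decimal."""
    denom = precision_pct + recall_pct
    if denom == 0:
        return 0.0
    return round(2 * precision_pct * recall_pct / denom, 1)


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    print(precision_recall_f1(tp=90, fp=10, fn=30))
    # Precision and recall reported for kor-cz-130202 at 1-edit distance.
    print(f1_from_percentages(94.7, 90.8))
```

Applying `f1_from_percentages` to a reported precision/recall pair is a quick way to check that an F1 column is consistent with the other two columns.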

| Measure | Description |

|---|---|

| TP | Number of words with spelling errors that the spell checker detected correctly |

| FP | Number of words identified as spelling errors that are not actually spelling errors |

| TN | Number of correct words that the spell checker did not flag as having spelling errors |

| FN | Number of words with spelling errors that the spell checker did not flag as having spelling errors |
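The error-detection counts defined in the table above could be tallied as in the following sketch. It assumes the test data is available as two aligned sequences of booleans, one saying whether each word really contains a spelling error and one saying whether the spell checker flagged it; that input format and the function name are assumptions made for illustration.

```python
from collections import Counter
from typing import Iterable


def detection_counts(has_error: Iterable[bool], flagged: Iterable[bool]) -> Counter:
    """Tally TP/FP/TN/FN for error detection over aligned word sequences."""
    counts = Counter(TP=0, FP=0, TN=0, FN=0)
    for gold, pred in zip(has_error, flagged):
        if gold and pred:
            counts["TP"] += 1  # misspelled word, correctly flagged
        elif pred:
            counts["FP"] += 1  # correct word, wrongly flagged
        elif gold:
            counts["FN"] += 1  # misspelled word, missed
        else:
            counts["TN"] += 1  # correct word, left alone
    return counts


if __name__ == "__main__":
    # Hypothetical five-word example.
    gold = [True, True, False, False, True]
    pred = [True, False, True, False, True]
    print(detection_counts(gold, pred))
```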

| Measure | Description |

|---|---|

| TP | Number of words with spelling errors for which the spell checker gave the correct suggestion |

| FP | Number of words (with or without spelling errors) for which the spell checker made suggestions that were either unnecessary (the word contained no error) or incorrect (the word contained an error, but the suggestion was wrong) |

| TN | Number of correct words that the spell checker did not flag as having spelling errors and no suggestions were made. |

| FN | Number of words with spelling errors that the spell checker did not flag as having spelling errors or did not provide any suggestions |
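The error-correction outcomes in the table above can be expressed as a small classification function. This is a sketch under stated assumptions: each test word is given as the original token, the gold (correct) token, and the ranked list of suggestions returned by the spell checker. Treating a suggestion as correct when the gold token appears among the first `n` suggestions is an assumption about how top-n scores are computed; the function name and the English example words are hypothetical.

```python
from typing import Sequence


def correction_outcome(original: str, gold: str,
                       suggestions: Sequence[str], n: int = 1) -> str:
    """Classify one word as 'TP', 'FP', 'TN' or 'FN' for error correction.

    Assumption: only the first n ranked suggestions are considered.
    """
    has_error = original != gold
    top_n = list(suggestions[:n])
    if not top_n:
        # No suggestion made: a miss if the word was wrong, otherwise correct silence.
        return "FN" if has_error else "TN"
    if has_error and gold in top_n:
        return "TP"  # the error was repaired by one of the top-n suggestions
    # Either the suggestion was unnecessary or none of the top-n was right.
    return "FP"


if __name__ == "__main__":
    print(correction_outcome("hosue", "house", ["horse", "house"], n=1))
    print(correction_outcome("hosue", "house", ["horse", "house"], n=2))
```

With this definition, widening `n` can only turn an FP into a TP for a misspelled word, so precision cannot decrease as `n` grows for a fixed set of suggestions.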

| Dataset | Max edit distance | Precision | Recall | F1-score |

|---|---|---|---|---|

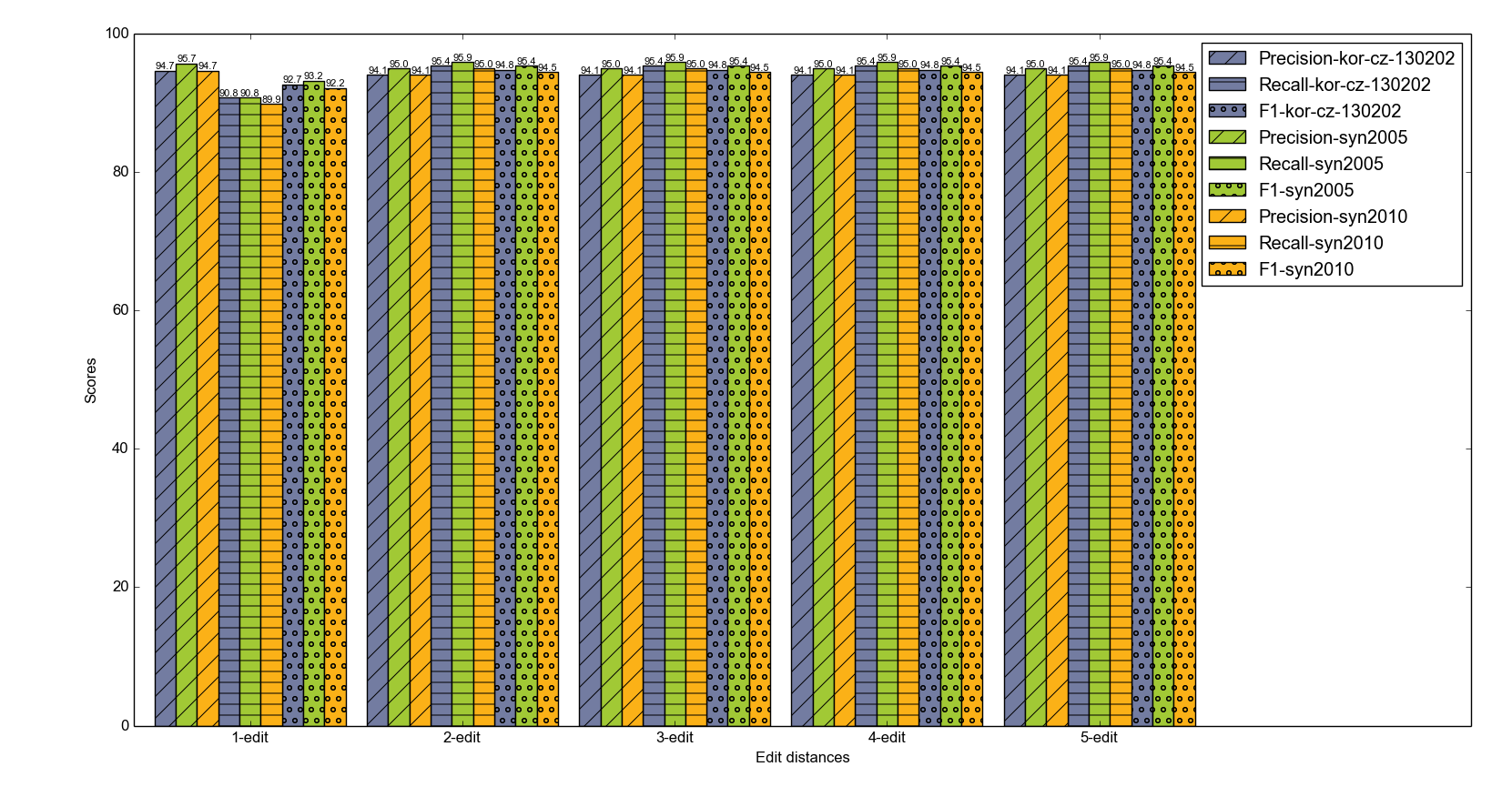

| kor-cz-130202 | 1-edit | 94.7 | 90.8 | 92.7 |

| syn2005 | “ | 95.7 | 90.8 | 93.2 |

| syn2010 | “ | 94.7 | 89.9 | 92.2 |

| kor-cz-130202 | 2-edit | 94.1 | 95.4 | 94.8 |

| syn2005 | “ | 95.0 | 95.9 | 95.4 |

| syn2010 | “ | 94.1 | 95.0 | 94.5 |

| kor-cz-130202 | 3edit | 94.1 | 95.4 | 94.8 |

| syn2005 | “ | 95.0 | 95.9 | 95.4 |

| syn2010 | “ | 94.1 | 95.0 | 94.5 |

| kor-cz-130202 | 4-edit | 94.1 | 95.4 | 94.8 |

| syn2005 | “ | 95.0 | 95.9 | 95.4 |

| syn2010 | “ | 94.1 | 95.0 | 94.5 |

| kor-cz-130202 | 5-edit | 94.1 | 95.4 | 94.8 |

| syn2005 | “ | 95.0 | 95.9 | 95.4 |

| syn2010 | “ | 94.1 | 95.0 | 94.5 |

Note that the results are identical for maximum edit distances 2, 3, 4, and 5. This may be because the maximum edit distance parameter has little influence on error detection beyond a distance of 2.

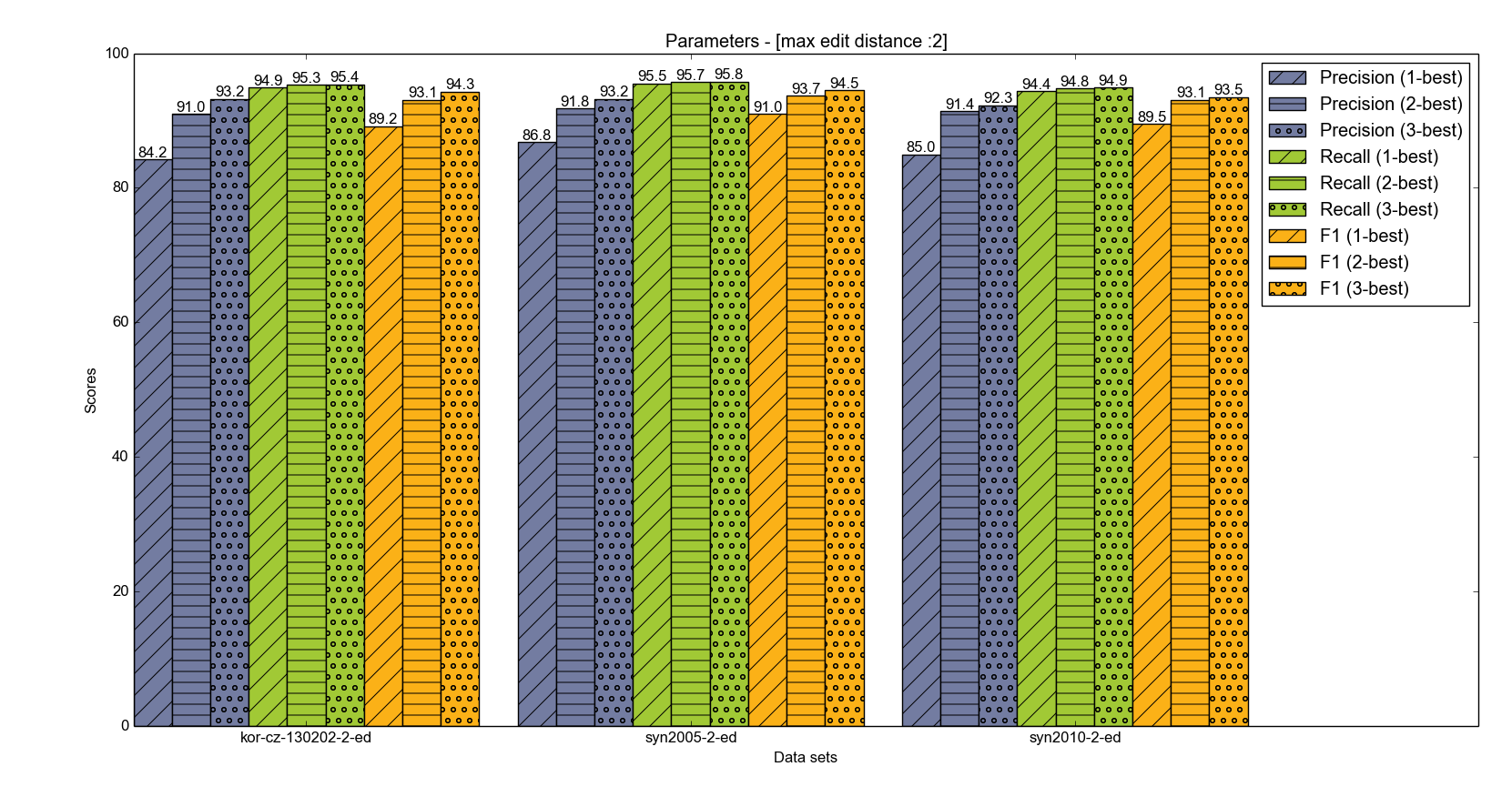

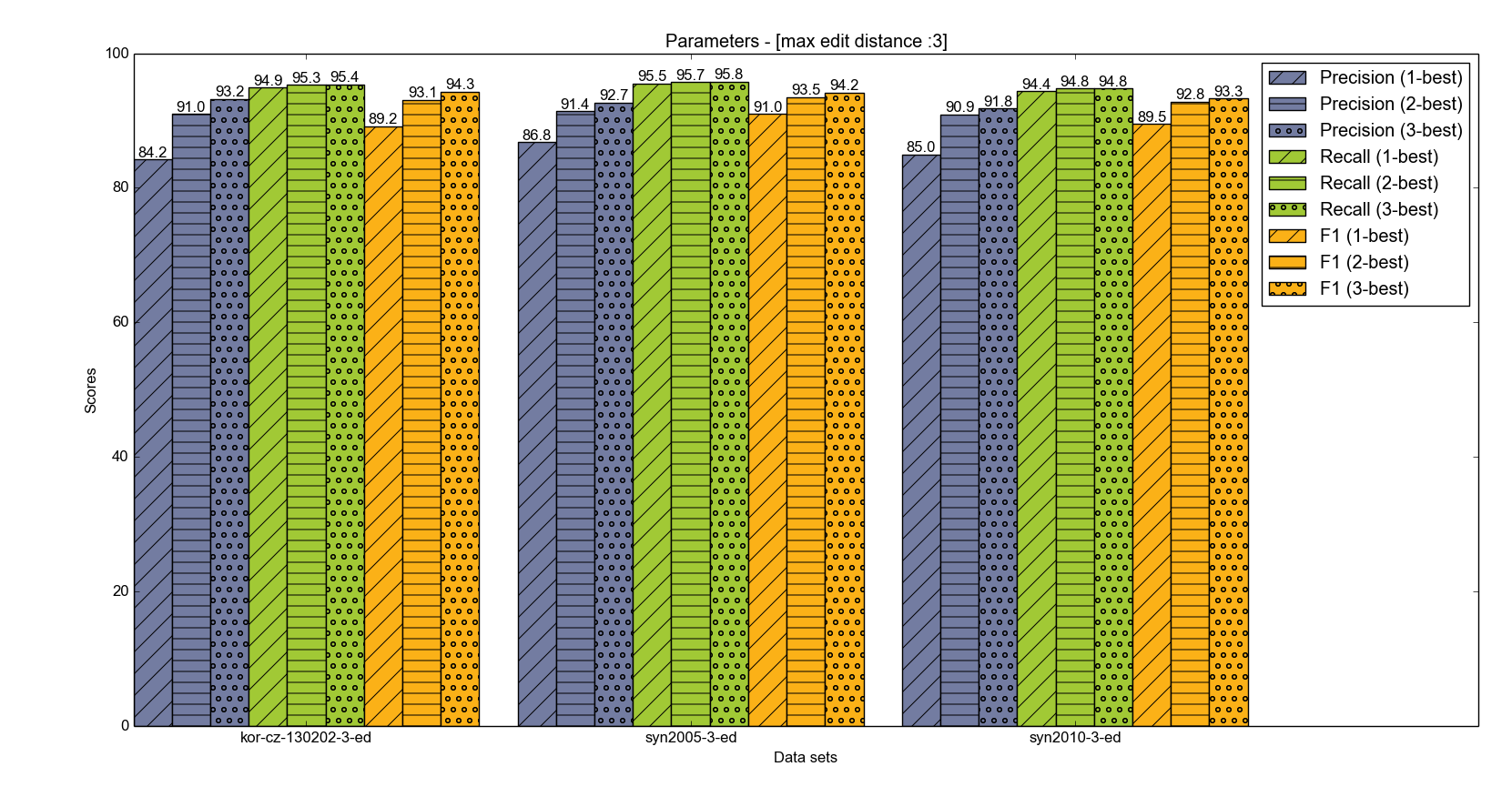

| Item | top-1 | top-1 | top-1 | top-2 | top-2 | top-2 | top-3 | top-3 | top-3 |
|---|---|---|---|---|---|---|---|---|---|
| Dataset | precision | recall | F1-score | precision | recall | F1-score | precision | recall | F1-score |
| kor-cz-130202-1-ed | 85.2 | 89.9 | 87.5 | 90.9 | 90.5 | 90.7 | 93.3 | 90.7 | 92.0 |
| syn2005-1-ed | 87.9 | 90.1 | 89.0 | 92.3 | 90.5 | 91.4 | 93.7 | 90.7 | 92.2 |
| syn2010-1-ed | 86.0 | 89.0 | 87.5 | 91.8 | 89.6 | 90.7 | 92.3 | 89.7 | 91.0 |
| kor-cz-130202-2-ed | 84.2 | 94.9 | 89.2 | 91.0 | 95.3 | 93.1 | 93.2 | 95.4 | 94.3 |
| syn2005-2-ed | 86.8 | 95.5 | 91.0 | 91.8 | 95.7 | 93.7 | 93.2 | 95.8 | 94.5 |
| syn2010-2-ed | 85.0 | 94.4 | 89.5 | 91.4 | 94.8 | 93.1 | 92.3 | 94.9 | 93.5 |
| kor-cz-130202-3-ed | 84.2 | 94.9 | 89.2 | 91.0 | 95.3 | 93.1 | 93.2 | 95.4 | 94.3 |
| syn2005-3-ed | 86.8 | 95.5 | 91.0 | 91.4 | 95.7 | 93.5 | 92.7 | 95.8 | 94.2 |
| syn2010-3-ed | 85.0 | 94.4 | 89.5 | 90.9 | 94.8 | 92.8 | 91.8 | 94.8 | 93.3 |
| kor-cz-130202-4-ed | 84.2 | 94.9 | 89.2 | 91.0 | 95.3 | 93.1 | 93.2 | 95.4 | 94.3 |
| syn2005-4-ed | 86.8 | 95.5 | 91.0 | 91.4 | 95.7 | 93.5 | 92.7 | 95.8 | 94.2 |
| syn2010-4-ed | 85.0 | 94.4 | 89.5 | 90.9 | 94.8 | 92.8 | 91.8 | 94.8 | 93.3 |
| kor-cz-130202-5-ed | 84.2 | 94.9 | 89.2 | 91.0 | 95.3 | 93.1 | 93.2 | 95.4 | 94.3 |
| syn2005-5-ed | 86.8 | 95.5 | 91.0 | 91.4 | 95.7 | 93.5 | 92.7 | 95.8 | 94.2 |
| syn2010-5-ed | 85.0 | 94.4 | 89.5 | 90.9 | 94.8 | 92.8 | 91.8 | 94.8 | 93.3 |

| KenLM parameters | ARPA LM | Binarized KenLM | Binarized Korektor LM |

|---|---|---|---|

| No pruning | 3.2G | 415MB | |

| trigram pruning (singleton) | 1.2G | 884M (probing), 425M (trie) | 194M |

| trigram+bigram pruning (singleton) | 540M | 401M (probing), 202M (trie) | 82M |

| trigram+bigram pruning (singleton + count 2 ngrams) | 290M | 240M (probing), 135M (trie) | 46M |

| Test dataset (olga) | Precision | Recall | F1-score |

|---|---|---|---|

| no_pruning | 95.0 | 95.9 | 95.4 |

| prune_001 (trigram singleton) | 95.0 | 95.9 | 95.4 |

| prune_01 (bigram+trigram singletons) | 95.0 | 95.9 | 95.4 |

| prune_02 (bigram+trigram singleton and count 2 ngrams) | 95.0 | 95.9 | 95.4 |

| Test dataset | LM pruning parameters | Precision | Recall | F1-score |

|---|---|---|---|---|

| dejiny | no_pruning | 99.5 | 97.9 | 98.7 |

| " | prune_001 (trigram singleton) | 99.5 | 97.9 | 98.7 |

| " | prune_01 (bigram+trigram singletons) | 99.5 | 97.9 | 98.7 |

| " | prune_02 (bigram+trigram singleton and count 2 ngrams) | 99.5 | 97.8 | 98.6 |

| lisky | no_pruning | 99.5 | 98.1 | 98.8 |

| " | prune_001 (trigram singleton) | 99.5 | 98.1 | 98.8 |

| " | prune_01 (bigram+trigram singletons) | 99.4 | 98.1 | 98.8 |

| " | prune_02 (bigram+trigram singleton and count 2 ngrams) | 99.4 | 98.1 | 98.8 |

| povesti | no_pruning | 98.8 | 94.5 | 96.6 |

| " | prune_001 (trigram singleton) | 98.7 | 94.5 | 96.6 |

| " | prune_01 (bigram+trigram singletons) | 98.7 | 94.4 | 96.5 |

| " | prune_02 (bigram+trigram singleton and count 2 ngrams) | 98.7 | 94.4 | 96.5 |

| Item | top-1 | top-1 | top-1 | top-2 | top-2 | top-2 | top-3 | top-3 | top-3 |

|---|---|---|---|---|---|---|---|---|---|

| Dataset (syn2005) | precision | recall | F1-score | precision | recall | F1-score | precision | recall | F1-score |

| no_pruning | 86.8 | 95.5 | 91.0 | 91.8 | 95.7 | 93.7 | 93.2 | 95.8 | 94.5 |

| prune_001 (trigram singleton) | 87.3 | 95.5 | 91.2 | 91.8 | 95.7 | 93.7 | 93.2 | 95.8 | 94.5 |

| prune_01 (bigram+trigram singletons) | 87.7 | 95.5 | 91.5 | 91.8 | 95.7 | 93.7 | 93.2 | 95.8 | 94.5 |

| prune_02 (bigram+trigram singleton and count 2 ngrams) | 86.4 | 95.5 | 90.7 | 91.4 | 95.7 | 93.5 | 92.7 | 95.8 | 94.2 |