diff --git a/_posts/2024-08-12-AIGC.md b/_posts/2024-08-12-AIGC.md

new file mode 100644

index 00000000..ea522964

--- /dev/null

+++ b/_posts/2024-08-12-AIGC.md

@@ -0,0 +1,275 @@

+---

+layout: post

+title: Generative AI

+author: [Richard Kuo]

+category: [Lecture]

+tags: [jekyll, ai]

+---

+

+This introduction covers Text-to-Image, Text-to-Video, Text-to-Motion, Text-to-3D, and Image-to-3D generation.

+

+---

+

+

+---

+## Text-to-Image

+**News:** [An A.I.-Generated Picture Won an Art Prize. Artists Aren’t Happy.](https://www.nytimes.com/2022/09/02/technology/ai-artificial-intelligence-artists.html)

+

+

+**Blog:** [DALL-E, DALL-E2 and StoryDALL-E](https://zhangtemplar.github.io/dalle/)

+

+---

+### DALL·E

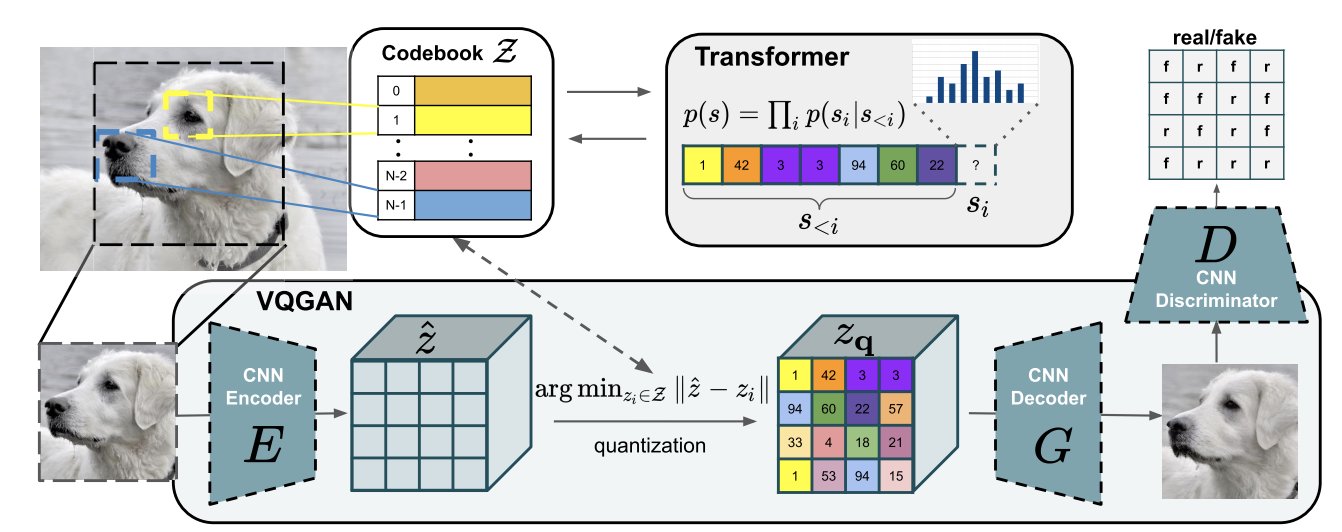

+DALL·E is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text–image pairs.

+

+**Blog:** [https://openai.com/blog/dall-e/](https://openai.com/blog/dall-e/)

+**Paper:** [Zero-Shot Text-to-Image Generation](https://arxiv.org/abs/2102.12092)

+**Code:** [openai/DALL-E](https://github.com/openai/DALL-E)

+

+DALL·E has two components. First, a discrete variational autoencoder (dVAE) compresses each 256x256 image into a 32x32 grid of image tokens, where each token takes one of 8192 possible values. Second, these 1024 image tokens are concatenated with up to 256 BPE-encoded text tokens, and an autoregressive transformer is trained on the combined sequence.
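
The token counts quoted above can be checked with simple arithmetic. The sketch below only restates the numbers from the text (256x256 image, 32x32 grid, 8192 values per token, up to 256 text tokens); the variable names are illustrative and do not come from the DALL·E code.

```python
# Sequence layout: text tokens followed by image tokens.
TEXT_TOKENS = 256      # maximum number of BPE-encoded text tokens
IMAGE_SIZE = 256       # input image is 256x256 pixels
GRID_SIZE = 32         # the image is encoded as a 32x32 token grid
CODEBOOK_SIZE = 8192   # each image token takes one of 8192 values

image_tokens = GRID_SIZE * GRID_SIZE                     # tokens per image
sequence_length = TEXT_TOKENS + image_tokens             # transformer input length
downsample_factor = IMAGE_SIZE // GRID_SIZE              # pixels per token along one side
bits_per_image_token = (CODEBOOK_SIZE - 1).bit_length()  # bits needed to index the codebook

print(image_tokens, sequence_length, downsample_factor, bits_per_image_token)
```

Under these numbers the transformer models sequences of at most 1280 tokens, which is far shorter than modeling the 256x256 pixels directly.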

+

+

+---

+### Contrastive Language-Image Pre-training (CLIP)

+**Paper:** [Learning Transferable Visual Models From Natural Language Supervision](https://arxiv.org/abs/2103.00020)

+

+

+---

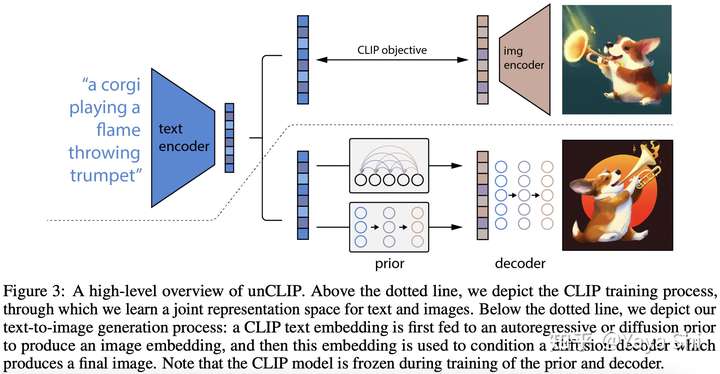

+### [DALL·E 2](https://openai.com/dall-e-2/)

+DALL·E 2 is a new AI system that can create realistic images and art from a description in natural language.

+

+**Blog:** [How DALL-E 2 Actually Works](https://www.assemblyai.com/blog/how-dall-e-2-actually-works/)

+"a bowl of soup that is a portal to another dimension as digital art".

+

+

+**Paper:** [Hierarchical Text-Conditional Image Generation with CLIP Latents](https://arxiv.org/abs/2204.06125)

+

+

+---

+### [LAION-5B Dataset](https://laion.ai/blog/laion-5b/)

+LAION-5B contains 5.85 billion CLIP-filtered image-text pairs.

+**Paper:** [LAION-5B: An open large-scale dataset for training next generation image-text models](https://arxiv.org/abs/2210.08402)
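
"CLIP-filtered" means that an image-text pair is kept only when the CLIP embeddings of the image and its caption are similar enough. A minimal sketch of that idea follows, assuming the embeddings are already computed; the function names, the toy vectors, and the threshold of 0.3 are placeholders for illustration, not the values or code used to build LAION-5B.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def clip_filter(pairs, threshold):
    """Keep (image_embedding, text_embedding) pairs at or above the threshold."""
    return [cosine_similarity(img, txt) >= threshold for img, txt in pairs]

# Toy 2-D vectors standing in for real CLIP embeddings (hypothetical values).
pairs = [
    ([1.0, 0.0], [1.0, 0.1]),  # caption matches the image closely
    ([0.0, 1.0], [1.0, 0.0]),  # caption unrelated to the image
    ([1.0, 1.0], [1.0, 0.9]),  # caption matches the image closely
]
keep = clip_filter(pairs, threshold=0.3)
print(keep)
```

In this toy run the second pair has similarity 0 and is dropped, while the other two are kept.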

+

+

+---

+### [DALL·E 3](https://openai.com/dall-e-3)

+

+

+**Paper:** [Improving Image Generation with Better Captions](https://cdn.openai.com/papers/dall-e-3.pdf)

+

+**Blog:** [DALL-E 2 vs DALL-E 3 Everything you Need to Know](https://www.cloudbooklet.com/dall-e-2-vs-dall-e-3/)

+

+**Dataset Recaptioning**

+

+

+---

+### Stable Diffusion

+**Paper:** [High-Resolution Image Synthesis with Latent Diffusion Models](https://arxiv.org/abs/2112.10752)

+

+**Blog:** [Stable Diffusion: Best Open Source Version of DALL·E 2](https://towardsdatascience.com/stable-diffusion-best-open-source-version-of-dall-e-2-ebcdf1cb64bc)

+

+**Code:** [Stable Diffusion](https://github.com/CompVis/stable-diffusion)

+

+

+

+**Demo:** [Stable Diffusion Online (SDXL)](https://stablediffusionweb.com/)

+Stable Diffusion XL is a latent text-to-image diffusion model that generates photo-realistic images from a text prompt, letting users create artwork within seconds.

+

+---

+### [Imagen](https://imagen.research.google/)

+**Paper:** [Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding](https://arxiv.org/abs/2205.11487)

+**Blog:** [How Imagen Actually Works](https://www.assemblyai.com/blog/how-imagen-actually-works/)

+

+

+The text encoder in Imagen is the encoder network of T5 (Text-to-Text Transfer Transformer).

+

+

+---

+### Diffusion Models

+**Blog:** [Introduction to Diffusion Models for Machine Learning](https://www.assemblyai.com/blog/diffusion-models-for-machine-learning-introduction/)

+

+Diffusion Models are a method of creating data that is similar to a set of training data.

+They train by destroying the training data through the addition of noise, and then learning to recover the data by reversing this noising process. Given an input image, the Diffusion Model will iteratively corrupt the image with Gaussian noise in a series of timesteps, ultimately leaving pure Gaussian noise, or "TV static".

+

+The Diffusion Model will then work backwards, learning how to isolate and remove the noise at each timestep, undoing the destruction process that just occurred.

+Once trained, the model can be "split in half": generation starts from randomly sampled Gaussian noise, which the Diffusion Model gradually denoises to produce an image.
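
The noising half of this process can be sketched in a few lines. The example below assumes the common DDPM-style formulation, where a noisy sample at timestep `t` is `sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise`; the linear schedule from 1e-4 to 0.02 over 1000 steps is an assumed setting for illustration, and the four-value "image" is a toy stand-in.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variance added at each timestep (assumed linear schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t: fraction of the original signal variance left after t steps."""
    alpha_bars, product = [], 1.0
    for beta in betas:
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Jump directly to timestep t by mixing x0 with Gaussian noise."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [signal_scale * x + noise_scale * n for x, n in zip(x0, noise)]
    return xt, noise

rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
x0 = [1.0, -1.0, 0.5, 0.0]  # toy "image" of four pixel values
for t in (0, 499, 999):
    xt, _ = add_noise(x0, t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 5), [round(v, 2) for v in xt])
```

At the last timestep almost none of the original signal remains, which is the "TV static" end state. During training, the network is typically asked to predict `noise` from `xt` and `t`, and generation runs the learned denoising in reverse from pure noise.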

+

+

+---

+### SDXL

+**Paper:** [SDXL: Improving Latent Diffusion Models for High-Resolution Image Synthesis](https://arxiv.org/abs/2307.01952)

+**Code:** [Generative Models by Stability AI](https://github.com/stability-ai/generative-models)

+

+

+

+**Huggingface:** [stable-diffusion-xl-base-1.0](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0)

+

+SDXL uses an ensemble-of-experts pipeline for latent diffusion: the base model first generates (noisy) latents, which are then processed by a [refinement model](https://huggingface.co/stabilityai/stable-diffusion-xl-refiner-1.0/) specialized for the final denoising steps. The base model can also be used as a standalone module.
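
One way to picture the two-stage pipeline is as a split of the denoising schedule: the base model handles the early, high-noise steps and the refiner handles the last few. The sketch below is a hypothetical helper, not part of the Stability AI code; the 40 steps and the 0.8 handoff point are assumed values chosen only for illustration.

```python
def split_denoising_steps(num_steps, handoff_fraction):
    """Assign the first part of the schedule to the base model, the rest to the refiner."""
    if not 0.0 <= handoff_fraction <= 1.0:
        raise ValueError("handoff_fraction must be between 0 and 1")
    base_count = round(num_steps * handoff_fraction)
    steps = list(range(num_steps))
    return steps[:base_count], steps[base_count:]

base_steps, refiner_steps = split_denoising_steps(num_steps=40, handoff_fraction=0.8)
print(len(base_steps), len(refiner_steps))
```

Setting `handoff_fraction=1.0` leaves the refiner with no steps, which corresponds to using the base model as a standalone module.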

+

+**Kaggle:** [https://www.kaggle.com/rkuo2000/stable-diffusion-xl](https://www.kaggle.com/rkuo2000/stable-diffusion-xl/)

+

+---

+### [Transfusion](https://arxiv.org/html/2408.11039v1)

+**Paper:** [Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model](https://www.arxiv.org/abs/2408.11039)

+**Code:**

+

+

+---

+## Text-to-Video

+

+### Tune-A-Video

+**Paper:** [Tune-A-Video: One-Shot Tuning of Image Diffusion Models for Text-to-Video Generation](https://arxiv.org/abs/2212.11565)

+**Code:** [https://github.com/showlab/Tune-A-Video](https://github.com/showlab/Tune-A-Video)

+

+

+

+Given a single video-text pair as input, Tune-A-Video fine-tunes a pre-trained text-to-image diffusion model for text-to-video generation.

+

+

+---

+### Open-VCLIP

+**Paper:** [Open-VCLIP: Transforming CLIP to an Open-vocabulary Video Model via Interpolated Weight Optimization](https://arxiv.org/abs/2302.00624)

+**Paper:** [Building an Open-Vocabulary Video CLIP Model with Better Architectures, Optimization and Data](https://arxiv.org/abs/2310.05010)

+**Code:** [https://github.com/wengzejia1/Open-VCLIP/](https://github.com/wengzejia1/Open-VCLIP/)

+

+

+---

+### [DyST](https://dyst-paper.github.io/)

+**Paper:** [DyST: Towards Dynamic Neural Scene Representations on Real-World Videos]()

+

+

+---

+## Text-to-Motion

+

+### TMR

+**Paper:** [TMR: Text-to-Motion Retrieval Using Contrastive 3D Human Motion Synthesis](https://arxiv.org/abs/2305.00976)

+**Code:** [https://github.com/Mathux/TMR](https://github.com/Mathux/TMR)

+

+---

+### Text-to-Motion Retrieval

+**Paper:** [Text-to-Motion Retrieval: Towards Joint Understanding of Human Motion Data and Natural Language](https://arxiv.org/abs/2305.15842)

+**Code:** [https://github.com/mesnico/text-to-motion-retrieval](https://github.com/mesnico/text-to-motion-retrieval)

+`A person walks in a counterclockwise circle`

+

+`A person is kneeling down on all four legs and begins to crawl`

+

+

+---

+### MotionDirector

+**Paper:** [MotionDirector: Motion Customization of Text-to-Video Diffusion Models](https://arxiv.org/abs/2310.08465)

+

+---

+### [GPT4Motion](https://gpt4motion.github.io/)

+**Paper:** [GPT4Motion: Scripting Physical Motions in Text-to-Video Generation via Blender-Oriented GPT Planning](https://arxiv.org/abs/2311.12631)

+

+

+---

+### Motion Editing

+**Paper:** [Iterative Motion Editing with Natural Language](https://arxiv.org/abs/2312.11538)

+

+---

+### [Awesome Video Diffusion Models](https://github.com/ChenHsing/Awesome-Video-Diffusion-Models)

+

+### StyleCrafter

+**Paper:** [StyleCrafter: Enhancing Stylized Text-to-Video Generation with Style Adapter](https://arxiv.org/abs/2312.00330)

+**Code:** [https://github.com/GongyeLiu/StyleCrafter](https://github.com/GongyeLiu/StyleCrafter)

+

+

+---

+### Stable Video Diffusion

+**Paper:** [Stable Video Diffusion: Scaling Latent Video Diffusion Models to Large Datasets](https://arxiv.org/abs/2311.15127)

+**Code:** [https://github.com/nateraw/stable-diffusion-videos](https://github.com/nateraw/stable-diffusion-videos)

+

+

+---

+### AnimateDiff

+**Paper:** [AnimateDiff: Animate Your Personalized Text-to-Image Diffusion Models without Specific Tuning](https://arxiv.org/abs/2307.04725)

+**Paper:** [SparseCtrl: Adding Sparse Controls to Text-to-Video Diffusion Models](https://arxiv.org/abs/2311.16933)

+**Code:** [https://github.com/guoyww/AnimateDiff](https://github.com/guoyww/AnimateDiff)

+

+

+---

+### Animate Anyone

+**Paper:** [Animate Anyone: Consistent and Controllable Image-to-Video Synthesis for Character Animation](https://arxiv.org/abs/2311.17117)

+

+---

+### [Outfit Anyone](https://humanaigc.github.io/outfit-anyone/)

+**Code:** [https://github.com/HumanAIGC/OutfitAnyone](https://github.com/HumanAIGC/OutfitAnyone)

+

+

+---

+### [SignLLM](https://signllm.github.io/Prompt2Sign/)

+**Paper:** [SignLLM: Sign Languages Production Large Language Models](https://arxiv.org/abs/2405.10718)

+**Code:** [https://github.com/SignLLM/Prompt2Sign](https://github.com/SignLLM/Prompt2Sign)

+

+---

+## Text-to-3D

+

+### Shap-E

+**Paper:** [Shap-E: Generating Conditional 3D Implicit Functions](https://arxiv.org/abs/2305.02463)

+**Code:** [https://github.com/openai/shap-e](https://github.com/openai/shap-e)

+**Kaggle:** [https://www.kaggle.com/rkuo2000/shap-e](https://www.kaggle.com/rkuo2000/shap-e)

+

+---

+### [MVDiffusion](https://mvdiffusion.github.io/)

+**Paper:** [MVDiffusion: Enabling Holistic Multi-view Image Generation with Correspondence-Aware Diffusion](https://arxiv.org/abs/2307.01097)

+**Code:** [https://github.com/Tangshitao/MVDiffusion](https://github.com/Tangshitao/MVDiffusion)

+

+

+---

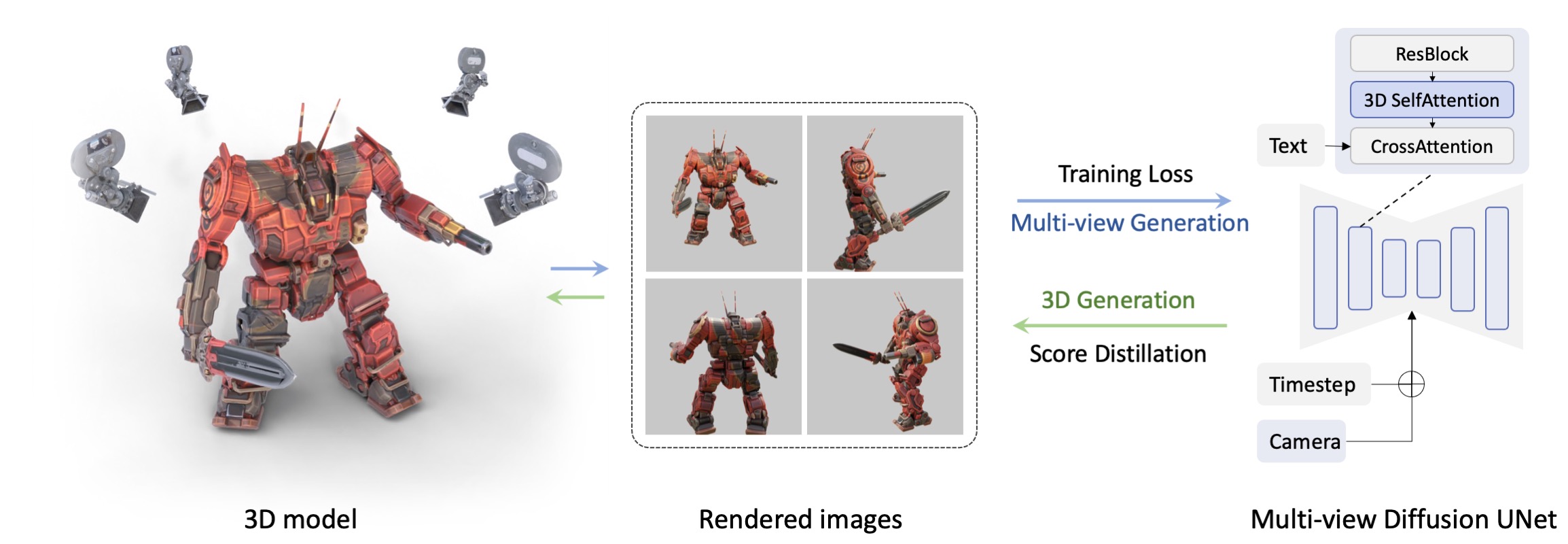

+### [MVDream](https://mv-dream.github.io/)

+**Paper:** [MVDream: Multi-view Diffusion for 3D Generation](https://arxiv.org/abs/2308.16512)

+**Code:** [https://github.com/bytedance/MVDream](https://github.com/bytedance/MVDream)

+**Kaggle:** [https://www.kaggle.com/rkuo2000/mvdream](https://www.kaggle.com/rkuo2000/mvdream)

+

+

+---

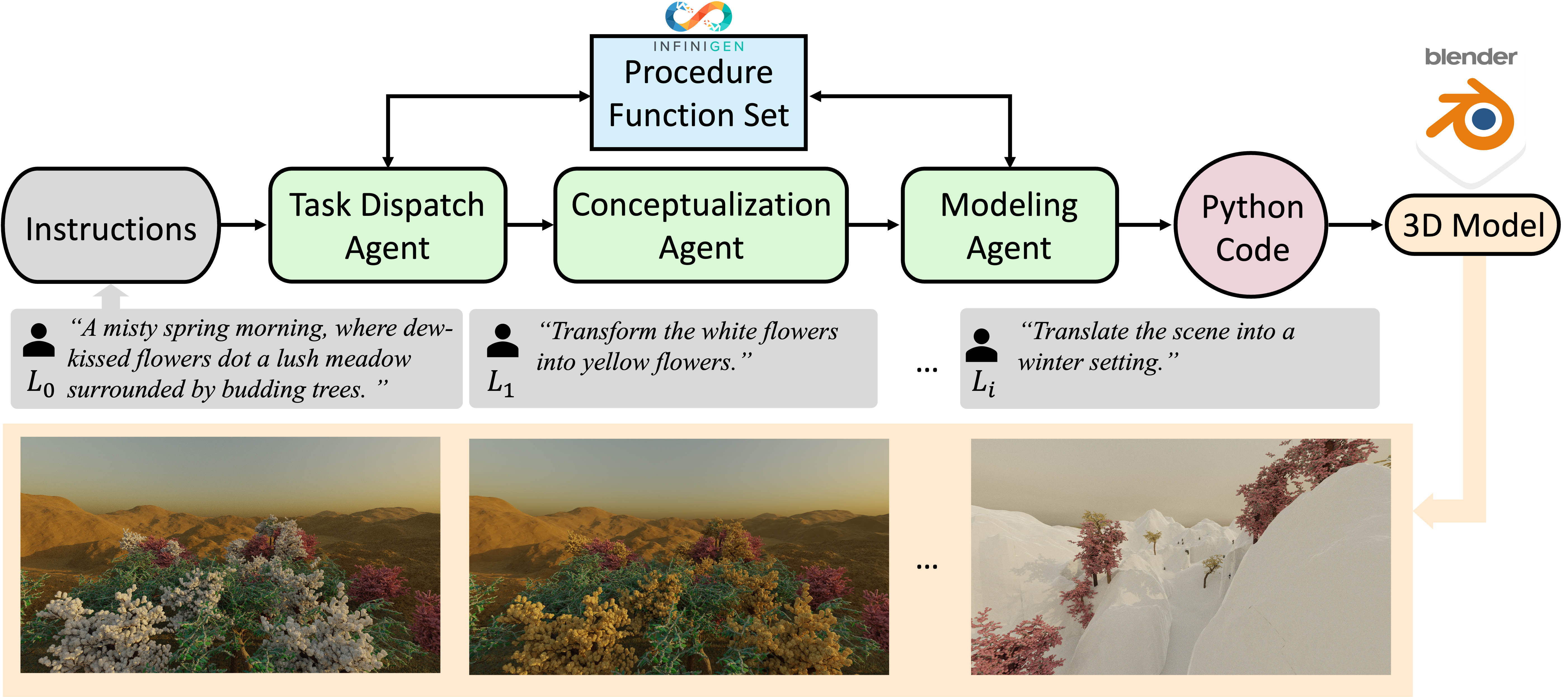

+### [3D-GPT](https://chuny1.github.io/3DGPT/3dgpt.html)

+**Paper:** [3D-GPT: Procedural 3D Modeling with Large Language Models](https://arxiv.org/abs/2310.12945)

+

+

+---

+### Advances in 3D Generation: A Survey

+**Paper:** [Advances in 3D Generation: A Survey](https://arxiv.org/html/2401.17807v1)

+

+---

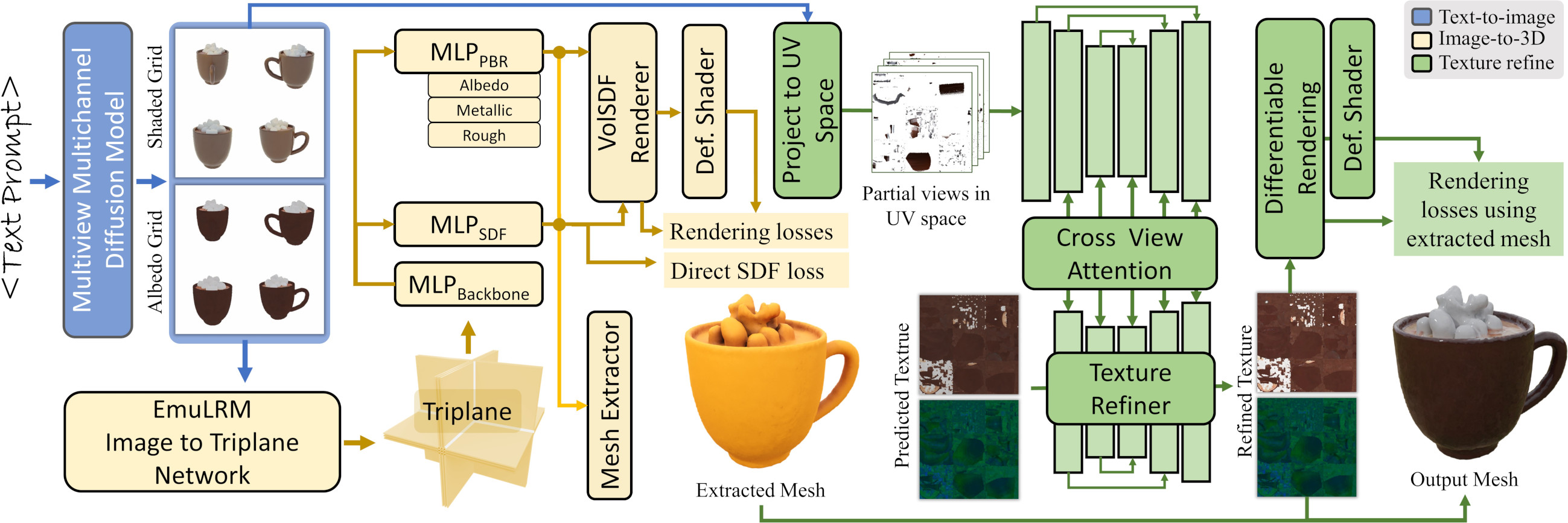

+### [AssetGen](https://assetgen.github.io/)

+**Paper:** [Meta 3D AssetGen: Text-to-Mesh Generation with High-Quality Geometry, Texture, and PBR Materials](https://scontent-tpe1-1.xx.fbcdn.net/v/t39.2365-6/449707112_509645168082163_2193712134508658234_n.pdf?_nc_cat=111&ccb=1-7&_nc_sid=3c67a6&_nc_ohc=5bSbn3KaluAQ7kNvgFbjbd7&_nc_ht=scontent-tpe1-1.xx&oh=00_AYBM_JROjIFPbm8vwphinNrr4x1bUEFOeLV5iYsR6l_0rA&oe=668B3191)

+

+**Paper:** [Meta 3D Gen](https://scontent-tpe1-1.xx.fbcdn.net/v/t39.2365-6/449707112_509645168082163_2193712134508658234_n.pdf?_nc_cat=111&ccb=1-7&_nc_sid=3c67a6&_nc_ohc=5bSbn3KaluAQ7kNvgFbjbd7&_nc_ht=scontent-tpe1-1.xx&oh=00_AYBM_JROjIFPbm8vwphinNrr4x1bUEFOeLV5iYsR6l_0rA&oe=668B3191)

+

+---

+### AI-Render

+Stable Diffusion in Blender

+**Code:** [https://github.com/benrugg/AI-Render](https://github.com/benrugg/AI-Render)

+

+

+

+

+---

+## Image-to-3D

+

+### Zero123++

+**Blog:** [Stable Zero123](https://stability.ai/news/stable-zero123-3d-generation)

+**Paper:** [Zero123++: a Single Image to Consistent Multi-view Diffusion Base Model](https://arxiv.org/abs/2310.15110)

+**Code:** [https://github.com/SUDO-AI-3D/zero123plus](https://github.com/SUDO-AI-3D/zero123plus)

+**Kaggle:** [https://www.kaggle.com/code/rkuo2000/zero123plus](https://www.kaggle.com/code/rkuo2000/zero123plus)

+**Kaggle:** [https://www.kaggle.com/code/rkuo2000/zero123-controlnet](https://www.kaggle.com/code/rkuo2000/zero123-controlnet)

+

+---

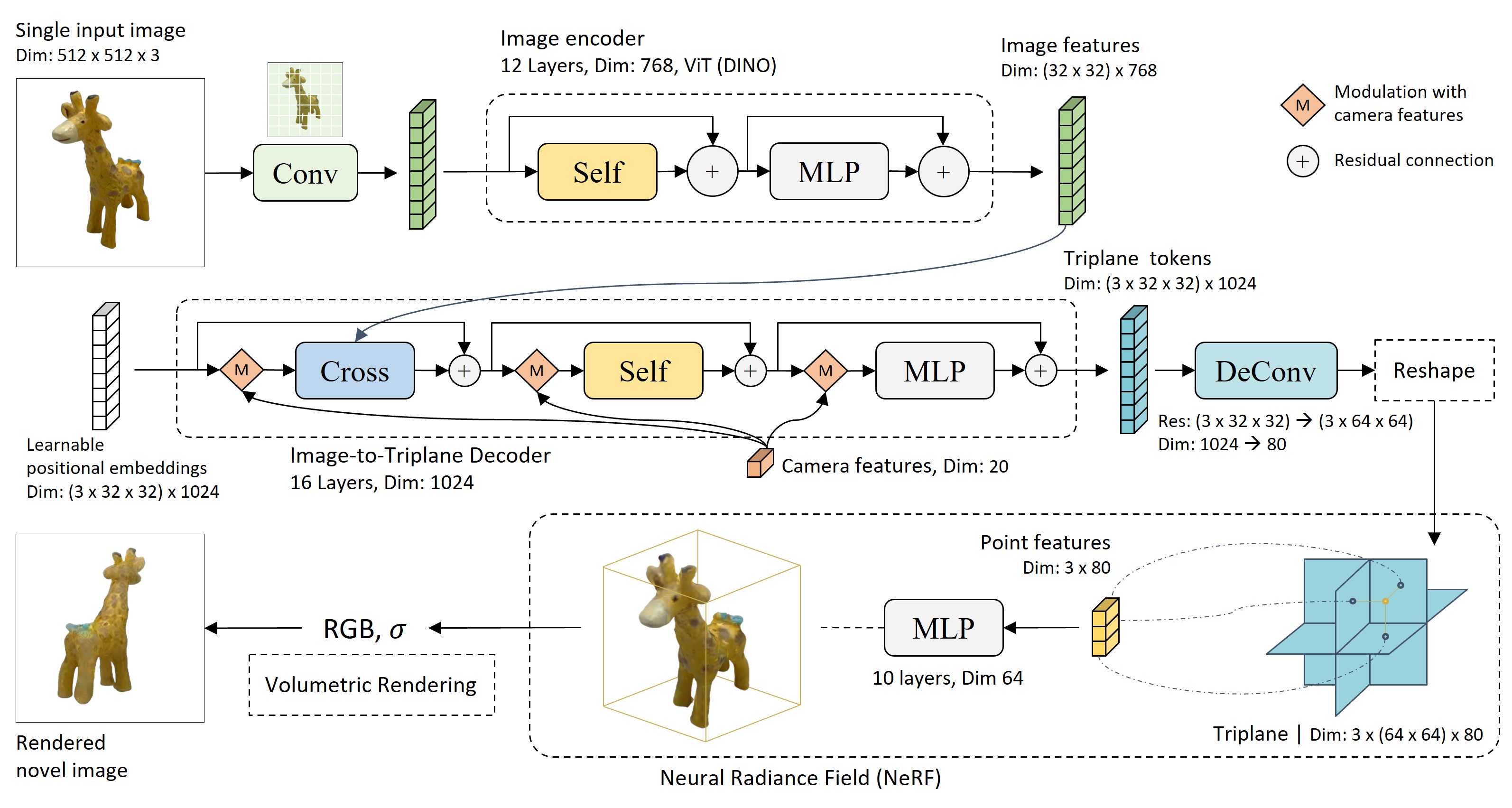

+### TripoSR

+**Blog:** [Introducing TripoSR: Fast 3D Object Generation from Single Images](https://stability.ai/news/triposr-3d-generation)

+**Paper:** [LRM: Large Reconstruction Model for Single Image to 3D](https://yiconghong.me/LRM/)

+**Code:** [https://github.com/VAST-AI-Research/TripoSR](https://github.com/VAST-AI-Research/TripoSR)

+`python run.py examples/chair.png --output-dir output/`

+

+

+

+

+

+

+

+*This site was last updated {{ site.time | date: "%B %d, %Y" }}.*

+

+