diff --git a/_posts/2024-05-04-RAG.md b/_posts/2024-05-04-RAG.md

index fd83d287..aee30c06 100644

--- a/_posts/2024-05-04-RAG.md

+++ b/_posts/2024-05-04-RAG.md

@@ -91,11 +91,60 @@ Introduction to Retrieval-Augmented Generation (RAG)

---

## Frameworks

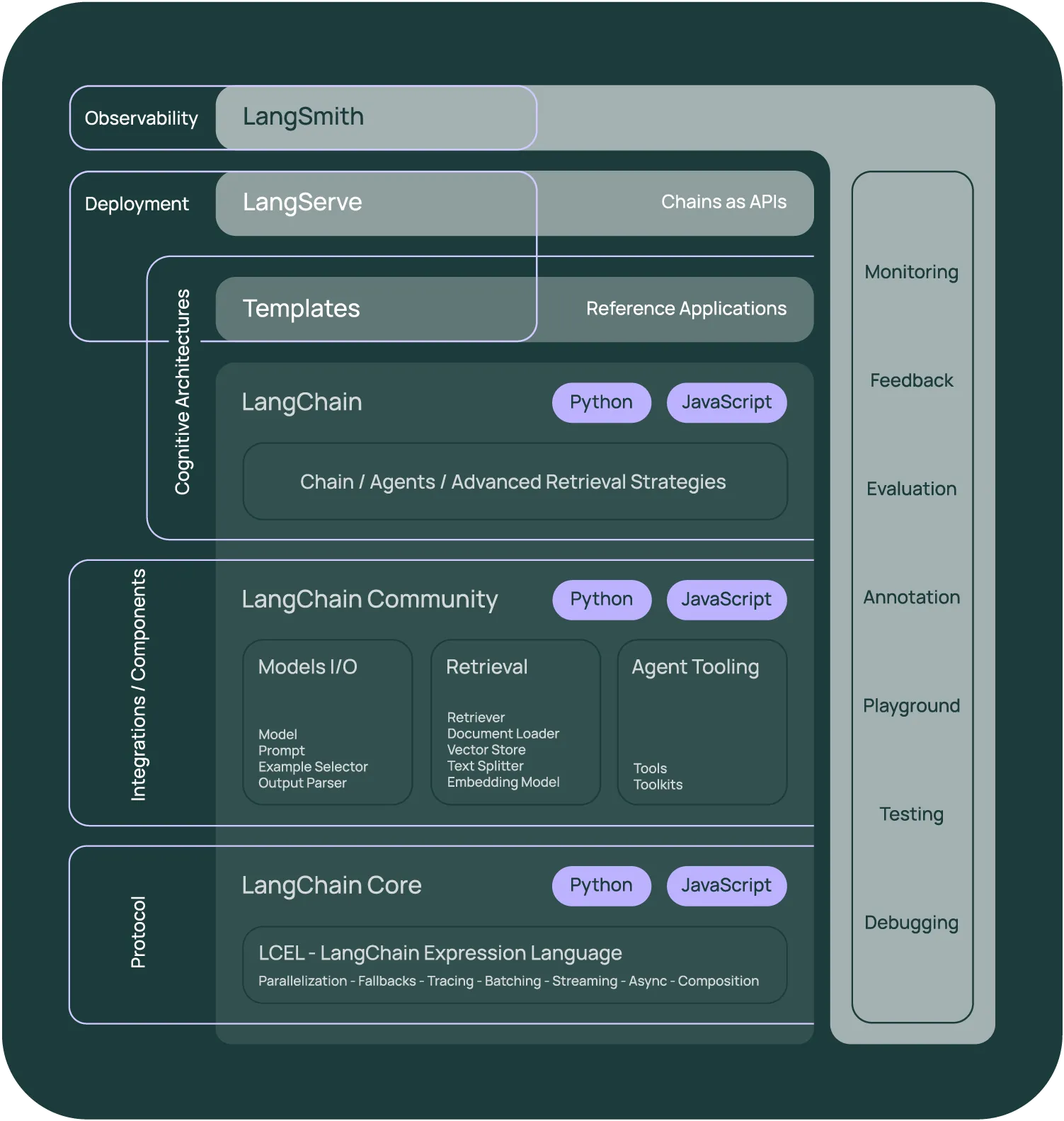

-### [Langchain](https://github.com/langchain-ai/langchain)

-LangChain is a framework for developing applications powered by large language models (LLMs).

+### [LangChain](https://github.com/langchain-ai/langchain)

+LangChain is a framework for developing applications powered by large language models (LLMs).

+

+---

+### [LangGraph](https://langchain-ai.github.io/langgraph/)

+**[Introduction to LangGraph](https://langchain-ai.github.io/langgraph/tutorials/introduction/)**

+

+

+```python

+import json

+from langchain_anthropic import ChatAnthropic

+from langchain_community.tools.tavily_search import TavilySearchResults

+from langgraph.checkpoint.sqlite import SqliteSaver

+from langgraph.graph import END, MessageGraph

+from langgraph.prebuilt.tool_node import ToolNode

+

+# Define the function that determines whether to continue or not

+def should_continue(messages):

+    last_message = messages[-1]

+    # If there is no function call, then we finish

+    if not last_message.tool_calls:

+        return END

+    else:

+        return "action"

+

+# Define a new graph

+workflow = MessageGraph()

+

+tools = [TavilySearchResults(max_results=1)]

+

+model = ChatAnthropic(model="claude-3-haiku-20240307").bind_tools(tools)

+

+workflow.add_node("agent", model)

+workflow.add_node("action", ToolNode(tools))

+workflow.set_entry_point("agent")

+

+# Conditional agent -> action OR agent -> END

+workflow.add_conditional_edges(

+    "agent",

+    should_continue,

+)

+

+# Always transition `action` -> `agent`

+workflow.add_edge("action", "agent")

+memory = SqliteSaver.from_conn_string(":memory:")  # In-memory SQLite database: checkpoints are lost when the process exits

+

+# interrupt_before=["action"] pauses the graph each time it is about to run the "action" node

+app = workflow.compile(checkpointer=memory, interrupt_before=["action"])

+```

+

+---

### [LlamaIndex](https://github.com/run-llama/llama_index)

-LlamaIndex (GPT Index) is a data framework for your LLM application.

+LlamaIndex (GPT Index) is a data framework for your LLM application.

**Kaggle:** [https://www.kaggle.com/code/rkuo2000/llm-llamaindex](https://www.kaggle.com/code/rkuo2000/llm-llamaindex)

---