
Home

Welcome to the rapyuta-mapping wiki! rapyuta-mapping is part of the Rapyuta project, which aims to build a Cloud Robotics platform. See rapyuta.org for more details on the Rapyuta project.

Specifically, rapyuta-mapping demonstrates a cloud-based, collaborative, real-time mapping service for low-cost robots. The figure below shows the overall architecture of the system.

The architecture consists mainly of the following components:

- Mobile robot: Low-cost robots, each with an RGB-D sensor, a smartphone-class processor, and a wireless connection to the data center.

- Robot clone: A set of processes in the Cloud for each connected robot that manages key-frames and other data-accumulation tasks while updating the robot with optimized (post-processed) maps. Currently, the Robot Clone sends pre-programmed motion commands to the robot.

- Database: A relational database for storing maps.

- Map Optimizer: A parallel optimization algorithm that finds the optimal pose graph based on all accumulated key-frames. After each optimization cycle, the Map Optimizer updates the database and triggers the Robot Clone to update the robot with the new map.

- Map Merger: This process selects random frames from different maps and tries to match them. Once the transformation between two maps is found, the two maps are merged into a single map.
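The Map Merger step can be illustrated with a minimal planar sketch. It assumes each map is a dictionary of key-frame poses `(x, y, theta)` and that the rigid transform `t_ab` (the pose of map B's origin expressed in map A's frame) has already been found by frame matching. The real system works with RGB-D key-frames and 3D transforms, and the names `compose` and `merge_maps` are hypothetical, not part of rapyuta-mapping.

```python
import math
from typing import Dict, Tuple

# Hypothetical planar pose representation: (x, y, theta), theta in radians.
Pose = Tuple[float, float, float]


def compose(a: Pose, b: Pose) -> Pose:
    """Return pose b (given relative to frame a) expressed in the frame a lives in."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    # Rotate b's translation by a's heading, then add a's translation.
    x = ax + c * bx - s * by
    y = ay + s * bx + c * by
    # Wrap the combined heading into (-pi, pi].
    theta = math.atan2(math.sin(at + bt), math.cos(at + bt))
    return (x, y, theta)


def merge_maps(map_a: Dict[str, Pose], map_b: Dict[str, Pose], t_ab: Pose) -> Dict[str, Pose]:
    """Merge map_b into map_a, given t_ab = pose of map_b's origin in map_a's frame.

    Assumption: key-frame ids must be unique across the two maps.
    """
    merged = dict(map_a)
    for frame_id, pose_in_b in map_b.items():
        if frame_id in merged:
            raise ValueError(f"duplicate key-frame id: {frame_id}")
        # Re-express each of map_b's poses in map_a's frame.
        merged[frame_id] = compose(t_ab, pose_in_b)
    return merged
```

Once the transform is known, merging reduces to re-expressing every pose of map B in map A's frame; the key-frame contents themselves are left untouched.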

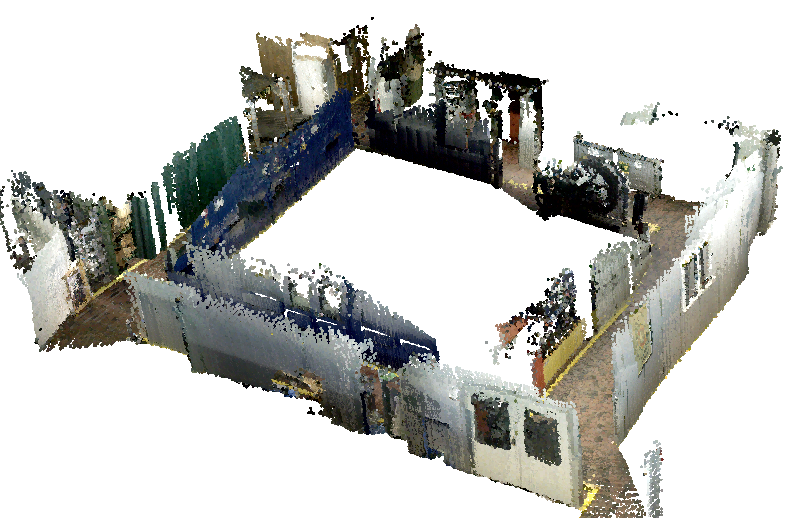
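To make "finding the optimal pose graph" concrete, here is a deliberately tiny, single-threaded stand-in for the Map Optimizer: poses on a line, odometry edges between consecutive poses, and one loop-closure edge, solved as linear least squares with the first pose fixed at 0. This is an illustrative assumption, not the project's parallel algorithm; real pose graphs use 3D poses and nonlinear solvers, and `optimize_1d` is a hypothetical name.

```python
from typing import List, Tuple

# Hypothetical edge format (i, j, z): a measurement saying x_j - x_i should equal z.
Edge = Tuple[int, int, float]


def optimize_1d(num_poses: int, edges: List[Edge]) -> List[float]:
    """Minimize sum over edges of (x_j - x_i - z)^2 with x_0 fixed at 0."""
    n = num_poses - 1  # unknowns are x_1 .. x_{num_poses-1}
    H = [[0.0] * n for _ in range(n)]  # normal matrix J^T J
    b = [0.0] * n                      # right-hand side J^T z
    for i, j, z in edges:
        # Residual r = x_j - x_i - z has Jacobian -1 for x_i and +1 for x_j.
        terms = ((i, -1.0), (j, 1.0))
        for p, sp in terms:
            if p == 0:
                continue  # x_0 is the fixed anchor, not an unknown
            b[p - 1] += sp * z
            for q, sq in terms:
                if q != 0:
                    H[p - 1][q - 1] += sp * sq
    # Solve H x = b with Gaussian elimination and partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(H[r][col]))
        H[col], H[pivot] = H[pivot], H[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            f = H[r][col] / H[col][col]
            for c in range(col, n):
                H[r][c] -= f * H[col][c]
            b[r] -= f * b[col]
    # Back-substitution.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (b[r] - sum(H[r][c] * x[c] for c in range(r + 1, n))) / H[r][r]
    return [0.0] + x
```

With odometry edges of 1.0 between consecutive poses and a loop-closure measurement of 1.8 between poses 0 and 2, `optimize_1d(3, [(0, 1, 1.0), (1, 2, 1.0), (0, 2, 1.8)])` spreads the 0.2 disagreement evenly over the three edges, giving roughly `[0.0, 0.933, 1.867]`.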

The figure below shows a visualization of a ~40 m corridor mapped by a robot in the cloud.

For a quick overview of the system, please see: TBA

The figure below shows the two robots used for this demonstrator.

The robots use the iRobot Create as their base; this differential-drive base provides a serial interface for sending control commands and receiving sensor information. A PrimeSense Carmine 1.08 provides RGB-D sensing, delivering registered depth and color images at VGA resolution and 30 frames per second. A 48 × 52 mm embedded board with a smartphone-class multi-core ARM processor handles onboard computation.

The embedded board runs a standard Linux operating system and connects to the Cloud through a dual-band USB wireless device. In addition to running the RGB-D sensor driver and controlling the robot, the onboard processor runs a dense visual odometry algorithm to estimate the robot's current pose. The key-frames produced by the visual odometry are sent to the Cloud processes over the wireless link.
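As an example of the serial control interface mentioned above, the sketch below builds command bytes following the iRobot Create Open Interface specification: Start (opcode 128), Safe mode (131), and Drive (137) followed by velocity in mm/s and turn radius in mm, each as a big-endian 16-bit signed integer. How rapyuta-mapping's onboard software actually talks to the base is not described here, so the helper names `drive_packet` and `startup_sequence` are hypothetical, and the range checks assume the limits given in that specification.

```python
import struct

# Opcodes from the iRobot Create Open Interface specification.
START = 128
SAFE = 131
DRIVE = 137

# Special radius value meaning "drive straight" (the spec also accepts 0x8000,
# which does not fit a signed 16-bit field, so 0x7FFF is used here).
STRAIGHT = 0x7FFF


def drive_packet(velocity_mm_s: int, radius_mm: int) -> bytes:
    """Build a Drive command: opcode, then velocity and radius as big-endian int16."""
    if not -500 <= velocity_mm_s <= 500:
        raise ValueError("velocity must be within -500..500 mm/s")
    if radius_mm != STRAIGHT and not -2000 <= radius_mm <= 2000:
        raise ValueError("radius must be within -2000..2000 mm or STRAIGHT")
    return struct.pack(">Bhh", DRIVE, velocity_mm_s, radius_mm)


def startup_sequence() -> bytes:
    """Start the Open Interface and switch to Safe mode before driving."""
    return bytes([START, SAFE])
```

The resulting bytes would then be written to the base's serial port, for example with pyserial's `serial.Serial(...).write(...)`; the device path and port settings are deployment-specific and not covered here.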

TBA