原文:Exploring the NFL Draft with Python

[TOC]

在读过三两个Michael Lopez关于NFL选秀的文章后,我决定使用Python(取代R),来重现他的分析。

首先,让我们导入将用到的大部分东东。

注意:你可以在这里找到这篇文章的github仓库。它包括这个notebook,数据和我所使用的conda环境。

In [1]:

%matplotlib inline

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

from urllib.request import urlopen

from bs4 import BeautifulSoup

在我们开始之前,需要一些数据。我们将从Pro-Football-Reference那里抓取选秀数据,然后清理它们以进行分析。

我们会使用BeautifulSoup来抓取数据,然后将其存储到一个pandas Dataframe中。

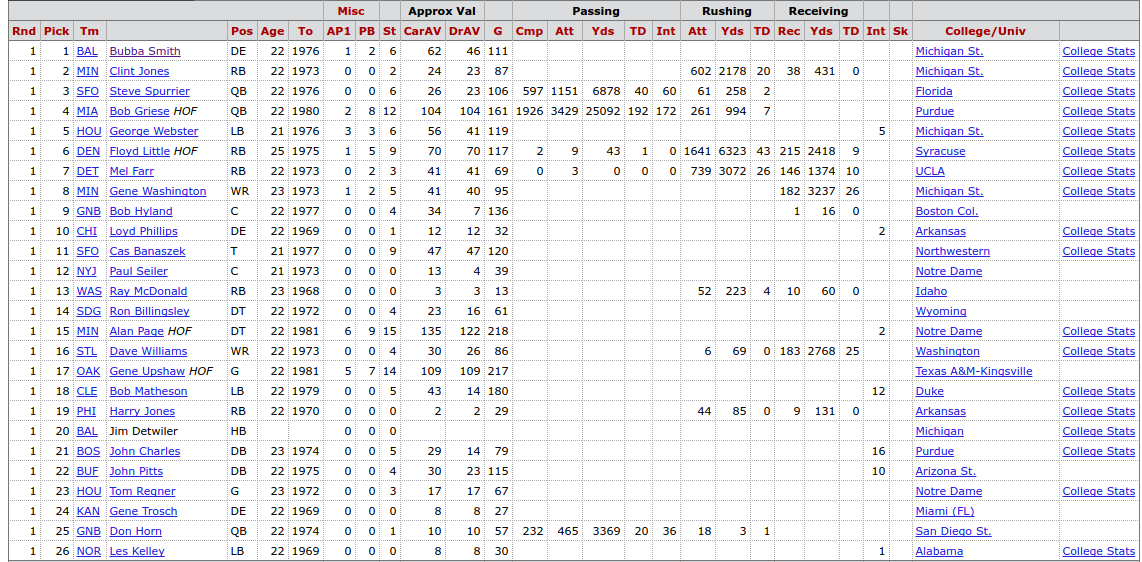

要感受下该数据,让我们看看1967选秀。

上面仅是在页面上找到的一小部分选秀表格。我们将提取列标题的第二行以及每个选择的所有信息。虽然采用这种方式,我们还会抓取每个选手的Pro-Football- Reference选手页面链接以及大学统计链接。这样,如果将来想要从他们的选手页面抽取数据,就可以做到了。

In [2]:

# The url we will be scraping

url_1967 = "http://www.pro-football-reference.com/years/1967/draft.htm"

# get the html

html = urlopen(url_1967)

# create the BeautifulSoup object

soup = BeautifulSoup(html, "lxml")

我们的DataFrame所需的列标题位于PFR表单的列标题的第二行。我们将抓取它,并且为两个额外的球员页面链接添加两个额外的列标题。

In [3]:

# Extract the necessary values for the column headers from the table

# and store them as a list

column_headers = [th.getText() for th in

soup.findAll('tr', limit=2)[1].findAll('th')]

# Add the two additional column headers for the player links

column_headers.extend(["Player_NFL_Link", "Player_NCAA_Link"])

使用CSS选择器 "#draft tr",我们可以很容易地提出数据行。我们基本上做的是,选择id值为"draft"的HTML元素内的表行元素。

谈到查找CSS选择器,一个非常有用的工具是SelectorGadget。这是一个网络扩展,它允许你点击一个网页的不同元素,然后为那些所选的元素提供CSS选择题。

In [4]:

# The data is found within the table rows of the element with id=draft

# We want the elements from the 3rd row and on

table_rows = soup.select("#drafts tr")[2:]

注意到,table_rows是一个标签元素列表。

In [5]:

type(table_rows)

Out[5]:

listIn [6]:

type(table_rows[0])

Out[6]:

bs4.element.TagIn [7]:

table_rows[0] # take a look at the first row

Out[7]:

1

1

[BAL](http://savvastjortjoglou.com/teams/clt/1967_draft.htm "Baltimore olts)

[Bubba Smith](http://savvastjortjoglou.com/players/S/SmitBu00.htm)

DE

22

1976

1

2

6

62

46

111

[Michigan St.](http://savvastjortjoglou.com/schools/michiganst/)

[College Stats](http://www.sports-reference.com/cfb/players/bubba-smith-2.tml)

在td (或者表格数据)元素内,可以找到对于每个球员,我们所要的数据。

下面,我创建了一个函数,它从table_rows中抽取我们想要的数据。注释会带你看到该函数的每个部分做了什么。

In [8]:

def extract_player_data(table_rows):

"""

Extract and return the the desired information from the td elements within

the table rows.

"""

# create the empty list to store the player data

player_data = []

for row in table_rows: # for each row do the following

# Get the text for each table data (td) element in the row

# Some player names end with ' HOF', if they do, get the text excluding

# those last 4 characters,

# otherwise get all the text data from the table data

player_list = [td.get_text()[:-4] if td.get_text().endswith(" HOF")

else td.get_text() for td in row.find_all("td")]

# there are some empty table rows, which are the repeated

# column headers in the table

# we skip over those rows and and continue the for loop

if not player_list:

continue

# Extracting the player links

# Instead of a list we create a dictionary, this way we can easily

# match the player name with their pfr url

# For all "a" elements in the row, get the text

# NOTE: Same " HOF" text issue as the player_list above

links_dict = {(link.get_text()[:-4] # exclude the last 4 characters

if link.get_text().endswith(" HOF") # if they are " HOF"

# else get all text, set thet as the dictionary key

# and set the url as the value

else link.get_text()) : link["href"]

for link in row.find_all("a", href=True)}

# The data we want from the dictionary can be extracted using the

# player's name, which returns us their pfr url, and "College Stats"

# which returns us their college stats page

# add the link associated to the player's pro-football-reference page,

# or en empty string if there is no link

player_list.append(links_dict.get(player_list[3], ""))

# add the link for the player's college stats or an empty string

# if ther is no link

player_list.append(links_dict.get("College Stats", ""))

# Now append the data to list of data

player_data.append(player_list)

return player_data

现在,我们可以使用来自1967年选秀的数据来创建DataFrame。

In [9]:

# extract the data we want

data = extract_player_data(table_rows)

# and then store it in a DataFrame

df_1967 = pd.DataFrame(data, columns=column_headers)

In [10]:

df_1967.head()

Out[10]:

| Rnd | Pick | Tm | Pos | Age | To | AP1 | PB | St | ... | TD | Rec | Yds | TD | Int | Sk | College/Univ | Player_NFL_Link | Player_NCAA_Link | |||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | BAL | Bubba Smith | DE | 22 | 1976 | 1 | 2 | 6 | ... | Michigan St. | College Stats | /players/S/SmitBu00.htm | http://www.sports-reference.com/cfb/players/bu... | ||||||

| 1 | 1 | 2 | MIN | Clint Jones | RB | 22 | 1973 | 0 | 0 | 2 | ... | 20 | 38 | 431 | 0 | Michigan St. | College Stats | /players/J/JoneCl00.htm | http://www.sports-reference.com/cfb/players/cl... | ||

| 2 | 1 | 3 | SFO | Steve Spurrier | QB | 22 | 1976 | 0 | 0 | 6 | ... | 2 | Florida | College Stats | /players/S/SpurSt00.htm | http://www.sports-reference.com/cfb/players/st... | |||||

| 3 | 1 | 4 | MIA | Bob Griese | QB | 22 | 1980 | 2 | 8 | 12 | ... | 7 | Purdue | College Stats | /players/G/GrieBo00.htm | http://www.sports-reference.com/cfb/players/bo... | |||||

| 4 | 1 | 5 | HOU | George Webster | LB | 21 | 1976 | 3 | 3 | 6 | ... | 5 | Michigan St. | College Stats | /players/W/WebsGe00.htm | http://www.sports-reference.com/cfb/players/ge... |

5 rows × 30 columns

抓取自1967年起所有的选秀数据基本上与上面的过程相同,只是使用一个for循环,对每个选秀年进行重复。

当我们遍历年份时,我们会为每一个选秀创建一个DataFrame,然后将其附加到DataFrame组成的包含所有选秀大列表中。我们也将有一个单独的列表,它会包含任何错误,以及与错误关联的URL。这将让我们知道我们的爬虫是否有任何问题,以及哪个url导致了这个错误。我们还将为抢断(Tackle)添加一个额外的列。抢断在1993年赛季结束后出现,因此,这就是一个我们需要插入到为从1967年到1993年的选秀创建的DataFrame中的列。

In [11]:

# Create an empty list that will contain all the dataframes

# (one dataframe for each draft)

draft_dfs_list = []

# a list to store any errors that may come up while scraping

errors_list = []

In [12]:

# The url template that we pass in the draft year inro

url_template = "http://www.pro-football-reference.com/years/{year}/draft.htm"

# for each year from 1967 to (and including) 2016

for year in range(1967, 2017):

# Use try/except block to catch and inspect any urls that cause an error

try:

# get the draft url

url = url_template.format(year=year)

# get the html

html = urlopen(url)

# create the BeautifulSoup object

soup = BeautifulSoup(html, "lxml")

# get the column headers

column_headers = [th.getText() for th in

soup.findAll('tr', limit=2)[1].findAll('th')]

column_headers.extend(["Player_NFL_Link", "Player_NCAA_Link"])

# select the data from the table using the '#drafts tr' CSS selector

table_rows = soup.select("#drafts tr")[2:]

# extract the player data from the table rows

player_data = extract_player_data(table_rows)

# create the dataframe for the current years draft

year_df = pd.DataFrame(player_data, columns=column_headers)

# if it is a draft from before 1994 then add a Tkl column at the

# 24th position

if year < 1994:

year_df.insert(24, "Tkl", "")

# add the year of the draft to the dataframe

year_df.insert(0, "Draft_Yr", year)

# append the current dataframe to the list of dataframes

draft_dfs_list.append(year_df)

except Exception as e:

# Store the url and the error it causes in a list

error =[url, e]

# then append it to the list of errors

errors_list.append(error)

In [13]:

len(errors_list)

Out[13]:

0In [14]:

errors_list

Out[14]:

[]没有获得任何错误,不错。

现在,我们可以连接所有抓取的DataFrame,并创建一个大的DataFrame,来包含所有的选秀。

In [15]:

# store all drafts in one DataFrame

draft_df = pd.concat(draft_dfs_list, ignore_index=True)

In [16]:

# Take a look at the first few rows

draft_df.head()

Out[16]:

| Draft_Yr | Rnd | Pick | Tm | Pos | Age | To | AP1 | PB | ... | Rec | Yds | TD | Tkl | Int | Sk | College/Univ | Player_NFL_Link | Player_NCAA_Link | |||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1967 | 1 | 1 | BAL | Bubba Smith | DE | 22 | 1976 | 1 | 2 | ... | Michigan St. | College Stats | /players/S/SmitBu00.htm | http://www.sports-reference.com/cfb/players/bu... | ||||||

| 1 | 1967 | 1 | 2 | MIN | Clint Jones | RB | 22 | 1973 | 0 | 0 | ... | 38 | 431 | 0 | Michigan St. | College Stats | /players/J/JoneCl00.htm | http://www.sports-reference.com/cfb/players/cl... | |||

| 2 | 1967 | 1 | 3 | SFO | Steve Spurrier | QB | 22 | 1976 | 0 | 0 | ... | Florida | College Stats | /players/S/SpurSt00.htm | http://www.sports-reference.com/cfb/players/st... | ||||||

| 3 | 1967 | 1 | 4 | MIA | Bob Griese | QB | 22 | 1980 | 2 | 8 | ... | Purdue | College Stats | /players/G/GrieBo00.htm | http://www.sports-reference.com/cfb/players/bo... | ||||||

| 4 | 1967 | 1 | 5 | HOU | George Webster | LB | 21 | 1976 | 3 | 3 | ... | 5 | Michigan St. | College Stats | /players/W/WebsGe00.htm | http://www.sports-reference.com/cfb/players/ge... |

5 rows × 32 columns

由于有一些重复的列标题,有点甚至是空字符串,因此我们应该对这些列编辑一下下。

In [17]:

# get the current column headers from the dataframe as a list

column_headers = draft_df.columns.tolist()

# The 5th column header is an empty string, but represesents player names

column_headers[4] = "Player"

# Prepend "Rush_" for the columns that represent rushing stats

column_headers[19:22] = ["Rush_" + col for col in column_headers[19:22]]

# Prepend "Rec_" for the columns that reperesent receiving stats

column_headers[23:25] = ["Rec_" + col for col in column_headers[23:25]]

# Properly label the defensive int column as "Def_Int"

column_headers[-6] = "Def_Int"

# Just use "College" as the column header represent player's colleger or univ

column_headers[-4] = "College"

# Take a look at the updated column headers

column_headers

Out[17]:

['Draft_Yr',

'Rnd',

'Pick',

'Tm',

'Player',

'Pos',

'Age',

'To',

'AP1',

'PB',

'St',

'CarAV',

'DrAV',

'G',

'Cmp',

'Att',

'Yds',

'TD',

'Int',

'Rush_Att',

'Rush_Yds',

'Rush_TD',

'Rec',

'Rec_Yds',

'Rec_TD',

'Tkl',

'Def_Int',

'Sk',

'College',

'',

'Player_NFL_Link',

'Player_NCAA_Link']In [18]:

# Now assign edited columns to the DataFrame

draft_df.columns = column_headers

现在,我们搞定了必要的列,让我们将原始数据写入到CSV文件中。

In [19]:

# Write out the raw draft data to the raw_data fold in the data folder

draft_df.to_csv("data/raw_data/pfr_nfl_draft_data_RAW.csv", index=False)

现在,我们有了原始的选秀数据,需要把它清理干净一点,要进行一些我们想要的数据探索。

首先,让我们创建一个单独的DataFrame,它包含球员姓名,他们的球员页面链接,以及Pro-Football-Reference上的球员ID。这样,我们就可以有一个单独的CSV文件,它仅包含必要的信息,以便于在将来某个时候,为Pro-Football-Reference提取单个球员数据。

要从球员链接提取Pro-Football-Reference球员ID,我们将需要使用正则表达式。正则表达式是一个字符序列,用来在文本正文中匹配某种模式。我们可以用来匹配球员链接并抽取ID的正则表达式如下:

/.*/.*/(.*)\.

上面的正则表达式基本上表示,匹配具有以下模式的字符串:

- 一个

'/'. - 后面紧接着0或多个字符 (这由

'.*'字符表示)。 - 紧接着另一个

'/'(第二个'/'字符)。 - 紧接着0或多个字符 (再次,

'.*'字符)。 - 紧接着另一个 (第三次)

'/'。 - 紧接着0或多个字符分组 (

'(.*)'字符)。- 这是我们的正则表达式的关键部分。

'()'在我们想要提取的字符周围创建一个分组。由于球员ID位于第三个'/'和'.'之间,因此我们使用'(.*)'来抽取在我们的字符串中的那部分发现的所有字符。

- 这是我们的正则表达式的关键部分。

- 接着是

'.',球员ID后的字符。

我们可以通过将上面的正则表达式传递给pandas extract方法,来提取ID。

In [20]:

# extract the player id from the player links

# expand=False returns the IDs as a pandas Series

player_ids = draft_df.Player_NFL_Link.str.extract("/.*/.*/(.*)\.",

expand=False)

In [21]:

# add a Player_ID column to our draft_df

draft_df["Player_ID"] = player_ids

In [22]:

# add the beginning of the pfr url to the player link column

pfr_url = "http://www.pro-football-reference.com"

draft_df.Player_NFL_Link = pfr_url + draft_df.Player_NFL_Link

现在,我们可以保存一个仅包含球员姓名、ID和链接的DataFrame了。

In [23]:

# Get the Player name, IDs, and links

player_id_df = draft_df.loc[:, ["Player", "Player_ID", "Player_NFL_Link",

"Player_NCAA_Link"]]

# Save them to a CSV file

player_id_df.to_csv("data/clean_data/pfr_player_ids_and_links.csv",

index=False)

现在,我们完成了对球员ID的处理,让我们回到处理选秀数据。

首先,上次一些不必要的列。

In [24]:

# drop the the player links and the column labeled by an empty string

draft_df.drop(draft_df.columns[-4:-1], axis=1, inplace=True)

剩下的选秀数据留下的主要问题是,将所有东西转换成正确的数据类型。

In [25]:

draft_df.info()

Int64Index: 15845 entries, 0 to 15844

Data columns (total 30 columns):

Draft_Yr 15845 non-null int64

Rnd 15845 non-null object

Pick 15845 non-null object

Tm 15845 non-null object

Player 15845 non-null object

Pos 15845 non-null object

Age 15845 non-null object

To 15845 non-null object

AP1 15845 non-null object

PB 15845 non-null object

St 15845 non-null object

CarAV 15845 non-null object

DrAV 15845 non-null object

G 15845 non-null object

Cmp 15845 non-null object

Att 15845 non-null object

Yds 15845 non-null object

TD 15845 non-null object

Int 15845 non-null object

Rush_Att 15845 non-null object

Rush_Yds 15845 non-null object

Rush_TD 15845 non-null object

Rec 15845 non-null object

Rec_Yds 15845 non-null object

Rec_TD 15845 non-null object

Tkl 15845 non-null object

Def_Int 15845 non-null object

Sk 15845 non-null object

College 15845 non-null object

Player_ID 11416 non-null object

dtypes: int64(1), object(29)

memory usage: 3.7+ MB

从上面我们可以看到,许多球员数据在应该是数字的时候却不是。要将所有的列转换成它们正确的数值类型,我们可以将to_numeric函数应用到整个DataFrame之上。由于不可能转换一些列(例如,Player, Tm,等等。)到一个数值类型(因为它们并不是数字),因此我们需要设置errors参数为"ignore",从而避免引起任何错误。

In [26]:

# convert the data to proper numeric types

draft_df = draft_df.apply(pd.to_numeric, errors="ignore")

In [27]:

draft_df.info()

Int64Index: 15845 entries, 0 to 15844

Data columns (total 30 columns):

Draft_Yr 15845 non-null int64

Rnd 15845 non-null int64

Pick 15845 non-null int64

Tm 15845 non-null object

Player 15845 non-null object

Pos 15845 non-null object

Age 11297 non-null float64

To 10995 non-null float64

AP1 15845 non-null int64

PB 15845 non-null int64

St 15845 non-null int64

CarAV 10995 non-null float64

DrAV 9571 non-null float64

G 10962 non-null float64

Cmp 1033 non-null float64

Att 1033 non-null float64

Yds 1033 non-null float64

TD 1033 non-null float64

Int 1033 non-null float64

Rush_Att 2776 non-null float64

Rush_Yds 2776 non-null float64

Rush_TD 2776 non-null float64

Rec 3395 non-null float64

Rec_Yds 3395 non-null float64

Rec_TD 3395 non-null float64

Tkl 3644 non-null float64

Def_Int 2590 non-null float64

Sk 2670 non-null float64

College 15845 non-null object

Player_ID 11416 non-null object

dtypes: float64(19), int64(6), object(5)

memory usage: 3.7+ MB

我们还没有完成。很多数值列数据缺失,因为球员并没有累计任何那些统计数据。例如,一些球员并没有获得一个TD,甚至没有进行一场比赛。然我们选择带有数值数据的列,然后用0替换NaN (当前表示缺失数据的值),因为那是一个更合适的值。

In [28]:

# Get the column names for the numeric columns

num_cols = draft_df.columns[draft_df.dtypes != object]

# Replace all NaNs with 0

draft_df.loc[:, num_cols] = draft_df.loc[:, num_cols].fillna(0)

In [29]:

# Everything is filled, except for Player_ID, which is fine for now

draft_df.info()

Int64Index: 15845 entries, 0 to 15844

Data columns (total 30 columns):

Draft_Yr 15845 non-null int64

Rnd 15845 non-null int64

Pick 15845 non-null int64

Tm 15845 non-null object

Player 15845 non-null object

Pos 15845 non-null object

Age 15845 non-null float64

To 15845 non-null float64

AP1 15845 non-null int64

PB 15845 non-null int64

St 15845 non-null int64

CarAV 15845 non-null float64

DrAV 15845 non-null float64

G 15845 non-null float64

Cmp 15845 non-null float64

Att 15845 non-null float64

Yds 15845 non-null float64

TD 15845 non-null float64

Int 15845 non-null float64

Rush_Att 15845 non-null float64

Rush_Yds 15845 non-null float64

Rush_TD 15845 non-null float64

Rec 15845 non-null float64

Rec_Yds 15845 non-null float64

Rec_TD 15845 non-null float64

Tkl 15845 non-null float64

Def_Int 15845 non-null float64

Sk 15845 non-null float64

College 15845 non-null object

Player_ID 11416 non-null object

dtypes: float64(19), int64(6), object(5)

memory usage: 3.7+ MB

最后,我们完成了数据清理,现在,我们可以将其保存到一个CSV文件中去了。

In [30]:

draft_df.to_csv("data/clean_data/pfr_nfl_draft_data_CLEAN.csv", index=False)

现在,我们完成了获取和清理所要的数据,最后可以来些好玩的事了。首先,让我们保持选秀数据更新并包含2010年的选秀,因为,那些更近期参与选秀的球员尚未累计足够的数据,以拥有一个正确的代表性生涯近似值(Approximate Value) (或者cAV)。

In [31]:

# get data for drafts from 1967 to 2010

draft_df_2010 = draft_df.loc[draft_df.Draft_Yr <= 2010, :]

In [32]:

draft_df_2010.tail() # we see that the last draft is 2010

Out[32]:

| Draft_Yr | Rnd | Pick | Tm | Player | Pos | Age | To | AP1 | PB | ... | Rush_Yds | Rush_TD | Rec | Rec_Yds | Rec_TD | Tkl | Def_Int | Sk | College | Player_ID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 14314 | 2010 | 7 | 251 | OAK | Stevie Brown | DB | 23.0 | 2014.0 | 0 | 0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 98.0 | 8.0 | 1.0 | Michigan | BrowSt99 |

| 14315 | 2010 | 7 | 252 | MIA | Austin Spitler | LB | 23.0 | 2013.0 | 0 | 0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 11.0 | 0.0 | 0.0 | Ohio St. | SpitAu99 |

| 14316 | 2010 | 7 | 253 | TAM | Erik Lorig | DE | 23.0 | 2014.0 | 0 | 0 | ... | 4.0 | 0.0 | 39.0 | 220.0 | 2.0 | 3.0 | 0.0 | 0.0 | Stanford | LoriEr99 |

| 14317 | 2010 | 7 | 254 | STL | Josh Hull | LB | 23.0 | 2013.0 | 0 | 0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 11.0 | 0.0 | 0.0 | Penn St. | HullJo99 |

| 14318 | 2010 | 7 | 255 | DET | Tim Toone | WR | 25.0 | 2012.0 | 0 | 0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | Weber St. | ToonTi00 |

5 rows × 30 columns

使用seaborn的distplot函数,我们可以快速地看到cAV分布的样子,既包括直方图,又包括核密度估计。

In [33]:

# set some plotting styles

from matplotlib import rcParams

# set the font scaling and the plot sizes

sns.set(font_scale=1.65)

rcParams["figure.figsize"] = 12,9

In [34]:

# Use distplot to view the distribu

sns.distplot(draft_df_2010.CarAV)

plt.title("Distribution of Career Approximate Value")

plt.xlim(-5,150)

plt.show()

我们还可以通过boxplot函数,看到按位置分布。

In [35]:

sns.boxplot(x="Pos", y="CarAV", data=draft_df_2010)

plt.title("Distribution of Career Approximate Value by Position (1967-2010)")

plt.show()

从上面的两张图中,我们看到,大多数的球员最终在他们的NFL职业生涯中并未做很多事,因为大多数的球员在0-10 cAV范围周围徘徊。

还有一些位置,对于整个分布,具有0 cAV,或者非常低(及小的)cAV分布。我们可以从厦门的值数看到,这可能是出于这样的事实,有非常少的球员带有那些位置标签。

In [36]:

# Look at the counts for each position

draft_df_2010.Pos.value_counts()

Out[36]:

DB 2456

LB 1910

RB 1686

WR 1636

DE 1130

T 1091

G 959

DT 889

TE 802

QB 667

C 425

K 187

P 150

NT 127

FB 84

FL 63

E 29

HB 23

KR 3

WB 2

Name: Pos, dtype: int64让我们丢弃那些位置,然后将"HB"球员和"RB"球员合并在一起。

In [37]:

# drop players from the following positions [FL, E, WB, KR]

drop_idx = ~ draft_df_2010.Pos.isin(["FL", "E", "WB", "KR"])

draft_df_2010 = draft_df_2010.loc[drop_idx, :]

In [38]:

# Now replace HB label with RB label

draft_df_2010.loc[draft_df_2010.Pos == "HB", "Pos"] = "RB"

让我们再看看位置分布。

In [39]:

sns.boxplot(x="Pos", y="CarAV", data=draft_df_2010)

plt.title("Distribution of Career Approximate Value by Position (1967-2010)")

plt.show()

现在,我们可以拟合一条曲线,来看看每个选择的cAV。我们将使用l局部回归来拟合曲线,它沿着数据“旅行”,每次拟合一条曲线到小块数据。该过程的一个酷酷的可视化(来自于简单的统计博文)见下:

seaborn让我们通过使用regplot并设置lowess参数为True,非常轻松地绘制一条Lowess曲线。

In [40]:

# plot LOWESS curve

# set line color to be black, and scatter color to cyan

sns.regplot(x="Pick", y="CarAV", data=draft_df_2010, lowess=True,

line_kws={"color": "black"},

scatter_kws={"color": sns.color_palette()[5], "alpha": 0.5})

plt.title("Career Approximate Value by Pick")

plt.xlim(-5, 500)

plt.ylim(-5, 200)

plt.show()

我们也可以使用lmplot并设置hue为"Pos",为每个位置拟合一条Lowess曲线。

In [41]:

# Fit a LOWESS curver for each position

sns.lmplot(x="Pick", y="CarAV", data=draft_df_2010, lowess=True, hue="Pos",

size=10, scatter=False)

plt.title("Career Approximate Value by Pick and Position")

plt.xlim(-5, 500)

plt.ylim(-1, 60)

plt.show()

由于太多的线条,上面的图有点太乱了。我们可以实际将曲线分离出来,并单独绘制位置曲线。要在不设置hue为"Pos"的情况下做到这一点,我们可以设置col为"Pos"。要将所有图都组织到5x3格子里,我们必须设置col_wrap为5。

In [44]:

lm = sns.lmplot(x="Pick", y="CarAV", data=draft_df_2010, lowess=True, col="Pos",

col_wrap=5, size=4, line_kws={"color": "black"},

scatter_kws={"color": sns.color_palette()[5], "alpha": 0.7})

# add title to the plot (which is a FacetGrid)

# https://stackoverflow.com/questions/29813694/how-to-add-a-title-to-seaborn-facet-plot

plt.subplots_adjust(top=0.9)

lm.fig.suptitle("Career Approximate Value by Pick and Position",

fontsize=30)

plt.xlim(-5, 500)

plt.ylim(-1, 100)

plt.show()

下面是涵盖这种东西一些其他的资源:

- 看看Michael Lopez最近关于nfl选秀,以及为每场主要比赛构建和比较选秀曲线的文章:

- 如果你刚开始使用Python,我建议读一读用Python自动化无聊的东西。其中有涵盖了网页抓取和正则表达式的章节。

如果有任何错误、问题或者建议,你可以在下面留下评论,以让我知道。你还可以在Twitter (@savvastj)或者通过电子邮件([email protected])联络到我。