diff --git a/edu.cuny.hunter.hybridize.core/tensorflow.xml b/edu.cuny.hunter.hybridize.core/tensorflow.xml

index 01b98e231..bfb90ab43 100644

--- a/edu.cuny.hunter.hybridize.core/tensorflow.xml

+++ b/edu.cuny.hunter.hybridize.core/tensorflow.xml

@@ -6,44 +6,34 @@

-

-

-

-

-

-

-

-

-

-

-

-

+

+

@@ -55,269 +45,196 @@

-

-

-

-

-

-

-

-

-

-

-

-

-

+

-

+

+

+

+

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

@@ -336,28 +253,41 @@

-

-

-

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

-

-

-

-

@@ -366,13 +296,11 @@

-

-

@@ -381,31 +309,26 @@

-

-

-

-

-

@@ -416,7 +339,6 @@

-

@@ -427,7 +349,6 @@

-

@@ -438,7 +359,6 @@

-

@@ -449,7 +369,6 @@

-

@@ -460,7 +379,6 @@

-

@@ -471,7 +389,6 @@

-

@@ -482,7 +399,6 @@

-

@@ -493,7 +409,6 @@

-

@@ -504,7 +419,6 @@

-

@@ -515,7 +429,6 @@

-

@@ -526,7 +439,6 @@

-

@@ -537,7 +449,6 @@

-

@@ -548,7 +459,6 @@

-

@@ -559,7 +469,6 @@

-

@@ -570,7 +479,6 @@

-

@@ -581,7 +489,6 @@

-

@@ -592,7 +499,6 @@

-

@@ -603,7 +509,6 @@

-

@@ -614,7 +519,6 @@

-

@@ -625,7 +529,6 @@

-

@@ -636,7 +539,6 @@

-

@@ -647,7 +549,6 @@

-

@@ -658,7 +559,6 @@

-

@@ -669,7 +569,6 @@

-

@@ -680,7 +579,6 @@

-

@@ -691,7 +589,6 @@

-

@@ -702,7 +599,6 @@

-

@@ -713,7 +609,6 @@

-

@@ -724,7 +619,6 @@

-

@@ -735,64 +629,47 @@

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

@@ -802,7 +679,6 @@

-

@@ -812,7 +688,6 @@

-

@@ -839,7 +714,6 @@

-

@@ -861,7 +735,6 @@

-

@@ -871,7 +744,6 @@

-

@@ -881,7 +753,6 @@

-

@@ -891,7 +762,6 @@

-

@@ -901,7 +771,6 @@

-

@@ -912,7 +781,6 @@

-

@@ -922,7 +790,6 @@

-

@@ -933,7 +800,6 @@

-

@@ -943,7 +809,6 @@

-

@@ -953,7 +818,6 @@

-

@@ -962,30 +826,22 @@

-

-

-

-

-

-

-

-

@@ -994,7 +850,6 @@

-

@@ -1010,7 +865,6 @@

-

@@ -1023,6 +877,5 @@

-

diff --git a/edu.cuny.hunter.hybridize.tests/resources/HybridizeFunction/testAutoEncoder/in/A.py b/edu.cuny.hunter.hybridize.tests/resources/HybridizeFunction/testAutoEncoder/in/A.py

new file mode 100644

index 000000000..9e99da203

--- /dev/null

+++ b/edu.cuny.hunter.hybridize.tests/resources/HybridizeFunction/testAutoEncoder/in/A.py

@@ -0,0 +1,188 @@

+# From https://github.com/aymericdamien/TensorFlow-Examples/blob/6dcbe14649163814e72a22a999f20c5e247ce988/tensorflow_v2/notebooks/3_NeuralNetworks/autoencoder.ipynb.

+

+# %%

+# """

+# # Auto-Encoder Example

+

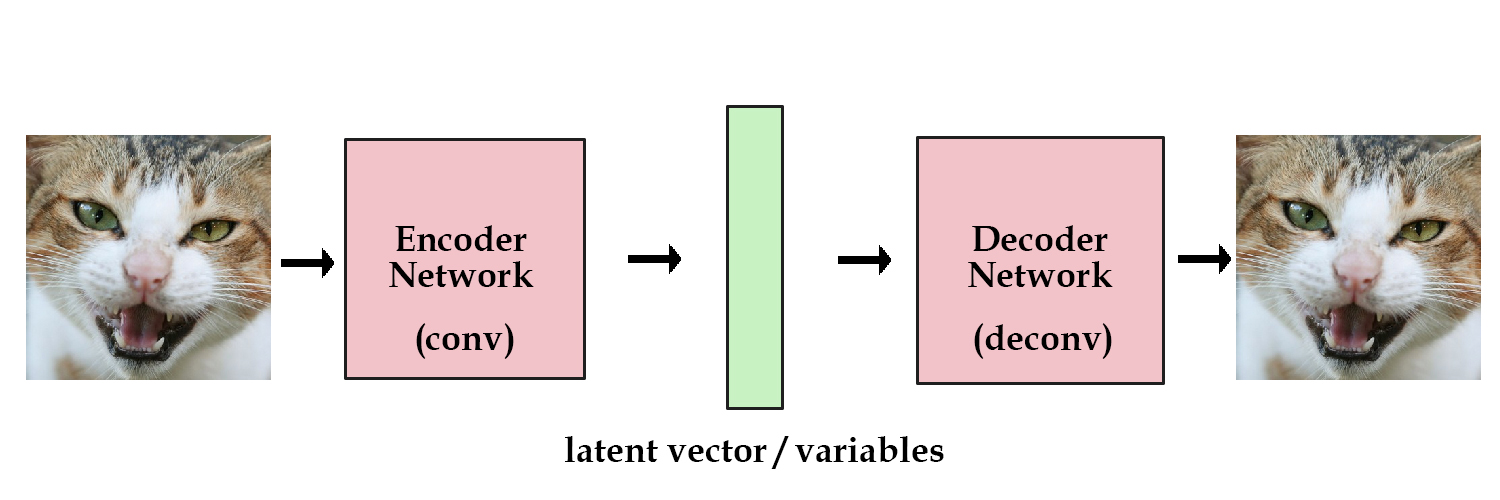

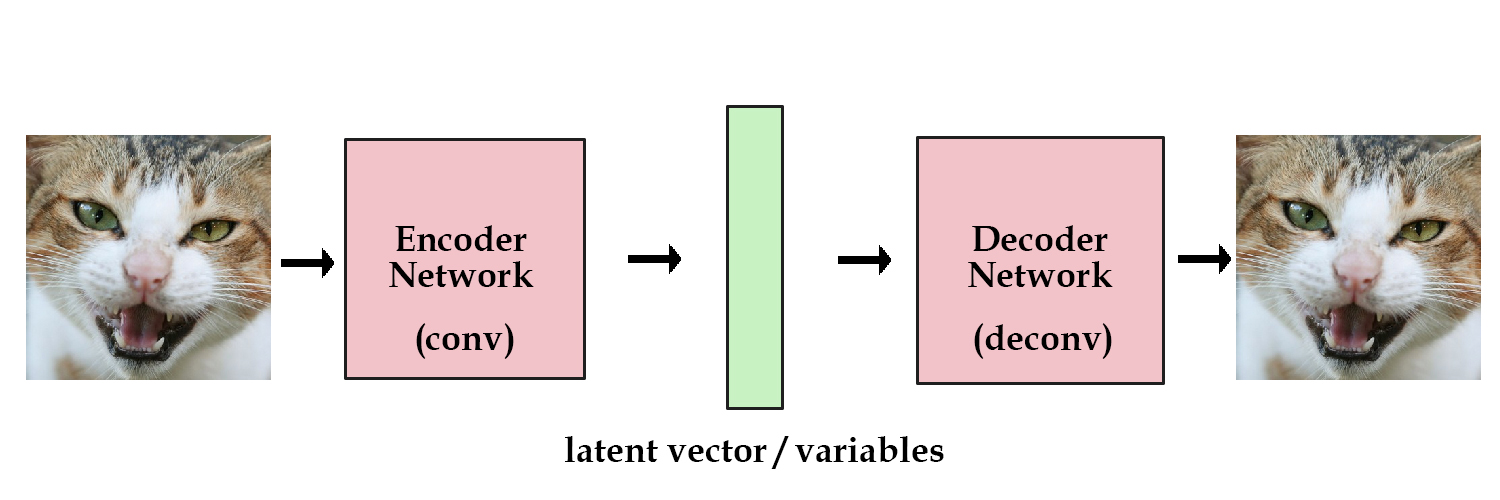

+# Build a 2 layers auto-encoder with TensorFlow v2 to compress images to a lower latent space and then reconstruct them.

+

+# - Author: Aymeric Damien

+# - Project: https://github.com/aymericdamien/TensorFlow-Examples/

+# """

+

+# %%

+# """

+# ## Auto-Encoder Overview

+

+#

+

+# References:

+# - [Gradient-based learning applied to document recognition](http://yann.lecun.com/exdb/publis/pdf/lecun-01a.pdf). Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner. Proceedings of the IEEE, 86(11):2278-2324, November 1998.

+

+# ## MNIST Dataset Overview

+

+# This example is using MNIST handwritten digits. The dataset contains 60,000 examples for training and 10,000 examples for testing. The digits have been size-normalized and centered in a fixed-size image (28x28 pixels) with values from 0 to 255.

+

+# In this example, each image will be converted to float32, normalized to [0, 1] and flattened to a 1-D array of 784 features (28*28).

+

+#

+

+# More info: http://yann.lecun.com/exdb/mnist/

+# """

+

+# %%

+from __future__ import absolute_import, division, print_function

+

+import tensorflow as tf

+print("TensorFlow version:", tf.__version__)

+assert(tf.__version__ == "2.9.3")

+import numpy as np

+

+# %%

+# MNIST Dataset parameters.

+num_features = 784 # data features (img shape: 28*28).

+

+# Training parameters.

+learning_rate = 0.01

+training_steps = 1

+batch_size = 256

+display_step = 1000

+

+# Network Parameters

+num_hidden_1 = 128 # 1st layer num features.

+num_hidden_2 = 64 # 2nd layer num features (the latent dim).

+

+# %%

+# Prepare MNIST data.

+from tensorflow.keras.datasets import mnist

+(x_train, y_train), (x_test, y_test) = mnist.load_data()

+# Convert to float32.

+x_train, x_test = x_train.astype(np.float32), x_test.astype(np.float32)

+# Flatten images to 1-D vector of 784 features (28*28).

+x_train, x_test = x_train.reshape([-1, num_features]), x_test.reshape([-1, num_features])

+# Normalize images value from [0, 255] to [0, 1].

+x_train, x_test = x_train / 255., x_test / 255.

+

+# %%

+# Use tf.data API to shuffle and batch data.

+train_data = tf.data.Dataset.from_tensor_slices((x_train, y_train))

+train_data = train_data.repeat().shuffle(10000).batch(batch_size).prefetch(1)

+

+test_data = tf.data.Dataset.from_tensor_slices((x_test, y_test))

+test_data = test_data.repeat().batch(batch_size).prefetch(1)

+

+# %%

+# Store layers weight & bias

+

+# A random value generator to initialize weights.

+random_normal = tf.initializers.RandomNormal()

+

+weights = {

+    'encoder_h1': tf.Variable(random_normal([num_features, num_hidden_1])),

+    'encoder_h2': tf.Variable(random_normal([num_hidden_1, num_hidden_2])),

+    'decoder_h1': tf.Variable(random_normal([num_hidden_2, num_hidden_1])),

+    'decoder_h2': tf.Variable(random_normal([num_hidden_1, num_features])),

+}

+biases = {

+    'encoder_b1': tf.Variable(random_normal([num_hidden_1])),

+    'encoder_b2': tf.Variable(random_normal([num_hidden_2])),

+    'decoder_b1': tf.Variable(random_normal([num_hidden_1])),

+    'decoder_b2': tf.Variable(random_normal([num_features])),

+}

+

+

+# %%

+# Building the encoder.

+def encoder(x):

+    # Encoder Hidden layer with sigmoid activation.

+    layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),

+                                   biases['encoder_b1']))

+    # Encoder Hidden layer with sigmoid activation.

+    layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),

+                                   biases['encoder_b2']))

+    return layer_2

+

+

+# Building the decoder.

+def decoder(x):

+    # Decoder Hidden layer with sigmoid activation.

+    layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),

+                                   biases['decoder_b1']))

+    # Decoder Hidden layer with sigmoid activation.

+    layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),

+                                   biases['decoder_b2']))

+    return layer_2

+

+

+# %%

+# Mean square loss between original images and reconstructed ones.

+def mean_square(reconstructed, original):

+    return tf.reduce_mean(tf.pow(original - reconstructed, 2))

+

+

+# Adam optimizer.

+optimizer = tf.optimizers.Adam(learning_rate=learning_rate)

+

+

+# %%

+# Optimization process.

+def run_optimization(x):

+    # Wrap computation inside a GradientTape for automatic differentiation.

+    with tf.GradientTape() as g:

+        reconstructed_image = decoder(encoder(x))

+        loss = mean_square(reconstructed_image, x)

+

+    # Variables to update, i.e. trainable variables.

+    trainable_variables = list(weights.values()) + list(biases.values())

+

+    # Compute gradients.

+    gradients = g.gradient(loss, trainable_variables)

+

+    # Update W and b following gradients.

+    optimizer.apply_gradients(zip(gradients, trainable_variables))

+

+    return loss

+

+

+# %%

+# Run training for the given number of steps.

+for step, (batch_x, _) in enumerate(train_data.take(training_steps + 1)):

+

+    # Run the optimization.

+    loss = run_optimization(batch_x)

+

+    if step % display_step == 0:

+        print("step: %i, loss: %f" % (step, loss))

+

+# %%

+# Testing and Visualization.

+import matplotlib.pyplot as plt

+

+# %%

+# Encode and decode images from test set and visualize their reconstruction.

+n = 4

+canvas_orig = np.empty((28 * n, 28 * n))

+canvas_recon = np.empty((28 * n, 28 * n))

+for i, (batch_x, _) in enumerate(test_data.take(n)):

+    # Encode and decode the digit image.

+    reconstructed_images = decoder(encoder(batch_x))

+    # Display original images.

+    for j in range(n):

+        # Draw the generated digits.

+        img = batch_x[j].numpy().reshape([28, 28])

+        canvas_orig[i * 28:(i + 1) * 28, j * 28:(j + 1) * 28] = img

+    # Display reconstructed images.

+    for j in range(n):

+        # Draw the generated digits.

+        reconstr_img = reconstructed_images[j].numpy().reshape([28, 28])

+        canvas_recon[i * 28:(i + 1) * 28, j * 28:(j + 1) * 28] = reconstr_img

+

+# print("Original Images")

+# plt.figure(figsize=(n, n))

+# plt.imshow(canvas_orig, origin="upper", cmap="gray")

+# plt.show()

+#

+# print("Reconstructed Images")

+# plt.figure(figsize=(n, n))

+# plt.imshow(canvas_recon, origin="upper", cmap="gray")

+# plt.show()
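
Reviewer note on `A.py`: the weight and bias shapes above have to chain so that a 784-feature input is reconstructed at the same width, and the loss is the mean of squared element-wise differences. The following is a minimal pure-Python sketch of those two facts, not part of the patch. The helper `output_width` and the totals it computes are illustrative assumptions; only the dimension constants and the names `weights`, `biases`, and `mean_square` come from the file.

```python
# Pure-Python sketch (no TensorFlow needed); constants mirror those in A.py.
num_features = 784   # 28 * 28 pixels per flattened image
num_hidden_1 = 128
num_hidden_2 = 64    # latent dimension

# (rows, cols) of each weight matrix, in the order data flows through them.
weight_shapes = {
    "encoder_h1": (num_features, num_hidden_1),
    "encoder_h2": (num_hidden_1, num_hidden_2),
    "decoder_h1": (num_hidden_2, num_hidden_1),
    "decoder_h2": (num_hidden_1, num_features),
}
bias_sizes = {
    "encoder_b1": num_hidden_1,
    "encoder_b2": num_hidden_2,
    "decoder_b1": num_hidden_1,
    "decoder_b2": num_features,
}


def output_width(input_width, shapes):
    """Hypothetical helper: follow an input width through each matmul in order."""
    width = input_width
    for name, (rows, cols) in shapes.items():
        if rows != width:
            raise ValueError(f"{name}: expected {width} rows, got {rows}")
        width = cols
    return width


def mean_square(reconstructed, original):
    """Mean of squared element-wise differences, mirroring the loss in A.py."""
    diffs = [(o - r) ** 2 for o, r in zip(original, reconstructed)]
    return sum(diffs) / len(diffs)


# Total trainable scalars: all weight matrix entries plus all bias entries.
total_params = (
    sum(rows * cols for rows, cols in weight_shapes.values())
    + sum(bias_sizes.values())
)
```

Under these assumptions, `output_width(num_features, weight_shapes)` returns the input width again, which is what lets `mean_square(decoder(encoder(x)), x)` compare tensors of the same shape.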

diff --git a/edu.cuny.hunter.hybridize.tests/resources/HybridizeFunction/testAutoEncoder/in/requirements.txt b/edu.cuny.hunter.hybridize.tests/resources/HybridizeFunction/testAutoEncoder/in/requirements.txt

new file mode 100644

index 000000000..b154f958f

--- /dev/null

+++ b/edu.cuny.hunter.hybridize.tests/resources/HybridizeFunction/testAutoEncoder/in/requirements.txt

@@ -0,0 +1 @@

+tensorflow==2.9.3

diff --git a/edu.cuny.hunter.hybridize.tests/test cases/edu/cuny/hunter/hybridize/tests/HybridizeFunctionRefactoringTest.java b/edu.cuny.hunter.hybridize.tests/test cases/edu/cuny/hunter/hybridize/tests/HybridizeFunctionRefactoringTest.java

index aa7611038..18970c31d 100644

--- a/edu.cuny.hunter.hybridize.tests/test cases/edu/cuny/hunter/hybridize/tests/HybridizeFunctionRefactoringTest.java

+++ b/edu.cuny.hunter.hybridize.tests/test cases/edu/cuny/hunter/hybridize/tests/HybridizeFunctionRefactoringTest.java

@@ -6267,4 +6267,23 @@ public void testNeuralNetwork() throws Exception {

             }

         }

     }

+

+    @Test

+    public void testAutoEncoder() throws Exception {

+        Set<Function> functions = getFunctions();

+

+        for (Function function : functions) {

+            switch (function.getIdentifier()) {

+            case "encoder":

+            case "mean_square":

+            case "run_optimization":

+            case "decoder":

+                testFunction(function, false, true, false, false, false, CONVERT_EAGER_FUNCTION_TO_HYBRID, P1,

+                        singleton(CONVERT_TO_HYBRID), RefactoringStatus.OK);

+                break;

+            default:

+                throw new IllegalStateException("Not expecting: " + function.getIdentifier() + ".");

+            }

+        }

+    }

 }
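
Reviewer note on `testAutoEncoder`: the switch applies the same expectations to each of the four functions defined in `A.py` and throws for any other identifier. It does not itself assert that all four were found. A hypothetical Python rendering of that dispatch is sketched below. `check_functions` and `EXPECTED` are invented for illustration and are not part of the test suite.

```python
# Hypothetical sketch of the dispatch in testAutoEncoder; not project code.
EXPECTED = {"encoder", "mean_square", "run_optimization", "decoder"}


def check_functions(identifiers):
    """Return the identifiers that passed; raise on any unexpected one."""
    checked = []
    for identifier in identifiers:
        if identifier not in EXPECTED:
            # Mirrors the IllegalStateException message in the Java test.
            raise RuntimeError(f"Not expecting: {identifier}.")
        checked.append(identifier)
    return checked
```

A stricter variant could also compare `set(checked)` against `EXPECTED` so that a missing function fails the test. Whether that is desirable here is a judgment call for the authors.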
