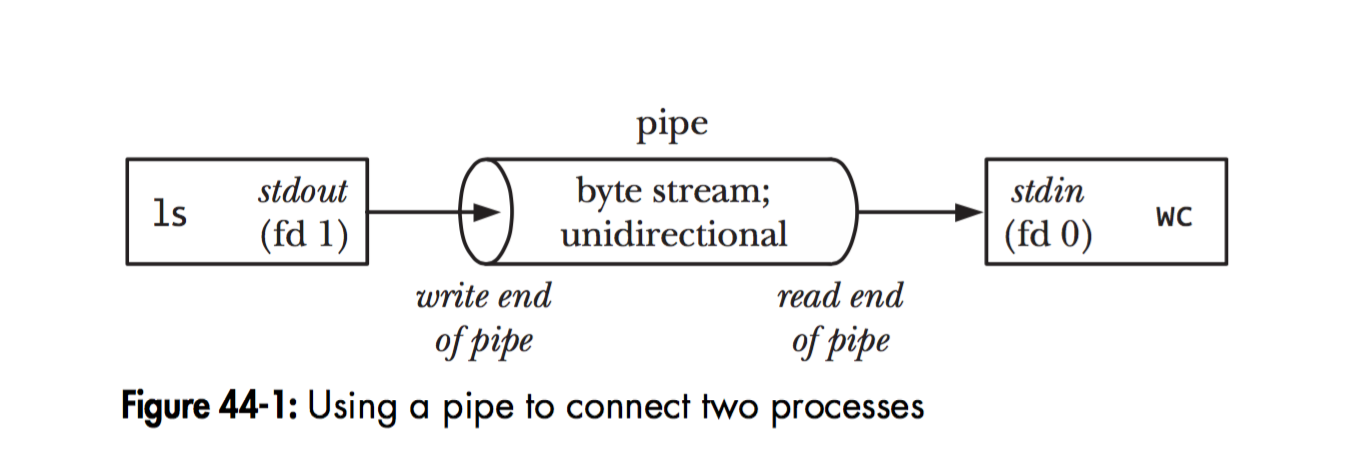

In Unix-like computer operating systems, a pipeline is a mechanism for inter-process communication using message passing. A pipeline is a set of processes chained together by their standard streams, so that the output text of each process (stdout) is passed directly as input (stdin) to the next one. The second process is started while the first process is still executing, and they are executed concurrently. The concept of pipelines was championed by Douglas McIlroy at Unix's ancestral home of Bell Labs, during the development of Unix, shaping its toolbox philosophy.

Dennis Ritchie, a co-creator of the Unix operating system, later generalized the idea into a stream input-output system, which he described as follows:

In a new version of the Unix operating system, a flexible coroutine-based design replaces the traditional rigid connection between processes and terminals or networks. Processing modules may be inserted dynamically into the stream that connects a user's program to a device. Programs may also connect directly to programs, providing inter-process communication.

AT&T Bell Laboratories Technical Journal 63, No. 8 Part 2 (October, 1984)

Nowadays, our software is deployed in the cloud and serves people all over the world. Building a complex geo-distributed system that provides secure, reliable, low-latency services is a challenge.

With YoMo, we can build such a system much like a Unix pipeline, but over the cloud.

Install YoMo CLI

$ curl -fsSL https://get.yomo.run | sh

==> Resolved version latest to v1.0.0

==> Downloading asset for darwin amd64

==> Installing yomo to /usr/local/bin

==> Installation complete

Or install it with Go:

$ go install github.com/yomorun/yomo/cmd/yomo@latest

$ yomo version

YoMo CLI Version: v1.0.0

task run

yomo serve -c config.yaml

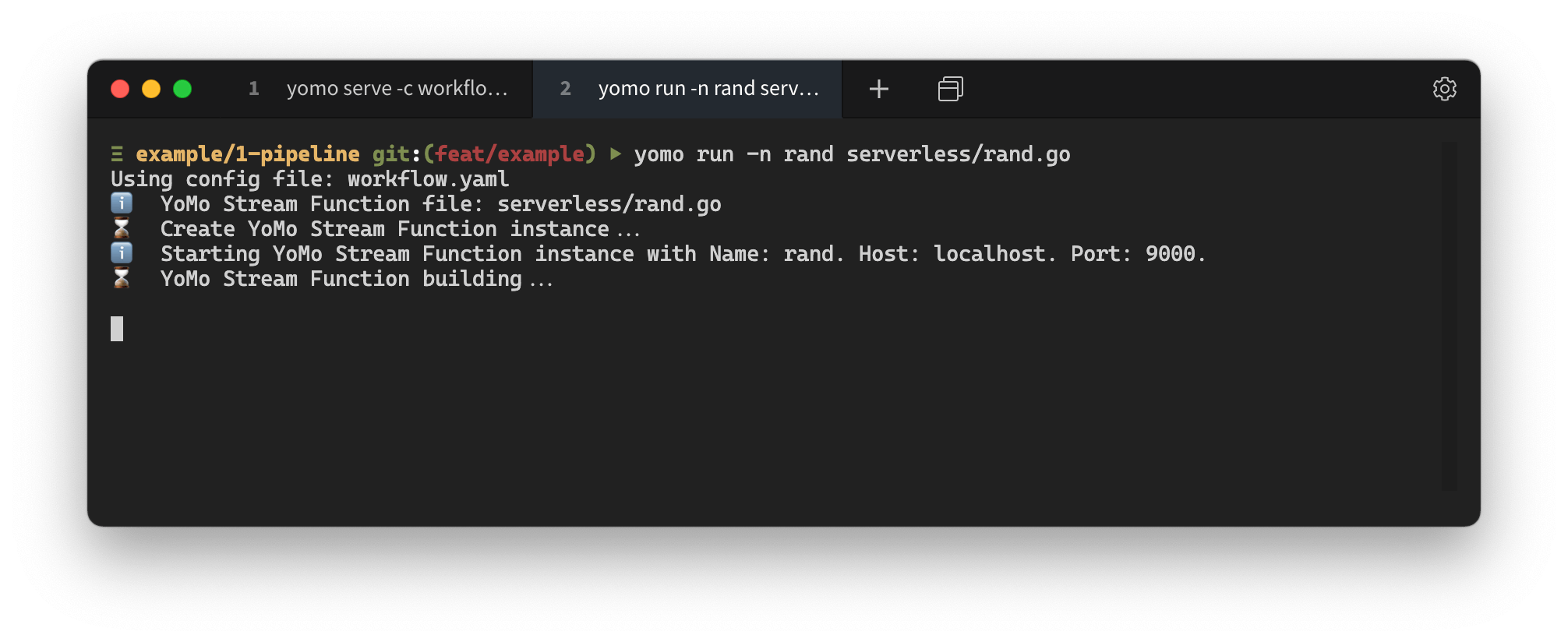

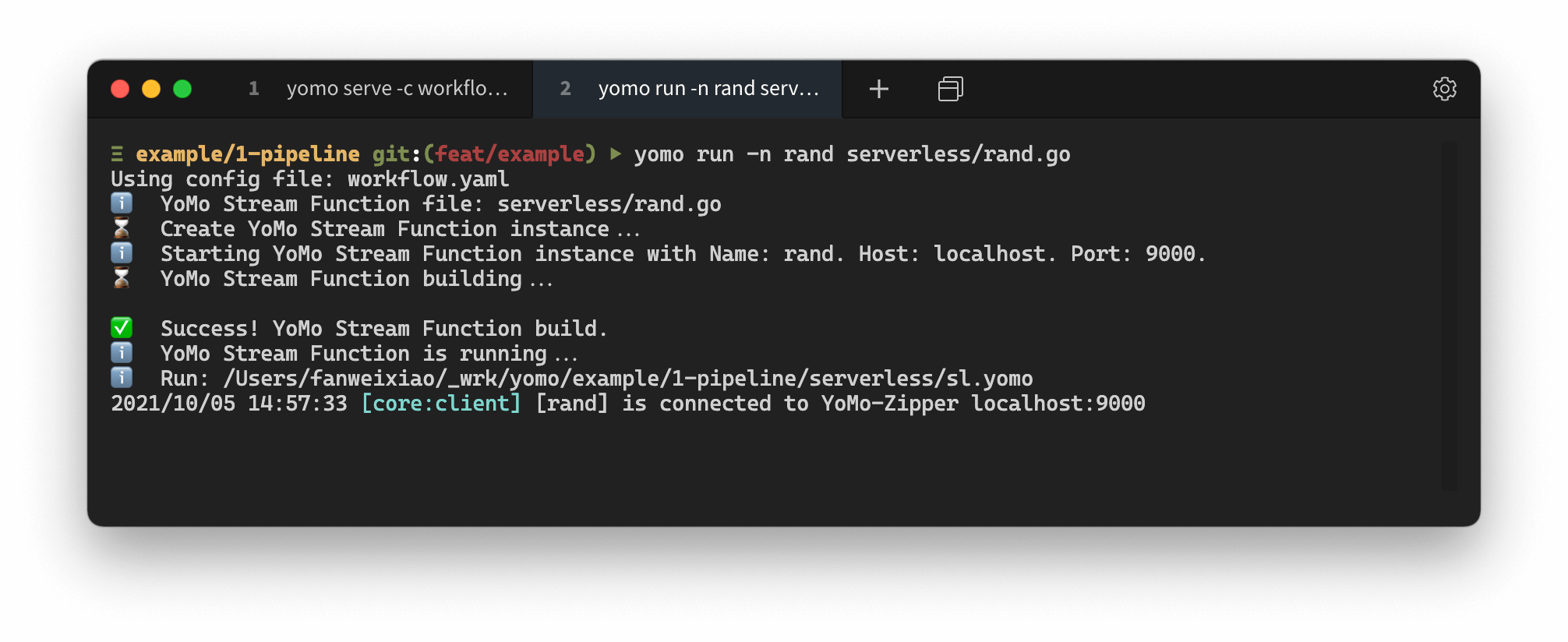

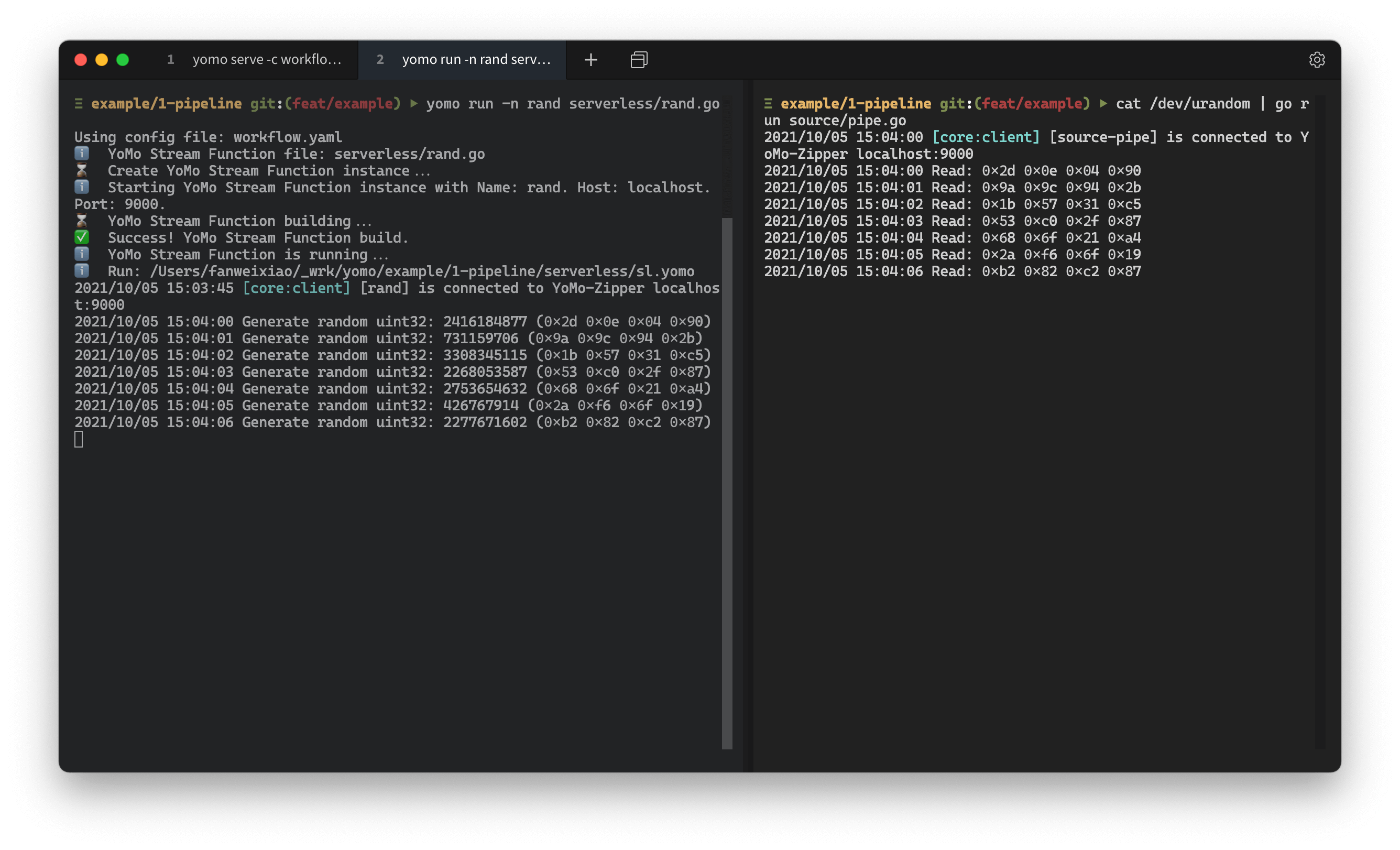

yomo run -n rand serverless/rand.go

After a few seconds the build succeeds, and you should see the following:

cat /dev/urandom | go run source/pipe.go