Releases: huggingface/transformers

Patch release v4.42.2

v4.42.1: Patch release

Patch release for commit:

- [HybridCache] Fix get_seq_length method (#31661)

v4.42.0: Gemma 2, RTDETR, InstructBLIP, LLAVa Next, New Model Adder

New model additions

Gemma-2

The Gemma2 model was proposed in Gemma2: Open Models Based on Gemini Technology and Research by Gemma2 Team, Google.

Gemma2 models are trained on 6T tokens, and released with 2 versions, 2b and 7b.

The abstract from the paper is the following:

This work introduces Gemma2, a new family of open language models demonstrating strong performance across academic benchmarks for language understanding, reasoning, and safety. We release two sizes of models (2 billion and 7 billion parameters), and provide both pretrained and fine-tuned checkpoints. Gemma2 outperforms similarly sized open models on 11 out of 18 text-based tasks, and we present comprehensive evaluations of safety and responsibility aspects of the models, alongside a detailed description of our model development. We believe the responsible release of LLMs is critical for improving the safety of frontier models, and for enabling the next wave of LLM innovations

- Add gemma 2 by @ArthurZucker in #31659

RTDETR

The RT-DETR model was proposed in DETRs Beat YOLOs on Real-time Object Detection by Wenyu Lv, Yian Zhao, Shangliang Xu, Jinman Wei, Guanzhong Wang, Cheng Cui, Yuning Du, Qingqing Dang, Yi Liu.

RT-DETR is an object detection model that stands for “Real-Time DEtection Transformer.” This model is designed to perform object detection tasks with a focus on achieving real-time performance while maintaining high accuracy. Leveraging the transformer architecture, which has gained significant popularity in various fields of deep learning, RT-DETR processes images to identify and locate multiple objects within them.

- New model support RTDETR by @SangbumChoi in #29077

InstructBlip

The InstructBLIP model was proposed in InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning by Wenliang Dai, Junnan Li, Dongxu Li, Anthony Meng Huat Tiong, Junqi Zhao, Weisheng Wang, Boyang Li, Pascale Fung, Steven Hoi. InstructBLIP leverages the BLIP-2 architecture for visual instruction tuning.

InstructBLIP uses the same architecture as BLIP-2 with a tiny but important difference: it also feeds the text prompt (instruction) to the Q-Former.

- Add video modality for InstrucBLIP by @zucchini-nlp in #30182

LlaVa NeXT Video

The LLaVa-NeXT-Video model was proposed in LLaVA-NeXT: A Strong Zero-shot Video Understanding Model by Yuanhan Zhang, Bo Li, Haotian Liu, Yong Jae Lee, Liangke Gui, Di Fu, Jiashi Feng, Ziwei Liu, Chunyuan Li. LLaVa-NeXT-Video improves upon LLaVa-NeXT by fine-tuning on a mix of video and image datasets, thus increasing the model’s performance on videos.

LLaVA-NeXT surprisingly has strong performance in understanding video content in zero-shot fashion with the AnyRes technique that it uses. The AnyRes technique naturally represents a high-resolution image as multiple images. This technique generalizes naturally to videos, because a video can be considered a set of frames (similar to a set of images in LLaVa-NeXT). The current version of LLaVA-NeXT makes use of AnyRes and trains with supervised fine-tuning (SFT) on top of LLaVA-Next on video data to achieve better video understanding capabilities. The model is a current SOTA among open-source models on VideoMME bench.

- Add LLaVa NeXT Video by @zucchini-nlp in #31252

New model adder

A very significant change makes its way within the transformers codebase, introducing a new way to add models to transformers. We recommend reading the description of the PR below, but here is the gist of it:

The diff_converter tool is here to replace our old Copied from statements, while keeping our core transformers philosophy:

- single model single file

- explicit code

- standardization of modeling code

- readable and educative code

- simple code

- least amount of modularity

This additionally unlocks the ability to very quickly see the differences between new architectures that get developed. While many architectures are similar, the "single model, single file" policy can obfuscate the changes. With this diff converter, we want to make the changes between architectures very explicit.

- Diff converter v2 by @ArthurZucker in #30868

Tool-use and RAG model support

We've made major updates to our support for tool-use and RAG models. We can now automatically generate JSON schema descriptions for Python functions which are suitable for passing to tool models, and we've defined a standard API for tool models which should allow the same tool inputs to be used with many different models. Models will need updates to their chat templates to support the new API, and we're targeting the Nous-Hermes, Command-R and Mistral/Mixtral model families for support in the very near future. Please see the updated chat template docs for more information.

If you are the owner of a model that supports tool use, but you're not sure how to update its chat template to support the new API, feel free to reach out to us for assistance with the update, for example on the Hugging Face Discord server. Ping Matt and yell key phrases like "chat templates" and "Jinja" and your issue will probably get resolved.

- Chat Template support for function calling and RAG by @Rocketknight1 in #30621
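
To make the idea of deriving a JSON schema from a Python function more concrete, here is a minimal, self-contained sketch. It is not the transformers implementation: the helper `sketch_json_schema`, its type mapping, the example tool, and the exact output layout are all assumptions made for illustration.

```python
import inspect
from typing import get_type_hints

# Hypothetical mapping from Python annotations to JSON schema type names.
_JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def sketch_json_schema(func):
    """Build a tool description for `func` from its signature (illustrative only)."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # Unknown or missing annotations default to "string" in this sketch.
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means the argument is mandatory
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": (inspect.getdoc(func) or "").split("\n")[0],
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


def get_current_temperature(location: str, unit: str = "celsius") -> float:
    """Get the current temperature at a location."""
    return 22.0  # placeholder value, this example tool does no real lookup


schema = sketch_json_schema(get_current_temperature)
print(schema["function"]["parameters"]["required"])
```

Because the description is plain data, the same tool definition can in principle be handed to any model whose chat template understands it, which is the point of the standard API described above.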

GGUF support

We extend GGUF support so that models can be fine-tuned within the Python/HF ecosystem before being converted back for use with the GGUF/GGML/llama.cpp libraries.

- Add Qwen2 GGUF loading support by @Isotr0py in #31175

- GGUF: Fix llama 3 GGUF by @younesbelkada in #31358

- Fix llama gguf converter by @SunMarc in #31575

Trainer improvements

A new optimizer is added in the Trainer.

- FEAT / Trainer: LOMO optimizer support by @younesbelkada in #30178

Quantization improvements

Several quantization improvements land in this release: a new cache (the quantized KV cache) is added, which stores the cache of generative models in quantized form and further reduces memory requirements.

Additionally, the documentation related to quantization is entirely redone with the aim of helping users choose which is the best quantization method.

- Quantized KV Cache by @zucchini-nlp in #30483

- Docs / Quantization: refactor quantization documentation by @younesbelkada in #30942
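
As a rough intuition for why quantizing cached values saves memory, the sketch below stores a handful of floats as 4-bit integer codes plus a scale and an offset, then restores approximate values. This is a generic affine quantization scheme written in plain Python; the function names and the choice of scheme are assumptions for illustration and do not describe how the quantized KV cache is implemented.

```python
def quantize(values, nbits=4):
    """Affine-quantize a list of floats to integer codes in [0, 2**nbits - 1]."""
    lo, hi = min(values), max(values)
    levels = 2**nbits - 1
    scale = (hi - lo) / levels or 1.0  # fall back to 1.0 for constant input
    codes = [round((v - lo) / scale) for v in values]
    return codes, scale, lo


def dequantize(codes, scale, lo):
    """Map integer codes back to approximate float values."""
    return [c * scale + lo for c in codes]


# Stand-in for a few cached key values (made-up numbers).
keys = [0.12, -0.5, 0.33, 0.9, -0.87, 0.0, 0.45, -0.2]
codes, scale, offset = quantize(keys)
restored = dequantize(codes, scale, offset)

# The rounding error per value is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(keys, restored))
print(codes, round(max_error, 4))
```

Each code fits in 4 bits instead of 16 or 32, at the cost of a small reconstruction error.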

Examples

New instance segmentation examples are added by @qubvel

Notable improvements

As a notable improvement to the HF vision models that leverage backbones, we enable leveraging HF pretrained model weights as backbones, with the following API:

from transformers import MaskFormerConfig, MaskFormerForInstanceSegmentation

config = MaskFormerConfig(backbone="microsoft/resnet-50", use_pretrained_backbone=True)

model = MaskFormerForInstanceSegmentation(config)

- Enable HF pretrained backbones by @amyeroberts in #31145

Additionally, we thank @Cyrilvallez for diving into our generate method and greatly reducing the memory requirements.

- Reduce by 2 the memory requirement in generate() 🔥🔥🔥 by @Cyrilvallez in #30536

Breaking changes

Remove ConversationalPipeline and Conversation object

Both the ConversationalPipeline and the Conversation object have been deprecated for a while, and are due for removal in 4.42, which is the upcoming version.

The TextGenerationPipeline is recommended for this use-case, and now accepts inputs in the form of the OpenAI API.

- 🚨 Remove ConversationalPipeline and Conversation object by @Rocketknight1 in #31165

Remove an accidental duplicate softmax application in FLAVA's attention

Removes duplicate softmax application in FLAVA attention. Likely to have a small change on the outputs but flagging with 🚨 as it will change a bit.

- 🚨 FLAVA: Remove double softmax by @amyeroberts in #31322

Idefics2's ignore_index attribute of the loss is updated to -100

- 🚨 [Idefics2] Update ignore index by @NielsRogge in #30898
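
For readers unfamiliar with the role of an ignore index, the sketch below shows its effect on a cross-entropy loss: positions labelled with the ignore value are skipped, so they influence neither the sum nor the average. It is written in plain Python with made-up logits, and the function is illustrative rather than the model's actual loss code.

```python
import math

IGNORE_INDEX = -100  # the value named in the note above


def cross_entropy(logits, labels, ignore_index=IGNORE_INDEX):
    """Mean cross-entropy over positions whose label is not `ignore_index`."""
    total, count = 0.0, 0
    for row, label in zip(logits, labels):
        if label == ignore_index:
            continue  # masked position: contributes nothing to the loss
        log_norm = math.log(sum(math.exp(x) for x in row))
        total += log_norm - row[label]
        count += 1
    return total / count if count else 0.0


logits = [[2.0, 0.0], [0.0, 2.0], [5.0, -5.0]]
labels = [0, 1, IGNORE_INDEX]  # the third position is treated as padding
loss = cross_entropy(logits, labels)
print(round(loss, 4))
```

If the label pipeline and the loss disagree on the ignore value, masked positions are scored as real targets, which is why a change like this one is flagged as breaking.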

out_indices from timm being updated

Recent updates to timm changed the type of the attribute model.feature_info.out_indices. Previously, out_indices would reflect the input type of out_indices on the create_model call i.e. either tuple or list. Now, this value is always a tuple.

As lists are more useful and consistent for us -- we cannot save tuples in configs, they must be converted to lists first -- we instead choose to cast out_indices to always be a list.

This has the possibility of being a slight breaking change if users are creating models and relying on out_indices being a tuple. As this only happens when a new model is created, and not if it's saved and reloaded (because of the config), I think this has a low chance of having much of an impact.

- 🚨 out_indices always a list by @amyeroberts in #30941
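
The reason tuples do not survive a config round trip can be seen with the standard library alone, assuming the config is serialized as JSON. The dictionary below is a stand-in for a real config object.

```python
import json

out_indices = (1, 2, 3)  # a tuple, as timm now reports it

# JSON has no tuple type, so a save/load round trip yields a list.
reloaded = json.loads(json.dumps({"out_indices": out_indices}))["out_indices"]

# Casting up front keeps a freshly created config and a reloaded one identical.
normalized = list(out_indices)

print(type(reloaded).__name__, normalized == reloaded)
```

Code that compares against a tuple, for example `out_indices == (1, 2, 3)`, is the kind of usage that would need updating.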

datasets referenced in the quantization config get updated to remove referen...

Release v4.41.2


Mostly fixing some stuff related to trust_remote_code=True and from_pretrained

The local_files_only option was having a hard time when a .safetensors file did not exist. This is not expected: instead of trying to convert, we should just fall back to loading the .bin files.

- Do not trigger autoconversion if local_files_only #31004 from @Wauplin fixes this!

- Paligemma: Fix devices and dtype assignments (#31008) by @molbap

- Redirect transformers_agents doc to agents (#31054) @aymeric-roucher

- Fix from_pretrained in offline mode when model is preloaded in cache (#31010) by @oOraph

- Fix faulty rstrip in module loading (#31108) @Rocketknight1
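
The local_files_only fallback described at the top of this release can be pictured with the following sketch. The helper `plan_weight_loading` and the two file names are assumptions chosen for illustration; the real loading code handles many more cases (sharded checkpoints, variants, remote repositories).

```python
import tempfile
from pathlib import Path

# Conventional weight file names, treated as assumptions in this sketch.
SAFETENSORS_NAME = "model.safetensors"
BIN_NAME = "pytorch_model.bin"


def plan_weight_loading(model_dir, local_files_only):
    """Return (weights_file_name, request_conversion) for a local model directory."""
    if (Path(model_dir) / SAFETENSORS_NAME).exists():
        return SAFETENSORS_NAME, False
    # No safetensors file: load the .bin weights, and only ask for a
    # conversion when we are allowed to go beyond local files.
    return BIN_NAME, not local_files_only


with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / BIN_NAME).write_bytes(b"")
    offline_plan = plan_weight_loading(tmp, local_files_only=True)
    online_plan = plan_weight_loading(tmp, local_files_only=False)
    (Path(tmp) / SAFETENSORS_NAME).write_bytes(b"")
    safetensors_plan = plan_weight_loading(tmp, local_files_only=True)

print(offline_plan, online_plan, safetensors_plan)
```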

Release v4.41.1 Fix PaliGemma finetuning, and some small bugs


Fix PaliGemma finetuning:

The causal mask and label creation was causing label leaks when training. Kudos to @probicheaux for finding and reporting!

- a755745 : PaliGemma - fix processor with no input text (#30916) @hiyouga

- a25f7d3 : Paligemma causal attention mask (#30967) @molbap and @probicheaux

Other fixes:

- bb48e92: tokenizer_class = "AutoTokenizer" Llava Family (#30912)

- 1d568df : legacy to init the slow tokenizer when converting from slow was wrong (#30972)

- b1065aa : Generation: get special tokens from model config (#30899) @zucchini-nlp

Reverted 4ab7a28

v4.41.0: Phi3, JetMoE, PaliGemma, VideoLlava, Falcon2, FalconVLM & GGUF support

New models

Phi3

The Phi-3 model was proposed in Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone by Microsoft.

TLDR; Phi-3 introduces new RoPE scaling methods, which seem to scale fairly well! A 3b and a

Phi-3-mini is available in two context-length variants—4K and 128K tokens. It is the first model in its class to support a context window of up to 128K tokens, with little impact on quality.

JetMoE

JetMoe-8B is an 8B Mixture-of-Experts (MoE) language model developed by Yikang Shen and MyShell. JetMoe project aims to provide a LLaMA2-level performance and efficient language model with a limited budget. To achieve this goal, JetMoe uses a sparsely activated architecture inspired by the ModuleFormer. Each JetMoe block consists of two MoE layers: Mixture of Attention Heads and Mixture of MLP Experts. Given the input tokens, it activates a subset of its experts to process them. This sparse activation schema enables JetMoe to achieve much better training throughput than similar size dense models. The training throughput of JetMoe-8B is around 100B tokens per day on a cluster of 96 H100 GPUs with a straightforward 3-way pipeline parallelism strategy.

- Add JetMoE model by @yikangshen in #30005

PaliGemma

PaliGemma is a lightweight open vision-language model (VLM) inspired by PaLI-3, and based on open components like the SigLIP vision model and the Gemma language model. PaliGemma takes both images and text as inputs and can answer questions about images with detail and context, meaning that PaliGemma can perform deeper analysis of images and provide useful insights, such as captioning for images and short videos, object detection, and reading text embedded within images.

More than 120 checkpoints are released; see the collection here!

VideoLlava

Video-LLaVA exhibits remarkable interactive capabilities between images and videos, despite the absence of image-video pairs in the dataset.

💡 Simple baseline, learning united visual representation by alignment before projection

With the binding of unified visual representations to the language feature space, we enable an LLM to perform visual reasoning capabilities on both images and videos simultaneously.

🔥 High performance, complementary learning with video and image

Extensive experiments demonstrate the complementarity of modalities, showcasing significant superiority when compared to models specifically designed for either images or videos.

- Add Video Llava by @zucchini-nlp in #29733

Falcon 2 and FalconVLM:

Two new models from TII-UAE! They published a blog post with more details. Falcon2 introduces a parallel MLP, and FalconVLM uses the LLaVA framework.

- Support for Falcon2-11B by @Nilabhra in #30771

- Support arbitrary processor by @ArthurZucker in #30875

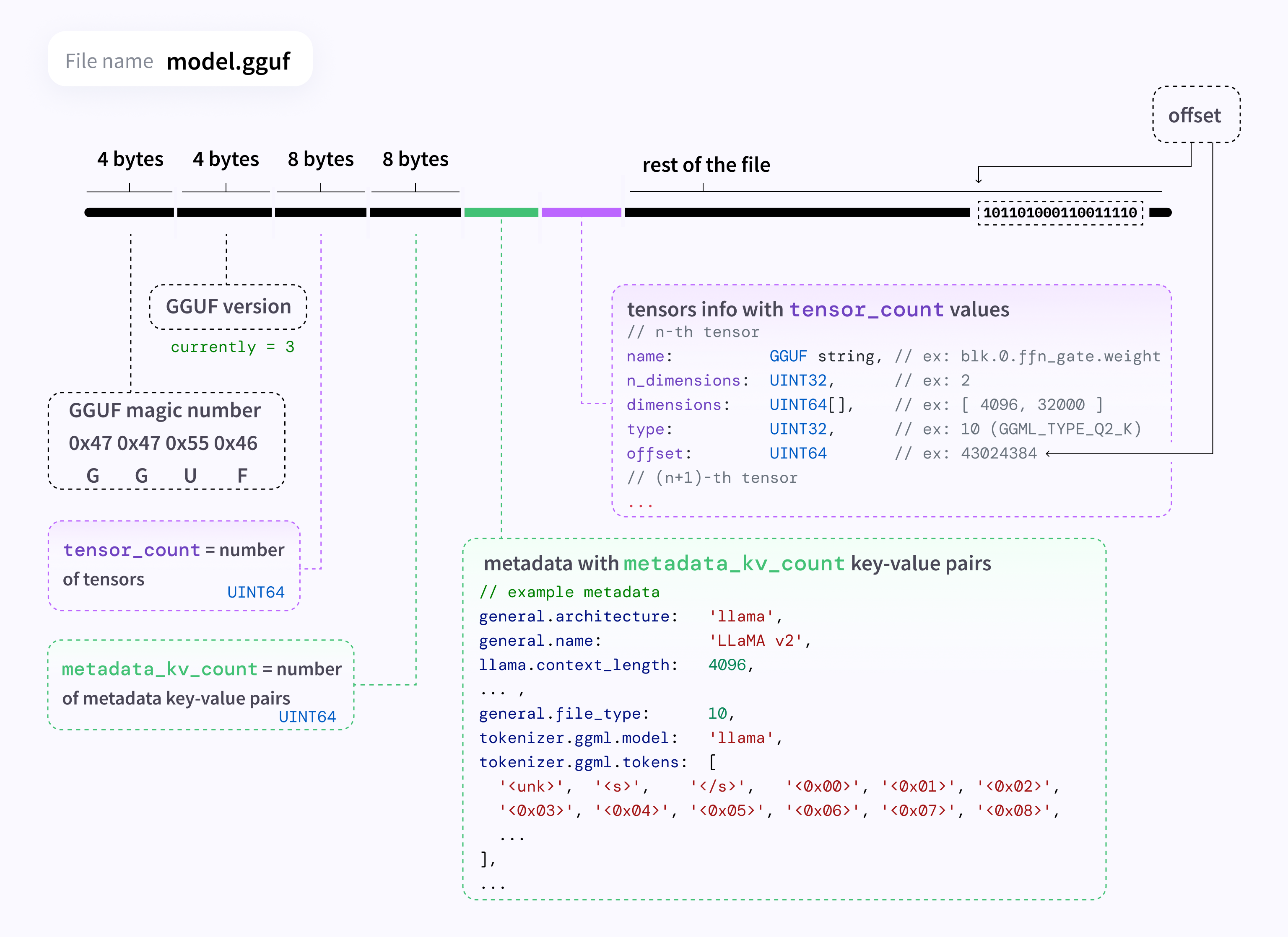

GGUF from_pretrained support

You can now load most of the GGUF quants directly with transformers' from_pretrained to convert it to a classic pytorch model. The API is simple:

from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "TheBloke/TinyLlama-1.1B-Chat-v1.0-GGUF"

filename = "tinyllama-1.1b-chat-v1.0.Q6_K.gguf"

tokenizer = AutoTokenizer.from_pretrained(model_id, gguf_file=filename)

model = AutoModelForCausalLM.from_pretrained(model_id, gguf_file=filename)

We plan closer integrations with the llama.cpp / GGML ecosystem in the future; see #27712 for more details.

- Loading GGUF files support by @LysandreJik in #30391

Transformers Agents 2.0

v4.41.0 introduces a significant refactor of the Agents framework.

With this release, we allow you to build state-of-the-art agent systems, including the React Code Agent that writes its actions as code in ReAct iterations, following the insights from Wang et al., 2024

Just install with pip install "transformers[agents]". Then you're good to go!

from transformers import ReactCodeAgent

agent = ReactCodeAgent(tools=[])

code = """
list=[0, 1, 2]

for i in range(4):
    print(list(i))
"""

corrected_code = agent.run(
    "I have some code that creates a bug: please debug it and return the final code",
    code=code,
)

Quantization

New quant methods

In this release we support new quantization methods: HQQ & EETQ contributed by the community. Read more about how to quantize any transformers model using HQQ & EETQ in the dedicated documentation section

- Add HQQ quantization support by @mobicham in #29637

- [FEAT]: EETQ quantizer support by @dtlzhuangz in #30262

dequantize API for bitsandbytes models

In case you want to dequantize models that have been loaded with bitsandbytes, this is now possible through the dequantize API (e.g. to merge adapter weights)

- FEAT / Bitsandbytes: Add dequantize API for bitsandbytes quantized models by @younesbelkada in #30806

API-wise, you can achieve that with the following:

from transformers import AutoModelForCausalLM, BitsAndBytesConfig, AutoTokenizer

model_id = "facebook/opt-125m"

model = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=BitsAndBytesConfig(load_in_4bit=True))

tokenizer = AutoTokenizer.from_pretrained(model_id)

model.dequantize()

text = tokenizer("Hello my name is", return_tensors="pt").to(0)

out = model.generate(**text)

print(tokenizer.decode(out[0]))

Generation updates

- Add Watermarking LogitsProcessor and WatermarkDetector by @zucchini-nlp in #29676

- Cache: Static cache as a standalone object by @gante in #30476

- Generate: add min_p sampling by @gante in #30639

- Make Gemma work with torch.compile by @ydshieh in #30775
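
For context on the min_p sampling entry in the list above, the sketch below shows the filtering rule as it is commonly described: a token is kept only if its probability is at least min_p times the probability of the most likely token. This is plain Python on a single made-up distribution, not the library's logits processor, which may handle further details such as batching.

```python
import math


def min_p_filter(logits, min_p):
    """Mask logits whose probability is below min_p times the top probability."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]  # shift for numerical stability
    norm = sum(exps)
    probs = [e / norm for e in exps]
    threshold = min_p * max(probs)
    return [x if p >= threshold else -math.inf for x, p in zip(logits, probs)]


# Probabilities 0.6, 0.3, 0.08 and 0.02 expressed as logits.
logits = [math.log(0.6), math.log(0.3), math.log(0.08), math.log(0.02)]
filtered = min_p_filter(logits, min_p=0.2)
kept = [i for i, x in enumerate(filtered) if x != -math.inf]
print(kept)
```

With min_p=0.2 the threshold is 0.2 × 0.6 = 0.12, so only the first two tokens remain candidates for sampling. The cutoff scales with the model's confidence: a peaked distribution prunes aggressively, a flat one keeps more options.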

SDPA support

- [BERT] Add support for sdpa by @hackyon in #28802

- Add sdpa and fa2 the Wav2vec2 family. by @kamilakesbi in #30121

- add sdpa to ViT [follow up of #29325] by @hyenal in #30555

Improved Object Detection

Addition of fine-tuning script for object detection models

- Fix YOLOS image processor resizing by @qubvel in #30436

- Add examples for detection models finetuning by @qubvel in #30422

- Add installation of examples requirements in CI by @qubvel in #30708

- Update object detection guide by @qubvel in #30683

Interpolation of embeddings for vision models

Add interpolation of embeddings. This enables predictions from pretrained models on input images of sizes different than those the model was originally trained on. Simply pass interpolate_pos_encoding=True when calling the model.

Added for: BLIP, BLIP 2, InstructBLIP, SigLIP, ViViT

import requests
import torch
from PIL import Image
from transformers import Blip2Processor, Blip2ForConditionalGeneration

image = Image.open(requests.get("https://huggingface.co/hf-internal-testing/blip-test-image/resolve/main/demo.jpg", stream=True).raw)

processor = Blip2Processor.from_pretrained("Salesforce/blip2-opt-2.7b")
model = Blip2ForConditionalGeneration.from_pretrained(
    "Salesforce/blip2-opt-2.7b",
    torch_dtype=torch.float16,
).to("cuda")

# Resize to 500x500, a resolution different from the one used in pretraining,
# and cast the pixel values to the model's dtype.
inputs = processor(images=image, size={"height": 500, "width": 500}, return_tensors="pt").to("cuda", torch.float16)

# generate() returns token ids, which is what batch_decode expects.
predictions = model.generate(**inputs, interpolate_pos_encoding=True)

# Generated text: "a woman and dog on the beach"
generated_text = processor.batch_decode(predictions, skip_special_tokens=True)[0].strip()

- Blip dynamic input resolution by @zafstojano in #30722

- Add dynamic resolution input/interpolate pos...

v4.40.2

Fix torch fx for LLama model

Thanks @michaelbenayoun !

v4.40.1: fix `EosTokenCriteria` for `Llama3` on `mps`

v4.40.0: Llama 3, Idefics 2, Recurrent Gemma, Jamba, DBRX, OLMo, Qwen2MoE, Grounding Dino

New model additions

Llama 3

Llama 3 is supported in this release through the Llama 2 architecture and some fixes in the tokenizers library.

Idefics2

The Idefics2 model was created by the Hugging Face M4 team and authored by Léo Tronchon, Hugo Laurencon, Victor Sanh. The accompanying blog post can be found here.

Idefics2 is an open multimodal model that accepts arbitrary sequences of image and text inputs and produces text outputs. The model can answer questions about images, describe visual content, create stories grounded on multiple images, or simply behave as a pure language model without visual inputs. It improves upon IDEFICS-1, notably on document understanding, OCR, or visual reasoning. Idefics2 is lightweight (8 billion parameters) and treats images in their native aspect ratio and resolution, which allows for varying inference efficiency.

- Add Idefics2 by @amyeroberts in #30253

Recurrent Gemma

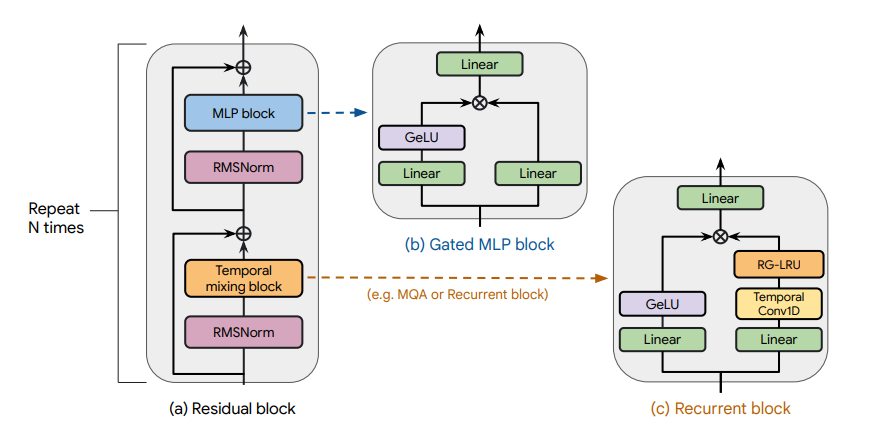

Recurrent Gemma architecture. Taken from the original paper.

The Recurrent Gemma model was proposed in RecurrentGemma: Moving Past Transformers for Efficient Open Language Models by the Griffin, RLHF and Gemma Teams of Google.

The abstract from the paper is the following:

We introduce RecurrentGemma, an open language model which uses Google’s novel Griffin architecture. Griffin combines linear recurrences with local attention to achieve excellent performance on language. It has a fixed-sized state, which reduces memory use and enables efficient inference on long sequences. We provide a pre-trained model with 2B non-embedding parameters, and an instruction tuned variant. Both models achieve comparable performance to Gemma-2B despite being trained on fewer tokens.

- Add recurrent gemma by @ArthurZucker in #30143

Jamba

Jamba is a pretrained, mixture-of-experts (MoE) generative text model, with 12B active parameters and an overall of 52B parameters across all experts. It supports a 256K context length, and can fit up to 140K tokens on a single 80GB GPU.

As depicted in the diagram below, Jamba’s architecture features a blocks-and-layers approach that allows Jamba to successfully integrate Transformer and Mamba architectures altogether. Each Jamba block contains either an attention or a Mamba layer, followed by a multi-layer perceptron (MLP), producing an overall ratio of one Transformer layer out of every eight total layers.

Jamba introduces the first HybridCache object that allows it to natively support assisted generation, contrastive search, speculative decoding, beam search and all of the awesome features from the generate API!

- Add jamba by @tomeras91 in #29943

DBRX

DBRX is a transformer-based decoder-only large language model (LLM) that was trained using next-token prediction. It uses a fine-grained mixture-of-experts (MoE) architecture with 132B total parameters of which 36B parameters are active on any input.

It was pre-trained on 12T tokens of text and code data. Compared to other open MoE models like Mixtral-8x7B and Grok-1, DBRX is fine-grained, meaning it uses a larger number of smaller experts. DBRX has 16 experts and chooses 4, while Mixtral-8x7B and Grok-1 have 8 experts and choose 2.

This provides 65x more possible combinations of experts and the authors found that this improves model quality. DBRX uses rotary position encodings (RoPE), gated linear units (GLU), and grouped query attention (GQA).

- Add DBRX Model by @abhi-mosaic in #29921
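
The 65x figure quoted above follows directly from counting expert subsets, and can be checked with the standard library:

```python
import math

dbrx_combinations = math.comb(16, 4)    # ways to choose 4 of 16 experts
mixtral_combinations = math.comb(8, 2)  # ways to choose 2 of 8 experts
ratio = dbrx_combinations // mixtral_combinations

print(dbrx_combinations, mixtral_combinations, ratio)  # 1820 28 65
```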

OLMo

The OLMo model was proposed in OLMo: Accelerating the Science of Language Models by Dirk Groeneveld, Iz Beltagy, Pete Walsh, Akshita Bhagia, Rodney Kinney, Oyvind Tafjord, Ananya Harsh Jha, Hamish Ivison, Ian Magnusson, Yizhong Wang, Shane Arora, David Atkinson, Russell Authur, Khyathi Raghavi Chandu, Arman Cohan, Jennifer Dumas, Yanai Elazar, Yuling Gu, Jack Hessel, Tushar Khot, William Merrill, Jacob Morrison, Niklas Muennighoff, Aakanksha Naik, Crystal Nam, Matthew E. Peters, Valentina Pyatkin, Abhilasha Ravichander, Dustin Schwenk, Saurabh Shah, Will Smith, Emma Strubell, Nishant Subramani, Mitchell Wortsman, Pradeep Dasigi, Nathan Lambert, Kyle Richardson, Luke Zettlemoyer, Jesse Dodge, Kyle Lo, Luca Soldaini, Noah A. Smith, Hannaneh Hajishirzi.

OLMo is a series of Open Language Models designed to enable the science of language models. The OLMo models are trained on the Dolma dataset. We release all code, checkpoints, logs (coming soon), and details involved in training these models.

- Add OLMo model family by @2015aroras in #29890

Qwen2MoE

Qwen2MoE is the new model series of large language models from the Qwen team. Previously, we released the Qwen series, including Qwen-72B, Qwen-1.8B, Qwen-VL, Qwen-Audio, etc.

Model Details

Qwen2MoE is a language model series including decoder language models of different model sizes. For each size, we release the base language model and the aligned chat model. Qwen2MoE has the following architectural choices:

Qwen2MoE is based on the Transformer architecture with SwiGLU activation, attention QKV bias, group query attention, mixture of sliding window attention and full attention, etc. Additionally, we have an improved tokenizer adaptive to multiple natural languages and codes.

Qwen2MoE employs Mixture of Experts (MoE) architecture, where the models are upcycled from dense language models. For instance, Qwen1.5-MoE-A2.7B is upcycled from Qwen-1.8B. It has 14.3B parameters in total and 2.7B activated parameters during runtime, while it achieves comparable performance with Qwen1.5-7B, with only 25% of the training resources.

- Add Qwen2MoE by @bozheng-hit in #29377

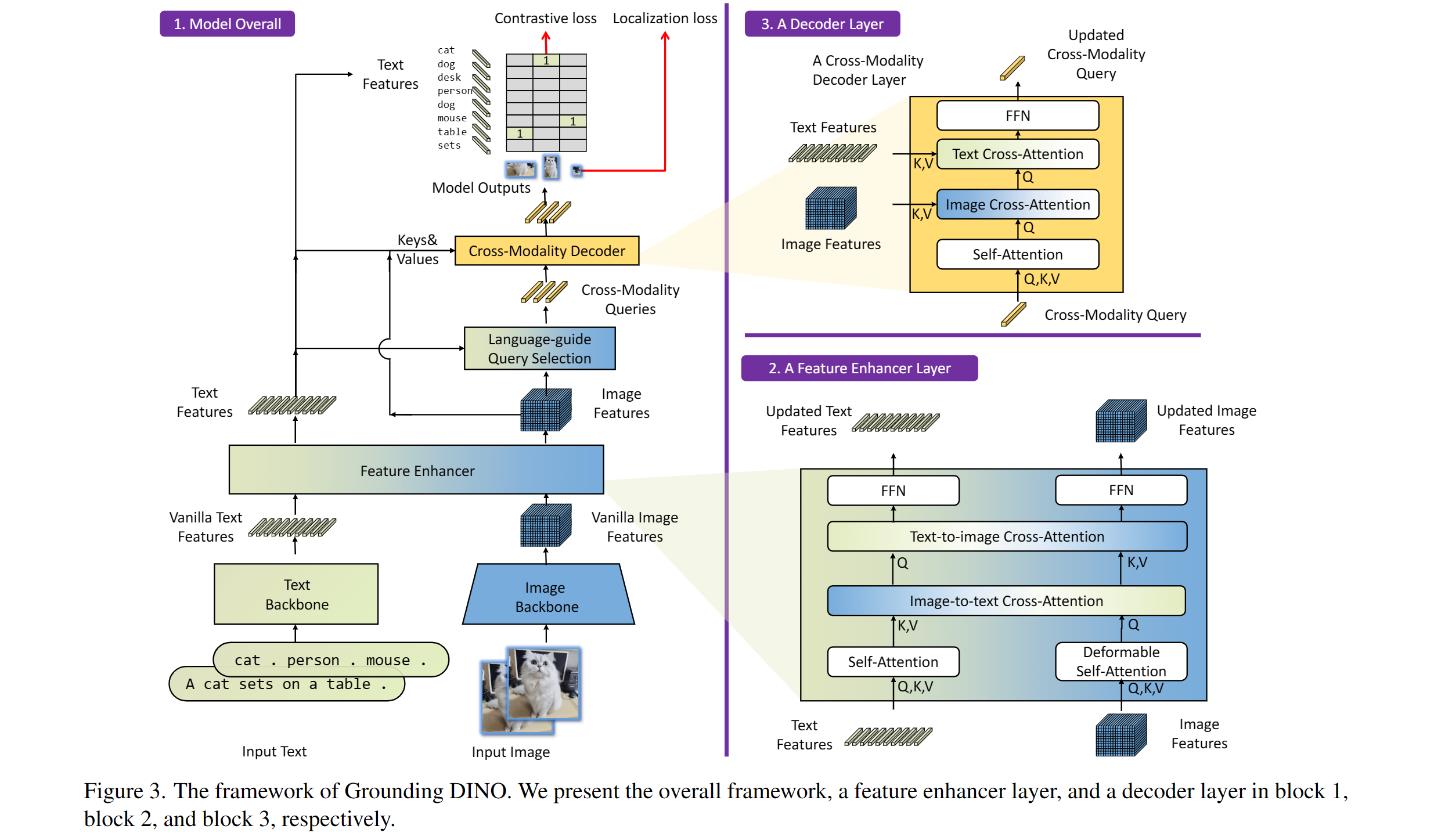

Grounding Dino

Taken from the original paper.

The Grounding DINO model was proposed in Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang. Grounding DINO extends a closed-set object detection model with a text encoder, enabling open-set object detection. The model achieves remarkable results, such as 52.5 AP on COCO zero-shot.

- Adding grounding dino by @EduardoPach in #26087

Static pretrained maps

Static pretrained maps have been removed from the library's internals and are currently deprecated. These used to reflect all the available checkpoints for a given architecture on the Hugging Face Hub, but their presence does not make sense in light of the huge growth in the number of checkpoints shared by the community.

With the objective of lowering the bar of model contributions and reviewing, we first start by removing legacy objects such as this one which do not serve a purpose.

- Remove static pretrained maps from the library's internals by @LysandreJik in #29112

Notable improvements

Processors improvements

Processors are undergoing changes to make them more uniform and clearer to use.

- Separate out kwargs in processor by @amyeroberts in #30193

- [Processor classes] Update docs by @NielsRogge in #29698

SDPA

Push to Hub for pipelines

Pipelines can now be pushed to Hub using a convenient push_to_hub method.

Flash Attention 2 for more models (M2M100, NLLB, GPT2, MusicGen) !

Thanks to the community contribution, Flash Attention 2 has been integrated for more architectures

- Adding Flash Attention 2 Support for GPT2 by @EduardoPach in #29226

- Add Flash Attention 2 support to Musicgen and Musicgen Melody by @ylacombe in #29939

- Add Flash Attention 2 to M2M100 model by @visheratin in #30256

Improvements and bugfixes

- [docs] Remove redundant - and the from custom_tools.md by @windsonsea in #29767

- Fixed typo in quantization_config.py by @kurokiasahi222 in #29766

- OWL-ViT box_predictor inefficiency issue by @RVV-karma in #29712

- Allow -OO mode for docstring_decorator by @matthid in #29689

- fix issue with logit processor during beam search in Flax by @giganttheo in #29636

- Fix docker image build for Latest PyTorch + TensorFlow [dev] by @ydshieh in #29764

- [LlavaNext] Fix llava next unsafe imports by @ArthurZucker in #29773

- Cast bfloat16 to float32 for Numpy conversions by @Rocketknight1 in #29755

- Silence deprecations and use the DataLoaderConfig by @muellerzr in #29779

- Add deterministic config to set_seed by @muellerzr in #29778

- Add support for torch_dtype in the run_mlm example by @jla524 in #29776

- Generate: remove legacy generation mixin imports by @gante in #29782

- Llama: always convert the causal mask in the SDPA code path by @gante in #29663

- Prepend bos token to Blip generations by @zucchini-nlp in #29642

- Change in-place operations to out-of-place in LogitsProcessors by @zucchini-nlp in #29680

- [quality] update quality check to make sure we check imports 😈 by @ArthurZucker in #29771

- Fix type hint for train_dataset param of Trainer.init() to allow IterableDataset. Issue 29678 by @stevemadere in #29738

- Enable AMD docker buil...