diff --git a/docs/source/en/index.md b/docs/source/en/index.md

index 967049d89cbe12..130775f6420f9e 100644

--- a/docs/source/en/index.md

+++ b/docs/source/en/index.md

@@ -122,6 +122,7 @@ Flax), PyTorch, and/or TensorFlow.

| [DeiT](model_doc/deit) | ✅ | ✅ | ❌ |

| [DePlot](model_doc/deplot) | ✅ | ❌ | ❌ |

| [Depth Anything](model_doc/depth_anything) | ✅ | ❌ | ❌ |

+| [DepthPro](model_doc/depth_pro) | ✅ | ❌ | ❌ |

| [DETA](model_doc/deta) | ✅ | ❌ | ❌ |

| [DETR](model_doc/detr) | ✅ | ❌ | ❌ |

| [DialoGPT](model_doc/dialogpt) | ✅ | ✅ | ✅ |

diff --git a/docs/source/en/model_doc/depth_pro.md b/docs/source/en/model_doc/depth_pro.md

new file mode 100644

index 00000000000000..9019547434af84

--- /dev/null

+++ b/docs/source/en/model_doc/depth_pro.md

@@ -0,0 +1,123 @@

+

+

+# DepthPro

+

+## Overview

+

+The DepthPro model was proposed in [Depth Pro: Sharp Monocular Metric Depth in Less Than a Second](https://arxiv.org/abs/2410.02073) by Aleksei Bochkovskii, Amaël Delaunoy, Hugo Germain, Marcel Santos, Yichao Zhou, Stephan R. Richter, Vladlen Koltun.

+

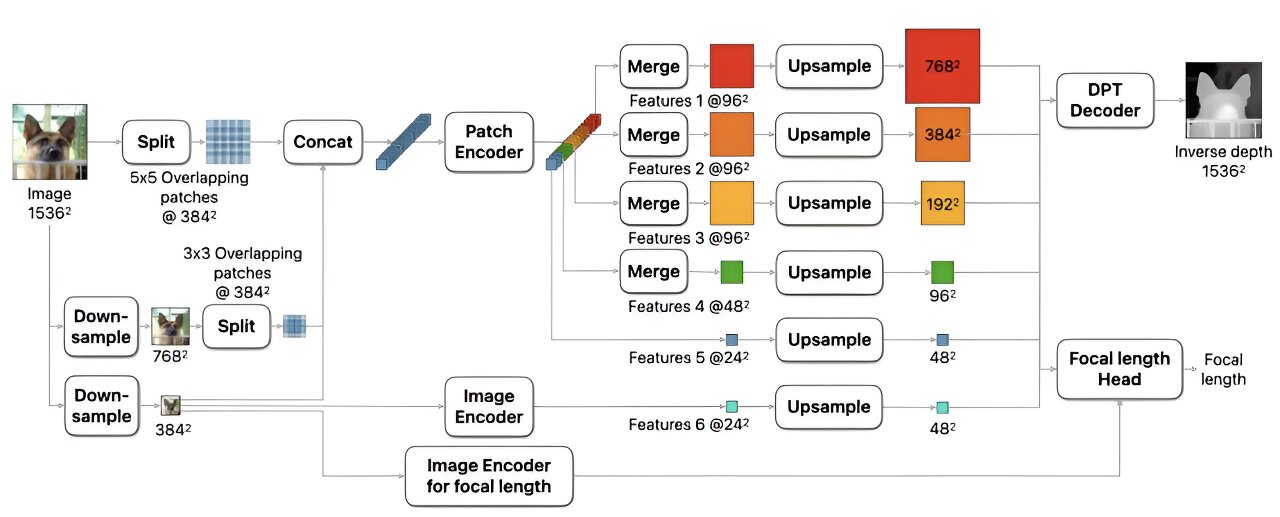

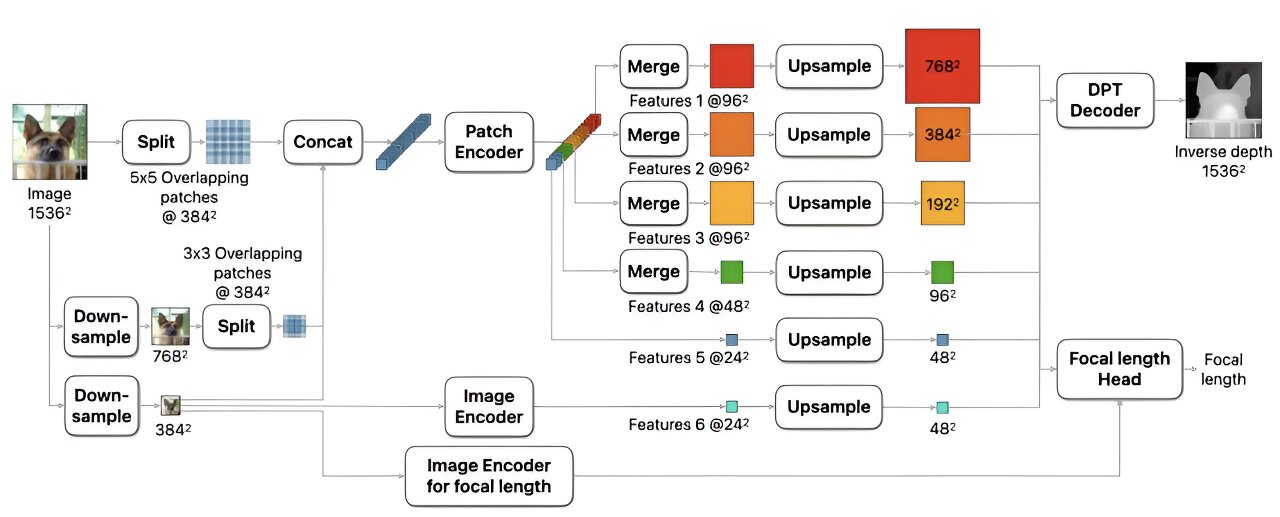

+It leverages a multi-scale [Vision Transformer (ViT)](vit) optimized for dense predictions. It downsamples an image at several scales. At each scale, it is split into patches, which are processed by a ViT-based [Dinov2](dinov2) patch encoder, with weights shared across scales. Patches are merged into feature maps, upsampled, and fused via a [DPT](dpt) like decoder.

+

+The abstract from the paper is the following:

+

+*We present a foundation model for zero-shot metric monocular depth estimation. Our model, Depth Pro, synthesizes high-resolution depth maps with unparalleled sharpness and high-frequency details. The predictions are metric, with absolute scale, without relying on the availability of metadata such as camera intrinsics. And the model is fast, producing a 2.25-megapixel depth map in 0.3 seconds on a standard GPU. These characteristics are enabled by a number of technical contributions, including an efficient multi-scale vision transformer for dense prediction, a training protocol that combines real and synthetic datasets to achieve high metric accuracy alongside fine boundary tracing, dedicated evaluation metrics for boundary accuracy in estimated depth maps, and state-of-the-art focal length estimation from a single image. Extensive experiments analyze specific design choices and demonstrate that Depth Pro outperforms prior work along multiple dimensions.*

+

+ +

+ DepthPro architecture. Taken from the original paper.

+

+This model was contributed by [geetu040](https://github.com/geetu040). The original code can be found [here](https://github.com/apple/ml-depth-pro).

+

+

+

+## Usage tips

+

+```python

+from transformers import DepthProConfig, DepthProForDepthEstimation

+

+config = DepthProConfig()

+model = DPTForDepthEstimation(config=config)

+```

+

+- By default model takes an input image of size `1536`, this can be changed via config, however the model is compatible with images of different width and height.

+- Input image is scaled with different ratios, as specified in `scaled_images_ratios`, then each of the scaled image is patched to `patch_size` with an overlap ratio of `scaled_images_overlap_ratios`.

+- These patches go through `DinoV2 (ViT)` based encoders and are reassembled via a `DPT` based decoder.

+- `DepthProForDepthEstimation` can also predict the `FOV (Field of View)` if `use_fov_model` is set to `True` in the config.

+- `DepthProImageProcessor` can be used for preprocessing the inputs and postprocessing the outputs. `DepthProImageProcessor.post_process_depth_estimation` interpolates the `predicted_depth` back to match the input image size.

+- To generate `predicted_depth` of the same size as input image, make sure the config is created such that

+```

+image_size / 2**(n_fusion_blocks+1) == patch_size / patch_embeddings_size

+

+where

+n_fusion_blocks = len(intermediate_hook_ids) + len(scaled_images_ratios)

+```

+

+

+### Using Scaled Dot Product Attention (SDPA)

+

+PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function

+encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the

+[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)

+or the [GPU Inference](https://huggingface.co/docs/transformers/main/en/perf_infer_gpu_one#pytorch-scaled-dot-product-attention)

+page for more information.

+

+SDPA is used by default for `torch>=2.1.1` when an implementation is available, but you may also set

+`attn_implementation="sdpa"` in `from_pretrained()` to explicitly request SDPA to be used.

+

+```py

+from transformers import DepthProForDepthEstimation

+model = DepthProForDepthEstimation.from_pretrained("geetu040/DepthPro", attn_implementation="sdpa", torch_dtype=torch.float16)

+...

+```

+

+For the best speedups, we recommend loading the model in half-precision (e.g. `torch.float16` or `torch.bfloat16`).

+

+On a local benchmark (A100-40GB, PyTorch 2.3.0, OS Ubuntu 22.04) with `float32` and `google/vit-base-patch16-224` model, we saw the following speedups during inference.

+

+| Batch size | Average inference time (ms), eager mode | Average inference time (ms), sdpa model | Speed up, Sdpa / Eager (x) |

+|--------------|-------------------------------------------|-------------------------------------------|------------------------------|

+| 1 | 7 | 6 | 1.17 |

+| 2 | 8 | 6 | 1.33 |

+| 4 | 8 | 6 | 1.33 |

+| 8 | 8 | 6 | 1.33 |

+

+## Resources

+

+- Research Paper: [Depth Pro: Sharp Monocular Metric Depth in Less Than a Second](https://arxiv.org/pdf/2410.02073)

+

+- Official Implementation: [apple/ml-depth-pro](https://github.com/apple/ml-depth-pro)

+

+

+

+If you're interested in submitting a resource to be included here, please feel free to open a Pull Request and we'll review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

+

+## DepthProConfig

+

+[[autodoc]] DepthProConfig

+

+## DepthProImageProcessor

+

+[[autodoc]] DepthProImageProcessor

+ - preprocess

+ - post_process_depth_estimation

+

+## DepthProImageProcessorFast

+

+[[autodoc]] DepthProImageProcessorFast

+ - preprocess

+ - post_process_depth_estimation

+

+## DepthProModel

+

+[[autodoc]] DepthProModel

+ - forward

+

+## DepthProForDepthEstimation

+

+[[autodoc]] DepthProForDepthEstimation

+ - forward

diff --git a/docs/source/en/perf_infer_gpu_one.md b/docs/source/en/perf_infer_gpu_one.md

index 930f41b6fefba7..9ead5ac276693f 100644

--- a/docs/source/en/perf_infer_gpu_one.md

+++ b/docs/source/en/perf_infer_gpu_one.md

@@ -237,6 +237,7 @@ For now, Transformers supports SDPA inference and training for the following arc

* [data2vec_vision](https://huggingface.co/docs/transformers/main/en/model_doc/data2vec#transformers.Data2VecVisionModel)

* [Dbrx](https://huggingface.co/docs/transformers/model_doc/dbrx#transformers.DbrxModel)

* [DeiT](https://huggingface.co/docs/transformers/model_doc/deit#transformers.DeiTModel)

+* [DepthPro](https://huggingface.co/docs/transformers/model_doc/depth_pro#transformers.DepthProModel)

* [Dinov2](https://huggingface.co/docs/transformers/en/model_doc/dinov2)

* [DistilBert](https://huggingface.co/docs/transformers/model_doc/distilbert#transformers.DistilBertModel)

* [Dpr](https://huggingface.co/docs/transformers/model_doc/dpr#transformers.DprReader)

diff --git a/src/transformers/__init__.py b/src/transformers/__init__.py

index ef140cc6d3a843..ebcfe53848502e 100755

--- a/src/transformers/__init__.py

+++ b/src/transformers/__init__.py

@@ -400,6 +400,7 @@

"models.deprecated.vit_hybrid": ["ViTHybridConfig"],

"models.deprecated.xlm_prophetnet": ["XLMProphetNetConfig"],

"models.depth_anything": ["DepthAnythingConfig"],

+ "models.depth_pro": ["DepthProConfig"],

"models.detr": ["DetrConfig"],

"models.dialogpt": [],

"models.dinat": ["DinatConfig"],

@@ -1212,6 +1213,7 @@

_import_structure["models.deprecated.efficientformer"].append("EfficientFormerImageProcessor")

_import_structure["models.deprecated.tvlt"].append("TvltImageProcessor")

_import_structure["models.deprecated.vit_hybrid"].extend(["ViTHybridImageProcessor"])

+ _import_structure["models.depth_pro"].extend(["DepthProImageProcessor", "DepthProImageProcessorFast"])

_import_structure["models.detr"].extend(["DetrFeatureExtractor", "DetrImageProcessor"])

_import_structure["models.donut"].extend(["DonutFeatureExtractor", "DonutImageProcessor"])

_import_structure["models.dpt"].extend(["DPTFeatureExtractor", "DPTImageProcessor"])

@@ -2136,6 +2138,13 @@

"DepthAnythingPreTrainedModel",

]

)

+ _import_structure["models.depth_pro"].extend(

+ [

+ "DepthProForDepthEstimation",

+ "DepthProModel",

+ "DepthProPreTrainedModel",

+ ]

+ )

_import_structure["models.detr"].extend(

[

"DetrForObjectDetection",

@@ -5359,6 +5368,7 @@

XLMProphetNetConfig,

)

from .models.depth_anything import DepthAnythingConfig

+ from .models.depth_pro import DepthProConfig

from .models.detr import DetrConfig

from .models.dinat import DinatConfig

from .models.dinov2 import Dinov2Config

@@ -6207,6 +6217,7 @@

from .models.deprecated.efficientformer import EfficientFormerImageProcessor

from .models.deprecated.tvlt import TvltImageProcessor

from .models.deprecated.vit_hybrid import ViTHybridImageProcessor

+ from .models.depth_pro import DepthProImageProcessor, DepthProImageProcessorFast

from .models.detr import DetrFeatureExtractor, DetrImageProcessor

from .models.donut import DonutFeatureExtractor, DonutImageProcessor

from .models.dpt import DPTFeatureExtractor, DPTImageProcessor

@@ -7001,6 +7012,11 @@

DepthAnythingForDepthEstimation,

DepthAnythingPreTrainedModel,

)

+ from .models.depth_pro import (

+ DepthProForDepthEstimation,

+ DepthProModel,

+ DepthProPreTrainedModel,

+ )

from .models.detr import (

DetrForObjectDetection,

DetrForSegmentation,

diff --git a/src/transformers/models/__init__.py b/src/transformers/models/__init__.py

index 7fcaddde704cf7..9030f178ab0cfa 100644

--- a/src/transformers/models/__init__.py

+++ b/src/transformers/models/__init__.py

@@ -73,6 +73,7 @@

deit,

deprecated,

depth_anything,

+ depth_pro,

detr,

dialogpt,

dinat,

diff --git a/src/transformers/models/auto/configuration_auto.py b/src/transformers/models/auto/configuration_auto.py

index 69ce8efa10c76c..b4b920b6d5886f 100644

--- a/src/transformers/models/auto/configuration_auto.py

+++ b/src/transformers/models/auto/configuration_auto.py

@@ -90,6 +90,7 @@

("deformable_detr", "DeformableDetrConfig"),

("deit", "DeiTConfig"),

("depth_anything", "DepthAnythingConfig"),

+ ("depth_pro", "DepthProConfig"),

("deta", "DetaConfig"),

("detr", "DetrConfig"),

("dinat", "DinatConfig"),

@@ -399,6 +400,7 @@

("deplot", "DePlot"),

("depth_anything", "Depth Anything"),

("depth_anything_v2", "Depth Anything V2"),

+ ("depth_pro", "DepthPro"),

("deta", "DETA"),

("detr", "DETR"),

("dialogpt", "DialoGPT"),

diff --git a/src/transformers/models/auto/image_processing_auto.py b/src/transformers/models/auto/image_processing_auto.py

index db25591eaa3544..41dbb4ab9e5be5 100644

--- a/src/transformers/models/auto/image_processing_auto.py

+++ b/src/transformers/models/auto/image_processing_auto.py

@@ -74,6 +74,7 @@

("deformable_detr", ("DeformableDetrImageProcessor", "DeformableDetrImageProcessorFast")),

("deit", ("DeiTImageProcessor",)),

("depth_anything", ("DPTImageProcessor",)),

+ ("depth_pro", ("DepthProImageProcessor", "DepthProImageProcessorFast")),

("deta", ("DetaImageProcessor",)),

("detr", ("DetrImageProcessor", "DetrImageProcessorFast")),

("dinat", ("ViTImageProcessor", "ViTImageProcessorFast")),

diff --git a/src/transformers/models/auto/modeling_auto.py b/src/transformers/models/auto/modeling_auto.py

index e8a2dece432476..dcf3ee974d2bf1 100644

--- a/src/transformers/models/auto/modeling_auto.py

+++ b/src/transformers/models/auto/modeling_auto.py

@@ -88,6 +88,7 @@

("decision_transformer", "DecisionTransformerModel"),

("deformable_detr", "DeformableDetrModel"),

("deit", "DeiTModel"),

+ ("depth_pro", "DepthProModel"),

("deta", "DetaModel"),

("detr", "DetrModel"),

("dinat", "DinatModel"),

@@ -580,6 +581,7 @@

("data2vec-vision", "Data2VecVisionModel"),

("deformable_detr", "DeformableDetrModel"),

("deit", "DeiTModel"),

+ ("depth_pro", "DepthProModel"),

("deta", "DetaModel"),

("detr", "DetrModel"),

("dinat", "DinatModel"),

@@ -891,6 +893,7 @@

[

# Model for depth estimation mapping

("depth_anything", "DepthAnythingForDepthEstimation"),

+ ("depth_pro", "DepthProForDepthEstimation"),

("dpt", "DPTForDepthEstimation"),

("glpn", "GLPNForDepthEstimation"),

("zoedepth", "ZoeDepthForDepthEstimation"),

diff --git a/src/transformers/models/depth_pro/__init__.py b/src/transformers/models/depth_pro/__init__.py

new file mode 100644

index 00000000000000..6fa380d6420834

--- /dev/null

+++ b/src/transformers/models/depth_pro/__init__.py

@@ -0,0 +1,72 @@

+# Copyright 2024 The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+from typing import TYPE_CHECKING

+

+from ...file_utils import _LazyModule, is_torch_available, is_vision_available

+from ...utils import OptionalDependencyNotAvailable

+

+

+_import_structure = {"configuration_depth_pro": ["DepthProConfig"]}

+

+try:

+ if not is_vision_available():

+ raise OptionalDependencyNotAvailable()

+except OptionalDependencyNotAvailable:

+ pass

+else:

+ _import_structure["image_processing_depth_pro"] = ["DepthProImageProcessor"]

+ _import_structure["image_processing_depth_pro_fast"] = ["DepthProImageProcessorFast"]

+

+try:

+ if not is_torch_available():

+ raise OptionalDependencyNotAvailable()

+except OptionalDependencyNotAvailable:

+ pass

+else:

+ _import_structure["modeling_depth_pro"] = [

+ "DepthProForDepthEstimation",

+ "DepthProModel",

+ "DepthProPreTrainedModel",

+ ]

+

+

+if TYPE_CHECKING:

+ from .configuration_depth_pro import DepthProConfig

+

+ try:

+ if not is_vision_available():

+ raise OptionalDependencyNotAvailable()

+ except OptionalDependencyNotAvailable:

+ pass

+ else:

+ from .image_processing_depth_pro import DepthProImageProcessor

+ from .image_processing_depth_pro_fast import DepthProImageProcessorFast

+

+ try:

+ if not is_torch_available():

+ raise OptionalDependencyNotAvailable()

+ except OptionalDependencyNotAvailable:

+ pass

+ else:

+ from .modeling_depth_pro import (

+ DepthProForDepthEstimation,

+ DepthProModel,

+ DepthProPreTrainedModel,

+ )

+

+

+else:

+ import sys

+

+ sys.modules[__name__] = _LazyModule(__name__, globals()["__file__"], _import_structure, module_spec=__spec__)

diff --git a/src/transformers/models/depth_pro/configuration_depth_pro.py b/src/transformers/models/depth_pro/configuration_depth_pro.py

new file mode 100644

index 00000000000000..206c01eff191bd

--- /dev/null

+++ b/src/transformers/models/depth_pro/configuration_depth_pro.py

@@ -0,0 +1,203 @@

+# coding=utf-8

+# Copyright 2024 The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""DepthPro model configuration"""

+

+from ...configuration_utils import PretrainedConfig

+from ...utils import logging

+

+

+logger = logging.get_logger(__name__)

+

+

+class DepthProConfig(PretrainedConfig):

+ r"""

+ This is the configuration class to store the configuration of a [`DepthProModel`]. It is used to instantiate a

+ DepthPro model according to the specified arguments, defining the model architecture. Instantiating a configuration

+ with the defaults will yield a similar configuration to that of the DepthPro

+ [apple/DepthPro](https://huggingface.co/apple/DepthPro) architecture.

+

+ Configuration objects inherit from [`PretrainedConfig`] and can be used to control the model outputs. Read the

+ documentation from [`PretrainedConfig`] for more information.

+

+ Args:

+ hidden_size (`int`, *optional*, defaults to 1024):

+ Dimensionality of the encoder layers and the pooler layer.

+ fusion_hidden_size (`int`, *optional*, defaults to 256):

+ The number of channels before fusion.

+ num_hidden_layers (`int`, *optional*, defaults to 24):

+ Number of hidden layers in the Transformer encoder.

+ num_attention_heads (`int`, *optional*, defaults to 16):

+ Number of attention heads for each attention layer in the Transformer encoder.

+ mlp_ratio (`int`, *optional*, defaults to 4):

+ Ratio of the hidden size of the MLPs relative to the `hidden_size`.

+ hidden_act (`str` or `function`, *optional*, defaults to `"gelu"`):

+ The non-linear activation function (function or string) in the encoder and pooler. If string, `"gelu"`,

+ `"relu"`, `"selu"` and `"gelu_new"` are supported.

+ hidden_dropout_prob (`float`, *optional*, defaults to 0.0):

+ The dropout probability for all fully connected layers in the embeddings, encoder, and pooler.

+ attention_probs_dropout_prob (`float`, *optional*, defaults to 0.0):

+ The dropout ratio for the attention probabilities.

+ initializer_range (`float`, *optional*, defaults to 0.02):

+ The standard deviation of the truncated_normal_initializer for initializing all weight matrices.

+ layer_norm_eps (`float`, *optional*, defaults to 1e-06):

+ The epsilon used by the layer normalization layers.

+ patch_size (`int`, *optional*, defaults to 384):

+ The size (resolution) of each patch.

+ num_channels (`int`, *optional*, defaults to 3):

+ The number of input channels.

+ patch_embeddings_size (`int`, *optional*, defaults to 16):

+ kernel_size and stride for convolution in PatchEmbeddings.

+ qkv_bias (`bool`, *optional*, defaults to `True`):

+ Whether to add a bias to the queries, keys and values.

+ layerscale_value (`float`, *optional*, defaults to 1.0):

+ Initial value to use for layer scale.

+ drop_path_rate (`float`, *optional*, defaults to 0.0):

+ Stochastic depth rate per sample (when applied in the main path of residual layers).

+ use_swiglu_ffn (`bool`, *optional*, defaults to `False`):

+ Whether to use the SwiGLU feedforward neural network.

+ intermediate_hook_ids (`List[int]`, *optional*, defaults to `[11, 5]`):

+ Indices of the intermediate hidden states from the patch encoder to use for fusion.

+ intermediate_feature_dims (`List[int]`, *optional*, defaults to `[256, 256]`):

+ Hidden state dimensions during upsampling for each intermediate hidden state in `intermediate_hook_ids`.

+ scaled_images_ratios (`List[float]`, *optional*, defaults to `[0.25, 0.5, 1]`):

+ Ratios of scaled images to be used by the patch encoder.

+ scaled_images_overlap_ratios (`List[float]`, *optional*, defaults to `[0.0, 0.5, 0.25]`):

+ Overlap ratios between patches for each scaled image in `scaled_images_ratios`.

+ scaled_images_feature_dims (`List[int]`, *optional*, defaults to `[1024, 1024, 512]`):

+ Hidden state dimensions during upsampling for each scaled image in `scaled_images_ratios`.

+ use_batch_norm_in_fusion_residual (`bool`, *optional*, defaults to `False`):

+ Whether to use batch normalization in the pre-activate residual units of the fusion blocks.

+ use_bias_in_fusion_residual (`bool`, *optional*, defaults to `True`):

+ Whether to use bias in the pre-activate residual units of the fusion blocks.

+ use_fov_model (`bool`, *optional*, defaults to `True`):

+ Whether to use `DepthProFOVModel` to generate the field of view.

+ num_fov_head_layers (`int`, *optional*, defaults to 2):

+ Number of convolution layers in the head of `DepthProFOVModel`.

+

+ Example:

+

+ ```python

+ >>> from transformers import DepthProConfig, DepthProModel

+

+ >>> # Initializing a DepthPro apple/DepthPro style configuration

+ >>> configuration = DepthProConfig()

+

+ >>> # Initializing a model (with random weights) from the apple/DepthPro style configuration

+ >>> model = DepthProModel(configuration)

+

+ >>> # Accessing the model configuration

+ >>> configuration = model.config

+ ```"""

+

+ model_type = "depth_pro"

+

+ def __init__(

+ self,

+ hidden_size=1024,

+ fusion_hidden_size=256,

+ num_hidden_layers=24,

+ num_attention_heads=16,

+ mlp_ratio=4,

+ hidden_act="gelu",

+ hidden_dropout_prob=0.0,

+ attention_probs_dropout_prob=0.0,

+ initializer_range=0.02,

+ layer_norm_eps=1e-6,

+ patch_size=384,

+ num_channels=3,

+ patch_embeddings_size=16,

+ qkv_bias=True,

+ layerscale_value=1.0,

+ drop_path_rate=0.0,

+ use_swiglu_ffn=False,

+ intermediate_hook_ids=[11, 5],

+ intermediate_feature_dims=[256, 256],

+ scaled_images_ratios=[0.25, 0.5, 1],

+ scaled_images_overlap_ratios=[0.0, 0.5, 0.25],

+ scaled_images_feature_dims=[1024, 1024, 512],

+ use_batch_norm_in_fusion_residual=False,

+ use_bias_in_fusion_residual=True,

+ use_fov_model=True,

+ num_fov_head_layers=2,

+ **kwargs,

+ ):

+ super().__init__(**kwargs)

+

+ # scaled_images_ratios is sorted

+ if scaled_images_ratios != sorted(scaled_images_ratios):

+ raise ValueError(

+ f"Values in scaled_images_ratios={scaled_images_ratios} " "should be sorted from low to high"

+ )

+

+ # patch_size should be a divisible by patch_embeddings_size

+ # else it raises an exception in DepthProViTPatchEmbeddings

+ if patch_size % patch_embeddings_size != 0:

+ raise ValueError(

+ f"patch_size={patch_size} should be divisible " f"by patch_embeddings_size={patch_embeddings_size}."

+ )

+

+ # scaled_images_ratios, scaled_images_overlap_ratios, scaled_images_feature_dims should be consistent

+ if not (len(scaled_images_ratios) == len(scaled_images_overlap_ratios) == len(scaled_images_feature_dims)):

+ raise ValueError(

+ f"len(scaled_images_ratios)={len(scaled_images_ratios)} and "

+ f"len(scaled_images_overlap_ratios)={len(scaled_images_overlap_ratios)} and "

+ f"len(scaled_images_feature_dims)={len(scaled_images_feature_dims)}, "

+ f"should match in config."

+ )

+

+ # intermediate_hook_ids, intermediate_feature_dims should be consistent

+ if not (len(intermediate_hook_ids) == len(intermediate_feature_dims)):

+ raise ValueError(

+ f"len(intermediate_hook_ids)={len(intermediate_hook_ids)} and "

+ f"len(intermediate_feature_dims)={len(intermediate_feature_dims)}, "

+ f"should match in config."

+ )

+

+ # fusion_hidden_size should be consistent with num_fov_head_layers

+ if fusion_hidden_size // 2**num_fov_head_layers == 0:

+ raise ValueError(

+ f"fusion_hidden_size={fusion_hidden_size} should be consistent with num_fov_head_layers={num_fov_head_layers} "

+ "i.e fusion_hidden_size // 2**num_fov_head_layers > 0"

+ )

+

+ self.hidden_size = hidden_size

+ self.fusion_hidden_size = fusion_hidden_size

+ self.num_hidden_layers = num_hidden_layers

+ self.num_attention_heads = num_attention_heads

+ self.mlp_ratio = mlp_ratio

+ self.hidden_act = hidden_act

+ self.hidden_dropout_prob = hidden_dropout_prob

+ self.attention_probs_dropout_prob = attention_probs_dropout_prob

+ self.initializer_range = initializer_range

+ self.layer_norm_eps = layer_norm_eps

+ self.patch_size = patch_size

+ self.num_channels = num_channels

+ self.patch_embeddings_size = patch_embeddings_size

+ self.qkv_bias = qkv_bias

+ self.layerscale_value = layerscale_value

+ self.drop_path_rate = drop_path_rate

+ self.use_swiglu_ffn = use_swiglu_ffn

+ self.use_batch_norm_in_fusion_residual = use_batch_norm_in_fusion_residual

+ self.use_bias_in_fusion_residual = use_bias_in_fusion_residual

+ self.use_fov_model = use_fov_model

+ self.num_fov_head_layers = num_fov_head_layers

+ self.intermediate_hook_ids = intermediate_hook_ids

+ self.intermediate_feature_dims = intermediate_feature_dims

+ self.scaled_images_ratios = scaled_images_ratios

+ self.scaled_images_overlap_ratios = scaled_images_overlap_ratios

+ self.scaled_images_feature_dims = scaled_images_feature_dims

+

+

+__all__ = ["DepthProConfig"]

diff --git a/src/transformers/models/depth_pro/convert_depth_pro_weights_to_hf.py b/src/transformers/models/depth_pro/convert_depth_pro_weights_to_hf.py

new file mode 100644

index 00000000000000..cca89f6a8b8cac

--- /dev/null

+++ b/src/transformers/models/depth_pro/convert_depth_pro_weights_to_hf.py

@@ -0,0 +1,281 @@

+# Copyright 2024 The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import argparse

+import gc

+import os

+

+import regex as re

+import torch

+from huggingface_hub import hf_hub_download

+

+from transformers import (

+ DepthProConfig,

+ DepthProForDepthEstimation,

+ DepthProImageProcessorFast,

+)

+from transformers.image_utils import PILImageResampling

+

+

+# fmt: off

+ORIGINAL_TO_CONVERTED_KEY_MAPPING = {

+

+ # encoder and head

+ r"encoder.(patch|image)_encoder.cls_token": r"depth_pro.encoder.\1_encoder.embeddings.cls_token",

+ r"encoder.(patch|image)_encoder.pos_embed": r"depth_pro.encoder.\1_encoder.embeddings.position_embeddings",

+ r"encoder.(patch|image)_encoder.patch_embed.proj.(weight|bias)": r"depth_pro.encoder.\1_encoder.embeddings.patch_embeddings.projection.\2",

+ r"encoder.(patch|image)_encoder.blocks.(\d+).norm(\d+).(weight|bias)": r"depth_pro.encoder.\1_encoder.encoder.layer.\2.norm\3.\4",

+ r"encoder.(patch|image)_encoder.blocks.(\d+).attn.qkv.(weight|bias)": r"depth_pro.encoder.\1_encoder.encoder.layer.\2.attention.attention.(query|key|value).\3",

+ r"encoder.(patch|image)_encoder.blocks.(\d+).attn.proj.(weight|bias)": r"depth_pro.encoder.\1_encoder.encoder.layer.\2.attention.output.dense.\3",

+ r"encoder.(patch|image)_encoder.blocks.(\d+).ls(\d+).gamma": r"depth_pro.encoder.\1_encoder.encoder.layer.\2.layer_scale\3.lambda1",

+ r"encoder.(patch|image)_encoder.blocks.(\d+).mlp.fc(\d+).(weight|bias)": r"depth_pro.encoder.\1_encoder.encoder.layer.\2.mlp.fc\3.\4",

+ r"encoder.(patch|image)_encoder.norm.(weight|bias)": r"depth_pro.encoder.\1_encoder.layernorm.\2",

+ r"encoder.fuse_lowres.(weight|bias)": r"depth_pro.encoder.fuse_image_with_low_res.\1",

+ r"head.(\d+).(weight|bias)": r"head.head.\1.\2",

+

+ # fov

+ r"fov.encoder.0.cls_token": r"fov_model.encoder.embeddings.cls_token",

+ r"fov.encoder.0.pos_embed": r"fov_model.encoder.embeddings.position_embeddings",

+ r"fov.encoder.0.patch_embed.proj.(weight|bias)": r"fov_model.encoder.embeddings.patch_embeddings.projection.\1",

+ r"fov.encoder.0.blocks.(\d+).norm(\d+).(weight|bias)": r"fov_model.encoder.encoder.layer.\1.norm\2.\3",

+ r"fov.encoder.0.blocks.(\d+).attn.qkv.(weight|bias)": r"fov_model.encoder.encoder.layer.\1.attention.attention.(query|key|value).\2",

+ r"fov.encoder.0.blocks.(\d+).attn.proj.(weight|bias)": r"fov_model.encoder.encoder.layer.\1.attention.output.dense.\2",

+ r"fov.encoder.0.blocks.(\d+).ls(\d+).gamma": r"fov_model.encoder.encoder.layer.\1.layer_scale\2.lambda1",

+ r"fov.encoder.0.blocks.(\d+).mlp.fc(\d+).(weight|bias)": r"fov_model.encoder.encoder.layer.\1.mlp.fc\2.\3",

+ r"fov.encoder.0.norm.(weight|bias)": r"fov_model.encoder.layernorm.\1",

+ r"fov.downsample.(\d+).(weight|bias)": r"fov_model.global_neck.\1.\2",

+ r"fov.encoder.1.(weight|bias)": r"fov_model.encoder_neck.\1",

+ r"fov.head.head.(\d+).(weight|bias)": r"fov_model.head.\1.\2",

+

+ # upsamples (hard coded; regex is not very feasible here)

+ "encoder.upsample_latent0.0.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.5.0.weight",

+ "encoder.upsample_latent0.1.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.5.1.weight",

+ "encoder.upsample_latent0.2.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.5.2.weight",

+ "encoder.upsample_latent0.3.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.5.3.weight",

+ "encoder.upsample_latent1.0.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.4.0.weight",

+ "encoder.upsample_latent1.1.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.4.1.weight",

+ "encoder.upsample_latent1.2.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.4.2.weight",

+ "encoder.upsample0.0.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.3.0.weight",

+ "encoder.upsample0.1.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.3.1.weight",

+ "encoder.upsample1.0.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.2.0.weight",

+ "encoder.upsample1.1.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.2.1.weight",

+ "encoder.upsample2.0.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.1.0.weight",

+ "encoder.upsample2.1.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.1.1.weight",

+ "encoder.upsample_lowres.weight": "depth_pro.encoder.feature_upsample.upsample_blocks.0.0.weight",

+ "encoder.upsample_lowres.bias": "depth_pro.encoder.feature_upsample.upsample_blocks.0.0.bias",

+

+ # projections between encoder and fusion

+ r"decoder.convs.(\d+).weight": lambda match: (

+ f"depth_pro.encoder.feature_projection.projections.{4-int(match.group(1))}.weight"

+ ),

+

+ # fusion stage

+ r"decoder.fusions.(\d+).resnet(\d+).residual.(\d+).(weight|bias)": lambda match: (

+ f"fusion_stage.layers.{4-int(match.group(1))}.residual_layer{match.group(2)}.convolution{(int(match.group(3))+1)//2}.{match.group(4)}"

+ ),

+ r"decoder.fusions.(\d+).out_conv.(weight|bias)": lambda match: (

+ f"fusion_stage.layers.{4-int(match.group(1))}.projection.{match.group(2)}"

+ ),

+ r"decoder.fusions.(\d+).deconv.(weight|bias)": lambda match: (

+ f"fusion_stage.layers.{4-int(match.group(1))}.deconv.{match.group(2)}"

+ ),

+}

+# fmt: on

+

+

+def convert_old_keys_to_new_keys(state_dict_keys: dict = None):

+ output_dict = {}

+ if state_dict_keys is not None:

+ old_text = "\n".join(state_dict_keys)

+ new_text = old_text

+ for pattern, replacement in ORIGINAL_TO_CONVERTED_KEY_MAPPING.items():

+ if replacement is None:

+ new_text = re.sub(pattern, "", new_text) # an empty line

+ continue

+ new_text = re.sub(pattern, replacement, new_text)

+ output_dict = dict(zip(old_text.split("\n"), new_text.split("\n")))

+ return output_dict

+

+

+def get_qkv_state_dict(key, parameter):

+ """

+ new key which looks like this

+ xxxx.(q|k|v).xxx (m, n)

+

+ is converted to

+ xxxx.q.xxxx (m//3, n)

+ xxxx.k.xxxx (m//3, n)

+ xxxx.v.xxxx (m//3, n)

+ """

+ qkv_state_dict = {}

+ placeholder = re.search(r"(\(.*?\))", key).group(1) # finds "(query|key|value)"

+ replacements_keys = placeholder[1:-1].split("|") # creates ['query', 'key', 'value']

+ replacements_vals = torch.split(

+ parameter, split_size_or_sections=parameter.size(0) // len(replacements_keys), dim=0

+ )

+ for replacement_key, replacement_val in zip(replacements_keys, replacements_vals):

+ qkv_state_dict[key.replace(placeholder, replacement_key)] = replacement_val

+ return qkv_state_dict

+

+

+def write_model(

+ hf_repo_id: str,

+ output_dir: str,

+ safe_serialization: bool = True,

+):

+ os.makedirs(output_dir, exist_ok=True)

+

+ # ------------------------------------------------------------

+ # Create and save config

+ # ------------------------------------------------------------

+

+ # create config

+ config = DepthProConfig(

+ # this config is same as the default config and used for pre-trained weights

+ hidden_size=1024,

+ fusion_hidden_size=256,

+ num_hidden_layers=24,

+ num_attention_heads=16,

+ mlp_ratio=4,

+ hidden_act="gelu",

+ hidden_dropout_prob=0.0,

+ attention_probs_dropout_prob=0.0,

+ initializer_range=0.02,

+ layer_norm_eps=1e-6,

+ patch_size=384,

+ num_channels=3,

+ patch_embeddings_size=16,

+ qkv_bias=True,

+ layerscale_value=1.0,

+ drop_path_rate=0.0,

+ use_swiglu_ffn=False,

+ apply_layernorm=True,

+ reshape_hidden_states=True,

+ intermediate_hook_ids=[11, 5],

+ intermediate_feature_dims=[256, 256],

+ scaled_images_ratios=[0.25, 0.5, 1],

+ scaled_images_overlap_ratios=[0.0, 0.5, 0.25],

+ scaled_images_feature_dims=[1024, 1024, 512],

+ use_batch_norm_in_fusion_residual=False,

+ use_bias_in_fusion_residual=True,

+ use_fov_model=True,

+ num_fov_head_layers=2,

+ )

+

+ # save config

+ config.save_pretrained(output_dir)

+ print("Model config saved successfully...")

+

+ # ------------------------------------------------------------

+ # Convert weights

+ # ------------------------------------------------------------

+

+ # download and load state_dict from hf repo

+ file_path = hf_hub_download(hf_repo_id, "depth_pro.pt")

+ # file_path = "/home/geetu/work/hf/depth_pro/depth_pro.pt" # when you already have the files locally

+ loaded = torch.load(file_path, weights_only=True)

+

+ print("Converting model...")

+ all_keys = list(loaded.keys())

+ new_keys = convert_old_keys_to_new_keys(all_keys)

+

+ state_dict = {}

+ for key in all_keys:

+ new_key = new_keys[key]

+ current_parameter = loaded.pop(key)

+

+ if "qkv" in key:

+ qkv_state_dict = get_qkv_state_dict(new_key, current_parameter)

+ state_dict.update(qkv_state_dict)

+ else:

+ state_dict[new_key] = current_parameter

+

+ print("Loading the checkpoint in a DepthPro model.")

+ model = DepthProForDepthEstimation(config)

+ model.load_state_dict(state_dict, strict=True, assign=True)

+ print("Checkpoint loaded successfully.")

+

+ print("Saving the model.")

+ model.save_pretrained(output_dir, safe_serialization=safe_serialization)

+ del state_dict, model

+

+ # Safety check: reload the converted model

+ gc.collect()

+ print("Reloading the model to check if it's saved correctly.")

+ model = DepthProForDepthEstimation.from_pretrained(output_dir, device_map="auto")

+ print("Model reloaded successfully.")

+ return model

+

+

+def write_image_processor(output_dir: str):

+ image_processor = DepthProImageProcessorFast(

+ do_resize=True,

+ size={"height": 1536, "width": 1536},

+ resample=PILImageResampling.BILINEAR,

+ antialias=False,

+ do_rescale=True,

+ rescale_factor=1 / 255,

+ do_normalize=True,

+ image_mean=0.5,

+ image_std=0.5,

+ )

+ image_processor.save_pretrained(output_dir)

+ return image_processor

+

+

+def main():

+ parser = argparse.ArgumentParser()

+ parser.add_argument(

+ "--hf_repo_id",

+ default="apple/DepthPro",

+ help="Location of official weights from apple on HF",

+ )

+ parser.add_argument(

+ "--output_dir",

+ default="apple_DepthPro",

+ help="Location to write the converted model and processor",

+ )

+ parser.add_argument(

+ "--safe_serialization", default=True, type=bool, help="Whether or not to save using `safetensors`."

+ )

+ parser.add_argument(

+ "--push_to_hub",

+ action=argparse.BooleanOptionalAction,

+ help="Whether or not to push the converted model to the huggingface hub.",

+ )

+ parser.add_argument(

+ "--hub_repo_id",

+ default="geetu040/DepthPro",

+ help="Huggingface hub repo to write the converted model and processor",

+ )

+ args = parser.parse_args()

+

+ model = write_model(

+ hf_repo_id=args.hf_repo_id,

+ output_dir=args.output_dir,

+ safe_serialization=args.safe_serialization,

+ )

+

+ image_processor = write_image_processor(

+ output_dir=args.output_dir,

+ )

+

+ if args.push_to_hub:

+ print("Pushing to hub...")

+ model.push_to_hub(args.hub_repo_id)

+ image_processor.push_to_hub(args.hub_repo_id)

+

+

+if __name__ == "__main__":

+ main()

diff --git a/src/transformers/models/depth_pro/image_processing_depth_pro.py b/src/transformers/models/depth_pro/image_processing_depth_pro.py

new file mode 100644

index 00000000000000..76a12577dd6330

--- /dev/null

+++ b/src/transformers/models/depth_pro/image_processing_depth_pro.py

@@ -0,0 +1,406 @@

+# coding=utf-8

+# Copyright 2024 The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""Image processor class for DepthPro."""

+

+from typing import TYPE_CHECKING, Dict, List, Optional, Tuple, Union

+

+import numpy as np

+

+

+if TYPE_CHECKING:

+ from ...modeling_outputs import DepthProDepthEstimatorOutput

+

+from ...image_processing_utils import BaseImageProcessor, BatchFeature, get_size_dict

+from ...image_transforms import to_channel_dimension_format

+from ...image_utils import (

+ IMAGENET_STANDARD_MEAN,

+ IMAGENET_STANDARD_STD,

+ ChannelDimension,

+ ImageInput,

+ PILImageResampling,

+ infer_channel_dimension_format,

+ is_scaled_image,

+ is_torch_available,

+ make_list_of_images,

+ pil_torch_interpolation_mapping,

+ to_numpy_array,

+ valid_images,

+)

+from ...utils import (

+ TensorType,

+ filter_out_non_signature_kwargs,

+ logging,

+ requires_backends,

+)

+

+

+if is_torch_available():

+ import torch

+

+

+logger = logging.get_logger(__name__)

+

+

+class DepthProImageProcessor(BaseImageProcessor):

+ r"""

+ Constructs a DepthPro image processor.

+

+ Args:

+ do_resize (`bool`, *optional*, defaults to `True`):

+ Whether to resize the image's (height, width) dimensions to the specified `(size["height"],

+ size["width"])`. Can be overridden by the `do_resize` parameter in the `preprocess` method.

+ size (`dict`, *optional*, defaults to `{"height": 1536, "width": 1536}`):

+ Size of the output image after resizing. Can be overridden by the `size` parameter in the `preprocess`

+ method.

+ resample (`PILImageResampling`, *optional*, defaults to `Resampling.BILINEAR`):

+ Resampling filter to use if resizing the image. Can be overridden by the `resample` parameter in the

+ `preprocess` method.

+ antialias (`bool`, *optional*, defaults to `False`):

+ Whether to apply an anti-aliasing filter when resizing the image. It only affects tensors with

+ bilinear or bicubic modes and it is ignored otherwise.

+ do_rescale (`bool`, *optional*, defaults to `True`):

+ Whether to rescale the image by the specified scale `rescale_factor`. Can be overridden by the `do_rescale`

+ parameter in the `preprocess` method.

+ rescale_factor (`int` or `float`, *optional*, defaults to `1/255`):

+ Scale factor to use if rescaling the image. Can be overridden by the `rescale_factor` parameter in the

+ `preprocess` method.

+ do_normalize (`bool`, *optional*, defaults to `True`):

+ Whether to normalize the image. Can be overridden by the `do_normalize` parameter in the `preprocess`

+ method.

+ image_mean (`float` or `List[float]`, *optional*, defaults to `IMAGENET_STANDARD_MEAN`):

+ Mean to use if normalizing the image. This is a float or list of floats the length of the number of

+ channels in the image. Can be overridden by the `image_mean` parameter in the `preprocess` method.

+ image_std (`float` or `List[float]`, *optional*, defaults to `IMAGENET_STANDARD_STD`):

+ Standard deviation to use if normalizing the image. This is a float or list of floats the length of the

+ number of channels in the image. Can be overridden by the `image_std` parameter in the `preprocess` method.

+ """

+

+ model_input_names = ["pixel_values"]

+

+ def __init__(

+ self,

+ do_resize: bool = True,

+ size: Optional[Dict[str, int]] = None,

+ resample: PILImageResampling = PILImageResampling.BILINEAR,

+ antialias: bool = False,

+ do_rescale: bool = True,

+ rescale_factor: Union[int, float] = 1 / 255,

+ do_normalize: bool = True,

+ image_mean: Optional[Union[float, List[float]]] = None,

+ image_std: Optional[Union[float, List[float]]] = None,

+ **kwargs,

+ ):

+ super().__init__(**kwargs)

+ size = size if size is not None else {"height": 1536, "width": 1536}

+ size = get_size_dict(size)

+ self.do_resize = do_resize

+ self.do_rescale = do_rescale

+ self.do_normalize = do_normalize

+ self.size = size

+ self.resample = resample

+ self.antialias = antialias

+ self.rescale_factor = rescale_factor

+ self.image_mean = image_mean if image_mean is not None else IMAGENET_STANDARD_MEAN

+ self.image_std = image_std if image_std is not None else IMAGENET_STANDARD_STD

+

+ def resize(

+ self,

+ image: np.ndarray,

+ size: Dict[str, int],

+ resample: PILImageResampling = PILImageResampling.BILINEAR,

+ antialias: bool = False,

+ data_format: Optional[Union[str, ChannelDimension]] = None,

+ input_data_format: Optional[Union[str, ChannelDimension]] = None,

+ **kwargs,

+ ) -> np.ndarray:

+ """

+ Resize an image to `(size["height"], size["width"])`.

+

+ Args:

+ image (`np.ndarray`):

+ Image to resize.

+ size (`Dict[str, int]`):

+ Dictionary in the format `{"height": int, "width": int}` specifying the size of the output image.

+ resample (`PILImageResampling`, *optional*, defaults to `PILImageResampling.BILINEAR`):

+ `PILImageResampling` filter to use when resizing the image e.g. `PILImageResampling.BILINEAR`.

+ antialias (`bool`, *optional*, defaults to `False`):

+ Whether to apply an anti-aliasing filter when resizing the image. It only affects tensors with

+ bilinear or bicubic modes and it is ignored otherwise.

+ data_format (`ChannelDimension` or `str`, *optional*):

+ The channel dimension format for the output image. If unset, the channel dimension format of the input

+ image is used. Can be one of:

+ - `"channels_first"` or `ChannelDimension.FIRST`: image in (num_channels, height, width) format.

+ - `"channels_last"` or `ChannelDimension.LAST`: image in (height, width, num_channels) format.

+ - `"none"` or `ChannelDimension.NONE`: image in (height, width) format.

+ input_data_format (`ChannelDimension` or `str`, *optional*):

+ The channel dimension format for the input image. If unset, the channel dimension format is inferred

+ from the input image. Can be one of:

+ - `"channels_first"` or `ChannelDimension.FIRST`: image in (num_channels, height, width) format.

+ - `"channels_last"` or `ChannelDimension.LAST`: image in (height, width, num_channels) format.

+ - `"none"` or `ChannelDimension.NONE`: image in (height, width) format.

+

+ Returns:

+ `np.ndarray`: The resized images.

+ """

+ requires_backends(self, "torch")

+

+ size = get_size_dict(size)

+ if "height" not in size or "width" not in size:

+ raise ValueError(f"The `size` dictionary must contain the keys `height` and `width`. Got {size.keys()}")

+ output_size = (size["height"], size["width"])

+

+ # we use torch interpolation instead of image.resize because DepthProImageProcessor

+ # rescales, then normalizes, which may cause some values to become negative, before resizing the image.

+ # image.resize expects all values to be in range [0, 1] or [0, 255] and throws an exception otherwise,

+ # however pytorch interpolation works with negative values.

+ # relevant issue here: https://github.com/huggingface/transformers/issues/34920

+ return (

+ torch.nn.functional.interpolate(

+ # input should be (B, C, H, W)

+ input=torch.from_numpy(image).unsqueeze(0),

+ size=output_size,

+ mode=pil_torch_interpolation_mapping[resample].value,

+ antialias=antialias,

+ )

+ .squeeze(0)

+ .numpy()

+ )

+

+ def _validate_input_arguments(

+ self,

+ do_resize: bool,

+ size: Dict[str, int],

+ resample: PILImageResampling,

+ antialias: bool,

+ do_rescale: bool,

+ rescale_factor: float,

+ do_normalize: bool,

+ image_mean: Union[float, List[float]],

+ image_std: Union[float, List[float]],

+ data_format: Union[str, ChannelDimension],

+ ):

+ if do_resize and None in (size, resample, antialias):

+ raise ValueError("Size, resample and antialias must be specified if do_resize is True.")

+

+ if do_rescale and rescale_factor is None:

+ raise ValueError("Rescale factor must be specified if do_rescale is True.")

+

+ if do_normalize and None in (image_mean, image_std):

+ raise ValueError("Image mean and standard deviation must be specified if do_normalize is True.")

+

+ @filter_out_non_signature_kwargs()

+ def preprocess(

+ self,

+ images: ImageInput,

+ do_resize: Optional[bool] = None,

+ size: Optional[Dict[str, int]] = None,

+ resample: Optional[PILImageResampling] = None,

+ antialias: Optional[bool] = None,

+ do_rescale: Optional[bool] = None,

+ rescale_factor: Optional[float] = None,

+ do_normalize: Optional[bool] = None,

+ image_mean: Optional[Union[float, List[float]]] = None,

+ image_std: Optional[Union[float, List[float]]] = None,

+ return_tensors: Optional[Union[str, TensorType]] = None,

+ data_format: Union[str, ChannelDimension] = ChannelDimension.FIRST,

+ input_data_format: Optional[Union[str, ChannelDimension]] = None,

+ ):

+ """

+ Preprocess an image or batch of images.

+

+ Args:

+ images (`ImageInput`):

+ Image to preprocess. Expects a single or batch of images with pixel values ranging from 0 to 255. If

+ passing in images with pixel values between 0 and 1, set `do_rescale=False`.

+ do_resize (`bool`, *optional*, defaults to `self.do_resize`):

+ Whether to resize the image.

+ size (`Dict[str, int]`, *optional*, defaults to `self.size`):

+ Dictionary in the format `{"height": h, "width": w}` specifying the size of the output image after

+ resizing.

+ resample (`PILImageResampling` filter, *optional*, defaults to `self.resample`):

+ `PILImageResampling` filter to use if resizing the image e.g. `PILImageResampling.BILINEAR`. Only has

+ an effect if `do_resize` is set to `True`.

+ antialias (`bool`, *optional*, defaults to `False`):

+ Whether to apply an anti-aliasing filter when resizing the image. It only affects tensors with

+ bilinear or bicubic modes and it is ignored otherwise.

+ do_rescale (`bool`, *optional*, defaults to `self.do_rescale`):

+ Whether to rescale the image values between [0 - 1].

+ rescale_factor (`float`, *optional*, defaults to `self.rescale_factor`):

+ Rescale factor to rescale the image by if `do_rescale` is set to `True`.

+ do_normalize (`bool`, *optional*, defaults to `self.do_normalize`):

+ Whether to normalize the image.

+ image_mean (`float` or `List[float]`, *optional*, defaults to `self.image_mean`):

+ Image mean to use if `do_normalize` is set to `True`.

+ image_std (`float` or `List[float]`, *optional*, defaults to `self.image_std`):

+ Image standard deviation to use if `do_normalize` is set to `True`.

+ return_tensors (`str` or `TensorType`, *optional*):

+ The type of tensors to return. Can be one of:

+ - Unset: Return a list of `np.ndarray`.

+ - `TensorType.TENSORFLOW` or `'tf'`: Return a batch of type `tf.Tensor`.

+ - `TensorType.PYTORCH` or `'pt'`: Return a batch of type `torch.Tensor`.

+ - `TensorType.NUMPY` or `'np'`: Return a batch of type `np.ndarray`.

+ - `TensorType.JAX` or `'jax'`: Return a batch of type `jax.numpy.ndarray`.

+ data_format (`ChannelDimension` or `str`, *optional*, defaults to `ChannelDimension.FIRST`):

+ The channel dimension format for the output image. Can be one of:

+ - `"channels_first"` or `ChannelDimension.FIRST`: image in (num_channels, height, width) format.

+ - `"channels_last"` or `ChannelDimension.LAST`: image in (height, width, num_channels) format.

+ - Unset: Use the channel dimension format of the input image.

+ input_data_format (`ChannelDimension` or `str`, *optional*):

+ The channel dimension format for the input image. If unset, the channel dimension format is inferred

+ from the input image. Can be one of:

+ - `"channels_first"` or `ChannelDimension.FIRST`: image in (num_channels, height, width) format.

+ - `"channels_last"` or `ChannelDimension.LAST`: image in (height, width, num_channels) format.

+ - `"none"` or `ChannelDimension.NONE`: image in (height, width) format.

+ """

+ do_resize = do_resize if do_resize is not None else self.do_resize

+ do_rescale = do_rescale if do_rescale is not None else self.do_rescale

+ do_normalize = do_normalize if do_normalize is not None else self.do_normalize

+ resample = resample if resample is not None else self.resample

+ antialias = antialias if antialias is not None else self.antialias

+ rescale_factor = rescale_factor if rescale_factor is not None else self.rescale_factor

+ image_mean = image_mean if image_mean is not None else self.image_mean

+ image_std = image_std if image_std is not None else self.image_std

+

+ size = size if size is not None else self.size

+

+ images = make_list_of_images(images)

+

+ if not valid_images(images):

+ raise ValueError(

+ "Invalid image type. Must be of type PIL.Image.Image, numpy.ndarray, "

+ "torch.Tensor, tf.Tensor or jax.ndarray."

+ )

+ self._validate_input_arguments(

+ do_resize=do_resize,

+ size=size,

+ resample=resample,

+ antialias=antialias,

+ do_rescale=do_rescale,

+ rescale_factor=rescale_factor,

+ do_normalize=do_normalize,

+ image_mean=image_mean,

+ image_std=image_std,

+ data_format=data_format,

+ )

+

+ # All transformations expect numpy arrays.

+ images = [to_numpy_array(image) for image in images]

+

+ if is_scaled_image(images[0]) and do_rescale:

+ logger.warning_once(

+ "It looks like you are trying to rescale already rescaled images. If the input"

+ " images have pixel values between 0 and 1, set `do_rescale=False` to avoid rescaling them again."

+ )

+

+ if input_data_format is None:

+ # We assume that all images have the same channel dimension format.

+ input_data_format = infer_channel_dimension_format(images[0])

+

+ all_images = []

+ for image in images:

+ if do_rescale:

+ image = self.rescale(image=image, scale=rescale_factor, input_data_format=input_data_format)

+

+ if do_normalize:

+ image = self.normalize(

+ image=image, mean=image_mean, std=image_std, input_data_format=input_data_format

+ )

+

+ # depth-pro rescales and normalizes the image before resizing it

+ # uses torch interpolation which requires ChannelDimension.FIRST

+ if do_resize:

+ image = to_channel_dimension_format(image, ChannelDimension.FIRST, input_channel_dim=input_data_format)

+ image = self.resize(image=image, size=size, resample=resample, antialias=antialias)

+ image = to_channel_dimension_format(image, data_format, input_channel_dim=ChannelDimension.FIRST)

+ else:

+ image = to_channel_dimension_format(image, data_format, input_channel_dim=input_data_format)

+

+ all_images.append(image)

+

+ data = {"pixel_values": all_images}

+ return BatchFeature(data=data, tensor_type=return_tensors)

+

+ def post_process_depth_estimation(

+ self,

+ outputs: "DepthProDepthEstimatorOutput",

+ target_sizes: Optional[Union[TensorType, List[Tuple[int, int]], None]] = None,

+ ) -> Dict[str, List[TensorType]]:

+ """

+ Post-processes the raw depth predictions from the model to generate final depth predictions and optionally

+ resizes them to specified target sizes. This function supports scaling based on the field of view (FoV)

+ and adjusts depth values accordingly.

+

+ Args:

+ outputs ([`DepthProDepthEstimatorOutput`]):

+ Raw outputs of the model.

+ target_sizes (`Optional[Union[TensorType, List[Tuple[int, int]], None]]`, *optional*, defaults to `None`):

+ Target sizes to resize the depth predictions. Can be a tensor of shape `(batch_size, 2)`

+ or a list of tuples `(height, width)` for each image in the batch. If `None`, no resizing

+ is performed.

+

+ Returns:

+ `List[Dict[str, TensorType]]`: A list of dictionaries of tensors representing the processed depth

+ predictions.

+

+ Raises:

+ `ValueError`:

+ If the lengths of `predicted_depths`, `fovs`, or `target_sizes` are mismatched.

+ """

+ requires_backends(self, "torch")

+

+ predicted_depth = outputs.predicted_depth

+ fov = outputs.fov

+

+ batch_size = len(predicted_depth)

+

+ if target_sizes is not None and batch_size != len(target_sizes):

+ raise ValueError(

+ "Make sure that you pass in as many fov values as the batch dimension of the predicted depth"

+ )

+

+ results = []

+ fov = [None] * batch_size if fov is None else fov

+ target_sizes = [None] * batch_size if target_sizes is None else target_sizes

+ for depth, fov_value, target_size in zip(predicted_depth, fov, target_sizes):

+ if target_size is not None:

+ # scale image w.r.t fov

+ if fov_value is not None:

+ width = target_size[1]

+ fov_value = 0.5 * width / torch.tan(0.5 * torch.deg2rad(fov_value))

+ depth = depth * width / fov_value

+

+ # interpolate

+ depth = torch.nn.functional.interpolate(

+ # input should be (B, C, H, W)

+ input=depth.unsqueeze(0).unsqueeze(1),

+ size=target_size,

+ mode=pil_torch_interpolation_mapping[self.resample].value,

+ antialias=self.antialias,

+ ).squeeze()

+

+ # inverse the depth

+ depth = 1.0 / torch.clamp(depth, min=1e-4, max=1e4)

+

+ results.append(

+ {

+ "predicted_depth": depth,

+ "fov": fov_value,

+ }

+ )

+

+ return results

+

+

+__all__ = ["DepthProImageProcessor"]

diff --git a/src/transformers/models/depth_pro/image_processing_depth_pro_fast.py b/src/transformers/models/depth_pro/image_processing_depth_pro_fast.py

new file mode 100644

index 00000000000000..521e5b8a06282e

--- /dev/null

+++ b/src/transformers/models/depth_pro/image_processing_depth_pro_fast.py

@@ -0,0 +1,386 @@

+# coding=utf-8

+# Copyright 2024 The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""Fast Image processor class for DepthPro."""

+

+import functools

+from typing import TYPE_CHECKING, Dict, List, Optional, Tuple, Union

+

+

+if TYPE_CHECKING:

+ from ...modeling_outputs import DepthProDepthEstimatorOutput

+

+from ...image_processing_base import BatchFeature

+from ...image_processing_utils import get_size_dict

+from ...image_processing_utils_fast import BaseImageProcessorFast, SizeDict

+from ...image_transforms import FusedRescaleNormalize, NumpyToTensor, Rescale

+from ...image_utils import (

+ IMAGENET_STANDARD_MEAN,

+ IMAGENET_STANDARD_STD,

+ ChannelDimension,

+ ImageInput,

+ ImageType,

+ PILImageResampling,

+ get_image_type,

+ make_list_of_images,

+ pil_torch_interpolation_mapping,

+)

+from ...utils import TensorType, logging, requires_backends

+from ...utils.import_utils import is_torch_available, is_torchvision_available

+

+

+logger = logging.get_logger(__name__)

+

+

+if is_torch_available():

+ import torch

+

+

+if is_torchvision_available():

+ from torchvision.transforms import Compose, Normalize, PILToTensor, Resize

+

+

+class DepthProImageProcessorFast(BaseImageProcessorFast):

+ r"""

+ Constructs a DepthPro image processor.

+

+ Args:

+ do_resize (`bool`, *optional*, defaults to `True`):

+ Whether to resize the image's (height, width) dimensions to the specified `(size["height"],

+ size["width"])`. Can be overridden by the `do_resize` parameter in the `preprocess` method.

+ size (`dict`, *optional*, defaults to `{"height": 1536, "width": 1536}`):

+ Size of the output image after resizing. Can be overridden by the `size` parameter in the `preprocess`

+ method.

+ resample (`PILImageResampling`, *optional*, defaults to `Resampling.BILINEAR`):

+ Resampling filter to use if resizing the image. Can be overridden by the `resample` parameter in the

+ `preprocess` method.

+ antialias (`bool`, *optional*, defaults to `False`):

+ Whether to apply an anti-aliasing filter when resizing the image. It only affects tensors with

+ bilinear or bicubic modes and it is ignored otherwise.

+ do_rescale (`bool`, *optional*, defaults to `True`):

+ Whether to rescale the image by the specified scale `rescale_factor`. Can be overridden by the `do_rescale`

+ parameter in the `preprocess` method.

+ rescale_factor (`int` or `float`, *optional*, defaults to `1/255`):

+ Scale factor to use if rescaling the image. Can be overridden by the `rescale_factor` parameter in the

+ `preprocess` method.

+ do_normalize (`bool`, *optional*, defaults to `True`):

+ Whether to normalize the image. Can be overridden by the `do_normalize` parameter in the `preprocess`

+ method.

+ image_mean (`float` or `List[float]`, *optional*, defaults to `IMAGENET_STANDARD_MEAN`):

+ Mean to use if normalizing the image. This is a float or list of floats the length of the number of

+ channels in the image. Can be overridden by the `image_mean` parameter in the `preprocess` method.

+ image_std (`float` or `List[float]`, *optional*, defaults to `IMAGENET_STANDARD_STD`):

+ Standard deviation to use if normalizing the image. This is a float or list of floats the length of the

+ number of channels in the image. Can be overridden by the `image_std` parameter in the `preprocess` method.

+ """

+

+ model_input_names = ["pixel_values"]

+ _transform_params = [

+ "do_resize",

+ "do_rescale",

+ "do_normalize",

+ "size",

+ "resample",

+ "antialias",

+ "rescale_factor",

+ "image_mean",

+ "image_std",

+ "image_type",

+ ]

+

+ def __init__(

+ self,

+ do_resize: bool = True,

+ size: Optional[Dict[str, int]] = None,

+ resample: PILImageResampling = PILImageResampling.BILINEAR,

+ antialias: bool = False,

+ do_rescale: bool = True,

+ rescale_factor: Union[int, float] = 1 / 255,

+ do_normalize: bool = True,

+ image_mean: Optional[Union[float, List[float]]] = None,

+ image_std: Optional[Union[float, List[float]]] = None,

+ **kwargs,

+ ):

+ super().__init__(**kwargs)

+ size = size if size is not None else {"height": 1536, "width": 1536}

+ size = get_size_dict(size)

+ self.do_resize = do_resize

+ self.do_rescale = do_rescale

+ self.do_normalize = do_normalize

+ self.size = size

+ self.resample = resample

+ self.antialias = antialias

+ self.rescale_factor = rescale_factor

+ self.image_mean = image_mean if image_mean is not None else IMAGENET_STANDARD_MEAN

+ self.image_std = image_std if image_std is not None else IMAGENET_STANDARD_STD

+

+ def _build_transforms(

+ self,

+ do_resize: bool,

+ size: Dict[str, int],

+ resample: PILImageResampling,

+ antialias: bool,

+ do_rescale: bool,

+ rescale_factor: float,

+ do_normalize: bool,

+ image_mean: Union[float, List[float]],

+ image_std: Union[float, List[float]],

+ image_type: ImageType,

+ ) -> "Compose":

+ """

+ Given the input settings build the image transforms using `torchvision.transforms.Compose`.

+ """

+ transforms = []

+

+ # All PIL and numpy values need to be converted to a torch tensor

+ # to keep cross compatibility with slow image processors

+ if image_type == ImageType.PIL:

+ transforms.append(PILToTensor())

+

+ elif image_type == ImageType.NUMPY:

+ transforms.append(NumpyToTensor())

+

+ # We can combine rescale and normalize into a single operation for speed

+ if do_rescale and do_normalize:

+ transforms.append(FusedRescaleNormalize(image_mean, image_std, rescale_factor=rescale_factor))

+ elif do_rescale:

+ transforms.append(Rescale(rescale_factor=rescale_factor))

+ elif do_normalize:

+ transforms.append(Normalize(image_mean, image_std))

+

+ # depth-pro scales the image before resizing it

+ if do_resize:

+ transforms.append(

+ Resize(

+ (size["height"], size["width"]),

+ interpolation=pil_torch_interpolation_mapping[resample],

+ antialias=antialias,

+ )

+ )

+

+ return Compose(transforms)

+

+ @functools.lru_cache(maxsize=1)

+ def _validate_input_arguments(

+ self,

+ return_tensors: Union[str, TensorType],

+ do_resize: bool,

+ size: Dict[str, int],

+ resample: PILImageResampling,

+ antialias: bool,

+ do_rescale: bool,

+ rescale_factor: float,

+ do_normalize: bool,

+ image_mean: Union[float, List[float]],

+ image_std: Union[float, List[float]],

+ data_format: Union[str, ChannelDimension],

+ image_type: ImageType,

+ ):

+ if return_tensors != "pt":

+ raise ValueError("Only returning PyTorch tensors is currently supported.")

+

+ if data_format != ChannelDimension.FIRST:

+ raise ValueError("Only channel first data format is currently supported.")

+

+ if do_resize and None in (size, resample, antialias):

+ raise ValueError("Size, resample and antialias must be specified if do_resize is True.")

+

+ if do_rescale and rescale_factor is None:

+ raise ValueError("Rescale factor must be specified if do_rescale is True.")

+

+ if do_normalize and None in (image_mean, image_std):

+ raise ValueError("Image mean and standard deviation must be specified if do_normalize is True.")

+

+ def preprocess(

+ self,

+ images: ImageInput,

+ do_resize: Optional[bool] = None,

+ size: Optional[Dict[str, int]] = None,

+ resample: Optional[PILImageResampling] = None,

+ antialias: Optional[bool] = None,

+ do_rescale: Optional[bool] = None,

+ rescale_factor: Optional[float] = None,

+ do_normalize: Optional[bool] = None,

+ image_mean: Optional[Union[float, List[float]]] = None,

+ image_std: Optional[Union[float, List[float]]] = None,

+ return_tensors: Optional[Union[str, TensorType]] = "pt",

+ data_format: Union[str, ChannelDimension] = ChannelDimension.FIRST,

+ input_data_format: Optional[Union[str, ChannelDimension]] = None,

+ **kwargs,

+ ):

+ """

+ Preprocess an image or batch of images.

+

+ Args:

+ images (`ImageInput`):

+ Image to preprocess. Expects a single or batch of images with pixel values ranging from 0 to 255. If

+ passing in images with pixel values between 0 and 1, set `do_rescale=False`.

+ do_resize (`bool`, *optional*, defaults to `self.do_resize`):

+ Whether to resize the image.

+ size (`Dict[str, int]`, *optional*, defaults to `self.size`):

+ Dictionary in the format `{"height": h, "width": w}` specifying the size of the output image after

+ resizing.

+ resample (`PILImageResampling` filter, *optional*, defaults to `self.resample`):

+ `PILImageResampling` filter to use if resizing the image e.g. `PILImageResampling.BILINEAR`. Only has

+ an effect if `do_resize` is set to `True`.

+ antialias (`bool`, *optional*, defaults to `False`):

+ Whether to apply an anti-aliasing filter when resizing the image. It only affects tensors with

+ bilinear or bicubic modes and it is ignored otherwise.

+ do_rescale (`bool`, *optional*, defaults to `self.do_rescale`):

+ Whether to rescale the image values between [0 - 1].

+ rescale_factor (`float`, *optional*, defaults to `self.rescale_factor`):

+ Rescale factor to rescale the image by if `do_rescale` is set to `True`.

+ do_normalize (`bool`, *optional*, defaults to `self.do_normalize`):

+ Whether to normalize the image.

+ image_mean (`float` or `List[float]`, *optional*, defaults to `self.image_mean`):

+ Image mean to use if `do_normalize` is set to `True`.

+ image_std (`float` or `List[float]`, *optional*, defaults to `self.image_std`):

+ Image standard deviation to use if `do_normalize` is set to `True`.

+ return_tensors (`str` or `TensorType`, *optional*):

+ The type of tensors to return. Only "pt" is supported

+ data_format (`ChannelDimension` or `str`, *optional*, defaults to `ChannelDimension.FIRST`):

+ The channel dimension format for the output image. The following formats are currently supported:

+ - `"channels_first"` or `ChannelDimension.FIRST`: image in (num_channels, height, width) format.

+ input_data_format (`ChannelDimension` or `str`, *optional*):

+ The channel dimension format for the input image. If unset, the channel dimension format is inferred

+ from the input image. Can be one of:

+ - `"channels_first"` or `ChannelDimension.FIRST`: image in (num_channels, height, width) format.

+ - `"channels_last"` or `ChannelDimension.LAST`: image in (height, width, num_channels) format.

+ - `"none"` or `ChannelDimension.NONE`: image in (height, width) format.

+ """

+ do_resize = do_resize if do_resize is not None else self.do_resize

+ do_rescale = do_rescale if do_rescale is not None else self.do_rescale

+ do_normalize = do_normalize if do_normalize is not None else self.do_normalize

+ resample = resample if resample is not None else self.resample

+ antialias = antialias if antialias is not None else self.antialias

+ rescale_factor = rescale_factor if rescale_factor is not None else self.rescale_factor

+ image_mean = image_mean if image_mean is not None else self.image_mean

+ image_std = image_std if image_std is not None else self.image_std

+ size = size if size is not None else self.size

+ # Make hashable for cache

+ size = SizeDict(**size)

+ image_mean = tuple(image_mean) if isinstance(image_mean, list) else image_mean

+ image_std = tuple(image_std) if isinstance(image_std, list) else image_std

+

+ images = make_list_of_images(images)

+ image_type = get_image_type(images[0])

+

+ if image_type not in [ImageType.PIL, ImageType.TORCH, ImageType.NUMPY]:

+ raise ValueError(f"Unsupported input image type {image_type}")

+

+ self._validate_input_arguments(

+ do_resize=do_resize,

+ size=size,

+ resample=resample,

+ antialias=antialias,

+ do_rescale=do_rescale,

+ rescale_factor=rescale_factor,

+ do_normalize=do_normalize,

+ image_mean=image_mean,

+ image_std=image_std,

+ return_tensors=return_tensors,

+ data_format=data_format,

+ image_type=image_type,

+ )

+

+ transforms = self.get_transforms(

+ do_resize=do_resize,

+ do_rescale=do_rescale,

+ do_normalize=do_normalize,

+ size=size,

+ resample=resample,

+ antialias=antialias,

+ rescale_factor=rescale_factor,

+ image_mean=image_mean,

+ image_std=image_std,

+ image_type=image_type,

+ )

+ transformed_images = [transforms(image) for image in images]

+

+ data = {"pixel_values": torch.stack(transformed_images, dim=0)}

+ return BatchFeature(data, tensor_type=return_tensors)

+

+ # Copied from transformers.models.depth_pro.image_processing_depth_pro.DepthProImageProcessor.post_process_depth_estimation

+ def post_process_depth_estimation(

+ self,

+ outputs: "DepthProDepthEstimatorOutput",

+ target_sizes: Optional[Union[TensorType, List[Tuple[int, int]], None]] = None,

+ ) -> Dict[str, List[TensorType]]:

+ """

+ Post-processes the raw depth predictions from the model to generate final depth predictions and optionally

+ resizes them to specified target sizes. This function supports scaling based on the field of view (FoV)

+ and adjusts depth values accordingly.

+

+ Args:

+ outputs ([`DepthProDepthEstimatorOutput`]):

+ Raw outputs of the model.

+ target_sizes (`Optional[Union[TensorType, List[Tuple[int, int]], None]]`, *optional*, defaults to `None`):

+ Target sizes to resize the depth predictions. Can be a tensor of shape `(batch_size, 2)`

+ or a list of tuples `(height, width)` for each image in the batch. If `None`, no resizing

+ is performed.

+

+ Returns:

+ `List[Dict[str, TensorType]]`: A list of dictionaries of tensors representing the processed depth

+ predictions.

+

+ Raises:

+ `ValueError`:

+ If the lengths of `predicted_depths`, `fovs`, or `target_sizes` are mismatched.

+ """

+ requires_backends(self, "torch")

+

+ predicted_depth = outputs.predicted_depth

+ fov = outputs.fov

+

+ batch_size = len(predicted_depth)

+

+ if target_sizes is not None and batch_size != len(target_sizes):

+ raise ValueError(

+ "Make sure that you pass in as many fov values as the batch dimension of the predicted depth"

+ )

+

+ results = []

+ fov = [None] * batch_size if fov is None else fov

+ target_sizes = [None] * batch_size if target_sizes is None else target_sizes

+ for depth, fov_value, target_size in zip(predicted_depth, fov, target_sizes):

+ if target_size is not None:

+ # scale image w.r.t fov

+ if fov_value is not None:

+ width = target_size[1]

+ fov_value = 0.5 * width / torch.tan(0.5 * torch.deg2rad(fov_value))

+ depth = depth * width / fov_value

+

+ # interpolate

+ depth = torch.nn.functional.interpolate(

+ # input should be (B, C, H, W)

+ input=depth.unsqueeze(0).unsqueeze(1),

+ size=target_size,

+ mode=pil_torch_interpolation_mapping[self.resample].value,

+ antialias=self.antialias,

+ ).squeeze()

+

+ # inverse the depth

+ depth = 1.0 / torch.clamp(depth, min=1e-4, max=1e4)

+

+ results.append(

+ {

+ "predicted_depth": depth,

+ "fov": fov_value,

+ }

+ )

+

+ return results

+

+

+__all__ = ["DepthProImageProcessorFast"]

diff --git a/src/transformers/models/depth_pro/modeling_depth_pro.py b/src/transformers/models/depth_pro/modeling_depth_pro.py

new file mode 100644

index 00000000000000..633d765b49f3f0

--- /dev/null

+++ b/src/transformers/models/depth_pro/modeling_depth_pro.py

@@ -0,0 +1,1686 @@

+# coding=utf-8

+# Copyright 2024 The Apple Research Team Authors and The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.