diff --git a/README.md b/README.md

index 8518fc09dce800..8356aaa3e27eba 100644

--- a/README.md

+++ b/README.md

@@ -389,6 +389,7 @@ Current number of checkpoints: ** (from BigCode) released with the paper [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) by Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by Toshiyuki Sakamoto(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) released with the paper [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (from Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) released with the paper [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (from UCSD, NVIDIA) released with the paper [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (from Allegro.pl, AGH University of Science and Technology) released with the paper [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) by Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (from Facebook) released with the paper [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

diff --git a/README_de.md b/README_de.md

index 66fae9870653df..f0c712674f04e2 100644

--- a/README_de.md

+++ b/README_de.md

@@ -385,6 +385,7 @@ Aktuelle Anzahl der Checkpoints: ** (from BigCode) released with the paper [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) by Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by Toshiyuki Sakamoto(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) released with the paper [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (from Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) released with the paper [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (from UCSD, NVIDIA) released with the paper [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (from Allegro.pl, AGH University of Science and Technology) released with the paper [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) by Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (from Facebook) released with the paper [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

diff --git a/README_es.md b/README_es.md

index e4f4dedc3ea0cf..f9fcb97ff35d6d 100644

--- a/README_es.md

+++ b/README_es.md

@@ -362,6 +362,7 @@ Número actual de puntos de control: ** (from BigCode) released with the paper [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) by Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by Toshiyuki Sakamoto(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) released with the paper [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (from Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) released with the paper [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (from UCSD, NVIDIA) released with the paper [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (from Allegro.pl, AGH University of Science and Technology) released with the paper [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) by Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (from Facebook) released with the paper [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

diff --git a/README_fr.md b/README_fr.md

index c2da27829ecbb5..cb26e6631d507b 100644

--- a/README_fr.md

+++ b/README_fr.md

@@ -383,6 +383,7 @@ Nombre actuel de points de contrôle : ** (de BigCode) a été publié dans l'article [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) par Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** a été publié dans le dépôt [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) par Toshiyuki Sakamoto (tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (de Microsoft) a été publié dans l'article [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) par Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (de Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) publié dans l'article [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) parShilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (de l'UCSD, NVIDIA) a été publié dans l'article [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) par Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (d'Allegro.pl, AGH University of Science and Technology) a été publié dans l'article [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) par Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (de Facebook) a été publié dans l'article [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) par Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

diff --git a/README_hd.md b/README_hd.md

index a5bd56ee1c1dce..108e14b4225f97 100644

--- a/README_hd.md

+++ b/README_hd.md

@@ -336,6 +336,7 @@ conda install conda-forge::transformers

1. **[GPTBigCode](https://huggingface.co/docs/transformers/model_doc/gpt_bigcode)** (BigCode से) Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra. द्वाराअनुसंधान पत्र [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) के साथ जारी किया गया

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by Toshiyuki Sakamoto(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) released with the paper [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others से) Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang. द्वाराअनुसंधान पत्र [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) के साथ जारी किया गया

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (UCSD, NVIDIA से) साथ में कागज [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) जियारुई जू, शालिनी डी मेलो, सिफ़ी लियू, वोनमिन बायन, थॉमस ब्रेउएल, जान कौट्ज़, ज़ियाओलोंग वांग द्वारा।

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (Allegro.pl, AGH University of Science and Technology से) Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik. द्वाराअनुसंधान पत्र [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) के साथ जारी किया गया

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (फेसबुक से) साथ में पेपर [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) वेई-निंग सू, बेंजामिन बोल्टे, याओ-हंग ह्यूबर्ट त्साई, कुशाल लखोटिया, रुस्लान सालाखुतदीनोव, अब्देलरहमान मोहम्मद द्वारा।

diff --git a/README_ja.md b/README_ja.md

index e42a5680a79d7f..0b251cb21200ac 100644

--- a/README_ja.md

+++ b/README_ja.md

@@ -396,6 +396,7 @@ Flax、PyTorch、TensorFlowをcondaでインストールする方法は、それ

1. **[GPTBigCode](https://huggingface.co/docs/transformers/model_doc/gpt_bigcode)** (BigCode から) Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra. から公開された研究論文 [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988)

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) 坂本俊之(tanreinama)からリリースされました.

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (Microsoft から) Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu から公開された研究論文: [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234).

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others から) Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang. から公開された研究論文 [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499)

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (UCSD, NVIDIA から) Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang から公開された研究論文: [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094)

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (Allegro.pl, AGH University of Science and Technology から) Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik. から公開された研究論文 [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf)

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (Facebook から) Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed から公開された研究論文: [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447)

diff --git a/README_ko.md b/README_ko.md

index 95cb1b0b79d5b0..03f2ff386ed3f4 100644

--- a/README_ko.md

+++ b/README_ko.md

@@ -311,6 +311,7 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

1. **[GPTBigCode](https://huggingface.co/docs/transformers/model_doc/gpt_bigcode)** (BigCode 에서 제공)은 Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.의 [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988)논문과 함께 발표했습니다.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by Toshiyuki Sakamoto(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu 의 [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) 논문과 함께 발표했습니다.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others 에서 제공)은 Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.의 [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499)논문과 함께 발표했습니다.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (UCSD, NVIDIA 에서) Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang 의 [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) 논문과 함께 발표했습니다.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (Allegro.pl, AGH University of Science and Technology 에서 제공)은 Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.의 [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf)논문과 함께 발표했습니다.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (Facebook 에서) Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed 의 [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) 논문과 함께 발표했습니다.

diff --git a/README_pt-br.md b/README_pt-br.md

index 7d10ce5e8c986c..94260ac34dd3c2 100644

--- a/README_pt-br.md

+++ b/README_pt-br.md

@@ -394,6 +394,7 @@ Número atual de pontos de verificação: ** (from BigCode) released with the paper [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) by Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by Toshiyuki Sakamoto(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) released with the paper [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (from Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) released with the paper [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (from UCSD, NVIDIA) released with the paper [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (from Allegro.pl, AGH University of Science and Technology) released with the paper [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) by Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (from Facebook) released with the paper [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

diff --git a/README_ru.md b/README_ru.md

index 03cc1b919e889f..f70118b57d83f5 100644

--- a/README_ru.md

+++ b/README_ru.md

@@ -384,6 +384,7 @@ conda install conda-forge::transformers

1. **[GPTBigCode](https://huggingface.co/docs/transformers/model_doc/gpt_bigcode)** (from BigCode) released with the paper [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) by Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by Toshiyuki Sakamoto(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) released with the paper [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (from Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) released with the paper [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (from UCSD, NVIDIA) released with the paper [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (from Allegro.pl, AGH University of Science and Technology) released with the paper [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) by Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (from Facebook) released with the paper [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

diff --git a/README_te.md b/README_te.md

index fa762d5659b5da..ea3f5b28e3a745 100644

--- a/README_te.md

+++ b/README_te.md

@@ -386,6 +386,7 @@ Flax, PyTorch లేదా TensorFlow యొక్క ఇన్స్టా

1. **[GPTBigCode](https://huggingface.co/docs/transformers/model_doc/gpt_bigcode)** (from BigCode) released with the paper [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) by Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by Toshiyuki Sakamoto(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) released with the paper [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (from Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) released with the paper [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (from UCSD, NVIDIA) released with the paper [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (from Allegro.pl, AGH University of Science and Technology) released with the paper [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) by Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (from Facebook) released with the paper [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

diff --git a/README_vi.md b/README_vi.md

index e7cad3b3649cc4..73ca0ecbf36946 100644

--- a/README_vi.md

+++ b/README_vi.md

@@ -385,6 +385,7 @@ Số lượng điểm kiểm tra hiện tại: ** (từ BigCode) được phát hành với bài báo [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) by Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by Toshiyuki Sakamoto(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (từ Microsoft) được phát hành với bài báo [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (từ Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) được phát hành với bài báo [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (từ UCSD, NVIDIA) được phát hành với bài báo [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (từ Allegro.pl, AGH University of Science and Technology) được phát hành với bài báo [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) by Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (từ Facebook) được phát hành với bài báo [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

diff --git a/README_zh-hans.md b/README_zh-hans.md

index 8ac4d5c388ee15..33507ea4127d0a 100644

--- a/README_zh-hans.md

+++ b/README_zh-hans.md

@@ -335,6 +335,7 @@ conda install conda-forge::transformers

1. **[GPTBigCode](https://huggingface.co/docs/transformers/model_doc/gpt_bigcode)** (来自 BigCode) 伴随论文 [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) 由 Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra 发布。

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by 坂本俊之(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) released with the paper [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (来自 Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) 伴随论文 [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) 由 Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang 发布。

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (来自 UCSD, NVIDIA) 伴随论文 [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) 由 Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang 发布。

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (来自 Allegro.pl, AGH University of Science and Technology) 伴随论文 [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) 由 Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik 发布。

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (来自 Facebook) 伴随论文 [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) 由 Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed 发布。

diff --git a/README_zh-hant.md b/README_zh-hant.md

index 0e31d8e6b52f4a..24ce3519d69285 100644

--- a/README_zh-hant.md

+++ b/README_zh-hant.md

@@ -347,6 +347,7 @@ conda install conda-forge::transformers

1. **[GPTBigCode](https://huggingface.co/docs/transformers/model_doc/gpt_bigcode)** (from BigCode) released with the paper [SantaCoder: don't reach for the stars!](https://arxiv.org/abs/2301.03988) by Loubna Ben Allal, Raymond Li, Denis Kocetkov, Chenghao Mou, Christopher Akiki, Carlos Munoz Ferrandis, Niklas Muennighoff, Mayank Mishra, Alex Gu, Manan Dey, Logesh Kumar Umapathi, Carolyn Jane Anderson, Yangtian Zi, Joel Lamy Poirier, Hailey Schoelkopf, Sergey Troshin, Dmitry Abulkhanov, Manuel Romero, Michael Lappert, Francesco De Toni, Bernardo García del Río, Qian Liu, Shamik Bose, Urvashi Bhattacharyya, Terry Yue Zhuo, Ian Yu, Paulo Villegas, Marco Zocca, Sourab Mangrulkar, David Lansky, Huu Nguyen, Danish Contractor, Luis Villa, Jia Li, Dzmitry Bahdanau, Yacine Jernite, Sean Hughes, Daniel Fried, Arjun Guha, Harm de Vries, Leandro von Werra.

1. **[GPTSAN-japanese](https://huggingface.co/docs/transformers/model_doc/gptsan-japanese)** released in the repository [tanreinama/GPTSAN](https://github.com/tanreinama/GPTSAN/blob/main/report/model.md) by 坂本俊之(tanreinama).

1. **[Graphormer](https://huggingface.co/docs/transformers/model_doc/graphormer)** (from Microsoft) released with the paper [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen, Tie-Yan Liu.

+1. **[Grounding DINO](https://huggingface.co/docs/transformers/main/model_doc/grounding-dino)** (from Institute for AI, Tsinghua-Bosch Joint Center for ML, Tsinghua University, IDEA Research and others) released with the paper [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang.

1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (from UCSD, NVIDIA) released with the paper [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[HerBERT](https://huggingface.co/docs/transformers/model_doc/herbert)** (from Allegro.pl, AGH University of Science and Technology) released with the paper [KLEJ: Comprehensive Benchmark for Polish Language Understanding](https://www.aclweb.org/anthology/2020.acl-main.111.pdf) by Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (from Facebook) released with the paper [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index af44de4d1067b1..07b5e760544e66 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -730,6 +730,8 @@

title: FLAVA

- local: model_doc/git

title: GIT

+ - local: model_doc/grounding-dino

+ title: Grounding DINO

- local: model_doc/groupvit

title: GroupViT

- local: model_doc/idefics

diff --git a/docs/source/en/index.md b/docs/source/en/index.md

index ffa9ae3f4b0b41..dac172f66a2866 100644

--- a/docs/source/en/index.md

+++ b/docs/source/en/index.md

@@ -154,6 +154,7 @@ Flax), PyTorch, and/or TensorFlow.

| [GPTBigCode](model_doc/gpt_bigcode) | ✅ | ❌ | ❌ |

| [GPTSAN-japanese](model_doc/gptsan-japanese) | ✅ | ❌ | ❌ |

| [Graphormer](model_doc/graphormer) | ✅ | ❌ | ❌ |

+| [Grounding DINO](model_doc/grounding-dino) | ✅ | ❌ | ❌ |

| [GroupViT](model_doc/groupvit) | ✅ | ✅ | ❌ |

| [HerBERT](model_doc/herbert) | ✅ | ✅ | ✅ |

| [Hubert](model_doc/hubert) | ✅ | ✅ | ❌ |

diff --git a/docs/source/en/model_doc/grounding-dino.md b/docs/source/en/model_doc/grounding-dino.md

new file mode 100644

index 00000000000000..3c6bd6fce06920

--- /dev/null

+++ b/docs/source/en/model_doc/grounding-dino.md

@@ -0,0 +1,97 @@

+

+

+# Grounding DINO

+

+## Overview

+

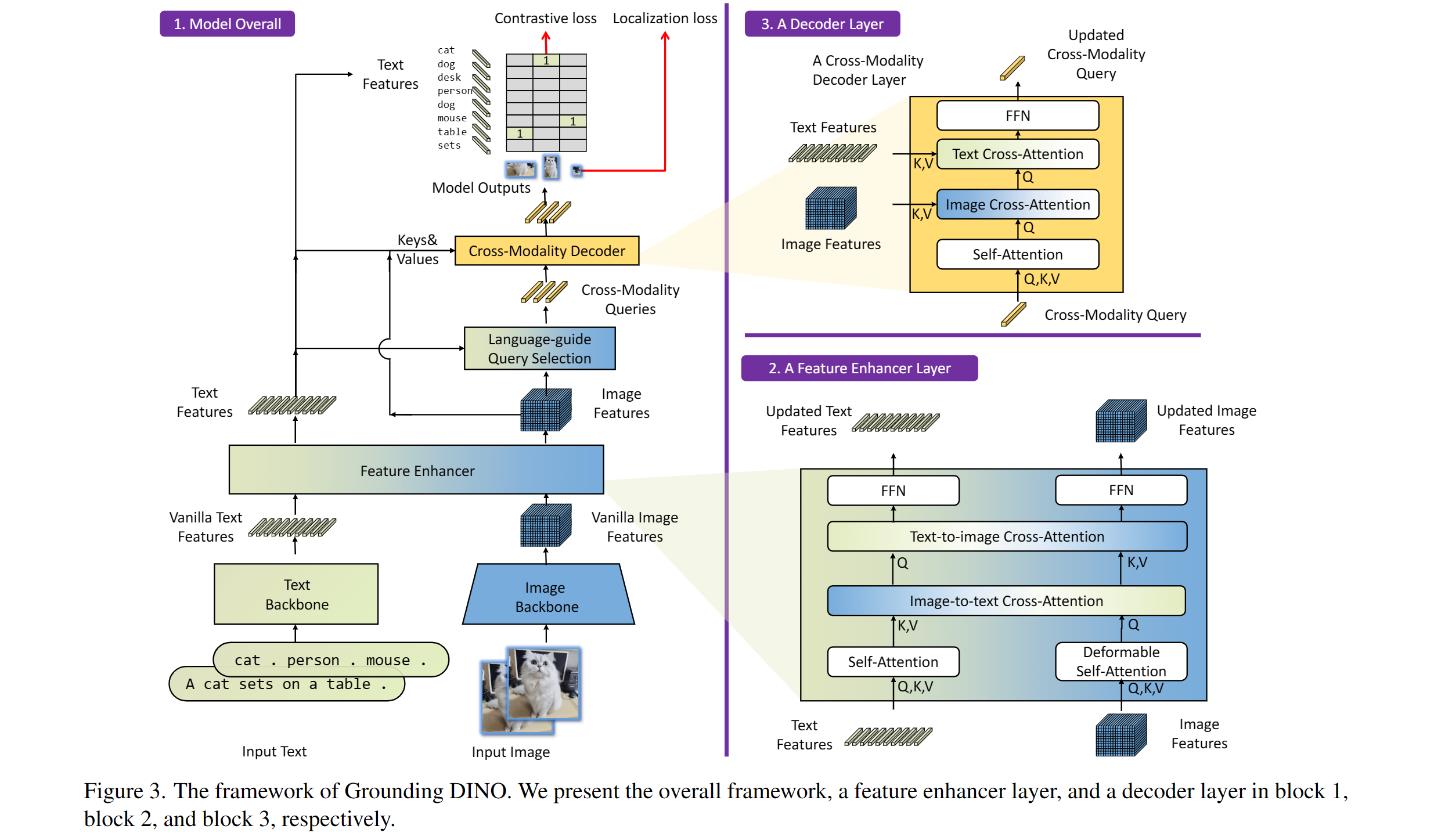

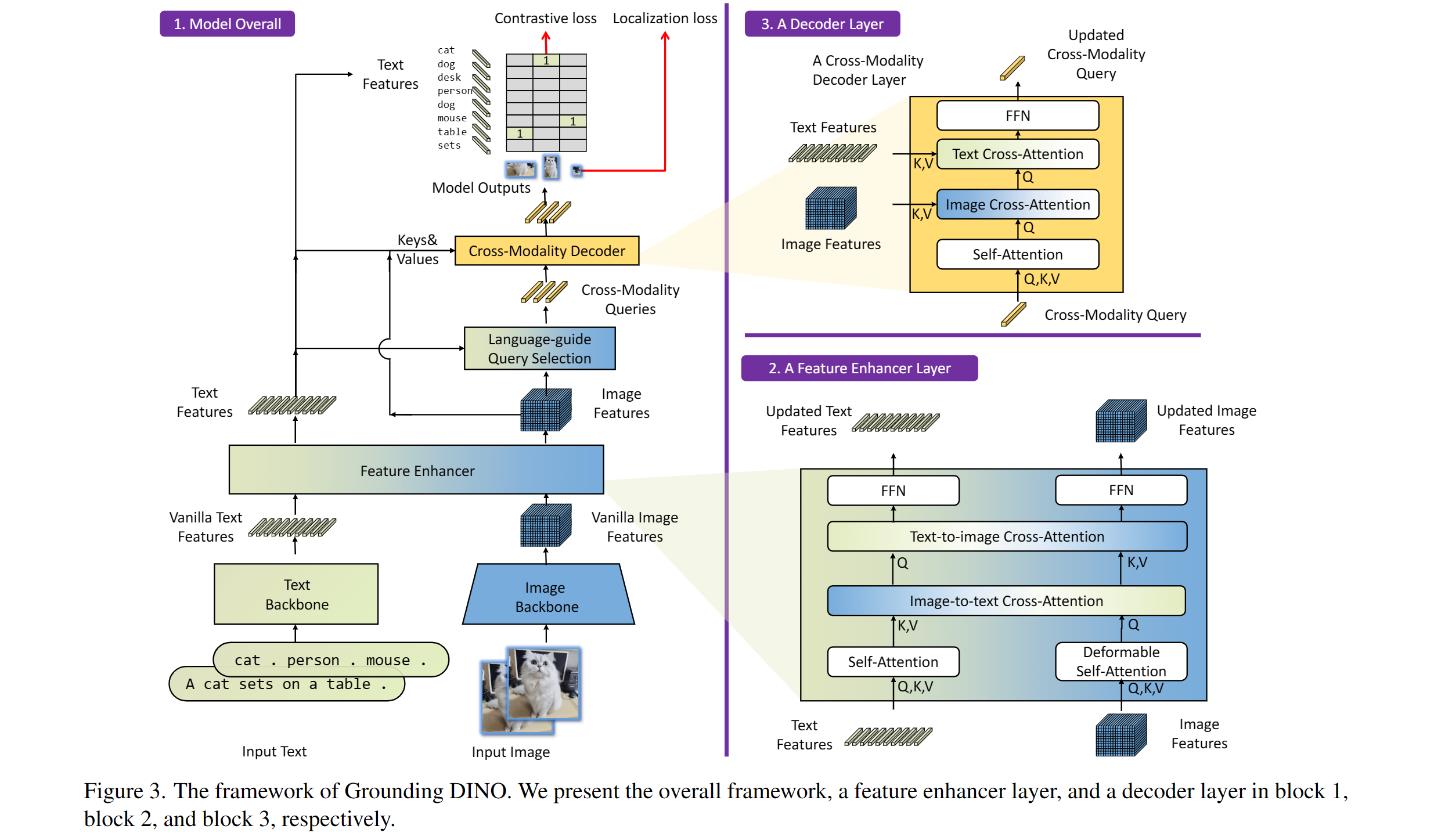

+The Grounding DINO model was proposed in [Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499) by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang. Grounding DINO extends a closed-set object detection model with a text encoder, enabling open-set object detection. The model achieves remarkable results, such as 52.5 AP on COCO zero-shot.

+

+The abstract from the paper is the following:

+

+*In this paper, we present an open-set object detector, called Grounding DINO, by marrying Transformer-based detector DINO with grounded pre-training, which can detect arbitrary objects with human inputs such as category names or referring expressions. The key solution of open-set object detection is introducing language to a closed-set detector for open-set concept generalization. To effectively fuse language and vision modalities, we conceptually divide a closed-set detector into three phases and propose a tight fusion solution, which includes a feature enhancer, a language-guided query selection, and a cross-modality decoder for cross-modality fusion. While previous works mainly evaluate open-set object detection on novel categories, we propose to also perform evaluations on referring expression comprehension for objects specified with attributes. Grounding DINO performs remarkably well on all three settings, including benchmarks on COCO, LVIS, ODinW, and RefCOCO/+/g. Grounding DINO achieves a 52.5 AP on the COCO detection zero-shot transfer benchmark, i.e., without any training data from COCO. It sets a new record on the ODinW zero-shot benchmark with a mean 26.1 AP.*

+

+ +

+ Grounding DINO overview. Taken from the original paper.

+

+This model was contributed by [EduardoPacheco](https://huggingface.co/EduardoPacheco) and [nielsr](https://huggingface.co/nielsr).

+The original code can be found [here](https://github.com/IDEA-Research/GroundingDINO).

+

+## Usage tips

+

+- One can use [`GroundingDinoProcessor`] to prepare image-text pairs for the model.

+- To separate classes in the text use a period e.g. "a cat. a dog."

+- When using multiple classes (e.g. `"a cat. a dog."`), use `post_process_grounded_object_detection` from [`GroundingDinoProcessor`] to post process outputs. Since, the labels returned from `post_process_object_detection` represent the indices from the model dimension where prob > threshold.

+

+Here's how to use the model for zero-shot object detection:

+

+```python

+import requests

+

+import torch

+from PIL import Image

+from transformers import AutoProcessor, AutoModelForZeroShotObjectDetection,

+

+model_id = "IDEA-Research/grounding-dino-tiny"

+

+processor = AutoProcessor.from_pretrained(model_id)

+model = AutoModelForZeroShotObjectDetection.from_pretrained(model_id).to(device)

+

+image_url = "http://images.cocodataset.org/val2017/000000039769.jpg"

+image = Image.open(requests.get(image_url, stream=True).raw)

+# Check for cats and remote controls

+text = "a cat. a remote control."

+

+inputs = processor(images=image, text=text, return_tensors="pt").to(device)

+with torch.no_grad():

+ outputs = model(**inputs)

+

+results = processor.post_process_grounded_object_detection(

+ outputs,

+ inputs.input_ids,

+ box_threshold=0.4,

+ text_threshold=0.3,

+ target_sizes=[image.size[::-1]]

+)

+```

+

+

+## GroundingDinoImageProcessor

+

+[[autodoc]] GroundingDinoImageProcessor

+ - preprocess

+ - post_process_object_detection

+

+## GroundingDinoProcessor

+

+[[autodoc]] GroundingDinoProcessor

+ - post_process_grounded_object_detection

+

+## GroundingDinoConfig

+

+[[autodoc]] GroundingDinoConfig

+

+## GroundingDinoModel

+

+[[autodoc]] GroundingDinoModel

+ - forward

+

+## GroundingDinoForObjectDetection

+

+[[autodoc]] GroundingDinoForObjectDetection

+ - forward

diff --git a/src/transformers/__init__.py b/src/transformers/__init__.py

index da29d77972f425..ff6c04155f5a5f 100644

--- a/src/transformers/__init__.py

+++ b/src/transformers/__init__.py

@@ -488,9 +488,11 @@

"GPTSanJapaneseConfig",

"GPTSanJapaneseTokenizer",

],

- "models.graphormer": [

- "GRAPHORMER_PRETRAINED_CONFIG_ARCHIVE_MAP",

- "GraphormerConfig",

+ "models.graphormer": ["GRAPHORMER_PRETRAINED_CONFIG_ARCHIVE_MAP", "GraphormerConfig"],

+ "models.grounding_dino": [

+ "GROUNDING_DINO_PRETRAINED_CONFIG_ARCHIVE_MAP",

+ "GroundingDinoConfig",

+ "GroundingDinoProcessor",

],

"models.groupvit": [

"GROUPVIT_PRETRAINED_CONFIG_ARCHIVE_MAP",

@@ -1330,6 +1332,7 @@

_import_structure["models.flava"].extend(["FlavaFeatureExtractor", "FlavaImageProcessor", "FlavaProcessor"])

_import_structure["models.fuyu"].extend(["FuyuImageProcessor", "FuyuProcessor"])

_import_structure["models.glpn"].extend(["GLPNFeatureExtractor", "GLPNImageProcessor"])

+ _import_structure["models.grounding_dino"].extend(["GroundingDinoImageProcessor"])

_import_structure["models.idefics"].extend(["IdeficsImageProcessor"])

_import_structure["models.imagegpt"].extend(["ImageGPTFeatureExtractor", "ImageGPTImageProcessor"])

_import_structure["models.layoutlmv2"].extend(["LayoutLMv2FeatureExtractor", "LayoutLMv2ImageProcessor"])

@@ -2390,6 +2393,14 @@

"GraphormerPreTrainedModel",

]

)

+ _import_structure["models.grounding_dino"].extend(

+ [

+ "GROUNDING_DINO_PRETRAINED_MODEL_ARCHIVE_LIST",

+ "GroundingDinoForObjectDetection",

+ "GroundingDinoModel",

+ "GroundingDinoPreTrainedModel",

+ ]

+ )

_import_structure["models.groupvit"].extend(

[

"GROUPVIT_PRETRAINED_MODEL_ARCHIVE_LIST",

@@ -5372,9 +5383,11 @@

GPTSanJapaneseConfig,

GPTSanJapaneseTokenizer,

)

- from .models.graphormer import (

- GRAPHORMER_PRETRAINED_CONFIG_ARCHIVE_MAP,

- GraphormerConfig,

+ from .models.graphormer import GRAPHORMER_PRETRAINED_CONFIG_ARCHIVE_MAP, GraphormerConfig

+ from .models.grounding_dino import (

+ GROUNDING_DINO_PRETRAINED_CONFIG_ARCHIVE_MAP,

+ GroundingDinoConfig,

+ GroundingDinoProcessor,

)

from .models.groupvit import (

GROUPVIT_PRETRAINED_CONFIG_ARCHIVE_MAP,

@@ -6186,6 +6199,7 @@

)

from .models.fuyu import FuyuImageProcessor, FuyuProcessor

from .models.glpn import GLPNFeatureExtractor, GLPNImageProcessor

+ from .models.grounding_dino import GroundingDinoImageProcessor

from .models.idefics import IdeficsImageProcessor

from .models.imagegpt import ImageGPTFeatureExtractor, ImageGPTImageProcessor

from .models.layoutlmv2 import (

@@ -7103,6 +7117,12 @@

GraphormerModel,

GraphormerPreTrainedModel,

)

+ from .models.grounding_dino import (

+ GROUNDING_DINO_PRETRAINED_MODEL_ARCHIVE_LIST,

+ GroundingDinoForObjectDetection,

+ GroundingDinoModel,

+ GroundingDinoPreTrainedModel,

+ )

from .models.groupvit import (

GROUPVIT_PRETRAINED_MODEL_ARCHIVE_LIST,

GroupViTModel,

diff --git a/src/transformers/models/__init__.py b/src/transformers/models/__init__.py

index 0599d3b876e6af..89baf93f6886f8 100644

--- a/src/transformers/models/__init__.py

+++ b/src/transformers/models/__init__.py

@@ -105,6 +105,7 @@

gptj,

gptsan_japanese,

graphormer,

+ grounding_dino,

groupvit,

herbert,

hubert,

diff --git a/src/transformers/models/auto/configuration_auto.py b/src/transformers/models/auto/configuration_auto.py

index bf46066002feb9..ce8298d8c5cfc8 100755

--- a/src/transformers/models/auto/configuration_auto.py

+++ b/src/transformers/models/auto/configuration_auto.py

@@ -120,6 +120,7 @@

("gptj", "GPTJConfig"),

("gptsan-japanese", "GPTSanJapaneseConfig"),

("graphormer", "GraphormerConfig"),

+ ("grounding-dino", "GroundingDinoConfig"),

("groupvit", "GroupViTConfig"),

("hubert", "HubertConfig"),

("ibert", "IBertConfig"),

@@ -382,6 +383,7 @@

("gptj", "GPT-J"),

("gptsan-japanese", "GPTSAN-japanese"),

("graphormer", "Graphormer"),

+ ("grounding-dino", "Grounding DINO"),

("groupvit", "GroupViT"),

("herbert", "HerBERT"),

("hubert", "Hubert"),

diff --git a/src/transformers/models/auto/image_processing_auto.py b/src/transformers/models/auto/image_processing_auto.py

index 3debf97fea2081..6ae28bfa32cb63 100644

--- a/src/transformers/models/auto/image_processing_auto.py

+++ b/src/transformers/models/auto/image_processing_auto.py

@@ -68,6 +68,7 @@

("fuyu", "FuyuImageProcessor"),

("git", "CLIPImageProcessor"),

("glpn", "GLPNImageProcessor"),

+ ("grounding-dino", "GroundingDinoImageProcessor"),

("groupvit", "CLIPImageProcessor"),

("idefics", "IdeficsImageProcessor"),

("imagegpt", "ImageGPTImageProcessor"),

diff --git a/src/transformers/models/auto/modeling_auto.py b/src/transformers/models/auto/modeling_auto.py

index 150dea04f3745a..d422fe8c19b799 100755

--- a/src/transformers/models/auto/modeling_auto.py

+++ b/src/transformers/models/auto/modeling_auto.py

@@ -115,6 +115,7 @@

("gptj", "GPTJModel"),

("gptsan-japanese", "GPTSanJapaneseForConditionalGeneration"),

("graphormer", "GraphormerModel"),

+ ("grounding-dino", "GroundingDinoModel"),

("groupvit", "GroupViTModel"),

("hubert", "HubertModel"),

("ibert", "IBertModel"),

@@ -751,6 +752,7 @@

MODEL_FOR_ZERO_SHOT_OBJECT_DETECTION_MAPPING_NAMES = OrderedDict(

[

# Model for Zero Shot Object Detection mapping

+ ("grounding-dino", "GroundingDinoForObjectDetection"),

("owlv2", "Owlv2ForObjectDetection"),

("owlvit", "OwlViTForObjectDetection"),

]

diff --git a/src/transformers/models/auto/tokenization_auto.py b/src/transformers/models/auto/tokenization_auto.py

index 4bc5d8105316bf..c9143fd8be5200 100644

--- a/src/transformers/models/auto/tokenization_auto.py

+++ b/src/transformers/models/auto/tokenization_auto.py

@@ -195,6 +195,7 @@

("gpt_neox_japanese", ("GPTNeoXJapaneseTokenizer", None)),

("gptj", ("GPT2Tokenizer", "GPT2TokenizerFast" if is_tokenizers_available() else None)),

("gptsan-japanese", ("GPTSanJapaneseTokenizer", None)),

+ ("grounding-dino", ("BertTokenizer", "BertTokenizerFast" if is_tokenizers_available() else None)),

("groupvit", ("CLIPTokenizer", "CLIPTokenizerFast" if is_tokenizers_available() else None)),

("herbert", ("HerbertTokenizer", "HerbertTokenizerFast" if is_tokenizers_available() else None)),

("hubert", ("Wav2Vec2CTCTokenizer", None)),

diff --git a/src/transformers/models/deformable_detr/modeling_deformable_detr.py b/src/transformers/models/deformable_detr/modeling_deformable_detr.py

index 1e2296d177c4de..c0ac7cffc7ab44 100755

--- a/src/transformers/models/deformable_detr/modeling_deformable_detr.py

+++ b/src/transformers/models/deformable_detr/modeling_deformable_detr.py

@@ -710,13 +710,14 @@ def forward(

batch_size, num_queries, self.n_heads, self.n_levels, self.n_points

)

# batch_size, num_queries, n_heads, n_levels, n_points, 2

- if reference_points.shape[-1] == 2:

+ num_coordinates = reference_points.shape[-1]

+ if num_coordinates == 2:

offset_normalizer = torch.stack([spatial_shapes[..., 1], spatial_shapes[..., 0]], -1)

sampling_locations = (

reference_points[:, :, None, :, None, :]

+ sampling_offsets / offset_normalizer[None, None, None, :, None, :]

)

- elif reference_points.shape[-1] == 4:

+ elif num_coordinates == 4:

sampling_locations = (

reference_points[:, :, None, :, None, :2]

+ sampling_offsets / self.n_points * reference_points[:, :, None, :, None, 2:] * 0.5

@@ -1401,14 +1402,15 @@ def forward(

intermediate_reference_points = ()

for idx, decoder_layer in enumerate(self.layers):

- if reference_points.shape[-1] == 4:

+ num_coordinates = reference_points.shape[-1]

+ if num_coordinates == 4:

reference_points_input = (

reference_points[:, :, None] * torch.cat([valid_ratios, valid_ratios], -1)[:, None]

)

- else:

- if reference_points.shape[-1] != 2:

- raise ValueError("Reference points' last dimension must be of size 2")

+ elif reference_points.shape[-1] == 2:

reference_points_input = reference_points[:, :, None] * valid_ratios[:, None]

+ else:

+ raise ValueError("Reference points' last dimension must be of size 2")

if output_hidden_states:

all_hidden_states += (hidden_states,)

@@ -1442,17 +1444,18 @@ def forward(

# hack implementation for iterative bounding box refinement

if self.bbox_embed is not None:

tmp = self.bbox_embed[idx](hidden_states)

- if reference_points.shape[-1] == 4:

+ num_coordinates = reference_points.shape[-1]

+ if num_coordinates == 4:

new_reference_points = tmp + inverse_sigmoid(reference_points)

new_reference_points = new_reference_points.sigmoid()

- else:

- if reference_points.shape[-1] != 2:

- raise ValueError(

- f"Reference points' last dimension must be of size 2, but is {reference_points.shape[-1]}"

- )

+ elif num_coordinates == 2:

new_reference_points = tmp

new_reference_points[..., :2] = tmp[..., :2] + inverse_sigmoid(reference_points)

new_reference_points = new_reference_points.sigmoid()

+ else:

+ raise ValueError(

+ f"Last dim of reference_points must be 2 or 4, but got {reference_points.shape[-1]}"

+ )

reference_points = new_reference_points.detach()

intermediate += (hidden_states,)

diff --git a/src/transformers/models/deta/modeling_deta.py b/src/transformers/models/deta/modeling_deta.py

index 35d9b67d2f9923..e8491355591ab8 100644

--- a/src/transformers/models/deta/modeling_deta.py

+++ b/src/transformers/models/deta/modeling_deta.py

@@ -682,13 +682,14 @@ def forward(

batch_size, num_queries, self.n_heads, self.n_levels, self.n_points

)

# batch_size, num_queries, n_heads, n_levels, n_points, 2

- if reference_points.shape[-1] == 2:

+ num_coordinates = reference_points.shape[-1]

+ if num_coordinates == 2:

offset_normalizer = torch.stack([spatial_shapes[..., 1], spatial_shapes[..., 0]], -1)

sampling_locations = (

reference_points[:, :, None, :, None, :]

+ sampling_offsets / offset_normalizer[None, None, None, :, None, :]

)

- elif reference_points.shape[-1] == 4:

+ elif num_coordinates == 4:

sampling_locations = (

reference_points[:, :, None, :, None, :2]

+ sampling_offsets / self.n_points * reference_points[:, :, None, :, None, 2:] * 0.5

diff --git a/src/transformers/models/grounding_dino/__init__.py b/src/transformers/models/grounding_dino/__init__.py

new file mode 100644

index 00000000000000..3b0f792068c5f0

--- /dev/null

+++ b/src/transformers/models/grounding_dino/__init__.py

@@ -0,0 +1,81 @@

+# Copyright 2024 The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from typing import TYPE_CHECKING

+

+from ...utils import OptionalDependencyNotAvailable, _LazyModule, is_torch_available, is_vision_available

+

+

+_import_structure = {

+ "configuration_grounding_dino": [

+ "GROUNDING_DINO_PRETRAINED_CONFIG_ARCHIVE_MAP",

+ "GroundingDinoConfig",

+ ],

+ "processing_grounding_dino": ["GroundingDinoProcessor"],

+}

+

+try:

+ if not is_torch_available():

+ raise OptionalDependencyNotAvailable()

+except OptionalDependencyNotAvailable:

+ pass

+else:

+ _import_structure["modeling_grounding_dino"] = [

+ "GROUNDING_DINO_PRETRAINED_MODEL_ARCHIVE_LIST",

+ "GroundingDinoForObjectDetection",

+ "GroundingDinoModel",

+ "GroundingDinoPreTrainedModel",

+ ]

+

+try:

+ if not is_vision_available():

+ raise OptionalDependencyNotAvailable()

+except OptionalDependencyNotAvailable:

+ pass

+else:

+ _import_structure["image_processing_grounding_dino"] = ["GroundingDinoImageProcessor"]

+

+

+if TYPE_CHECKING:

+ from .configuration_grounding_dino import (

+ GROUNDING_DINO_PRETRAINED_CONFIG_ARCHIVE_MAP,

+ GroundingDinoConfig,

+ )

+ from .processing_grounding_dino import GroundingDinoProcessor

+

+ try:

+ if not is_torch_available():

+ raise OptionalDependencyNotAvailable()

+ except OptionalDependencyNotAvailable:

+ pass

+ else:

+ from .modeling_grounding_dino import (

+ GROUNDING_DINO_PRETRAINED_MODEL_ARCHIVE_LIST,

+ GroundingDinoForObjectDetection,

+ GroundingDinoModel,

+ GroundingDinoPreTrainedModel,

+ )

+

+ try:

+ if not is_vision_available():

+ raise OptionalDependencyNotAvailable()

+ except OptionalDependencyNotAvailable:

+ pass

+ else:

+ from .image_processing_grounding_dino import GroundingDinoImageProcessor

+

+else:

+ import sys

+

+ sys.modules[__name__] = _LazyModule(__name__, globals()["__file__"], _import_structure, module_spec=__spec__)

diff --git a/src/transformers/models/grounding_dino/configuration_grounding_dino.py b/src/transformers/models/grounding_dino/configuration_grounding_dino.py

new file mode 100644

index 00000000000000..fe683035039600

--- /dev/null

+++ b/src/transformers/models/grounding_dino/configuration_grounding_dino.py

@@ -0,0 +1,301 @@

+# coding=utf-8

+# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+""" Grounding DINO model configuration"""

+

+from ...configuration_utils import PretrainedConfig

+from ...utils import logging

+from ..auto import CONFIG_MAPPING

+

+

+logger = logging.get_logger(__name__)

+

+GROUNDING_DINO_PRETRAINED_CONFIG_ARCHIVE_MAP = {

+ "IDEA-Research/grounding-dino-tiny": "https://huggingface.co/IDEA-Research/grounding-dino-tiny/resolve/main/config.json",

+}

+

+

+class GroundingDinoConfig(PretrainedConfig):

+ r"""

+ This is the configuration class to store the configuration of a [`GroundingDinoModel`]. It is used to instantiate a

+ Grounding DINO model according to the specified arguments, defining the model architecture. Instantiating a

+ configuration with the defaults will yield a similar configuration to that of the Grounding DINO

+ [IDEA-Research/grounding-dino-tiny](https://huggingface.co/IDEA-Research/grounding-dino-tiny) architecture.

+

+ Configuration objects inherit from [`PretrainedConfig`] and can be used to control the model outputs. Read the

+ documentation from [`PretrainedConfig`] for more information.

+

+ Args:

+ backbone_config (`PretrainedConfig` or `dict`, *optional*, defaults to `ResNetConfig()`):

+ The configuration of the backbone model.

+ backbone (`str`, *optional*):

+ Name of backbone to use when `backbone_config` is `None`. If `use_pretrained_backbone` is `True`, this

+ will load the corresponding pretrained weights from the timm or transformers library. If `use_pretrained_backbone`

+ is `False`, this loads the backbone's config and uses that to initialize the backbone with random weights.

+ use_pretrained_backbone (`bool`, *optional*, defaults to `False`):

+ Whether to use pretrained weights for the backbone.

+ use_timm_backbone (`bool`, *optional*, defaults to `False`):

+ Whether to load `backbone` from the timm library. If `False`, the backbone is loaded from the transformers

+ library.

+ backbone_kwargs (`dict`, *optional*):

+ Keyword arguments to be passed to AutoBackbone when loading from a checkpoint

+ e.g. `{'out_indices': (0, 1, 2, 3)}`. Cannot be specified if `backbone_config` is set.

+ text_config (`Union[AutoConfig, dict]`, *optional*, defaults to `BertConfig`):

+ The config object or dictionary of the text backbone.

+ num_queries (`int`, *optional*, defaults to 900):

+ Number of object queries, i.e. detection slots. This is the maximal number of objects

+ [`GroundingDinoModel`] can detect in a single image.

+ encoder_layers (`int`, *optional*, defaults to 6):

+ Number of encoder layers.

+ encoder_ffn_dim (`int`, *optional*, defaults to 2048):

+ Dimension of the "intermediate" (often named feed-forward) layer in decoder.

+ encoder_attention_heads (`int`, *optional*, defaults to 8):

+ Number of attention heads for each attention layer in the Transformer encoder.

+ decoder_layers (`int`, *optional*, defaults to 6):

+ Number of decoder layers.

+ decoder_ffn_dim (`int`, *optional*, defaults to 2048):

+ Dimension of the "intermediate" (often named feed-forward) layer in decoder.

+ decoder_attention_heads (`int`, *optional*, defaults to 8):

+ Number of attention heads for each attention layer in the Transformer decoder.

+ is_encoder_decoder (`bool`, *optional*, defaults to `True`):

+ Whether the model is used as an encoder/decoder or not.

+ activation_function (`str` or `function`, *optional*, defaults to `"relu"`):

+ The non-linear activation function (function or string) in the encoder and pooler. If string, `"gelu"`,

+ `"relu"`, `"silu"` and `"gelu_new"` are supported.

+ d_model (`int`, *optional*, defaults to 256):

+ Dimension of the layers.

+ dropout (`float`, *optional*, defaults to 0.1):

+ The dropout probability for all fully connected layers in the embeddings, encoder, and pooler.

+ attention_dropout (`float`, *optional*, defaults to 0.0):

+ The dropout ratio for the attention probabilities.

+ activation_dropout (`float`, *optional*, defaults to 0.0):

+ The dropout ratio for activations inside the fully connected layer.

+ auxiliary_loss (`bool`, *optional*, defaults to `False`):

+ Whether auxiliary decoding losses (loss at each decoder layer) are to be used.

+ position_embedding_type (`str`, *optional*, defaults to `"sine"`):

+ Type of position embeddings to be used on top of the image features. One of `"sine"` or `"learned"`.

+ num_feature_levels (`int`, *optional*, defaults to 4):

+ The number of input feature levels.

+ encoder_n_points (`int`, *optional*, defaults to 4):

+ The number of sampled keys in each feature level for each attention head in the encoder.

+ decoder_n_points (`int`, *optional*, defaults to 4):

+ The number of sampled keys in each feature level for each attention head in the decoder.

+ two_stage (`bool`, *optional*, defaults to `True`):

+ Whether to apply a two-stage deformable DETR, where the region proposals are also generated by a variant of

+ Grounding DINO, which are further fed into the decoder for iterative bounding box refinement.

+ class_cost (`float`, *optional*, defaults to 1.0):

+ Relative weight of the classification error in the Hungarian matching cost.

+ bbox_cost (`float`, *optional*, defaults to 5.0):

+ Relative weight of the L1 error of the bounding box coordinates in the Hungarian matching cost.

+ giou_cost (`float`, *optional*, defaults to 2.0):

+ Relative weight of the generalized IoU loss of the bounding box in the Hungarian matching cost.

+ bbox_loss_coefficient (`float`, *optional*, defaults to 5.0):

+ Relative weight of the L1 bounding box loss in the object detection loss.

+ giou_loss_coefficient (`float`, *optional*, defaults to 2.0):

+ Relative weight of the generalized IoU loss in the object detection loss.

+ focal_alpha (`float`, *optional*, defaults to 0.25):

+ Alpha parameter in the focal loss.

+ disable_custom_kernels (`bool`, *optional*, defaults to `False`):

+ Disable the use of custom CUDA and CPU kernels. This option is necessary for the ONNX export, as custom

+ kernels are not supported by PyTorch ONNX export.

+ max_text_len (`int`, *optional*, defaults to 256):

+ The maximum length of the text input.

+ text_enhancer_dropout (`float`, *optional*, defaults to 0.0):

+ The dropout ratio for the text enhancer.

+ fusion_droppath (`float`, *optional*, defaults to 0.1):

+ The droppath ratio for the fusion module.

+ fusion_dropout (`float`, *optional*, defaults to 0.0):

+ The dropout ratio for the fusion module.

+ embedding_init_target (`bool`, *optional*, defaults to `True`):

+ Whether to initialize the target with Embedding weights.

+ query_dim (`int`, *optional*, defaults to 4):

+ The dimension of the query vector.

+ decoder_bbox_embed_share (`bool`, *optional*, defaults to `True`):

+ Whether to share the bbox regression head for all decoder layers.

+ two_stage_bbox_embed_share (`bool`, *optional*, defaults to `False`):

+ Whether to share the bbox embedding between the two-stage bbox generator and the region proposal

+ generation.

+ positional_embedding_temperature (`float`, *optional*, defaults to 20):

+ The temperature for Sine Positional Embedding that is used together with vision backbone.

+ init_std (`float`, *optional*, defaults to 0.02):

+ The standard deviation of the truncated_normal_initializer for initializing all weight matrices.

+ layer_norm_eps (`float`, *optional*, defaults to 1e-05):

+ The epsilon used by the layer normalization layers.

+

+ Examples:

+

+ ```python

+ >>> from transformers import GroundingDinoConfig, GroundingDinoModel

+

+ >>> # Initializing a Grounding DINO IDEA-Research/grounding-dino-tiny style configuration

+ >>> configuration = GroundingDinoConfig()

+

+ >>> # Initializing a model (with random weights) from the IDEA-Research/grounding-dino-tiny style configuration

+ >>> model = GroundingDinoModel(configuration)

+

+ >>> # Accessing the model configuration

+ >>> configuration = model.config

+ ```"""

+

+ model_type = "grounding-dino"

+ attribute_map = {

+ "hidden_size": "d_model",

+ "num_attention_heads": "encoder_attention_heads",

+ }

+

+ def __init__(

+ self,

+ backbone_config=None,

+ backbone=None,

+ use_pretrained_backbone=False,

+ use_timm_backbone=False,

+ backbone_kwargs=None,

+ text_config=None,

+ num_queries=900,

+ encoder_layers=6,

+ encoder_ffn_dim=2048,

+ encoder_attention_heads=8,

+ decoder_layers=6,

+ decoder_ffn_dim=2048,

+ decoder_attention_heads=8,

+ is_encoder_decoder=True,

+ activation_function="relu",

+ d_model=256,

+ dropout=0.1,

+ attention_dropout=0.0,

+ activation_dropout=0.0,

+ auxiliary_loss=False,

+ position_embedding_type="sine",

+ num_feature_levels=4,

+ encoder_n_points=4,

+ decoder_n_points=4,

+ two_stage=True,

+ class_cost=1.0,

+ bbox_cost=5.0,

+ giou_cost=2.0,

+ bbox_loss_coefficient=5.0,

+ giou_loss_coefficient=2.0,

+ focal_alpha=0.25,

+ disable_custom_kernels=False,

+ # other parameters

+ max_text_len=256,

+ text_enhancer_dropout=0.0,

+ fusion_droppath=0.1,

+ fusion_dropout=0.0,

+ embedding_init_target=True,

+ query_dim=4,

+ decoder_bbox_embed_share=True,

+ two_stage_bbox_embed_share=False,

+ positional_embedding_temperature=20,

+ init_std=0.02,

+ layer_norm_eps=1e-5,

+ **kwargs,

+ ):

+ if not use_timm_backbone and use_pretrained_backbone:

+ raise ValueError(

+ "Loading pretrained backbone weights from the transformers library is not supported yet. `use_timm_backbone` must be set to `True` when `use_pretrained_backbone=True`"

+ )

+

+ if backbone_config is not None and backbone is not None:

+ raise ValueError("You can't specify both `backbone` and `backbone_config`.")

+

+ if backbone_config is None and backbone is None:

+ logger.info("`backbone_config` is `None`. Initializing the config with the default `Swin` backbone.")

+ backbone_config = CONFIG_MAPPING["swin"](

+ window_size=7,

+ image_size=224,

+ embed_dim=96,

+ depths=[2, 2, 6, 2],

+ num_heads=[3, 6, 12, 24],

+ out_indices=[2, 3, 4],

+ )

+ elif isinstance(backbone_config, dict):

+ backbone_model_type = backbone_config.pop("model_type")

+ config_class = CONFIG_MAPPING[backbone_model_type]

+ backbone_config = config_class.from_dict(backbone_config)

+

+ if backbone_kwargs is not None and backbone_kwargs and backbone_config is not None:

+ raise ValueError("You can't specify both `backbone_kwargs` and `backbone_config`.")

+

+ if text_config is None:

+ text_config = {}

+ logger.info("text_config is None. Initializing the text config with default values (`BertConfig`).")

+

+ self.backbone_config = backbone_config

+ self.backbone = backbone

+ self.use_pretrained_backbone = use_pretrained_backbone

+ self.use_timm_backbone = use_timm_backbone

+ self.backbone_kwargs = backbone_kwargs

+ self.num_queries = num_queries

+ self.d_model = d_model

+ self.encoder_ffn_dim = encoder_ffn_dim

+ self.encoder_layers = encoder_layers

+ self.encoder_attention_heads = encoder_attention_heads

+ self.decoder_ffn_dim = decoder_ffn_dim

+ self.decoder_layers = decoder_layers

+ self.decoder_attention_heads = decoder_attention_heads

+ self.dropout = dropout

+ self.attention_dropout = attention_dropout

+ self.activation_dropout = activation_dropout

+ self.activation_function = activation_function

+ self.auxiliary_loss = auxiliary_loss

+ self.position_embedding_type = position_embedding_type

+ # deformable attributes

+ self.num_feature_levels = num_feature_levels

+ self.encoder_n_points = encoder_n_points

+ self.decoder_n_points = decoder_n_points

+ self.two_stage = two_stage

+ # Hungarian matcher

+ self.class_cost = class_cost

+ self.bbox_cost = bbox_cost

+ self.giou_cost = giou_cost

+ # Loss coefficients

+ self.bbox_loss_coefficient = bbox_loss_coefficient

+ self.giou_loss_coefficient = giou_loss_coefficient

+ self.focal_alpha = focal_alpha

+ self.disable_custom_kernels = disable_custom_kernels

+ # Text backbone

+ if isinstance(text_config, dict):

+ text_config["model_type"] = text_config["model_type"] if "model_type" in text_config else "bert"

+ text_config = CONFIG_MAPPING[text_config["model_type"]](**text_config)

+ elif text_config is None:

+ text_config = CONFIG_MAPPING["bert"]()

+

+ self.text_config = text_config

+ self.max_text_len = max_text_len

+

+ # Text Enhancer

+ self.text_enhancer_dropout = text_enhancer_dropout

+ # Fusion

+ self.fusion_droppath = fusion_droppath

+ self.fusion_dropout = fusion_dropout

+ # Others

+ self.embedding_init_target = embedding_init_target

+ self.query_dim = query_dim

+ self.decoder_bbox_embed_share = decoder_bbox_embed_share

+ self.two_stage_bbox_embed_share = two_stage_bbox_embed_share

+ if two_stage_bbox_embed_share and not decoder_bbox_embed_share:

+ raise ValueError("If two_stage_bbox_embed_share is True, decoder_bbox_embed_share must be True.")

+ self.positional_embedding_temperature = positional_embedding_temperature

+ self.init_std = init_std

+ self.layer_norm_eps = layer_norm_eps

+ super().__init__(is_encoder_decoder=is_encoder_decoder, **kwargs)

+

+ @property

+ def num_attention_heads(self) -> int:

+ return self.encoder_attention_heads

+

+ @property

+ def hidden_size(self) -> int:

+ return self.d_model

diff --git a/src/transformers/models/grounding_dino/convert_grounding_dino_to_hf.py b/src/transformers/models/grounding_dino/convert_grounding_dino_to_hf.py

new file mode 100644

index 00000000000000..ac8e82bfd825d6

--- /dev/null

+++ b/src/transformers/models/grounding_dino/convert_grounding_dino_to_hf.py

@@ -0,0 +1,491 @@

+# coding=utf-8

+# Copyright 2024 The HuggingFace Inc. team.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""Convert Grounding DINO checkpoints from the original repository.

+

+URL: https://github.com/IDEA-Research/GroundingDINO"""

+

+import argparse

+

+import requests

+import torch

+from PIL import Image

+from torchvision import transforms as T

+

+from transformers import (

+ AutoTokenizer,

+ GroundingDinoConfig,

+ GroundingDinoForObjectDetection,

+ GroundingDinoImageProcessor,

+ GroundingDinoProcessor,

+ SwinConfig,

+)

+

+

+IMAGENET_MEAN = [0.485, 0.456, 0.406]

+IMAGENET_STD = [0.229, 0.224, 0.225]

+

+

+def get_grounding_dino_config(model_name):

+ if "tiny" in model_name:

+ window_size = 7

+ embed_dim = 96

+ depths = (2, 2, 6, 2)

+ num_heads = (3, 6, 12, 24)

+ image_size = 224

+ elif "base" in model_name:

+ window_size = 12

+ embed_dim = 128

+ depths = (2, 2, 18, 2)

+ num_heads = (4, 8, 16, 32)

+ image_size = 384

+ else:

+ raise ValueError("Model not supported, only supports base and large variants")

+

+ backbone_config = SwinConfig(

+ window_size=window_size,

+ image_size=image_size,

+ embed_dim=embed_dim,

+ depths=depths,

+ num_heads=num_heads,

+ out_indices=[2, 3, 4],

+ )

+

+ config = GroundingDinoConfig(backbone_config=backbone_config)

+

+ return config

+

+

+def create_rename_keys(state_dict, config):

+ rename_keys = []

+ # fmt: off

+ ########################################## VISION BACKBONE - START

+ # patch embedding layer

+ rename_keys.append(("backbone.0.patch_embed.proj.weight",

+ "model.backbone.conv_encoder.model.embeddings.patch_embeddings.projection.weight"))

+ rename_keys.append(("backbone.0.patch_embed.proj.bias",

+ "model.backbone.conv_encoder.model.embeddings.patch_embeddings.projection.bias"))

+ rename_keys.append(("backbone.0.patch_embed.norm.weight",

+ "model.backbone.conv_encoder.model.embeddings.norm.weight"))

+ rename_keys.append(("backbone.0.patch_embed.norm.bias",

+ "model.backbone.conv_encoder.model.embeddings.norm.bias"))

+

+ for layer, depth in enumerate(config.backbone_config.depths):

+ for block in range(depth):

+ # layernorms

+ rename_keys.append((f"backbone.0.layers.{layer}.blocks.{block}.norm1.weight",