diff --git a/README.md b/README.md

index dd46c1dee6e53f..a5d220c2eac08d 100644

--- a/README.md

+++ b/README.md

@@ -475,6 +475,7 @@ Current number of checkpoints: ** (from Meta AI) released with the paper [Segment Anything](https://arxiv.org/pdf/2304.02643v1.pdf) by Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick.

1. **[SEW](https://huggingface.co/docs/transformers/model_doc/sew)** (from ASAPP) released with the paper [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) by Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi.

1. **[SEW-D](https://huggingface.co/docs/transformers/model_doc/sew_d)** (from ASAPP) released with the paper [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) by Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi.

+1. **[SigLIP](https://huggingface.co/docs/transformers/main/model_doc/siglip)** (from Google AI) released with the paper [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343) by Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer.

1. **[SpeechT5](https://huggingface.co/docs/transformers/model_doc/speecht5)** (from Microsoft Research) released with the paper [SpeechT5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language Processing](https://arxiv.org/abs/2110.07205) by Junyi Ao, Rui Wang, Long Zhou, Chengyi Wang, Shuo Ren, Yu Wu, Shujie Liu, Tom Ko, Qing Li, Yu Zhang, Zhihua Wei, Yao Qian, Jinyu Li, Furu Wei.

1. **[SpeechToTextTransformer](https://huggingface.co/docs/transformers/model_doc/speech_to_text)** (from Facebook), released together with the paper [fairseq S2T: Fast Speech-to-Text Modeling with fairseq](https://arxiv.org/abs/2010.05171) by Changhan Wang, Yun Tang, Xutai Ma, Anne Wu, Dmytro Okhonko, Juan Pino.

1. **[SpeechToTextTransformer2](https://huggingface.co/docs/transformers/model_doc/speech_to_text_2)** (from Facebook), released together with the paper [Large-Scale Self- and Semi-Supervised Learning for Speech Translation](https://arxiv.org/abs/2104.06678) by Changhan Wang, Anne Wu, Juan Pino, Alexei Baevski, Michael Auli, Alexis Conneau.

diff --git a/README_es.md b/README_es.md

index 3cc52152e5bcd5..625a3f3ed00117 100644

--- a/README_es.md

+++ b/README_es.md

@@ -450,6 +450,7 @@ Número actual de puntos de control: ** (from Meta AI) released with the paper [Segment Anything](https://arxiv.org/pdf/2304.02643v1.pdf) by Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick.

1. **[SEW](https://huggingface.co/docs/transformers/model_doc/sew)** (from ASAPP) released with the paper [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) by Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi.

1. **[SEW-D](https://huggingface.co/docs/transformers/model_doc/sew_d)** (from ASAPP) released with the paper [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) by Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi.

+1. **[SigLIP](https://huggingface.co/docs/transformers/main/model_doc/siglip)** (from Google AI) released with the paper [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343) by Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer.

1. **[SpeechT5](https://huggingface.co/docs/transformers/model_doc/speecht5)** (from Microsoft Research) released with the paper [SpeechT5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language Processing](https://arxiv.org/abs/2110.07205) by Junyi Ao, Rui Wang, Long Zhou, Chengyi Wang, Shuo Ren, Yu Wu, Shujie Liu, Tom Ko, Qing Li, Yu Zhang, Zhihua Wei, Yao Qian, Jinyu Li, Furu Wei.

1. **[SpeechToTextTransformer](https://huggingface.co/docs/transformers/model_doc/speech_to_text)** (from Facebook), released together with the paper [fairseq S2T: Fast Speech-to-Text Modeling with fairseq](https://arxiv.org/abs/2010.05171) by Changhan Wang, Yun Tang, Xutai Ma, Anne Wu, Dmytro Okhonko, Juan Pino.

1. **[SpeechToTextTransformer2](https://huggingface.co/docs/transformers/model_doc/speech_to_text_2)** (from Facebook), released together with the paper [Large-Scale Self- and Semi-Supervised Learning for Speech Translation](https://arxiv.org/abs/2104.06678) by Changhan Wang, Anne Wu, Juan Pino, Alexei Baevski, Michael Auli, Alexis Conneau.

diff --git a/README_hd.md b/README_hd.md

index 20a62f5c05e516..87c45015db2349 100644

--- a/README_hd.md

+++ b/README_hd.md

@@ -424,6 +424,7 @@ conda install conda-forge::transformers

1. **[Segment Anything](https://huggingface.co/docs/transformers/model_doc/sam)** (Meta AI से) Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick. द्वाराअनुसंधान पत्र [Segment Anything](https://arxiv.org/pdf/2304.02643v1.pdf) के साथ जारी किया गया

1. **[SEW](https://huggingface.co/docs/transformers/model_doc/sew)** (ASAPP से) साथ देने वाला पेपर [भाषण पहचान के लिए अनसुपरवाइज्ड प्री-ट्रेनिंग में परफॉर्मेंस-एफिशिएंसी ट्रेड-ऑफ्स](https ://arxiv.org/abs/2109.06870) फेलिक्स वू, क्वांगयुन किम, जिंग पैन, क्यू हान, किलियन क्यू. वेनबर्गर, योव आर्टज़ी द्वारा।

1. **[SEW-D](https://huggingface.co/docs/transformers/model_doc/sew_d)** (ASAPP से) साथ में पेपर [भाषण पहचान के लिए अनसुपरवाइज्ड प्री-ट्रेनिंग में परफॉर्मेंस-एफिशिएंसी ट्रेड-ऑफ्स] (https://arxiv.org/abs/2109.06870) फेलिक्स वू, क्वांगयुन किम, जिंग पैन, क्यू हान, किलियन क्यू. वेनबर्गर, योआव आर्टज़ी द्वारा पोस्ट किया गया।

+1. **[SigLIP](https://huggingface.co/docs/transformers/main/model_doc/siglip)** (Google AI से) Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer. द्वाराअनुसंधान पत्र [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343) के साथ जारी किया गया

1. **[SpeechT5](https://huggingface.co/docs/transformers/model_doc/speecht5)** (from Microsoft Research) released with the paper [SpeechT5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language Processing](https://arxiv.org/abs/2110.07205) by Junyi Ao, Rui Wang, Long Zhou, Chengyi Wang, Shuo Ren, Yu Wu, Shujie Liu, Tom Ko, Qing Li, Yu Zhang, Zhihua Wei, Yao Qian, Jinyu Li, Furu Wei.

1. **[SpeechToTextTransformer](https://huggingface.co/docs/transformers/model_doc/speech_to_text)** (फेसबुक से), साथ में पेपर [फेयरसेक S2T: फास्ट स्पीच-टू-टेक्स्ट मॉडलिंग विद फेयरसेक](https: //arxiv.org/abs/2010.05171) चांगहान वांग, यूं तांग, जुताई मा, ऐनी वू, दिमित्रो ओखोनको, जुआन पिनो द्वारा पोस्ट किया गया。

1. **[SpeechToTextTransformer2](https://huggingface.co/docs/transformers/model_doc/speech_to_text_2)** (फेसबुक से) साथ में पेपर [लार्ज-स्केल सेल्फ- एंड सेमी-सुपरवाइज्ड लर्निंग फॉर स्पीच ट्रांसलेशन](https://arxiv.org/abs/2104.06678) चांगहान वांग, ऐनी वू, जुआन पिनो, एलेक्सी बेवस्की, माइकल औली, एलेक्सिस द्वारा Conneau द्वारा पोस्ट किया गया।

diff --git a/README_ja.md b/README_ja.md

index b70719a2cd84f7..5a17f90525c343 100644

--- a/README_ja.md

+++ b/README_ja.md

@@ -484,6 +484,7 @@ Flax、PyTorch、TensorFlowをcondaでインストールする方法は、それ

1. **[Segment Anything](https://huggingface.co/docs/transformers/model_doc/sam)** (Meta AI から) Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick. から公開された研究論文 [Segment Anything](https://arxiv.org/pdf/2304.02643v1.pdf)

1. **[SEW](https://huggingface.co/docs/transformers/model_doc/sew)** (ASAPP から) Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi から公開された研究論文: [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870)

1. **[SEW-D](https://huggingface.co/docs/transformers/model_doc/sew_d)** (ASAPP から) Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi から公開された研究論文: [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870)

+1. **[SigLIP](https://huggingface.co/docs/transformers/main/model_doc/siglip)** (Google AI から) Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer. から公開された研究論文 [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343)

1. **[SpeechT5](https://huggingface.co/docs/transformers/model_doc/speecht5)** (Microsoft Research から) Junyi Ao, Rui Wang, Long Zhou, Chengyi Wang, Shuo Ren, Yu Wu, Shujie Liu, Tom Ko, Qing Li, Yu Zhang, Zhihua Wei, Yao Qian, Jinyu Li, Furu Wei. から公開された研究論文 [SpeechT5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language Processing](https://arxiv.org/abs/2110.07205)

1. **[SpeechToTextTransformer](https://huggingface.co/docs/transformers/model_doc/speech_to_text)** (Facebook から), Changhan Wang, Yun Tang, Xutai Ma, Anne Wu, Dmytro Okhonko, Juan Pino から公開された研究論文: [fairseq S2T: Fast Speech-to-Text Modeling with fairseq](https://arxiv.org/abs/2010.05171)

1. **[SpeechToTextTransformer2](https://huggingface.co/docs/transformers/model_doc/speech_to_text_2)** (Facebook から), Changhan Wang, Anne Wu, Juan Pino, Alexei Baevski, Michael Auli, Alexis Conneau から公開された研究論文: [Large-Scale Self- and Semi-Supervised Learning for Speech Translation](https://arxiv.org/abs/2104.06678)

diff --git a/README_ko.md b/README_ko.md

index 48ced9d0122a06..b92a06b772c2ea 100644

--- a/README_ko.md

+++ b/README_ko.md

@@ -399,6 +399,7 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

1. **[Segment Anything](https://huggingface.co/docs/transformers/model_doc/sam)** (Meta AI 에서 제공)은 Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick.의 [Segment Anything](https://arxiv.org/pdf/2304.02643v1.pdf)논문과 함께 발표했습니다.

1. **[SEW](https://huggingface.co/docs/transformers/model_doc/sew)** (ASAPP 에서) Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi 의 [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) 논문과 함께 발표했습니다.

1. **[SEW-D](https://huggingface.co/docs/transformers/model_doc/sew_d)** (ASAPP 에서) Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi 의 [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) 논문과 함께 발표했습니다.

+1. **[SigLIP](https://huggingface.co/docs/transformers/main/model_doc/siglip)** (Google AI 에서 제공)은 Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer.의 [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343)논문과 함께 발표했습니다.

1. **[SpeechT5](https://huggingface.co/docs/transformers/model_doc/speecht5)** (Microsoft Research 에서 제공)은 Junyi Ao, Rui Wang, Long Zhou, Chengyi Wang, Shuo Ren, Yu Wu, Shujie Liu, Tom Ko, Qing Li, Yu Zhang, Zhihua Wei, Yao Qian, Jinyu Li, Furu Wei.의 [SpeechT5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language Processing](https://arxiv.org/abs/2110.07205)논문과 함께 발표했습니다.

1. **[SpeechToTextTransformer](https://huggingface.co/docs/transformers/model_doc/speech_to_text)** (Facebook 에서) Changhan Wang, Yun Tang, Xutai Ma, Anne Wu, Dmytro Okhonko, Juan Pino 의 [fairseq S2T: Fast Speech-to-Text Modeling with fairseq](https://arxiv.org/abs/2010.05171) 논문과 함께 발표했습니다.

1. **[SpeechToTextTransformer2](https://huggingface.co/docs/transformers/model_doc/speech_to_text_2)** (Facebook 에서) Changhan Wang, Anne Wu, Juan Pino, Alexei Baevski, Michael Auli, Alexis Conneau 의 [Large-Scale Self- and Semi-Supervised Learning for Speech Translation](https://arxiv.org/abs/2104.06678) 논문과 함께 발표했습니다.

diff --git a/README_zh-hans.md b/README_zh-hans.md

index f537c779cdddd0..43e2381a4a6aff 100644

--- a/README_zh-hans.md

+++ b/README_zh-hans.md

@@ -423,6 +423,7 @@ conda install conda-forge::transformers

1. **[Segment Anything](https://huggingface.co/docs/transformers/model_doc/sam)** (来自 Meta AI) 伴随论文 [Segment Anything](https://arxiv.org/pdf/2304.02643v1.pdf) 由 Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick 发布。

1. **[SEW](https://huggingface.co/docs/transformers/model_doc/sew)** (来自 ASAPP) 伴随论文 [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) 由 Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi 发布。

1. **[SEW-D](https://huggingface.co/docs/transformers/model_doc/sew_d)** (来自 ASAPP) 伴随论文 [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) 由 Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi 发布。

+1. **[SigLIP](https://huggingface.co/docs/transformers/main/model_doc/siglip)** (来自 Google AI) 伴随论文 [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343) 由 Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer 发布。

1. **[SpeechT5](https://huggingface.co/docs/transformers/model_doc/speecht5)** (来自 Microsoft Research) 伴随论文 [SpeechT5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language Processing](https://arxiv.org/abs/2110.07205) 由 Junyi Ao, Rui Wang, Long Zhou, Chengyi Wang, Shuo Ren, Yu Wu, Shujie Liu, Tom Ko, Qing Li, Yu Zhang, Zhihua Wei, Yao Qian, Jinyu Li, Furu Wei 发布。

1. **[SpeechToTextTransformer](https://huggingface.co/docs/transformers/model_doc/speech_to_text)** (来自 Facebook), 伴随论文 [fairseq S2T: Fast Speech-to-Text Modeling with fairseq](https://arxiv.org/abs/2010.05171) 由 Changhan Wang, Yun Tang, Xutai Ma, Anne Wu, Dmytro Okhonko, Juan Pino 发布。

1. **[SpeechToTextTransformer2](https://huggingface.co/docs/transformers/model_doc/speech_to_text_2)** (来自 Facebook) 伴随论文 [Large-Scale Self- and Semi-Supervised Learning for Speech Translation](https://arxiv.org/abs/2104.06678) 由 Changhan Wang, Anne Wu, Juan Pino, Alexei Baevski, Michael Auli, Alexis Conneau 发布。

diff --git a/README_zh-hant.md b/README_zh-hant.md

index 2f35e28089fca1..84005dec7d4d54 100644

--- a/README_zh-hant.md

+++ b/README_zh-hant.md

@@ -435,6 +435,7 @@ conda install conda-forge::transformers

1. **[Segment Anything](https://huggingface.co/docs/transformers/model_doc/sam)** (from Meta AI) released with the paper [Segment Anything](https://arxiv.org/pdf/2304.02643v1.pdf) by Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick.

1. **[SEW](https://huggingface.co/docs/transformers/model_doc/sew)** (from ASAPP) released with the paper [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) by Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi.

1. **[SEW-D](https://huggingface.co/docs/transformers/model_doc/sew_d)** (from ASAPP) released with the paper [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870) by Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi.

+1. **[SigLIP](https://huggingface.co/docs/transformers/main/model_doc/siglip)** (from Google AI) released with the paper [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343) by Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer.

1. **[SpeechT5](https://huggingface.co/docs/transformers/model_doc/speecht5)** (from Microsoft Research) released with the paper [SpeechT5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language Processing](https://arxiv.org/abs/2110.07205) by Junyi Ao, Rui Wang, Long Zhou, Chengyi Wang, Shuo Ren, Yu Wu, Shujie Liu, Tom Ko, Qing Li, Yu Zhang, Zhihua Wei, Yao Qian, Jinyu Li, Furu Wei.

1. **[SpeechToTextTransformer](https://huggingface.co/docs/transformers/model_doc/speech_to_text)** (from Facebook), released together with the paper [fairseq S2T: Fast Speech-to-Text Modeling with fairseq](https://arxiv.org/abs/2010.05171) by Changhan Wang, Yun Tang, Xutai Ma, Anne Wu, Dmytro Okhonko, Juan Pino.

1. **[SpeechToTextTransformer2](https://huggingface.co/docs/transformers/model_doc/speech_to_text_2)** (from Facebook) released with the paper [Large-Scale Self- and Semi-Supervised Learning for Speech Translation](https://arxiv.org/abs/2104.06678) by Changhan Wang, Anne Wu, Juan Pino, Alexei Baevski, Michael Auli, Alexis Conneau.

diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index 739353d4d34a52..86cffb9a7e35cf 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -733,6 +733,8 @@

title: Pix2Struct

- local: model_doc/sam

title: Segment Anything

+ - local: model_doc/siglip

+ title: SigLIP

- local: model_doc/speech-encoder-decoder

title: Speech Encoder Decoder Models

- local: model_doc/tapas

diff --git a/docs/source/en/index.md b/docs/source/en/index.md

index bf87c991a996ef..52b5df6e59ba14 100644

--- a/docs/source/en/index.md

+++ b/docs/source/en/index.md

@@ -250,6 +250,7 @@ Flax), PyTorch, and/or TensorFlow.

| [SegFormer](model_doc/segformer) | ✅ | ✅ | ❌ |

| [SEW](model_doc/sew) | ✅ | ❌ | ❌ |

| [SEW-D](model_doc/sew-d) | ✅ | ❌ | ❌ |

+| [SigLIP](model_doc/siglip) | ✅ | ❌ | ❌ |

| [Speech Encoder decoder](model_doc/speech-encoder-decoder) | ✅ | ❌ | ✅ |

| [Speech2Text](model_doc/speech_to_text) | ✅ | ✅ | ❌ |

| [SpeechT5](model_doc/speecht5) | ✅ | ❌ | ❌ |

diff --git a/docs/source/en/model_doc/siglip.md b/docs/source/en/model_doc/siglip.md

new file mode 100644

index 00000000000000..5cebbf97848e9b

--- /dev/null

+++ b/docs/source/en/model_doc/siglip.md

@@ -0,0 +1,141 @@

+

+

+# SigLIP

+

+## Overview

+

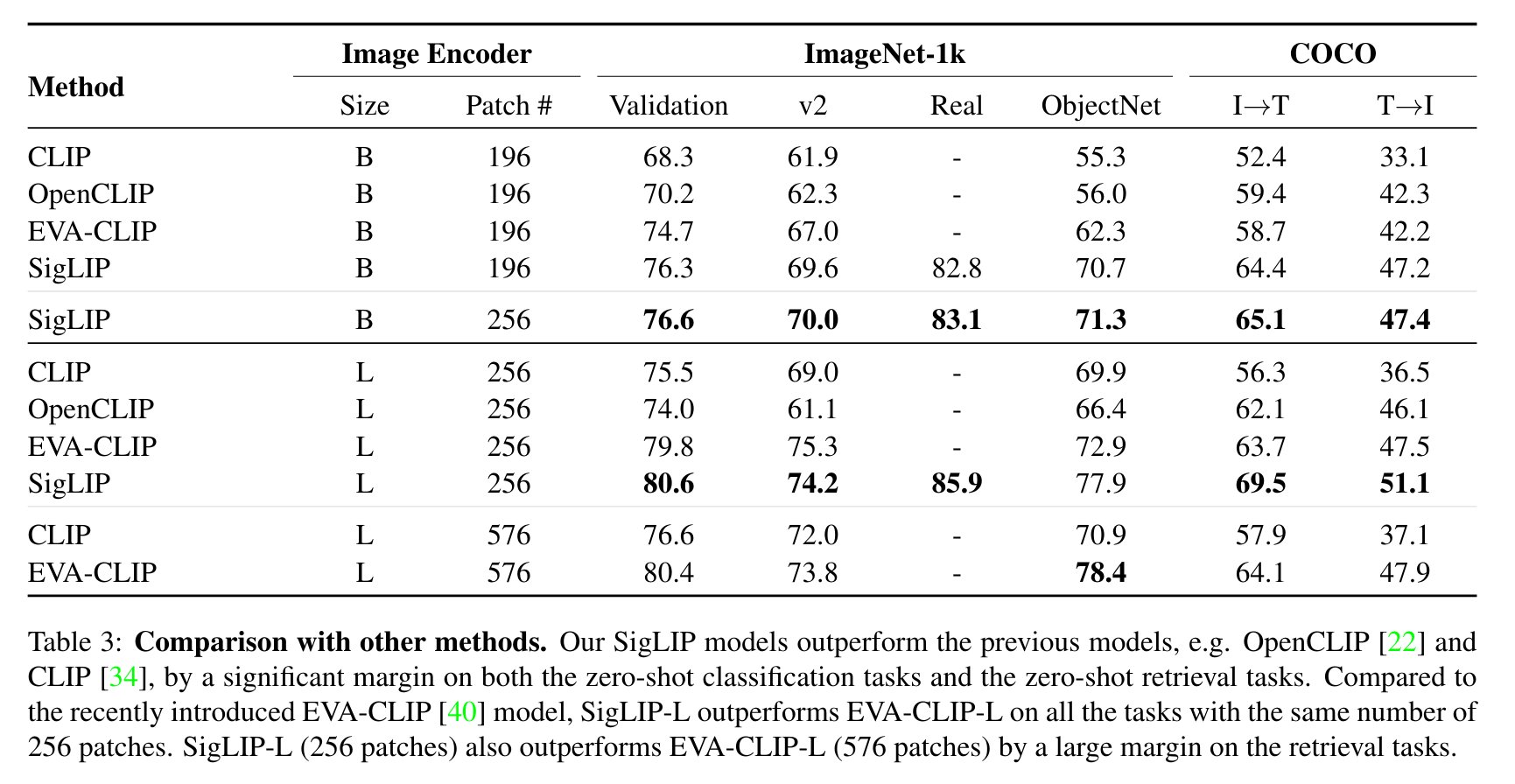

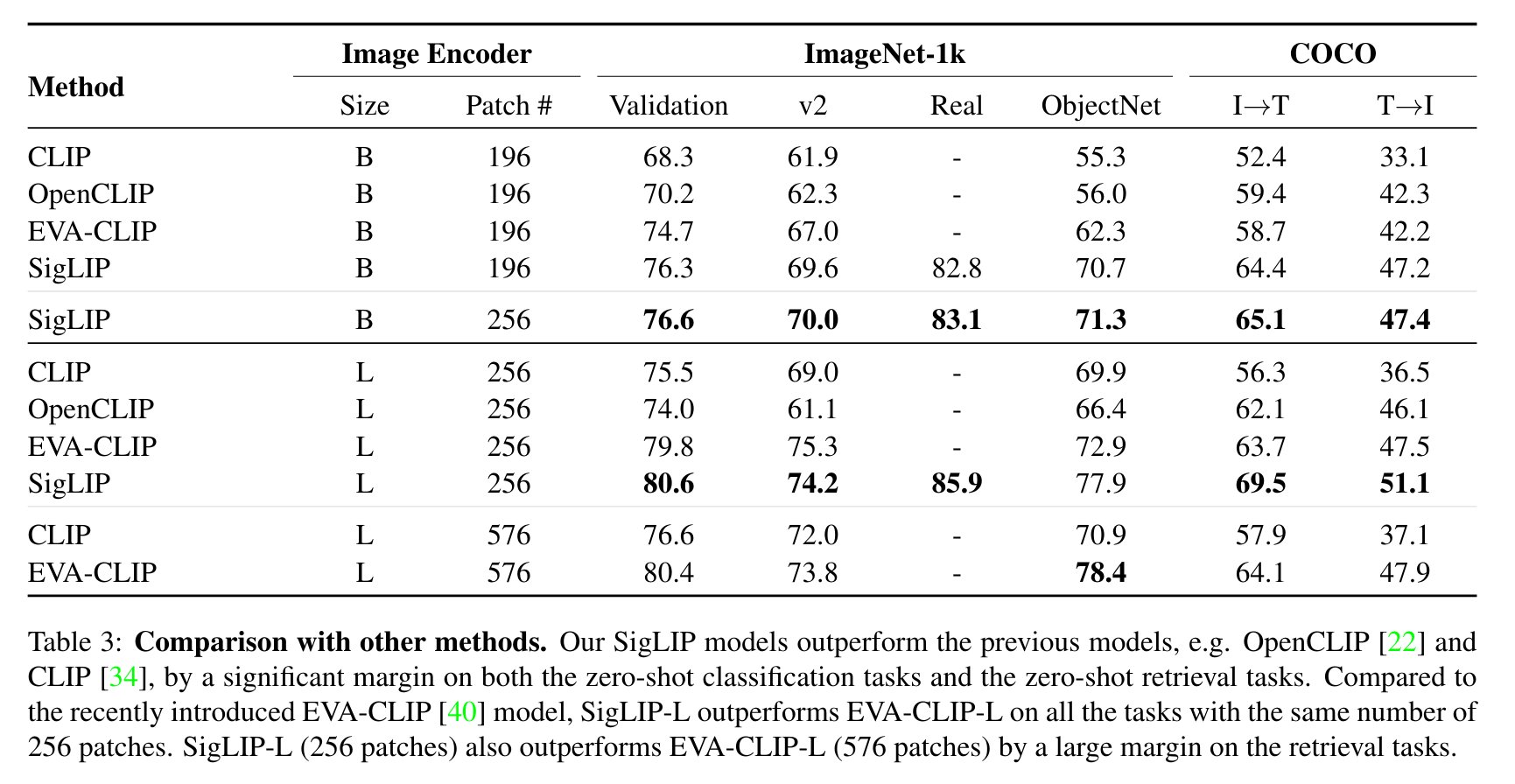

+The SigLIP model was proposed in [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343) by Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer. SigLIP proposes to replace the loss function used in [CLIP](clip) by a simple pairwise sigmoid loss. This results in better performance in terms of zero-shot classification accuracy on ImageNet.

+

+The abstract from the paper is the following:

+

+*We propose a simple pairwise Sigmoid loss for Language-Image Pre-training (SigLIP). Unlike standard contrastive learning with softmax normalization, the sigmoid loss operates solely on image-text pairs and does not require a global view of the pairwise similarities for normalization. The sigmoid loss simultaneously allows further scaling up the batch size, while also performing better at smaller batch sizes. Combined with Locked-image Tuning, with only four TPUv4 chips, we train a SigLiT model that achieves 84.5% ImageNet zero-shot accuracy in two days. The disentanglement of the batch size from the loss further allows us to study the impact of examples vs pairs and negative to positive ratio. Finally, we push the batch size to the extreme, up to one million, and find that the benefits of growing batch size quickly diminish, with a more reasonable batch size of 32k being sufficient.*

+

+## Usage tips

+

+- Usage of SigLIP is similar to [CLIP](clip). The main difference is the training loss, which does not require a global view of all the pairwise similarities of images and texts within a batch. One needs to apply the sigmoid activation function to the logits, rather than the softmax.

+- Training is not yet supported. If you want to fine-tune SigLIP or train from scratch, refer to the loss function from [OpenCLIP](https://github.com/mlfoundations/open_clip/blob/73ad04ae7fb93ede1c02dc9040a828634cb1edf1/src/open_clip/loss.py#L307), which leverages various `torch.distributed` utilities.

+- When using the standalone [`SiglipTokenizer`], make sure to pass `padding="max_length"` as that's how the model was trained. The multimodal [`SiglipProcessor`] takes care of this behind the scenes.

+

+ +

+ SigLIP evaluation results compared to CLIP. Taken from the original paper.

+

+This model was contributed by [nielsr](https://huggingface.co/nielsr).

+The original code can be found [here](https://github.com/google-research/big_vision/tree/main).

+

+## Usage example

+

+There are 2 main ways to use SigLIP: either using the pipeline API, which abstracts away all the complexity for you, or by using the `SiglipModel` class yourself.

+

+### Pipeline API

+

+The pipeline allows to use the model in a few lines of code:

+

+```python

+>>> from transformers import pipeline

+>>> from PIL import Image

+>>> import requests

+

+>>> # load pipe

+>>> image_classifier = pipeline(task="zero-shot-image-classification", model="google/siglip-base-patch16-224")

+

+>>> # load image

+>>> url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

+>>> image = Image.open(requests.get(url, stream=True).raw)

+

+>>> # inference

+>>> outputs = image_classifier(image, candidate_labels=["2 cats", "a plane", "a remote"])

+>>> outputs = [{"score": round(output["score"], 4), "label": output["label"] } for output in outputs]

+>>> print(outputs)

+[{'score': 0.1979, 'label': '2 cats'}, {'score': 0.0, 'label': 'a remote'}, {'score': 0.0, 'label': 'a plane'}]

+```

+

+### Using the model yourself

+

+If you want to do the pre- and postprocessing yourself, here's how to do that:

+

+```python

+>>> from PIL import Image

+>>> import requests

+>>> from transformers import AutoProcessor, AutoModel

+>>> import torch

+

+>>> model = AutoModel.from_pretrained("google/siglip-base-patch16-224")

+>>> processor = AutoProcessor.from_pretrained("google/siglip-base-patch16-224")

+

+>>> url = "http://images.cocodataset.org/val2017/000000039769.jpg"

+>>> image = Image.open(requests.get(url, stream=True).raw)

+

+>>> texts = ["a photo of 2 cats", "a photo of 2 dogs"]

+>>> inputs = processor(text=texts, images=image, return_tensors="pt")

+

+>>> with torch.no_grad():

+... outputs = model(**inputs)

+

+>>> logits_per_image = outputs.logits_per_image

+>>> probs = torch.sigmoid(logits_per_image) # these are the probabilities

+>>> print(f"{probs[0][0]:.1%} that image 0 is '{texts[0]}'")

+31.9% that image 0 is 'a photo of 2 cats'

+```

+

+## SiglipConfig

+

+[[autodoc]] SiglipConfig

+ - from_text_vision_configs

+

+## SiglipTextConfig

+

+[[autodoc]] SiglipTextConfig

+

+## SiglipVisionConfig

+

+[[autodoc]] SiglipVisionConfig

+

+## SiglipTokenizer

+

+[[autodoc]] SiglipTokenizer

+ - build_inputs_with_special_tokens

+ - get_special_tokens_mask

+ - create_token_type_ids_from_sequences

+ - save_vocabulary

+

+## SiglipImageProcessor

+

+[[autodoc]] SiglipImageProcessor

+ - preprocess

+

+## SiglipProcessor

+

+[[autodoc]] SiglipProcessor

+

+## SiglipModel

+

+[[autodoc]] SiglipModel

+ - forward

+ - get_text_features

+ - get_image_features

+

+## SiglipTextModel

+

+[[autodoc]] SiglipTextModel

+ - forward

+

+## SiglipVisionModel

+

+[[autodoc]] SiglipVisionModel

+ - forward

diff --git a/src/transformers/__init__.py b/src/transformers/__init__.py

index b3512b32eca93b..d721cc2bd2e84b 100644

--- a/src/transformers/__init__.py

+++ b/src/transformers/__init__.py

@@ -762,6 +762,14 @@

"models.segformer": ["SEGFORMER_PRETRAINED_CONFIG_ARCHIVE_MAP", "SegformerConfig"],

"models.sew": ["SEW_PRETRAINED_CONFIG_ARCHIVE_MAP", "SEWConfig"],

"models.sew_d": ["SEW_D_PRETRAINED_CONFIG_ARCHIVE_MAP", "SEWDConfig"],

+ "models.siglip": [

+ "SIGLIP_PRETRAINED_CONFIG_ARCHIVE_MAP",

+ "SiglipConfig",

+ "SiglipProcessor",

+ "SiglipTextConfig",

+ "SiglipTokenizer",

+ "SiglipVisionConfig",

+ ],

"models.speech_encoder_decoder": ["SpeechEncoderDecoderConfig"],

"models.speech_to_text": [

"SPEECH_TO_TEXT_PRETRAINED_CONFIG_ARCHIVE_MAP",

@@ -1290,6 +1298,7 @@

_import_structure["models.pvt"].extend(["PvtImageProcessor"])

_import_structure["models.sam"].extend(["SamImageProcessor"])

_import_structure["models.segformer"].extend(["SegformerFeatureExtractor", "SegformerImageProcessor"])

+ _import_structure["models.siglip"].append("SiglipImageProcessor")

_import_structure["models.swin2sr"].append("Swin2SRImageProcessor")

_import_structure["models.tvlt"].append("TvltImageProcessor")

_import_structure["models.tvp"].append("TvpImageProcessor")

@@ -3155,6 +3164,15 @@

"SEWDPreTrainedModel",

]

)

+ _import_structure["models.siglip"].extend(

+ [

+ "SIGLIP_PRETRAINED_MODEL_ARCHIVE_LIST",

+ "SiglipModel",

+ "SiglipPreTrainedModel",

+ "SiglipTextModel",

+ "SiglipVisionModel",

+ ]

+ )

_import_structure["models.speech_encoder_decoder"].extend(["SpeechEncoderDecoderModel"])

_import_structure["models.speech_to_text"].extend(

[

@@ -5441,6 +5459,14 @@

)

from .models.sew import SEW_PRETRAINED_CONFIG_ARCHIVE_MAP, SEWConfig

from .models.sew_d import SEW_D_PRETRAINED_CONFIG_ARCHIVE_MAP, SEWDConfig

+ from .models.siglip import (

+ SIGLIP_PRETRAINED_CONFIG_ARCHIVE_MAP,

+ SiglipConfig,

+ SiglipProcessor,

+ SiglipTextConfig,

+ SiglipTokenizer,

+ SiglipVisionConfig,

+ )

from .models.speech_encoder_decoder import SpeechEncoderDecoderConfig

from .models.speech_to_text import (

SPEECH_TO_TEXT_PRETRAINED_CONFIG_ARCHIVE_MAP,

@@ -5966,6 +5992,7 @@

from .models.pvt import PvtImageProcessor

from .models.sam import SamImageProcessor

from .models.segformer import SegformerFeatureExtractor, SegformerImageProcessor

+ from .models.siglip import SiglipImageProcessor

from .models.swin2sr import Swin2SRImageProcessor

from .models.tvlt import TvltImageProcessor

from .models.tvp import TvpImageProcessor

@@ -7508,6 +7535,13 @@

SEWDModel,

SEWDPreTrainedModel,

)

+ from .models.siglip import (

+ SIGLIP_PRETRAINED_MODEL_ARCHIVE_LIST,

+ SiglipModel,

+ SiglipPreTrainedModel,

+ SiglipTextModel,

+ SiglipVisionModel,

+ )

from .models.speech_encoder_decoder import SpeechEncoderDecoderModel

from .models.speech_to_text import (

SPEECH_TO_TEXT_PRETRAINED_MODEL_ARCHIVE_LIST,

diff --git a/src/transformers/models/__init__.py b/src/transformers/models/__init__.py

index 428eed37130ca6..2c20873c2ed79d 100644

--- a/src/transformers/models/__init__.py

+++ b/src/transformers/models/__init__.py

@@ -193,6 +193,7 @@

segformer,

sew,

sew_d,

+ siglip,

speech_encoder_decoder,

speech_to_text,

speech_to_text_2,

diff --git a/src/transformers/models/auto/configuration_auto.py b/src/transformers/models/auto/configuration_auto.py

index f1296b95484466..9eb3f1985c8536 100755

--- a/src/transformers/models/auto/configuration_auto.py

+++ b/src/transformers/models/auto/configuration_auto.py

@@ -200,6 +200,8 @@

("segformer", "SegformerConfig"),

("sew", "SEWConfig"),

("sew-d", "SEWDConfig"),

+ ("siglip", "SiglipConfig"),

+ ("siglip_vision_model", "SiglipVisionConfig"),

("speech-encoder-decoder", "SpeechEncoderDecoderConfig"),

("speech_to_text", "Speech2TextConfig"),

("speech_to_text_2", "Speech2Text2Config"),

@@ -419,6 +421,7 @@

("segformer", "SEGFORMER_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("sew", "SEW_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("sew-d", "SEW_D_PRETRAINED_CONFIG_ARCHIVE_MAP"),

+ ("siglip", "SIGLIP_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("speech_to_text", "SPEECH_TO_TEXT_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("speech_to_text_2", "SPEECH_TO_TEXT_2_PRETRAINED_CONFIG_ARCHIVE_MAP"),

("speecht5", "SPEECHT5_PRETRAINED_CONFIG_ARCHIVE_MAP"),

@@ -664,6 +667,8 @@

("segformer", "SegFormer"),

("sew", "SEW"),

("sew-d", "SEW-D"),

+ ("siglip", "SigLIP"),

+ ("siglip_vision_model", "SiglipVisionModel"),

("speech-encoder-decoder", "Speech Encoder decoder"),

("speech_to_text", "Speech2Text"),

("speech_to_text_2", "Speech2Text2"),

@@ -755,6 +760,7 @@

("maskformer-swin", "maskformer"),

("xclip", "x_clip"),

("clip_vision_model", "clip"),

+ ("siglip_vision_model", "siglip"),

]

)

diff --git a/src/transformers/models/auto/image_processing_auto.py b/src/transformers/models/auto/image_processing_auto.py

index 446c9adf1b6dc3..5180d2331aacc9 100644

--- a/src/transformers/models/auto/image_processing_auto.py

+++ b/src/transformers/models/auto/image_processing_auto.py

@@ -97,6 +97,7 @@

("resnet", "ConvNextImageProcessor"),

("sam", "SamImageProcessor"),

("segformer", "SegformerImageProcessor"),

+ ("siglip", "SiglipImageProcessor"),

("swiftformer", "ViTImageProcessor"),

("swin", "ViTImageProcessor"),

("swin2sr", "Swin2SRImageProcessor"),

diff --git a/src/transformers/models/auto/modeling_auto.py b/src/transformers/models/auto/modeling_auto.py

index 6657b8b1b6187e..7bf50a4518fa88 100755

--- a/src/transformers/models/auto/modeling_auto.py

+++ b/src/transformers/models/auto/modeling_auto.py

@@ -193,6 +193,8 @@

("segformer", "SegformerModel"),

("sew", "SEWModel"),

("sew-d", "SEWDModel"),

+ ("siglip", "SiglipModel"),

+ ("siglip_vision_model", "SiglipVisionModel"),

("speech_to_text", "Speech2TextModel"),

("speecht5", "SpeechT5Model"),

("splinter", "SplinterModel"),

@@ -1102,6 +1104,7 @@

("chinese_clip", "ChineseCLIPModel"),

("clip", "CLIPModel"),

("clipseg", "CLIPSegModel"),

+ ("siglip", "SiglipModel"),

]

)

diff --git a/src/transformers/models/auto/processing_auto.py b/src/transformers/models/auto/processing_auto.py

index 93dc6ab6050bb9..eee8af931e99ac 100644

--- a/src/transformers/models/auto/processing_auto.py

+++ b/src/transformers/models/auto/processing_auto.py

@@ -77,6 +77,7 @@

("seamless_m4t", "SeamlessM4TProcessor"),

("sew", "Wav2Vec2Processor"),

("sew-d", "Wav2Vec2Processor"),

+ ("siglip", "SiglipProcessor"),

("speech_to_text", "Speech2TextProcessor"),

("speech_to_text_2", "Speech2Text2Processor"),

("speecht5", "SpeechT5Processor"),

diff --git a/src/transformers/models/auto/tokenization_auto.py b/src/transformers/models/auto/tokenization_auto.py

index 5ff79fd822b950..2381d8153dc0ee 100644

--- a/src/transformers/models/auto/tokenization_auto.py

+++ b/src/transformers/models/auto/tokenization_auto.py

@@ -372,6 +372,7 @@

"SeamlessM4TTokenizerFast" if is_tokenizers_available() else None,

),

),

+ ("siglip", ("SiglipTokenizer", None)),

("speech_to_text", ("Speech2TextTokenizer" if is_sentencepiece_available() else None, None)),

("speech_to_text_2", ("Speech2Text2Tokenizer", None)),

("speecht5", ("SpeechT5Tokenizer" if is_sentencepiece_available() else None, None)),

diff --git a/src/transformers/models/siglip/__init__.py b/src/transformers/models/siglip/__init__.py

new file mode 100644

index 00000000000000..d47b7c5f5d02f6

--- /dev/null

+++ b/src/transformers/models/siglip/__init__.py

@@ -0,0 +1,94 @@

+# Copyright 2024 The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+from typing import TYPE_CHECKING

+

+from ...utils import (

+ OptionalDependencyNotAvailable,

+ _LazyModule,

+ is_torch_available,

+ is_vision_available,

+)

+

+

+_import_structure = {

+ "configuration_siglip": [

+ "SIGLIP_PRETRAINED_CONFIG_ARCHIVE_MAP",

+ "SiglipConfig",

+ "SiglipTextConfig",

+ "SiglipVisionConfig",

+ ],

+ "processing_siglip": ["SiglipProcessor"],

+ "tokenization_siglip": ["SiglipTokenizer"],

+}

+

+try:

+ if not is_vision_available():

+ raise OptionalDependencyNotAvailable()

+except OptionalDependencyNotAvailable:

+ pass

+else:

+ _import_structure["image_processing_siglip"] = ["SiglipImageProcessor"]

+

+try:

+ if not is_torch_available():

+ raise OptionalDependencyNotAvailable()

+except OptionalDependencyNotAvailable:

+ pass

+else:

+ _import_structure["modeling_siglip"] = [

+ "SIGLIP_PRETRAINED_MODEL_ARCHIVE_LIST",

+ "SiglipModel",

+ "SiglipPreTrainedModel",

+ "SiglipTextModel",

+ "SiglipVisionModel",

+ ]

+

+

+if TYPE_CHECKING:

+ from .configuration_siglip import (

+ SIGLIP_PRETRAINED_CONFIG_ARCHIVE_MAP,

+ SiglipConfig,

+ SiglipTextConfig,

+ SiglipVisionConfig,

+ )

+ from .processing_siglip import SiglipProcessor

+ from .tokenization_siglip import SiglipTokenizer

+

+ try:

+ if not is_vision_available():

+ raise OptionalDependencyNotAvailable()

+ except OptionalDependencyNotAvailable:

+ pass

+ else:

+ from .image_processing_siglip import SiglipImageProcessor

+

+ try:

+ if not is_torch_available():

+ raise OptionalDependencyNotAvailable()

+ except OptionalDependencyNotAvailable:

+ pass

+ else:

+ from .modeling_siglip import (

+ SIGLIP_PRETRAINED_MODEL_ARCHIVE_LIST,

+ SiglipModel,

+ SiglipPreTrainedModel,

+ SiglipTextModel,

+ SiglipVisionModel,

+ )

+

+

+else:

+ import sys

+

+ sys.modules[__name__] = _LazyModule(__name__, globals()["__file__"], _import_structure, module_spec=__spec__)

diff --git a/src/transformers/models/siglip/configuration_siglip.py b/src/transformers/models/siglip/configuration_siglip.py

new file mode 100644

index 00000000000000..990bad7ace3808

--- /dev/null

+++ b/src/transformers/models/siglip/configuration_siglip.py

@@ -0,0 +1,302 @@

+# coding=utf-8

+# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+""" Siglip model configuration"""

+

+import os

+from typing import Union

+

+from ...configuration_utils import PretrainedConfig

+from ...utils import logging

+

+

+logger = logging.get_logger(__name__)

+

+SIGLIP_PRETRAINED_CONFIG_ARCHIVE_MAP = {

+ "google/siglip-base-patch16-224": "https://huggingface.co/google/siglip-base-patch16-224/resolve/main/config.json",

+}

+

+

+class SiglipTextConfig(PretrainedConfig):

+ r"""

+ This is the configuration class to store the configuration of a [`SiglipTextModel`]. It is used to instantiate a

+ Siglip text encoder according to the specified arguments, defining the model architecture. Instantiating a

+ configuration with the defaults will yield a similar configuration to that of the text encoder of the Siglip

+ [google/siglip-base-patch16-224](https://huggingface.co/google/siglip-base-patch16-224) architecture.

+

+ Configuration objects inherit from [`PretrainedConfig`] and can be used to control the model outputs. Read the

+ documentation from [`PretrainedConfig`] for more information.

+

+ Args:

+ vocab_size (`int`, *optional*, defaults to 32000):

+ Vocabulary size of the Siglip text model. Defines the number of different tokens that can be represented by

+ the `inputs_ids` passed when calling [`SiglipModel`].

+ hidden_size (`int`, *optional*, defaults to 768):

+ Dimensionality of the encoder layers and the pooler layer.

+ intermediate_size (`int`, *optional*, defaults to 3072):

+ Dimensionality of the "intermediate" (i.e., feed-forward) layer in the Transformer encoder.

+ num_hidden_layers (`int`, *optional*, defaults to 12):

+ Number of hidden layers in the Transformer encoder.

+ num_attention_heads (`int`, *optional*, defaults to 12):

+ Number of attention heads for each attention layer in the Transformer encoder.

+ max_position_embeddings (`int`, *optional*, defaults to 64):

+ The maximum sequence length that this model might ever be used with. Typically set this to something large

+ just in case (e.g., 512 or 1024 or 2048).

+ hidden_act (`str` or `function`, *optional*, defaults to `"gelu_pytorch_tanh"`):

+ The non-linear activation function (function or string) in the encoder and pooler. If string, `"gelu"`,

+ `"relu"`, `"selu"` and `"gelu_new"` `"quick_gelu"` are supported.

+ layer_norm_eps (`float`, *optional*, defaults to 1e-06):

+ The epsilon used by the layer normalization layers.

+ attention_dropout (`float`, *optional*, defaults to 0.0):

+ The dropout ratio for the attention probabilities.

+ pad_token_id (`int`, *optional*, defaults to 1):

+ The id of the padding token in the vocabulary.

+ bos_token_id (`int`, *optional*, defaults to 49406):

+ The id of the beginning-of-sequence token in the vocabulary.

+ eos_token_id (`int`, *optional*, defaults to 49407):

+ The id of the end-of-sequence token in the vocabulary.

+

+ Example:

+

+ ```python

+ >>> from transformers import SiglipTextConfig, SiglipTextModel

+

+ >>> # Initializing a SiglipTextConfig with google/siglip-base-patch16-224 style configuration

+ >>> configuration = SiglipTextConfig()

+

+ >>> # Initializing a SiglipTextModel (with random weights) from the google/siglip-base-patch16-224 style configuration

+ >>> model = SiglipTextModel(configuration)

+

+ >>> # Accessing the model configuration

+ >>> configuration = model.config

+ ```"""

+

+ model_type = "siglip_text_model"

+

+ def __init__(

+ self,

+ vocab_size=32000,

+ hidden_size=768,

+ intermediate_size=3072,

+ num_hidden_layers=12,

+ num_attention_heads=12,

+ max_position_embeddings=64,

+ hidden_act="gelu_pytorch_tanh",

+ layer_norm_eps=1e-6,

+ attention_dropout=0.0,

+ # This differs from `CLIPTokenizer`'s default and from openai/siglip

+ # See https://github.com/huggingface/transformers/pull/24773#issuecomment-1632287538

+ pad_token_id=1,

+ bos_token_id=49406,

+ eos_token_id=49407,

+ **kwargs,

+ ):

+ super().__init__(pad_token_id=pad_token_id, bos_token_id=bos_token_id, eos_token_id=eos_token_id, **kwargs)

+

+ self.vocab_size = vocab_size

+ self.hidden_size = hidden_size

+ self.intermediate_size = intermediate_size

+ self.num_hidden_layers = num_hidden_layers

+ self.num_attention_heads = num_attention_heads

+ self.max_position_embeddings = max_position_embeddings

+ self.layer_norm_eps = layer_norm_eps

+ self.hidden_act = hidden_act

+ self.attention_dropout = attention_dropout

+

+ @classmethod

+ def from_pretrained(cls, pretrained_model_name_or_path: Union[str, os.PathLike], **kwargs) -> "PretrainedConfig":

+ cls._set_token_in_kwargs(kwargs)

+

+ config_dict, kwargs = cls.get_config_dict(pretrained_model_name_or_path, **kwargs)

+

+ # get the text config dict if we are loading from SiglipConfig

+ if config_dict.get("model_type") == "siglip":

+ config_dict = config_dict["text_config"]

+

+ if "model_type" in config_dict and hasattr(cls, "model_type") and config_dict["model_type"] != cls.model_type:

+ logger.warning(

+ f"You are using a model of type {config_dict['model_type']} to instantiate a model of type "

+ f"{cls.model_type}. This is not supported for all configurations of models and can yield errors."

+ )

+

+ return cls.from_dict(config_dict, **kwargs)

+

+

+class SiglipVisionConfig(PretrainedConfig):

+ r"""

+ This is the configuration class to store the configuration of a [`SiglipVisionModel`]. It is used to instantiate a

+ Siglip vision encoder according to the specified arguments, defining the model architecture. Instantiating a

+ configuration with the defaults will yield a similar configuration to that of the vision encoder of the Siglip

+ [google/siglip-base-patch16-224](https://huggingface.co/google/siglip-base-patch16-224) architecture.

+

+ Configuration objects inherit from [`PretrainedConfig`] and can be used to control the model outputs. Read the

+ documentation from [`PretrainedConfig`] for more information.

+

+ Args:

+ hidden_size (`int`, *optional*, defaults to 768):

+ Dimensionality of the encoder layers and the pooler layer.

+ intermediate_size (`int`, *optional*, defaults to 3072):

+ Dimensionality of the "intermediate" (i.e., feed-forward) layer in the Transformer encoder.

+ num_hidden_layers (`int`, *optional*, defaults to 12):

+ Number of hidden layers in the Transformer encoder.

+ num_attention_heads (`int`, *optional*, defaults to 12):

+ Number of attention heads for each attention layer in the Transformer encoder.

+ num_channels (`int`, *optional*, defaults to 3):

+ Number of channels in the input images.

+ image_size (`int`, *optional*, defaults to 224):

+ The size (resolution) of each image.

+ patch_size (`int`, *optional*, defaults to 16):

+ The size (resolution) of each patch.

+ hidden_act (`str` or `function`, *optional*, defaults to `"gelu_pytorch_tanh"`):

+ The non-linear activation function (function or string) in the encoder and pooler. If string, `"gelu"`,

+ `"relu"`, `"selu"` and `"gelu_new"` ``"quick_gelu"` are supported.

+ layer_norm_eps (`float`, *optional*, defaults to 1e-06):

+ The epsilon used by the layer normalization layers.

+ attention_dropout (`float`, *optional*, defaults to 0.0):

+ The dropout ratio for the attention probabilities.

+

+ Example:

+

+ ```python

+ >>> from transformers import SiglipVisionConfig, SiglipVisionModel

+

+ >>> # Initializing a SiglipVisionConfig with google/siglip-base-patch16-224 style configuration

+ >>> configuration = SiglipVisionConfig()

+

+ >>> # Initializing a SiglipVisionModel (with random weights) from the google/siglip-base-patch16-224 style configuration

+ >>> model = SiglipVisionModel(configuration)

+

+ >>> # Accessing the model configuration

+ >>> configuration = model.config

+ ```"""

+

+ model_type = "siglip_vision_model"

+

+ def __init__(

+ self,

+ hidden_size=768,

+ intermediate_size=3072,

+ num_hidden_layers=12,

+ num_attention_heads=12,

+ num_channels=3,

+ image_size=224,

+ patch_size=16,

+ hidden_act="gelu_pytorch_tanh",

+ layer_norm_eps=1e-6,

+ attention_dropout=0.0,

+ **kwargs,

+ ):

+ super().__init__(**kwargs)

+

+ self.hidden_size = hidden_size

+ self.intermediate_size = intermediate_size

+ self.num_hidden_layers = num_hidden_layers

+ self.num_attention_heads = num_attention_heads

+ self.num_channels = num_channels

+ self.patch_size = patch_size

+ self.image_size = image_size

+ self.attention_dropout = attention_dropout

+ self.layer_norm_eps = layer_norm_eps

+ self.hidden_act = hidden_act

+

+ @classmethod

+ def from_pretrained(cls, pretrained_model_name_or_path: Union[str, os.PathLike], **kwargs) -> "PretrainedConfig":

+ cls._set_token_in_kwargs(kwargs)

+

+ config_dict, kwargs = cls.get_config_dict(pretrained_model_name_or_path, **kwargs)

+

+ # get the vision config dict if we are loading from SiglipConfig

+ if config_dict.get("model_type") == "siglip":

+ config_dict = config_dict["vision_config"]

+

+ if "model_type" in config_dict and hasattr(cls, "model_type") and config_dict["model_type"] != cls.model_type:

+ logger.warning(

+ f"You are using a model of type {config_dict['model_type']} to instantiate a model of type "

+ f"{cls.model_type}. This is not supported for all configurations of models and can yield errors."

+ )

+

+ return cls.from_dict(config_dict, **kwargs)

+

+

+class SiglipConfig(PretrainedConfig):

+ r"""

+ [`SiglipConfig`] is the configuration class to store the configuration of a [`SiglipModel`]. It is used to

+ instantiate a Siglip model according to the specified arguments, defining the text model and vision model configs.

+ Instantiating a configuration with the defaults will yield a similar configuration to that of the Siglip

+ [google/siglip-base-patch16-224](https://huggingface.co/google/siglip-base-patch16-224) architecture.

+

+ Configuration objects inherit from [`PretrainedConfig`] and can be used to control the model outputs. Read the

+ documentation from [`PretrainedConfig`] for more information.

+

+ Args:

+ text_config (`dict`, *optional*):

+ Dictionary of configuration options used to initialize [`SiglipTextConfig`].

+ vision_config (`dict`, *optional*):

+ Dictionary of configuration options used to initialize [`SiglipVisionConfig`].

+ kwargs (*optional*):

+ Dictionary of keyword arguments.

+

+ Example:

+

+ ```python

+ >>> from transformers import SiglipConfig, SiglipModel

+

+ >>> # Initializing a SiglipConfig with google/siglip-base-patch16-224 style configuration

+ >>> configuration = SiglipConfig()

+

+ >>> # Initializing a SiglipModel (with random weights) from the google/siglip-base-patch16-224 style configuration

+ >>> model = SiglipModel(configuration)

+

+ >>> # Accessing the model configuration

+ >>> configuration = model.config

+

+ >>> # We can also initialize a SiglipConfig from a SiglipTextConfig and a SiglipVisionConfig

+ >>> from transformers import SiglipTextConfig, SiglipVisionConfig

+

+ >>> # Initializing a SiglipText and SiglipVision configuration

+ >>> config_text = SiglipTextConfig()

+ >>> config_vision = SiglipVisionConfig()

+

+ >>> config = SiglipConfig.from_text_vision_configs(config_text, config_vision)

+ ```"""

+

+ model_type = "siglip"

+

+ def __init__(self, text_config=None, vision_config=None, **kwargs):

+ super().__init__(**kwargs)

+

+ if text_config is None:

+ text_config = {}

+ logger.info("`text_config` is `None`. Initializing the `SiglipTextConfig` with default values.")

+

+ if vision_config is None:

+ vision_config = {}

+ logger.info("`vision_config` is `None`. initializing the `SiglipVisionConfig` with default values.")

+

+ self.text_config = SiglipTextConfig(**text_config)

+ self.vision_config = SiglipVisionConfig(**vision_config)

+

+ self.initializer_factor = 1.0

+

+ @classmethod

+ def from_text_vision_configs(cls, text_config: SiglipTextConfig, vision_config: SiglipVisionConfig, **kwargs):

+ r"""

+ Instantiate a [`SiglipConfig`] (or a derived class) from siglip text model configuration and siglip vision

+ model configuration.

+

+ Returns:

+ [`SiglipConfig`]: An instance of a configuration object

+ """

+

+ return cls(text_config=text_config.to_dict(), vision_config=vision_config.to_dict(), **kwargs)

diff --git a/src/transformers/models/siglip/convert_siglip_to_hf.py b/src/transformers/models/siglip/convert_siglip_to_hf.py

new file mode 100644

index 00000000000000..6adacef84f9e27

--- /dev/null

+++ b/src/transformers/models/siglip/convert_siglip_to_hf.py

@@ -0,0 +1,413 @@

+# coding=utf-8

+# Copyright 2024 The HuggingFace Inc. team.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""Convert SigLIP checkpoints from the original repository.

+

+URL: https://github.com/google-research/big_vision/tree/main

+"""

+

+

+import argparse

+import collections

+from pathlib import Path

+

+import numpy as np

+import requests

+import torch

+from huggingface_hub import hf_hub_download

+from numpy import load

+from PIL import Image

+

+from transformers import SiglipConfig, SiglipImageProcessor, SiglipModel, SiglipProcessor, SiglipTokenizer

+from transformers.utils import logging

+

+

+logging.set_verbosity_info()

+logger = logging.get_logger(__name__)

+

+

+model_name_to_checkpoint = {

+ # base checkpoints

+ "siglip-base-patch16-224": "/Users/nielsrogge/Documents/SigLIP/webli_en_b16_224_63724782.npz",

+ "siglip-base-patch16-256": "/Users/nielsrogge/Documents/SigLIP/webli_en_b16_256_60500360.npz",

+ "siglip-base-patch16-384": "/Users/nielsrogge/Documents/SigLIP/webli_en_b16_384_68578854.npz",

+ "siglip-base-patch16-512": "/Users/nielsrogge/Documents/SigLIP/webli_en_b16_512_68580893.npz",

+ # large checkpoints

+ "siglip-large-patch16-256": "/Users/nielsrogge/Documents/SigLIP/webli_en_l16_256_60552751.npz",

+ "siglip-large-patch16-384": "/Users/nielsrogge/Documents/SigLIP/webli_en_l16_384_63634585.npz",

+ # multilingual checkpoint

+ "siglip-base-patch16-256-i18n": "/Users/nielsrogge/Documents/SigLIP/webli_i18n_b16_256_66117334.npz",

+ # so400m checkpoints

+ "siglip-so400m-patch14-384": "/Users/nielsrogge/Documents/SigLIP/webli_en_so400m_384_58765454.npz",

+}

+

+model_name_to_image_size = {

+ "siglip-base-patch16-224": 224,

+ "siglip-base-patch16-256": 256,

+ "siglip-base-patch16-384": 384,

+ "siglip-base-patch16-512": 512,

+ "siglip-large-patch16-256": 256,

+ "siglip-large-patch16-384": 384,

+ "siglip-base-patch16-256-i18n": 256,

+ "siglip-so400m-patch14-384": 384,

+}

+

+

+def get_siglip_config(model_name):

+ config = SiglipConfig()

+

+ vocab_size = 250000 if "i18n" in model_name else 32000

+ image_size = model_name_to_image_size[model_name]

+ patch_size = 16 if "patch16" in model_name else 14

+

+ # size of the architecture

+ config.vision_config.image_size = image_size

+ config.vision_config.patch_size = patch_size

+ config.text_config.vocab_size = vocab_size

+

+ if "base" in model_name:

+ pass

+ elif "large" in model_name:

+ config.text_config.hidden_size = 1024

+ config.text_config.intermediate_size = 4096

+ config.text_config.num_hidden_layers = 24

+ config.text_config.num_attention_heads = 16

+ config.vision_config.hidden_size = 1024

+ config.vision_config.intermediate_size = 4096

+ config.vision_config.num_hidden_layers = 24

+ config.vision_config.num_attention_heads = 16

+ elif "so400m" in model_name:

+ config.text_config.hidden_size = 1152

+ config.text_config.intermediate_size = 4304

+ config.text_config.num_hidden_layers = 27

+ config.text_config.num_attention_heads = 16

+ config.vision_config.hidden_size = 1152

+ config.vision_config.intermediate_size = 4304

+ config.vision_config.num_hidden_layers = 27

+ config.vision_config.num_attention_heads = 16

+ else:

+ raise ValueError("Model not supported")

+

+ return config

+

+

+def create_rename_keys(config):

+ rename_keys = []

+ # fmt: off

+

+ # vision encoder

+

+ rename_keys.append(("params/img/embedding/kernel", "vision_model.embeddings.patch_embedding.weight"))

+ rename_keys.append(("params/img/embedding/bias", "vision_model.embeddings.patch_embedding.bias"))

+ rename_keys.append(("params/img/pos_embedding", "vision_model.embeddings.position_embedding.weight"))

+

+ for i in range(config.vision_config.num_hidden_layers):

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/LayerNorm_0/scale", f"vision_model.encoder.layers.{i}.layer_norm1.weight"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/LayerNorm_0/bias", f"vision_model.encoder.layers.{i}.layer_norm1.bias"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/LayerNorm_1/scale", f"vision_model.encoder.layers.{i}.layer_norm2.weight"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/LayerNorm_1/bias", f"vision_model.encoder.layers.{i}.layer_norm2.bias"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MlpBlock_0/Dense_0/kernel", f"vision_model.encoder.layers.{i}.mlp.fc1.weight"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MlpBlock_0/Dense_0/bias", f"vision_model.encoder.layers.{i}.mlp.fc1.bias"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MlpBlock_0/Dense_1/kernel", f"vision_model.encoder.layers.{i}.mlp.fc2.weight"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MlpBlock_0/Dense_1/bias", f"vision_model.encoder.layers.{i}.mlp.fc2.bias"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MultiHeadDotProductAttention_0/key/kernel", f"vision_model.encoder.layers.{i}.self_attn.k_proj.weight"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MultiHeadDotProductAttention_0/key/bias", f"vision_model.encoder.layers.{i}.self_attn.k_proj.bias"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MultiHeadDotProductAttention_0/value/kernel", f"vision_model.encoder.layers.{i}.self_attn.v_proj.weight"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MultiHeadDotProductAttention_0/value/bias", f"vision_model.encoder.layers.{i}.self_attn.v_proj.bias"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MultiHeadDotProductAttention_0/query/kernel", f"vision_model.encoder.layers.{i}.self_attn.q_proj.weight"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MultiHeadDotProductAttention_0/query/bias", f"vision_model.encoder.layers.{i}.self_attn.q_proj.bias"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MultiHeadDotProductAttention_0/out/kernel", f"vision_model.encoder.layers.{i}.self_attn.out_proj.weight"))

+ rename_keys.append((f"params/img/Transformer/encoderblock_{i}/MultiHeadDotProductAttention_0/out/bias", f"vision_model.encoder.layers.{i}.self_attn.out_proj.bias"))

+

+ rename_keys.append(("params/img/Transformer/encoder_norm/scale", "vision_model.post_layernorm.weight"))

+ rename_keys.append(("params/img/Transformer/encoder_norm/bias", "vision_model.post_layernorm.bias"))

+

+ rename_keys.append(("params/img/MAPHead_0/probe", "vision_model.head.probe"))

+ rename_keys.append(("params/img/MAPHead_0/LayerNorm_0/scale", "vision_model.head.layernorm.weight"))

+ rename_keys.append(("params/img/MAPHead_0/LayerNorm_0/bias", "vision_model.head.layernorm.bias"))

+ rename_keys.append(("params/img/MAPHead_0/MlpBlock_0/Dense_0/kernel", "vision_model.head.mlp.fc1.weight"))

+ rename_keys.append(("params/img/MAPHead_0/MlpBlock_0/Dense_0/bias", "vision_model.head.mlp.fc1.bias"))

+ rename_keys.append(("params/img/MAPHead_0/MlpBlock_0/Dense_1/kernel", "vision_model.head.mlp.fc2.weight"))

+ rename_keys.append(("params/img/MAPHead_0/MlpBlock_0/Dense_1/bias", "vision_model.head.mlp.fc2.bias"))

+ rename_keys.append(("params/img/MAPHead_0/MultiHeadDotProductAttention_0/out/kernel", "vision_model.head.attention.out_proj.weight"))

+ rename_keys.append(("params/img/MAPHead_0/MultiHeadDotProductAttention_0/out/bias", "vision_model.head.attention.out_proj.bias"))

+

+ # text encoder

+

+ rename_keys.append(("params/txt/Embed_0/embedding", "text_model.embeddings.token_embedding.weight"))

+ rename_keys.append(("params/txt/pos_embedding", "text_model.embeddings.position_embedding.weight"))

+

+ for i in range(config.text_config.num_hidden_layers):

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/LayerNorm_0/scale", f"text_model.encoder.layers.{i}.layer_norm1.weight"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/LayerNorm_0/bias", f"text_model.encoder.layers.{i}.layer_norm1.bias"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/LayerNorm_1/scale", f"text_model.encoder.layers.{i}.layer_norm2.weight"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/LayerNorm_1/bias", f"text_model.encoder.layers.{i}.layer_norm2.bias"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MlpBlock_0/Dense_0/kernel", f"text_model.encoder.layers.{i}.mlp.fc1.weight"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MlpBlock_0/Dense_0/bias", f"text_model.encoder.layers.{i}.mlp.fc1.bias"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MlpBlock_0/Dense_1/kernel", f"text_model.encoder.layers.{i}.mlp.fc2.weight"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MlpBlock_0/Dense_1/bias", f"text_model.encoder.layers.{i}.mlp.fc2.bias"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MultiHeadDotProductAttention_0/key/kernel", f"text_model.encoder.layers.{i}.self_attn.k_proj.weight"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MultiHeadDotProductAttention_0/key/bias", f"text_model.encoder.layers.{i}.self_attn.k_proj.bias"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MultiHeadDotProductAttention_0/value/kernel", f"text_model.encoder.layers.{i}.self_attn.v_proj.weight"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MultiHeadDotProductAttention_0/value/bias", f"text_model.encoder.layers.{i}.self_attn.v_proj.bias"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MultiHeadDotProductAttention_0/query/kernel", f"text_model.encoder.layers.{i}.self_attn.q_proj.weight"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MultiHeadDotProductAttention_0/query/bias", f"text_model.encoder.layers.{i}.self_attn.q_proj.bias"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MultiHeadDotProductAttention_0/out/kernel", f"text_model.encoder.layers.{i}.self_attn.out_proj.weight"))

+ rename_keys.append((f"params/txt/Encoder_0/encoderblock_{i}/MultiHeadDotProductAttention_0/out/bias", f"text_model.encoder.layers.{i}.self_attn.out_proj.bias"))

+

+ rename_keys.append(("params/txt/Encoder_0/encoder_norm/scale", "text_model.final_layer_norm.weight"))

+ rename_keys.append(("params/txt/Encoder_0/encoder_norm/bias", "text_model.final_layer_norm.bias"))

+ rename_keys.append(("params/txt/head/kernel", "text_model.head.weight"))

+ rename_keys.append(("params/txt/head/bias", "text_model.head.bias"))

+

+ # learned temperature and bias

+ rename_keys.append(("params/t", "logit_scale"))

+ rename_keys.append(("params/b", "logit_bias"))

+

+ # fmt: on

+ return rename_keys

+

+

+def rename_key(dct, old, new, config):

+ val = dct.pop(old)

+

+ if ("out_proj" in new or "v_proj" in new or "k_proj" in new or "q_proj" in new) and "vision" in new:

+ val = val.reshape(-1, config.vision_config.hidden_size)

+ if ("out_proj" in new or "v_proj" in new or "k_proj" in new or "q_proj" in new) and "text" in new:

+ val = val.reshape(-1, config.text_config.hidden_size)

+

+ if "patch_embedding.weight" in new:

+ val = val.transpose(3, 2, 0, 1)

+ elif new.endswith("weight") and "position_embedding" not in new and "token_embedding" not in new:

+ val = val.T

+

+ if "position_embedding" in new and "vision" in new:

+ val = val.reshape(-1, config.vision_config.hidden_size)

+ if "position_embedding" in new and "text" in new:

+ val = val.reshape(-1, config.text_config.hidden_size)

+

+ if new.endswith("bias"):

+ val = val.reshape(-1)

+

+ dct[new] = torch.from_numpy(val)

+

+

+def read_in_q_k_v_head(state_dict, config):

+ # read in individual input projection layers

+ key_proj_weight = (

+ state_dict.pop("params/img/MAPHead_0/MultiHeadDotProductAttention_0/key/kernel")

+ .reshape(-1, config.vision_config.hidden_size)

+ .T

+ )

+ key_proj_bias = state_dict.pop("params/img/MAPHead_0/MultiHeadDotProductAttention_0/key/bias").reshape(-1)

+ value_proj_weight = (

+ state_dict.pop("params/img/MAPHead_0/MultiHeadDotProductAttention_0/value/kernel")

+ .reshape(-1, config.vision_config.hidden_size)

+ .T

+ )

+ value_proj_bias = state_dict.pop("params/img/MAPHead_0/MultiHeadDotProductAttention_0/value/bias").reshape(-1)

+ query_proj_weight = (

+ state_dict.pop("params/img/MAPHead_0/MultiHeadDotProductAttention_0/query/kernel")

+ .reshape(-1, config.vision_config.hidden_size)

+ .T

+ )

+ query_proj_bias = state_dict.pop("params/img/MAPHead_0/MultiHeadDotProductAttention_0/query/bias").reshape(-1)

+

+ # next, add them to the state dict as a single matrix + vector

+ state_dict["vision_model.head.attention.in_proj_weight"] = torch.from_numpy(

+ np.concatenate([query_proj_weight, key_proj_weight, value_proj_weight], axis=0)

+ )

+ state_dict["vision_model.head.attention.in_proj_bias"] = torch.from_numpy(

+ np.concatenate([query_proj_bias, key_proj_bias, value_proj_bias], axis=0)

+ )

+

+

+# We will verify our results on an image of cute cats

+def prepare_img():

+ url = "http://images.cocodataset.org/val2017/000000039769.jpg"

+ image = Image.open(requests.get(url, stream=True).raw)

+ return image

+

+

+def flatten_nested_dict(params, parent_key="", sep="/"):

+ items = []

+

+ for k, v in params.items():

+ new_key = parent_key + sep + k if parent_key else k

+

+ if isinstance(v, collections.abc.MutableMapping):

+ items.extend(flatten_nested_dict(v, new_key, sep=sep).items())

+ else:

+ items.append((new_key, v))

+ return dict(items)

+

+

+@torch.no_grad()

+def convert_siglip_checkpoint(model_name, pytorch_dump_folder_path, verify_logits=True, push_to_hub=False):

+ """

+ Copy/paste/tweak model's weights to our SigLIP structure.

+ """

+

+ # define default SigLIP configuration

+ config = get_siglip_config(model_name)

+

+ # get checkpoint

+ checkpoint = model_name_to_checkpoint[model_name]

+

+ # get vocab file

+ if "i18n" in model_name:

+ vocab_file = "/Users/nielsrogge/Documents/SigLIP/multilingual_vocab/sentencepiece.model"

+ else:

+ vocab_file = "/Users/nielsrogge/Documents/SigLIP/english_vocab/sentencepiece.model"

+

+ # load original state dict

+ data = load(checkpoint)

+ state_dict = flatten_nested_dict(data)

+

+ # remove and rename some keys

+ rename_keys = create_rename_keys(config)

+ for src, dest in rename_keys:

+ rename_key(state_dict, src, dest, config)

+

+ # qkv matrices of attention pooling head need special treatment

+ read_in_q_k_v_head(state_dict, config)

+

+ # load HuggingFace model

+ model = SiglipModel(config).eval()

+ model.load_state_dict(state_dict)

+

+ # create processor

+ # important: make tokenizer not return attention_mask since original one doesn't require it

+ image_size = config.vision_config.image_size

+ size = {"height": image_size, "width": image_size}

+ image_processor = SiglipImageProcessor(size=size)

+ tokenizer = SiglipTokenizer(vocab_file=vocab_file, model_input_names=["input_ids"])

+ processor = SiglipProcessor(image_processor=image_processor, tokenizer=tokenizer)

+

+ # verify on dummy images and texts

+ url_1 = "https://cdn.openai.com/multimodal-neurons/assets/apple/apple-ipod.jpg"

+ image_1 = Image.open(requests.get(url_1, stream=True).raw).convert("RGB")

+ url_2 = "https://cdn.openai.com/multimodal-neurons/assets/apple/apple-blank.jpg"

+ image_2 = Image.open(requests.get(url_2, stream=True).raw).convert("RGB")

+ texts = ["an apple", "a picture of an apple"]

+

+ inputs = processor(images=[image_1, image_2], text=texts, return_tensors="pt", padding="max_length")

+

+ # verify input_ids against original ones

+ if image_size == 224:

+ filename = "siglip_pixel_values.pt"

+ elif image_size == 256:

+ filename = "siglip_pixel_values_256.pt"

+ elif image_size == 384:

+ filename = "siglip_pixel_values_384.pt"

+ elif image_size == 512:

+ filename = "siglip_pixel_values_512.pt"

+ else:

+ raise ValueError("Image size not supported")

+

+ filepath = hf_hub_download(repo_id="nielsr/test-image", filename=filename, repo_type="dataset")

+ original_pixel_values = torch.load(filepath)

+ filepath = hf_hub_download(repo_id="nielsr/test-image", filename="siglip_input_ids.pt", repo_type="dataset")

+ original_input_ids = torch.load(filepath)

+

+ if "i18n" not in model_name:

+ assert inputs.input_ids.tolist() == original_input_ids.tolist()

+

+ print("Mean of original pixel values:", original_pixel_values.mean())

+ print("Mean of new pixel values:", inputs.pixel_values.mean())

+

+ # note: we're testing with original pixel values here since we don't have exact pixel values

+ with torch.no_grad():

+ outputs = model(input_ids=inputs.input_ids, pixel_values=original_pixel_values)

+

+ # with torch.no_grad():

+ # outputs = model(input_ids=inputs.input_ids, pixel_values=inputs.pixel_values)

+

+ print(outputs.logits_per_image[:3, :3])

+

+ probs = torch.sigmoid(outputs.logits_per_image) # these are the probabilities

+ print(f"{probs[0][0]:.1%} that image 0 is '{texts[0]}'")

+ print(f"{probs[0][1]:.1%} that image 0 is '{texts[1]}'")

+

+ if verify_logits:

+ if model_name == "siglip-base-patch16-224":

+ expected_slice = torch.tensor(

+ [[-2.9621, -2.1672], [-0.2713, 0.2910]],

+ )

+ elif model_name == "siglip-base-patch16-256":

+ expected_slice = torch.tensor(

+ [[-3.1146, -1.9894], [-0.7312, 0.6387]],

+ )

+ elif model_name == "siglip-base-patch16-384":

+ expected_slice = torch.tensor(

+ [[-2.8098, -2.1891], [-0.4242, 0.4102]],

+ )

+ elif model_name == "siglip-base-patch16-512":

+ expected_slice = torch.tensor(

+ [[-2.7899, -2.2668], [-0.4295, -0.0735]],

+ )

+ elif model_name == "siglip-large-patch16-256":

+ expected_slice = torch.tensor(

+ [[-1.5827, -0.5801], [-0.9153, 0.1363]],

+ )

+ elif model_name == "siglip-large-patch16-384":

+ expected_slice = torch.tensor(

+ [[-2.1523, -0.2899], [-0.2959, 0.7884]],

+ )

+ elif model_name == "siglip-so400m-patch14-384":

+ expected_slice = torch.tensor([[-1.2441, -0.6649], [-0.7060, 0.7374]])

+ elif model_name == "siglip-base-patch16-256-i18n":

+ expected_slice = torch.tensor(

+ [[-0.9064, 0.1073], [-0.0299, 0.5304]],

+ )

+

+ assert torch.allclose(outputs.logits_per_image[:3, :3], expected_slice, atol=1e-4)

+ print("Looks ok!")

+

+ if pytorch_dump_folder_path is not None:

+ Path(pytorch_dump_folder_path).mkdir(exist_ok=True)

+ print(f"Saving model {model_name} to {pytorch_dump_folder_path}")

+ model.save_pretrained(pytorch_dump_folder_path)

+ print(f"Saving processor to {pytorch_dump_folder_path}")

+ processor.save_pretrained(pytorch_dump_folder_path)

+

+ if push_to_hub:

+ model.push_to_hub(f"nielsr/{model_name}")

+ processor.push_to_hub(f"nielsr/{model_name}")

+

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ # Required parameters

+ parser.add_argument(

+ "--model_name",

+ default="siglip-base-patch16-224",

+ type=str,

+ choices=model_name_to_checkpoint.keys(),

+ help="Name of the model you'd like to convert.",

+ )

+ parser.add_argument(

+ "--pytorch_dump_folder_path", default=None, type=str, help="Path to the output PyTorch model directory."

+ )

+ parser.add_argument(

+ "--verify_logits",

+ action="store_false",

+ help="Whether to verify logits against the original implementation.",

+ )

+ parser.add_argument(

+ "--push_to_hub", action="store_true", help="Whether or not to push the converted model to the 🤗 hub."

+ )

+

+ args = parser.parse_args()

+ convert_siglip_checkpoint(args.model_name, args.pytorch_dump_folder_path, args.verify_logits, args.push_to_hub)

diff --git a/src/transformers/models/siglip/image_processing_siglip.py b/src/transformers/models/siglip/image_processing_siglip.py

new file mode 100644

index 00000000000000..285b6e9e559f32

--- /dev/null

+++ b/src/transformers/models/siglip/image_processing_siglip.py

@@ -0,0 +1,225 @@

+# coding=utf-8

+# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""Image processor class for SigLIP."""

+

+from typing import Dict, List, Optional, Union

+

+from ...image_processing_utils import BaseImageProcessor, BatchFeature, get_size_dict

+from ...image_transforms import (

+ resize,

+ to_channel_dimension_format,

+)

+from ...image_utils import (

+ IMAGENET_STANDARD_MEAN,

+ IMAGENET_STANDARD_STD,

+ ChannelDimension,

+ ImageInput,

+ PILImageResampling,

+ infer_channel_dimension_format,

+ is_scaled_image,

+ make_list_of_images,

+ to_numpy_array,

+ valid_images,

+)

+from ...utils import TensorType, is_vision_available, logging

+

+

+logger = logging.get_logger(__name__)

+

+

+if is_vision_available():

+ import PIL

+

+

+class SiglipImageProcessor(BaseImageProcessor):

+ r"""

+ Constructs a SigLIP image processor.

+

+ Args:

+ do_resize (`bool`, *optional*, defaults to `True`):

+ Whether to resize the image's (height, width) dimensions to the specified `size`. Can be overridden by

+ `do_resize` in the `preprocess` method.

+ size (`Dict[str, int]` *optional*, defaults to `{"height": 224, "width": 224}`):

+ Size of the image after resizing. Can be overridden by `size` in the `preprocess` method.

+ resample (`PILImageResampling`, *optional*, defaults to `Resampling.BICUBIC`):

+ Resampling filter to use if resizing the image. Can be overridden by `resample` in the `preprocess` method.

+ do_rescale (`bool`, *optional*, defaults to `True`):

+ Whether to rescale the image by the specified scale `rescale_factor`. Can be overridden by `do_rescale` in

+ the `preprocess` method.

+ rescale_factor (`int` or `float`, *optional*, defaults to `1/255`):

+ Scale factor to use if rescaling the image. Can be overridden by `rescale_factor` in the `preprocess`

+ method.

+ do_normalize (`bool`, *optional*, defaults to `True`):

+ Whether to normalize the image by the specified mean and standard deviation. Can be overridden by

+ `do_normalize` in the `preprocess` method.

+ image_mean (`float` or `List[float]`, *optional*, defaults to `[0.5, 0.5, 0.5]`):

+ Mean to use if normalizing the image. This is a float or list of floats the length of the number of

+ channels in the image. Can be overridden by the `image_mean` parameter in the `preprocess` method.

+ image_std (`float` or `List[float]`, *optional*, defaults to `[0.5, 0.5, 0.5]`):

+ Standard deviation to use if normalizing the image. This is a float or list of floats the length of the

+ number of channels in the image. Can be overridden by the `image_std` parameter in the `preprocess` method.

+ Can be overridden by the `image_std` parameter in the `preprocess` method.

+ """

+

+ model_input_names = ["pixel_values"]

+

+ def __init__(

+ self,

+ do_resize: bool = True,

+ size: Dict[str, int] = None,

+ resample: PILImageResampling = PILImageResampling.BICUBIC,

+ do_rescale: bool = True,

+ rescale_factor: Union[int, float] = 1 / 255,

+ do_normalize: bool = True,

+ image_mean: Optional[Union[float, List[float]]] = None,

+ image_std: Optional[Union[float, List[float]]] = None,

+ **kwargs,

+ ) -> None:

+ super().__init__(**kwargs)

+ size = size if size is not None else {"height": 224, "width": 224}

+ image_mean = image_mean if image_mean is not None else IMAGENET_STANDARD_MEAN

+ image_std = image_std if image_std is not None else IMAGENET_STANDARD_STD

+

+ self.do_resize = do_resize

+ self.size = size

+ self.resample = resample

+ self.do_rescale = do_rescale

+ self.rescale_factor = rescale_factor

+ self.do_normalize = do_normalize

+ self.image_mean = image_mean

+ self.image_std = image_std

+

+ def preprocess(

+ self,

+ images: ImageInput,

+ do_resize: bool = None,

+ size: Dict[str, int] = None,

+ resample: PILImageResampling = None,

+ do_rescale: bool = None,

+ rescale_factor: float = None,

+ do_normalize: bool = None,

+ image_mean: Optional[Union[float, List[float]]] = None,

+ image_std: Optional[Union[float, List[float]]] = None,

+ return_tensors: Optional[Union[str, TensorType]] = None,

+ data_format: Optional[ChannelDimension] = ChannelDimension.FIRST,

+ input_data_format: Optional[Union[str, ChannelDimension]] = None,

+ **kwargs,

+ ) -> PIL.Image.Image:

+ """

+ Preprocess an image or batch of images.

+

+ Args:

+ images (`ImageInput`):

+ Image to preprocess. Expects a single or batch of images with pixel values ranging from 0 to 255. If

+ passing in images with pixel values between 0 and 1, set `do_rescale=False`.

+ do_resize (`bool`, *optional*, defaults to `self.do_resize`):

+ Whether to resize the image.

+ size (`Dict[str, int]`, *optional*, defaults to `self.size`):

+ Size of the image after resizing.

+ resample (`int`, *optional*, defaults to `self.resample`):

+ Resampling filter to use if resizing the image. This can be one of the enum `PILImageResampling`. Only