diff --git a/packages/flutter_webrtc/CHANGELOG.md b/packages/flutter_webrtc/CHANGELOG.md

index f78c34649..66de06050 100644

--- a/packages/flutter_webrtc/CHANGELOG.md

+++ b/packages/flutter_webrtc/CHANGELOG.md

@@ -1,6 +1,10 @@

-## NEXT

+## 0.1.3

* Increase the minimum Flutter version to 3.3.

+* Update libwebrtc to version m114.

+* Update flutter_webrtc to 0.9.46.

+* Update and format flutter_webrtc_demo.

+* Support empty candidates in the `addIceCandidate` API.

## 0.1.2

diff --git a/packages/flutter_webrtc/README.md b/packages/flutter_webrtc/README.md

index 77810fa1c..1c7d8bee2 100644

--- a/packages/flutter_webrtc/README.md

+++ b/packages/flutter_webrtc/README.md

@@ -40,8 +40,8 @@ For other Tizen devices :

```yaml

dependencies:

- flutter_webrtc: ^0.9.28

- flutter_webrtc_tizen: ^0.1.2

+ flutter_webrtc: ^0.9.46

+ flutter_webrtc_tizen: ^0.1.3

```

## Functionality

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/README.md b/packages/flutter_webrtc/example/flutter_webrtc_demo/README.md

index 51ac241e8..94d8e998b 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/README.md

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/README.md

@@ -1,25 +1,21 @@

# flutter-webrtc-demo

-

[](https://join.slack.com/t/flutterwebrtc/shared_invite/zt-q83o7y1s-FExGLWEvtkPKM8ku_F8cEQ)

Flutter WebRTC plugin Demo

-Online Demo:

+Online Demo: https://flutter-webrtc.github.io/flutter-webrtc-demo/

## Usage

-

+- `cd flutter_webrtc_demo`

+- `flutter-tizen pub get`

- `flutter-tizen run`

## Note

-

- If you want to test `P2P Call Sample`, please use the [webrtc-flutter-server](https://github.com/cloudwebrtc/flutter-webrtc-server), and enter your server address into the example app.

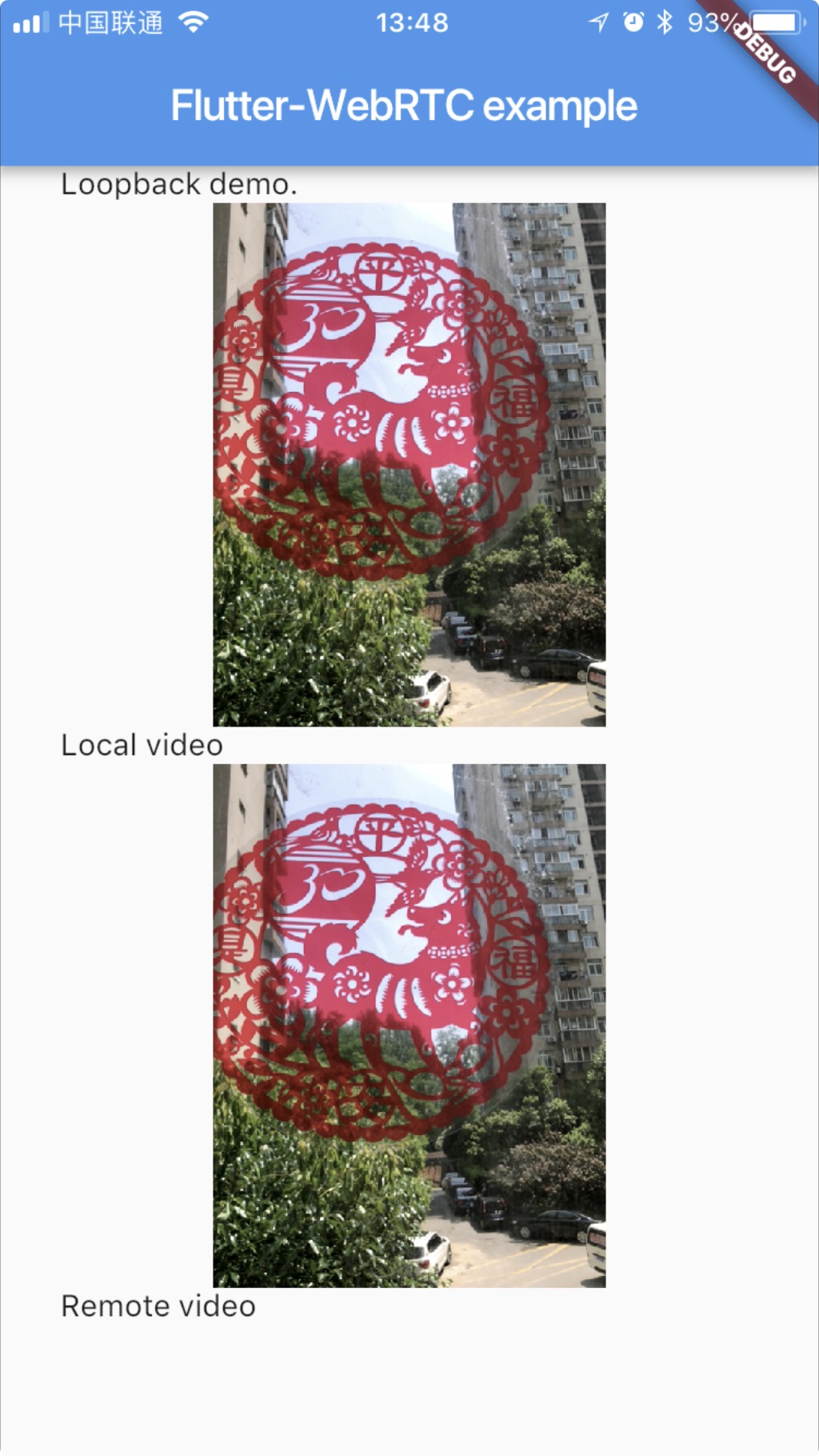

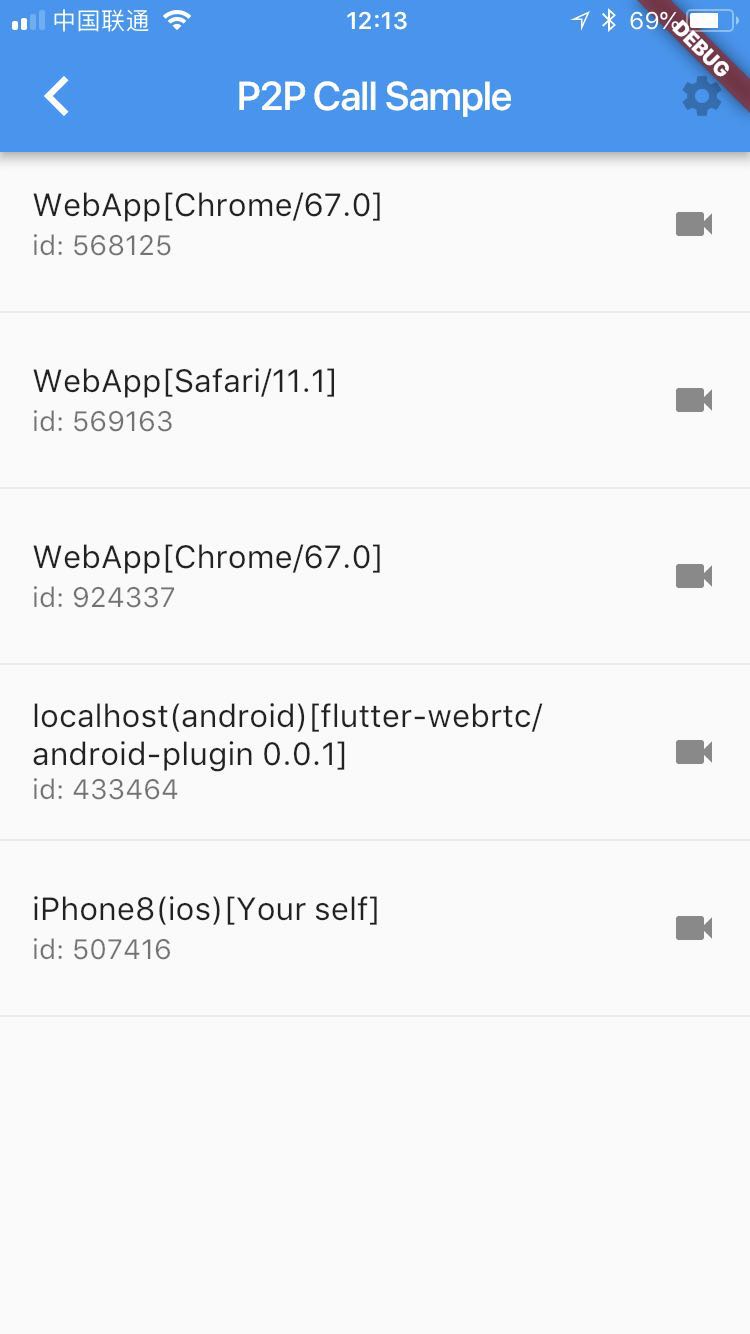

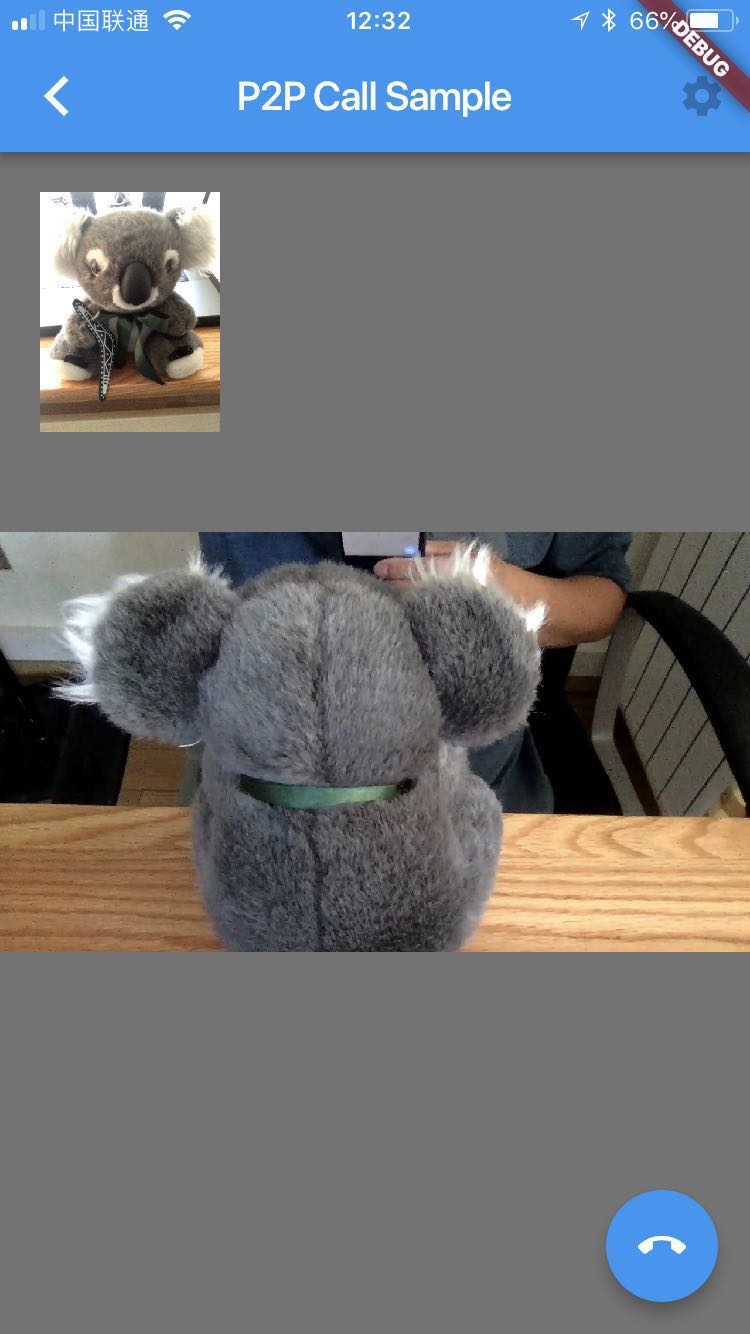

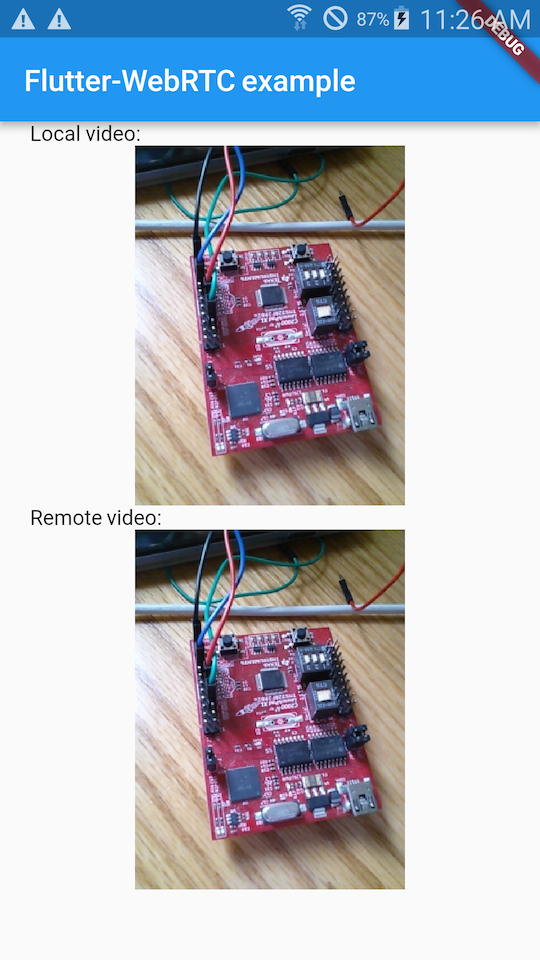

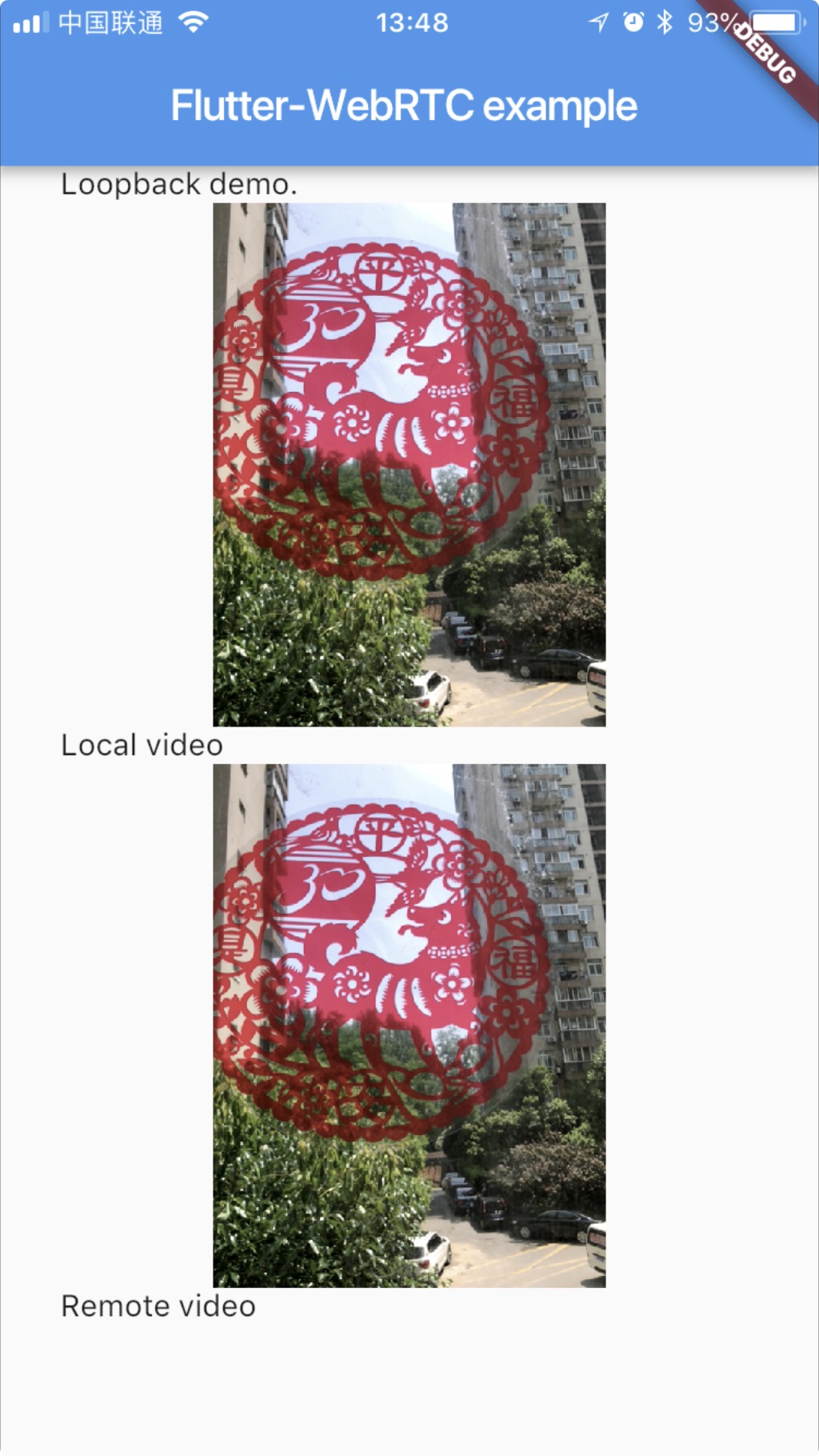

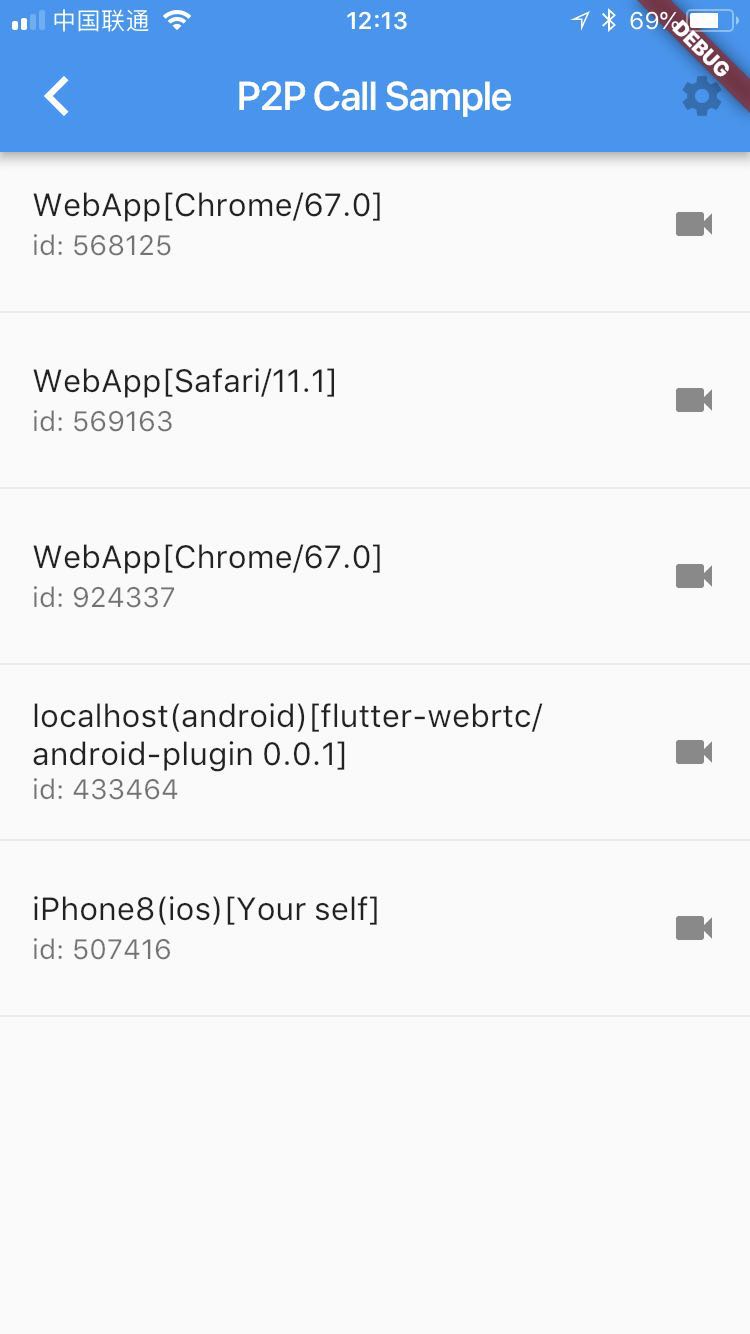

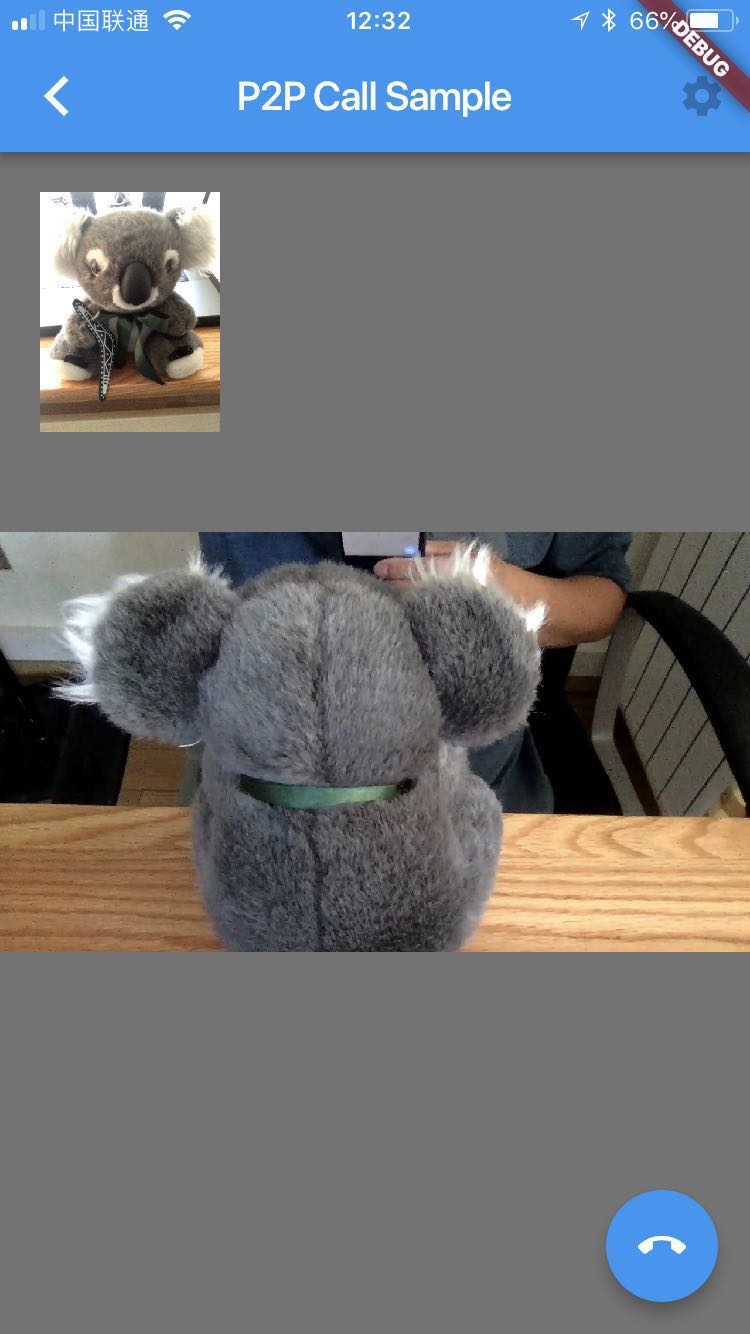

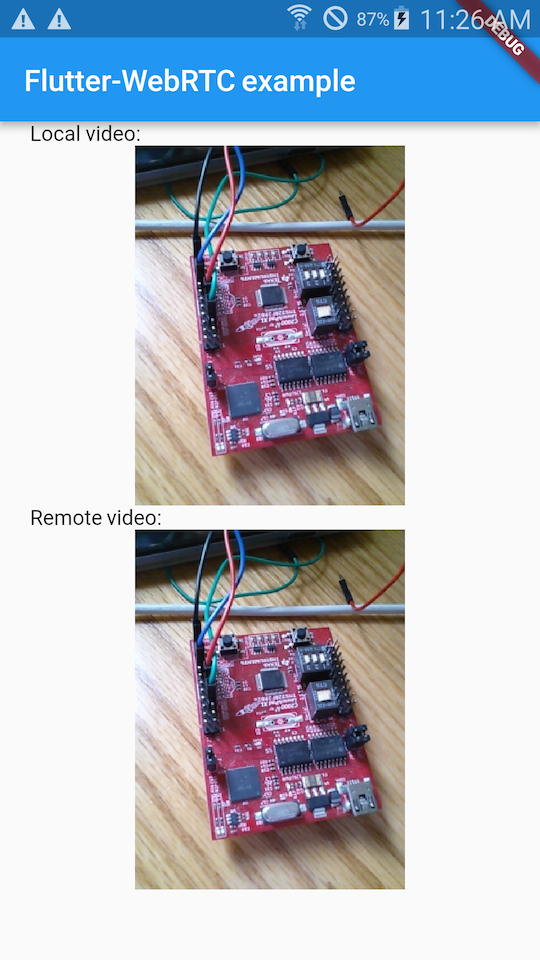

## screenshots

-

-### iOS

-

+# iOS

-### Android

-

+# Android

-### Android

-

+# Android

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/analysis_options.yaml b/packages/flutter_webrtc/example/flutter_webrtc_demo/analysis_options.yaml

index 2d6b313d4..fea5e03d6 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/analysis_options.yaml

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/analysis_options.yaml

@@ -40,7 +40,3 @@ analyzer:

# allow self-reference to deprecated members (we do this because otherwise we have

# to annotate every member in every test, assert, etc, when we deprecate something)

deprecated_member_use_from_same_package: ignore

- # Ignore analyzer hints for updating pubspecs when using Future or

- # Stream and not importing dart:async

- # Please see https://github.com/flutter/flutter/pull/24528 for details.

- sdk_version_async_exported_from_core: ignore

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/main.dart b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/main.dart

index 74cc18ae6..97392e7c4 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/main.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/main.dart

@@ -32,7 +32,7 @@ class _MyAppState extends State {

_initItems();

}

- ListBody _buildRow(context, item) {

+ Widget _buildRow(context, item) {

return ListBody(children: [

ListTile(

title: Text(item.title),

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/call_sample.dart b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/call_sample.dart

index 4d8faae84..e780bbb52 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/call_sample.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/call_sample.dart

@@ -24,7 +24,6 @@ class _CallSampleState extends State {

final RTCVideoRenderer _remoteRenderer = RTCVideoRenderer();

bool _inCalling = false;

Session? _session;

-

bool _waitAccept = false;

@override

@@ -42,6 +41,9 @@ class _CallSampleState extends State {

@override

void deactivate() {

super.deactivate();

+ if (_inCalling) {

+ _hangUp();

+ }

_signaling?.close();

_localRenderer.dispose();

_remoteRenderer.dispose();

@@ -92,7 +94,7 @@ class _CallSampleState extends State {

break;

case CallState.CallStateInvite:

_waitAccept = true;

- await _showInvateDialog();

+ await _showInviteDialog();

break;

case CallState.CallStateConnected:

if (_waitAccept) {

@@ -156,7 +158,7 @@ class _CallSampleState extends State {

);

}

- Future _showInvateDialog() {

+ Future _showInviteDialog() {

return showDialog(

context: context,

builder: (context) {

@@ -276,96 +278,86 @@ class _CallSampleState extends State {

]);

}

- Future _onWillPop(BuildContext context) async {

- if (_inCalling) {

- _hangUp();

- }

- return true;

- }

-

@override

Widget build(BuildContext context) {

- return WillPopScope(

- onWillPop: () => _onWillPop(context),

- child: Scaffold(

- appBar: AppBar(

- title: Text(

- 'P2P Call Sample${_selfId != null ? ' [Your ID ($_selfId)] ' : ''}'),

- actions: [

- IconButton(

- icon: const Icon(Icons.settings),

- onPressed: null,

- tooltip: 'setup',

- ),

- ],

- ),

- floatingActionButtonLocation: FloatingActionButtonLocation.centerFloat,

- floatingActionButton: _inCalling

- ? SizedBox(

- width: 240.0,

+ return Scaffold(

+ appBar: AppBar(

+ title: Text(

+ 'P2P Call Sample${_selfId != null ? ' [Your ID ($_selfId)] ' : ''}'),

+ actions: [

+ IconButton(

+ icon: const Icon(Icons.settings),

+ onPressed: null,

+ tooltip: 'setup',

+ ),

+ ],

+ ),

+ floatingActionButtonLocation: FloatingActionButtonLocation.centerFloat,

+ floatingActionButton: _inCalling

+ ? SizedBox(

+ width: 240.0,

+ child: Row(

+ mainAxisAlignment: MainAxisAlignment.spaceBetween,

+ children: [

+ FloatingActionButton(

+ tooltip: 'Camera',

+ onPressed: _switchCamera,

+ child: const Icon(Icons.switch_camera),

+ ),

+ FloatingActionButton(

+ tooltip: 'Screen Sharing',

+ onPressed: () => selectScreenSourceDialog(context),

+ child: const Icon(Icons.desktop_mac),

+ ),

+ FloatingActionButton(

+ onPressed: _hangUp,

+ tooltip: 'Hangup',

+ backgroundColor: Colors.pink,

+ child: Icon(Icons.call_end),

+ ),

+ FloatingActionButton(

+ tooltip: 'Mute Mic',

+ onPressed: _muteMic,

+ child: const Icon(Icons.mic_off),

+ )

+ ]))

+ : null,

+ body: _inCalling

+ ? OrientationBuilder(builder: (context, orientation) {

+ return Container(

+ width: MediaQuery.of(context).size.width,

+ height: MediaQuery.of(context).size.height,

child: Row(

- mainAxisAlignment: MainAxisAlignment.spaceBetween,

- children: [

- FloatingActionButton(

- tooltip: 'Camera',

- onPressed: _switchCamera,

- child: const Icon(Icons.switch_camera),

- ),

- FloatingActionButton(

- tooltip: 'Screen Sharing',

- onPressed: () => selectScreenSourceDialog(context),

- child: const Icon(Icons.desktop_mac),

+ children: [

+ Expanded(

+ flex: 1,

+ child: Container(

+ alignment: Alignment.center,

+ padding: const EdgeInsets.all(8.0),

+ decoration: BoxDecoration(color: Colors.black54),

+ child: RTCVideoView(_localRenderer),

),

- FloatingActionButton(

- onPressed: _hangUp,

- tooltip: 'Hangup',

- backgroundColor: Colors.pink,

- child: Icon(Icons.call_end),

+ ),

+ Expanded(

+ flex: 1,

+ child: Container(

+ alignment: Alignment.center,

+ padding: const EdgeInsets.all(8.0),

+ decoration: BoxDecoration(color: Colors.black54),

+ child: RTCVideoView(_remoteRenderer),

),

- FloatingActionButton(

- tooltip: 'Mute Mic',

- onPressed: _muteMic,

- child: const Icon(Icons.mic_off),

- )

- ]))

- : null,

- body: _inCalling

- ? OrientationBuilder(builder: (context, orientation) {

- return Container(

- width: MediaQuery.of(context).size.width,

- height: MediaQuery.of(context).size.height,

- child: Row(

- children: [

- Expanded(

- flex: 1,

- child: Container(

- alignment: Alignment.center,

- padding: const EdgeInsets.all(8.0),

- decoration: BoxDecoration(color: Colors.black54),

- child: RTCVideoView(_localRenderer),

- ),

- ),

- Expanded(

- flex: 1,

- child: Container(

- alignment: Alignment.center,

- padding: const EdgeInsets.all(8.0),

- decoration: BoxDecoration(color: Colors.black54),

- child: RTCVideoView(_remoteRenderer),

- ),

- ),

- ],

- ),

- );

- })

- : ListView.builder(

- shrinkWrap: true,

- padding: const EdgeInsets.all(0.0),

- itemCount: _peers.length,

- itemBuilder: (context, i) {

- return _buildRow(context, _peers[i]);

- }),

- ),

+ ),

+ ],

+ ),

+ );

+ })

+ : ListView.builder(

+ shrinkWrap: true,

+ padding: const EdgeInsets.all(0.0),

+ itemCount: _peers.length,

+ itemBuilder: (context, i) {

+ return _buildRow(context, _peers[i]);

+ }),

);

}

}

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/data_channel_sample.dart b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/data_channel_sample.dart

index d90881119..c26a874d2 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/data_channel_sample.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/data_channel_sample.dart

@@ -25,7 +25,7 @@ class _DataChannelSampleState extends State {

RTCDataChannel? _dataChannel;

Session? _session;

Timer? _timer;

- var _text = '';

+ String _text = '';

bool _waitAccept = false;

@override

@@ -69,7 +69,7 @@ class _DataChannelSampleState extends State {

);

}

- Future _showInvateDialog() {

+ Future _showInviteDialog() {

return showDialog(

context: context,

builder: (context) {

@@ -93,6 +93,7 @@ class _DataChannelSampleState extends State {

void _connect(BuildContext context) async {

_signaling ??= Signaling(widget.host, context);

await _signaling!.connect();

+

_signaling?.onDataChannelMessage = (_, dc, RTCDataChannelMessage data) {

setState(() {

if (data.isBinary) {

@@ -140,7 +141,7 @@ class _DataChannelSampleState extends State {

break;

case CallState.CallStateInvite:

_waitAccept = true;

- await _showInvateDialog();

+ await _showInviteDialog();

break;

case CallState.CallStateConnected:

if (_waitAccept) {

@@ -203,7 +204,7 @@ class _DataChannelSampleState extends State {

_signaling?.bye(_session!.sid);

}

- ListBody _buildRow(context, peer) {

+ Widget _buildRow(context, peer) {

var self = peer['id'] == _selfId;

return ListBody(children: [

ListTile(

@@ -242,7 +243,7 @@ class _DataChannelSampleState extends State {

body: _inCalling

? Center(

child: Container(

- child: Text('Recevied => $_text'),

+ child: Text('Received => $_text'),

),

)

: ListView.builder(

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/signaling.dart b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/signaling.dart

index 4e2e01398..fc3fdb1fe 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/signaling.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/call_sample/signaling.dart

@@ -106,26 +106,26 @@ class Signaling {

void switchCamera() {

if (_localStream != null) {

if (_videoSource != VideoSource.Camera) {

- for (var sender in _senders) {

+ _senders.forEach((sender) {

if (sender.track!.kind == 'video') {

- sender.replaceTrack(_localStream!.getVideoTracks()[0]);

+ sender.replaceTrack(_localStream!.getVideoTracks().first);

}

- }

+ });

_videoSource = VideoSource.Camera;

onLocalStream?.call(_localStream!);

} else {

- Helper.switchCamera(_localStream!.getVideoTracks()[0]);

+ Helper.switchCamera(_localStream!.getVideoTracks().first);

}

}

}

void switchToScreenSharing(MediaStream stream) {

if (_localStream != null && _videoSource != VideoSource.Screen) {

- for (var sender in _senders) {

+ _senders.forEach((sender) {

if (sender.track!.kind == 'video') {

- sender.replaceTrack(stream.getVideoTracks()[0]);

+ sender.replaceTrack(stream.getVideoTracks().first);

}

- }

+ });

onLocalStream?.call(stream);

_videoSource = VideoSource.Screen;

}

@@ -133,8 +133,8 @@ class Signaling {

void muteMic() {

if (_localStream != null) {

- var enabled = _localStream!.getAudioTracks()[0].enabled;

- _localStream!.getAudioTracks()[0].enabled = !enabled;

+ var enabled = _localStream!.getAudioTracks().first.enabled;

+ _localStream!.getAudioTracks().first.enabled = !enabled;

}

}

@@ -159,9 +159,9 @@ class Signaling {

'session_id': sessionId,

'from': _selfId,

});

- var sess = _sessions[sessionId];

- if (sess != null) {

- _closeSession(sess);

+ var session = _sessions[sessionId];

+ if (session != null) {

+ _closeSession(session);

}

}

@@ -181,16 +181,13 @@ class Signaling {

bye(session.sid);

}

- void onMessage(message) async {

- Map mapData = message;

- var data = mapData['data'];

-

- switch (mapData['type']) {

+ void onMessage(Map message) async {

+ switch (message['type']) {

case 'peers':

{

- List peers = data;

+ List peers = message['data'] ?? [];

if (onPeersUpdate != null) {

- var event = {};

+ var event = {};

event['self'] = _selfId;

event['peers'] = peers;

onPeersUpdate?.call(event);

@@ -199,10 +196,11 @@ class Signaling {

break;

case 'offer':

{

- var peerId = data['from'];

- var description = data['description'];

- var media = data['media'];

- var sessionId = data['session_id'];

+ Map data = message['data'] ?? {};

+ String peerId = data['from'] ?? '';

+ Map description = data['description'] ?? {};

+ String media = data['media'] ?? '';

+ String sessionId = data['session_id'] ?? '';

var session = _sessions[sessionId];

var newSession = await _createSession(session,

peerId: peerId,

@@ -215,7 +213,6 @@ class Signaling {

// await _createAnswer(newSession, media);

if (newSession.remoteCandidates.isNotEmpty) {

- // ignore: avoid_function_literals_in_foreach_calls

newSession.remoteCandidates.forEach((candidate) async {

await newSession.pc?.addCandidate(candidate);

});

@@ -227,8 +224,9 @@ class Signaling {

break;

case 'answer':

{

- var description = data['description'];

- var sessionId = data['session_id'];

+ Map data = message['data'] ?? {};

+ Map description = data['description'] ?? {};

+ String sessionId = data['session_id'] ?? '';

var session = _sessions[sessionId];

await session?.pc?.setRemoteDescription(

RTCSessionDescription(description['sdp'], description['type']));

@@ -237,9 +235,10 @@ class Signaling {

break;

case 'candidate':

{

- var peerId = data['from'];

- var candidateMap = data['candidate'];

- var sessionId = data['session_id'];

+ Map data = message['data'] ?? {};

+ String peerId = data['from'] ?? '';

+ Map candidateMap = data['candidate'] ?? {};

+ String sessionId = data['session_id'] ?? '';

var session = _sessions[sessionId];

var candidate = RTCIceCandidate(candidateMap['candidate'],

candidateMap['sdpMid'], candidateMap['sdpMLineIndex']);

@@ -258,13 +257,16 @@ class Signaling {

break;

case 'leave':

{

- var peerId = data as String;

- _closeSessionByPeerId(peerId);

+ String? peerId = message['data'];

+ if (peerId != null) {

+ _closeSessionByPeerId(peerId);

+ }

}

break;

case 'bye':

{

- var sessionId = data['session_id'];

+ Map data = message['data'] ?? {};

+ String sessionId = data['session_id'] ?? '';

print('bye: $sessionId');

var session = _sessions.remove(sessionId);

if (session != null) {

@@ -299,10 +301,11 @@ class Signaling {

"uris": ["turn:127.0.0.1:19302?transport=udp"]

}

*/

+ List uris = _turnCredential!['uris'] ?? [];

_iceServers = {

'iceServers': [

{

- 'urls': _turnCredential!['uris'][0],

+ 'urls': uris.first,

'username': _turnCredential!['username'],

'credential': _turnCredential!['password']

},

@@ -409,7 +412,7 @@ class Signaling {

// Unified-Plan

pc.onTrack = (event) {

if (event.track.kind == 'video') {

- onAddRemoteStream?.call(newSession, event.streams[0]);

+ onAddRemoteStream?.call(newSession, event.streams.first);

}

};

_localStream!.getTracks().forEach((track) async {

@@ -533,7 +536,7 @@ class Signaling {

RTCSessionDescription _fixSdp(RTCSessionDescription s) {

var sdp = s.sdp;

s.sdp =

- sdp!.replaceAll('profile-level-id=640c1f', 'profile-level-id=42e032');

+ sdp?.replaceAll('profile-level-id=640c1f', 'profile-level-id=42e032');

return s;

}

@@ -568,25 +571,23 @@ class Signaling {

await _localStream!.dispose();

_localStream = null;

}

- _sessions.forEach((key, sess) async {

- await sess.pc?.close();

- await sess.dc?.close();

+ _sessions.forEach((key, session) async {

+ await session.pc?.close();

+ await session.dc?.close();

});

_sessions.clear();

}

void _closeSessionByPeerId(String peerId) {

- // ignore: prefer_typing_uninitialized_variables

- var session;

- _sessions.removeWhere((String key, Session sess) {

+ _sessions.removeWhere((String key, Session session) {

var ids = key.split('-');

- session = sess;

- return peerId == ids[0] || peerId == ids[1];

+ var found = peerId == ids[0] || peerId == ids[1];

+ if (found) {

+ _closeSession(session);

+ onCallStateChange?.call(session, CallState.CallStateBye);

+ }

+ return found;

});

- if (session != null) {

- _closeSession(session);

- onCallStateChange?.call(session, CallState.CallStateBye);

- }

}

Future _closeSession(Session session) async {
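The rewritten `onMessage` no longer trusts the payload shape. It reads each field of `message['data']` with a `??` fallback, so a missing key yields an empty value instead of a null-dereference. The sketch below shows the same pattern on its own, assuming the message shape the demo's `candidate` branch reads (`data.from`, `data.session_id`, and `data.candidate` with `candidate`/`sdpMid`/`sdpMLineIndex`). `CandidateMessage` and `parseCandidate` are hypothetical names and are not part of the demo. The sketch also uses explicit `as` casts so that a wrongly typed field fails at the point where it is parsed.

```dart
/// Hypothetical value type mirroring the fields the demo's `candidate`
/// branch reads from a signaling message.
class CandidateMessage {
  CandidateMessage(this.peerId, this.sessionId, this.candidate, this.sdpMid,
      this.sdpMLineIndex);

  final String peerId;
  final String sessionId;
  final String? candidate;
  final String? sdpMid;
  final int? sdpMLineIndex;
}

/// Parses a decoded JSON message. Missing maps fall back to `{}` and
/// missing strings to '', the same `??` style the diff introduces.
CandidateMessage parseCandidate(Map<String, dynamic> message) {
  final Map<String, dynamic> data =
      (message['data'] as Map?)?.cast<String, dynamic>() ?? {};
  final Map<String, dynamic> candidateMap =
      (data['candidate'] as Map?)?.cast<String, dynamic>() ?? {};
  return CandidateMessage(
    data['from'] as String? ?? '',
    data['session_id'] as String? ?? '',
    candidateMap['candidate'] as String?,
    candidateMap['sdpMid'] as String?,
    candidateMap['sdpMLineIndex'] as int?,
  );
}
```

A message with no `data` key parses to empty IDs and null candidate fields rather than throwing. The caller can then decide whether to ignore the message.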

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/utils/screen_select_dialog.dart b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/utils/screen_select_dialog.dart

index 299e87f2d..bd25aed4b 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/utils/screen_select_dialog.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/utils/screen_select_dialog.dart

@@ -34,9 +34,9 @@ class _ThumbnailWidgetState extends State {

@override

void deactivate() {

- for (var element in _subscriptions) {

+ _subscriptions.forEach((element) {

element.cancel();

- }

+ });

super.deactivate();

}

@@ -124,18 +124,18 @@ class ScreenSelectDialog extends Dialog {

Future _getSources() async {

try {

var sources = await desktopCapturer.getSources(types: [_sourceType]);

- for (var element in sources) {

+ sources.forEach((element) {

print(

'name: ${element.name}, id: ${element.id}, type: ${element.type}');

- }

+ });

_timer?.cancel();

_timer = Timer.periodic(Duration(seconds: 3), (timer) {

desktopCapturer.updateSources(types: [_sourceType]);

});

_sources.clear();

- for (var element in sources) {

+ sources.forEach((element) {

_sources[element.id] = element;

- }

+ });

_stateSetter?.call(() {});

return;

} catch (e) {

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/utils/websocket_web.dart b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/utils/websocket_web.dart

index aac8e8b2c..ea1856ec7 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/utils/websocket_web.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/utils/websocket_web.dart

@@ -1,3 +1,4 @@

+// ignore: avoid_web_libraries_in_flutter

import 'dart:html';

class SimpleWebSocket {

@@ -8,7 +9,7 @@ class SimpleWebSocket {

WebSocket? _socket;

Function()? onOpen;

Function(dynamic msg)? onMessage;

- Function(int code, String reason)? onClose;

+ Function(int? code, String? reason)? onClose;

Future connect() async {

try {

@@ -22,7 +23,7 @@ class SimpleWebSocket {

});

_socket!.onClose.listen((e) {

- onClose!.call(e.code!, e.reason!);

+ onClose?.call(e.code, e.reason);

});

} catch (e) {

onClose?.call(500, e.toString());
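The web socket wrapper now declares `onClose` with nullable parameters and invokes it with `?.call`. The old `onClose!.call(e.code!, e.reason!)` would throw if either the listener or the close event's fields were null. The sketch below reproduces the pattern in plain Dart without `dart:html`. `CloseNotifier` is a hypothetical stand-in for `SimpleWebSocket`, reduced to the close path.

```dart
/// Hypothetical stand-in for `SimpleWebSocket`, reduced to the close path.
class CloseNotifier {
  /// Nullable callback with nullable arguments, matching the diff's
  /// `Function(int? code, String? reason)? onClose`.
  void Function(int? code, String? reason)? onClose;

  /// Forwards a close event. `?.call` is a no-op when no listener is set,
  /// and null values are passed through instead of being force-unwrapped.
  void notifyClosed(int? code, String? reason) {
    onClose?.call(code, reason);
  }
}
```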

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/widgets/screen_select_dialog.dart b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/widgets/screen_select_dialog.dart

index 299e87f2d..bd25aed4b 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/widgets/screen_select_dialog.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/lib/src/widgets/screen_select_dialog.dart

@@ -34,9 +34,9 @@ class _ThumbnailWidgetState extends State {

@override

void deactivate() {

- for (var element in _subscriptions) {

+ _subscriptions.forEach((element) {

element.cancel();

- }

+ });

super.deactivate();

}

@@ -124,18 +124,18 @@ class ScreenSelectDialog extends Dialog {

Future _getSources() async {

try {

var sources = await desktopCapturer.getSources(types: [_sourceType]);

- for (var element in sources) {

+ sources.forEach((element) {

print(

'name: ${element.name}, id: ${element.id}, type: ${element.type}');

- }

+ });

_timer?.cancel();

_timer = Timer.periodic(Duration(seconds: 3), (timer) {

desktopCapturer.updateSources(types: [_sourceType]);

});

_sources.clear();

- for (var element in sources) {

+ sources.forEach((element) {

_sources[element.id] = element;

- }

+ });

_stateSetter?.call(() {});

return;

} catch (e) {

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_demo/pubspec.yaml b/packages/flutter_webrtc/example/flutter_webrtc_demo/pubspec.yaml

index 1a3b54463..b972e4849 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_demo/pubspec.yaml

+++ b/packages/flutter_webrtc/example/flutter_webrtc_demo/pubspec.yaml

@@ -10,15 +10,14 @@ dependencies:

cupertino_icons: ^1.0.3

flutter:

sdk: flutter

- flutter_webrtc: ^0.9.23

+ flutter_webrtc: ^0.9.46

flutter_webrtc_tizen:

path: ../../

http: ^0.13.3

path_provider: ^2.0.7

- path_provider_tizen: ^2.0.2

- shared_preferences: ^2.0.9

- shared_preferences_tizen:

- path: ../../../shared_preferences/

+ path_provider_tizen: ^2.1.0

+ shared_preferences: ^2.2.0

+ shared_preferences_tizen: ^2.2.0

dev_dependencies:

flutter_test:

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_example/analysis_options.yaml b/packages/flutter_webrtc/example/flutter_webrtc_example/analysis_options.yaml

index 2d6b313d4..fea5e03d6 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_example/analysis_options.yaml

+++ b/packages/flutter_webrtc/example/flutter_webrtc_example/analysis_options.yaml

@@ -40,7 +40,3 @@ analyzer:

# allow self-reference to deprecated members (we do this because otherwise we have

# to annotate every member in every test, assert, etc, when we deprecate something)

deprecated_member_use_from_same_package: ignore

- # Ignore analyzer hints for updating pubspecs when using Future or

- # Stream and not importing dart:async

- # Please see https://github.com/flutter/flutter/pull/24528 for details.

- sdk_version_async_exported_from_core: ignore

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/main.dart b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/main.dart

index 9d9435dc7..49cafc84e 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/main.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/main.dart

@@ -11,7 +11,6 @@ import 'src/get_display_media_sample.dart';

import 'src/get_user_media_sample.dart'

if (dart.library.html) 'src/get_user_media_sample_web.dart';

import 'src/loopback_data_channel_sample.dart';

-import 'src/loopback_sample.dart';

import 'src/loopback_sample_unified_tracks.dart';

import 'src/route_item.dart';

@@ -109,14 +108,6 @@ class _MyAppState extends State {

builder: (BuildContext context) =>

GetDisplayMediaSample()));

}),

- RouteItem(

- title: 'LoopBack Sample',

- push: (BuildContext context) {

- Navigator.push(

- context,

- MaterialPageRoute(

- builder: (BuildContext context) => LoopBackSample()));

- }),

RouteItem(

title: 'LoopBack Sample (Unified Tracks)',

push: (BuildContext context) {

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/device_enumeration_sample.dart b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/device_enumeration_sample.dart

index e0ad866d0..463000157 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/device_enumeration_sample.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/device_enumeration_sample.dart

@@ -4,6 +4,7 @@ import 'package:collection/collection.dart';

import 'package:flutter/foundation.dart';

import 'package:flutter/material.dart';

import 'package:flutter_webrtc/flutter_webrtc.dart';

+import 'package:permission_handler/permission_handler.dart';

class VideoSize {

VideoSize(this.width, this.height);

@@ -63,6 +64,7 @@ class _DeviceEnumerationSampleState extends State {

@override

void initState() {

super.initState();

+

initRenderers();

loadDevices();

navigator.mediaDevices.ondevicechange = (event) {

@@ -130,6 +132,18 @@ class _DeviceEnumerationSampleState extends State {

}

Future loadDevices() async {

+ if (WebRTC.platformIsAndroid || WebRTC.platformIsIOS) {

+ // Ask for runtime permissions if necessary.

+ var status = await Permission.bluetooth.request();

+ if (status.isPermanentlyDenied) {

+ print('Bluetooth permission permanently denied');

+ }

+

+ status = await Permission.bluetoothConnect.request();

+ if (status.isPermanentlyDenied) {

+ print('Bluetooth connect permission permanently denied');

+ }

+ }

final devices = await navigator.mediaDevices.enumerateDevices();

setState(() {

_devices = devices;

@@ -187,6 +201,14 @@ class _DeviceEnumerationSampleState extends State {

await _localRenderer.audioOutput(deviceId!);

}

+ var _speakerphoneOn = false;

+

+ Future _setSpeakerphoneOn() async {

+ _speakerphoneOn = !_speakerphoneOn;

+ await Helper.setSpeakerphoneOn(_speakerphoneOn);

+ setState(() {});

+ }

+

Future _selectVideoInput(String? deviceId) async {

_selectedVideoInputId = deviceId;

if (!_inCalling) {

@@ -281,6 +303,8 @@ class _DeviceEnumerationSampleState extends State {

senders.clear();

_inCalling = false;

await stopPCs();

+ _speakerphoneOn = false;

+ await Helper.setSpeakerphoneOn(_speakerphoneOn);

setState(() {});

} catch (e) {

print(e.toString());

@@ -307,20 +331,29 @@ class _DeviceEnumerationSampleState extends State {

}).toList();

},

),

- PopupMenuButton(

- onSelected: _selectAudioOutput,

- icon: Icon(Icons.volume_down_alt),

- itemBuilder: (BuildContext context) {

- return _devices

- .where((device) => device.kind == 'audiooutput')

- .map((device) {

- return PopupMenuItem(

- value: device.deviceId,

- child: Text(device.label),

- );

- }).toList();

- },

- ),

+ if (!WebRTC.platformIsMobile)

+ PopupMenuButton(

+ onSelected: _selectAudioOutput,

+ icon: Icon(Icons.volume_down_alt),

+ itemBuilder: (BuildContext context) {

+ return _devices

+ .where((device) => device.kind == 'audiooutput')

+ .map((device) {

+ return PopupMenuItem(

+ value: device.deviceId,

+ child: Text(device.label),

+ );

+ }).toList();

+ },

+ ),

+ if (!kIsWeb && WebRTC.platformIsMobile)

+ IconButton(

+ disabledColor: Colors.grey,

+ onPressed: _setSpeakerphoneOn,

+ icon: Icon(

+ _speakerphoneOn ? Icons.speaker_phone : Icons.phone_android),

+ tooltip: 'Switch SpeakerPhone',

+ ),

PopupMenuButton(

onSelected: _selectVideoInput,

icon: Icon(Icons.switch_camera),

@@ -364,7 +397,7 @@ class _DeviceEnumerationSampleState extends State {

child: Text('Select Video Size ($_selectedVideoSize)'),

),

PopupMenuDivider(),

- ...['320x240', '640x480', '1280x720', '1920x1080']

+ ...['320x180', '640x360', '1280x720', '1920x1080']

.map((fps) => PopupMenuItem(

value: fps,

child: Text(fps),
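The size menu replaces the 4:3 presets (`320x240`, `640x480`) with 16:9 ones (`320x180`, `640x360`). As a result, every option now shares the aspect ratio of `1280x720` and `1920x1080`. The helper below is a hypothetical sketch and is not part of the sample. It parses such `WIDTHxHEIGHT` labels and checks the ratio using integer arithmetic, which avoids floating-point comparison.

```dart
/// Hypothetical parsed form of a 'WIDTHxHEIGHT' menu label.
class ParsedSize {
  const ParsedSize(this.width, this.height);

  final int width;
  final int height;

  /// True when width:height is exactly 16:9, checked as 9 * w == 16 * h.
  bool get is16by9 => 9 * width == 16 * height;
}

/// Parses labels such as '640x360'; returns null for malformed input.
ParsedSize? parseSize(String label) {
  final parts = label.split('x');
  if (parts.length != 2) return null;
  final w = int.tryParse(parts[0]);
  final h = int.tryParse(parts[1]);
  if (w == null || h == null || w <= 0 || h <= 0) return null;
  return ParsedSize(w, h);
}
```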

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/get_display_media_sample.dart b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/get_display_media_sample.dart

index 0bfc3d196..5fe193d08 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/get_display_media_sample.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/get_display_media_sample.dart

@@ -2,6 +2,7 @@ import 'dart:core';

import 'package:flutter/foundation.dart';

import 'package:flutter/material.dart';

+import 'package:flutter_background/flutter_background.dart';

import 'package:flutter_webrtc/flutter_webrtc.dart';

import 'package:flutter_webrtc_example/src/widgets/screen_select_dialog.dart';

@@ -50,6 +51,38 @@ class _GetDisplayMediaSampleState extends State {

await _makeCall(source);

}

} else {

+ if (WebRTC.platformIsAndroid) {

+ // Android specific

+ Future requestBackgroundPermission([bool isRetry = false]) async {

+ // Required for android screenshare.

+ try {

+ var hasPermissions = await FlutterBackground.hasPermissions;

+ if (!isRetry) {

+ const androidConfig = FlutterBackgroundAndroidConfig(

+ notificationTitle: 'Screen Sharing',

+ notificationText: 'LiveKit Example is sharing the screen.',

+ notificationImportance: AndroidNotificationImportance.Default,

+ notificationIcon: AndroidResource(

+ name: 'livekit_ic_launcher', defType: 'mipmap'),

+ );

+ hasPermissions = await FlutterBackground.initialize(

+ androidConfig: androidConfig);

+ }

+ if (hasPermissions &&

+ !FlutterBackground.isBackgroundExecutionEnabled) {

+ await FlutterBackground.enableBackgroundExecution();

+ }

+ } catch (e) {

+ if (!isRetry) {

+ return await Future.delayed(const Duration(seconds: 1),

+ () => requestBackgroundPermission(true));

+ }

+ print('could not publish video: $e');

+ }

+ }

+

+ await requestBackgroundPermission();

+ }

await _makeCall(null);

}

}
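On Android, the sample wraps the `FlutterBackground` setup in `requestBackgroundPermission`. If the first attempt throws, the function waits one second and tries exactly once more. The retry takes a different path because it skips `FlutterBackground.initialize`, and a second failure is only logged. The sketch below captures that control flow without the plugin. `retryOnce` and its `attempt` callback are hypothetical stand-ins for the sample's function and its `FlutterBackground` calls. The `isRetry` flag lets the callback take the shorter path on the second try.

```dart
/// Runs [attempt] with `isRetry == false`. If it throws, waits [delay] and
/// runs it once more with `isRetry == true`. A second failure is reported
/// through [onError] instead of being rethrown, mirroring the sample's
/// `print('could not publish video: $e')`.
Future<void> retryOnce(
  Future<void> Function(bool isRetry) attempt, {
  Duration delay = const Duration(seconds: 1),
  void Function(Object error)? onError,
}) async {
  try {
    await attempt(false);
  } catch (_) {
    await Future<void>.delayed(delay);
    try {
      await attempt(true);
    } catch (e) {
      onError?.call(e);
    }
  }
}
```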

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/get_user_media_sample.dart b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/get_user_media_sample.dart

index 9cc0296ba..d9c427a0d 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/get_user_media_sample.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/get_user_media_sample.dart

@@ -22,6 +22,7 @@ class _GetUserMediaSampleState extends State {

bool _inCalling = false;

bool _isTorchOn = false;

MediaRecorder? _mediaRecorder;

+

bool get _isRec => _mediaRecorder != null;

List? _mediaDevicesList;

@@ -143,6 +144,18 @@ class _GetUserMediaSampleState extends State {

}

}

+ void setZoom(double zoomLevel) async {

+ if (_localStream == null) throw Exception('Stream is not initialized');

+ // await videoTrack.setZoom(zoomLevel); // Use this once webrtc_interface 1.1.1 is published.

+

+ // Until that release, call the native method directly.

+ final videoTrack = _localStream!

+ .getVideoTracks()

+ .firstWhere((track) => track.kind == 'video');

+ await WebRTC.invokeMethod('mediaStreamTrackSetZoom',

+ {'trackId': videoTrack.id, 'zoomLevel': zoomLevel});

+ }

+

void _toggleCamera() async {

if (_localStream == null) throw Exception('Stream is not initialized');

@@ -218,14 +231,21 @@ class _GetUserMediaSampleState extends State {

body: OrientationBuilder(

builder: (context, orientation) {

return Center(

- child: Container(

- margin: EdgeInsets.fromLTRB(0.0, 0.0, 0.0, 0.0),

- width: MediaQuery.of(context).size.width,

- height: MediaQuery.of(context).size.height,

- decoration: BoxDecoration(color: Colors.black54),

+ child: Container(

+ margin: EdgeInsets.fromLTRB(0.0, 0.0, 0.0, 0.0),

+ width: MediaQuery.of(context).size.width,

+ height: MediaQuery.of(context).size.height,

+ decoration: BoxDecoration(color: Colors.black54),

+ child: GestureDetector(

+ onScaleStart: (details) {},

+ onScaleUpdate: (details) {

+ if (details.scale != 1.0) {

+ setZoom(details.scale);

+ }

+ },

child: RTCVideoView(_localRenderer, mirror: true),

),

- );

+ ));

},

),

floatingActionButton: FloatingActionButton(
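The new pinch handler passes `details.scale` straight to `setZoom`. Flutter's `ScaleUpdateDetails.scale` is measured relative to the start of the current gesture, so each new pinch begins again from about 1.0 instead of from the current zoom. The sketch below shows one way to accumulate zoom across gestures. `ZoomTracker` is hypothetical, and its `minZoom`/`maxZoom` defaults are assumptions because real camera zoom limits are device-specific.

```dart
/// Hypothetical helper: turns per-gesture scale factors into an absolute
/// zoom level that persists across pinches. The bounds are assumptions;
/// real camera zoom limits are device-specific.
class ZoomTracker {
  ZoomTracker({this.minZoom = 1.0, this.maxZoom = 10.0});

  final double minZoom;
  final double maxZoom;
  double _zoom = 1.0; // current absolute zoom
  double _baseZoom = 1.0; // zoom when the current gesture began

  double get zoom => _zoom;

  /// Call from `onScaleStart` to remember where this gesture starts.
  void start() => _baseZoom = _zoom;

  /// Call from `onScaleUpdate` with `details.scale`; returns the new zoom.
  double update(double gestureScale) {
    _zoom = (_baseZoom * gestureScale).clamp(minZoom, maxZoom).toDouble();
    return _zoom;
  }
}
```

In the sample, this could be wired up as `onScaleStart: (_) => tracker.start()` and `onScaleUpdate: (d) => setZoom(tracker.update(d.scale))`.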

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_data_channel_sample.dart b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_data_channel_sample.dart

index 4110a5731..d65be13a5 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_data_channel_sample.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_data_channel_sample.dart

@@ -115,10 +115,12 @@ class _DataChannelLoopBackSampleState extends State {

});

Timer(const Duration(seconds: 1), () {

- setState(() {

- _dc1Status = '';

- _dc2Status = '';

- });

+ if (mounted) {

+ setState(() {

+ _dc1Status = '';

+ _dc2Status = '';

+ });

+ }

});

}

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_sample.dart b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_sample.dart

deleted file mode 100644

index d3d93da81..000000000

--- a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_sample.dart

+++ /dev/null

@@ -1,349 +0,0 @@

-import 'dart:async';

-import 'dart:core';

-

-import 'package:flutter/material.dart';

-import 'package:flutter_webrtc/flutter_webrtc.dart';

-

-class LoopBackSample extends StatefulWidget {

- static String tag = 'loopback_sample';

-

- @override

- _MyAppState createState() => _MyAppState();

-}

-

-class _MyAppState extends State {

- MediaStream? _localStream;

- RTCPeerConnection? _peerConnection;

- final _localRenderer = RTCVideoRenderer();

- final _remoteRenderer = RTCVideoRenderer();

- bool _inCalling = false;

- Timer? _timer;

- final List _senders = [];

- String get sdpSemantics => 'unified-plan';

-

- @override

- void initState() {

- super.initState();

- initRenderers();

- }

-

- @override

- void deactivate() {

- super.deactivate();

- if (_inCalling) {

- _hangUp();

- }

- _localRenderer.dispose();

- _remoteRenderer.dispose();

- }

-

- void initRenderers() async {

- await _localRenderer.initialize();

- await _remoteRenderer.initialize();

- }

-

- void handleStatsReport(Timer timer) async {

- if (_peerConnection != null) {

- var reports = await _peerConnection?.getStats();

- reports?.forEach((report) {

- print('report => { ');

- print(' id: ' + report.id + ',');

- print(' type: ' + report.type + ',');

- print(' timestamp: ${report.timestamp},');

- print(' values => {');

- report.values.forEach((key, value) {

- print(' ' + key + ' : ' + value.toString() + ', ');

- });

- print(' }');

- print('}');

- });

-

- /*

- var senders = await _peerConnection.getSenders();

- var canInsertDTMF = await senders[0].dtmfSender.canInsertDtmf();

- print(canInsertDTMF);

- await senders[0].dtmfSender.insertDTMF('1');

- var receivers = await _peerConnection.getReceivers();

- print(receivers[0].track.id);

- var transceivers = await _peerConnection.getTransceivers();

- print(transceivers[0].sender.parameters);

- print(transceivers[0].receiver.parameters);

- */

- }

- }

-

- void _onSignalingState(RTCSignalingState state) {

- print(state);

- }

-

- void _onIceGatheringState(RTCIceGatheringState state) {

- print(state);

- }

-

- void _onIceConnectionState(RTCIceConnectionState state) {

- print(state);

- }

-

- void _onPeerConnectionState(RTCPeerConnectionState state) {

- print(state);

- }

-

- void _onAddStream(MediaStream stream) {

- print('New stream: ' + stream.id);

- _remoteRenderer.srcObject = stream;

- }

-

- void _onRemoveStream(MediaStream stream) {

- _remoteRenderer.srcObject = null;

- }

-

- void _onCandidate(RTCIceCandidate candidate) {

- print('onCandidate: ${candidate.candidate}');

- _peerConnection?.addCandidate(candidate);

- }

-

- void _onTrack(RTCTrackEvent event) {

- print('onTrack');

- if (event.track.kind == 'video') {

- _remoteRenderer.srcObject = event.streams[0];

- }

- }

-

- void _onAddTrack(MediaStream stream, MediaStreamTrack track) {

- if (track.kind == 'video') {

- _remoteRenderer.srcObject = stream;

- }

- }

-

- void _onRemoveTrack(MediaStream stream, MediaStreamTrack track) {

- if (track.kind == 'video') {

- _remoteRenderer.srcObject = null;

- }

- }

-

- void _onRenegotiationNeeded() {

- print('RenegotiationNeeded');

- }

-

- // Platform messages are asynchronous, so we initialize in an async method.

- void _makeCall() async {

- final mediaConstraints = {

- 'audio': true,

- 'video': {

- 'mandatory': {

- 'minWidth':

- '640', // Provide your own width, height and frame rate here

- 'minHeight': '480',

- 'minFrameRate': '30',

- },

- 'facingMode': 'user',

- 'optional': [],

- }

- };

-

- var configuration = {

- 'iceServers': [

- {'url': 'stun:stun.l.google.com:19302'},

- ],

- 'sdpSemantics': sdpSemantics

- };

-

- final offerSdpConstraints = {

- 'mandatory': {

- 'OfferToReceiveAudio': true,

- 'OfferToReceiveVideo': true,

- },

- 'optional': [],

- };

-

- final loopbackConstraints = {

- 'mandatory': {},

- 'optional': [

- {'DtlsSrtpKeyAgreement': false},

- ],

- };

-

- if (_peerConnection != null) return;

-

- try {

- _peerConnection =

- await createPeerConnection(configuration, loopbackConstraints);

-

- _peerConnection!.onSignalingState = _onSignalingState;

- _peerConnection!.onIceGatheringState = _onIceGatheringState;

- _peerConnection!.onIceConnectionState = _onIceConnectionState;

- _peerConnection!.onConnectionState = _onPeerConnectionState;

- _peerConnection!.onIceCandidate = _onCandidate;

- _peerConnection!.onRenegotiationNeeded = _onRenegotiationNeeded;

-

- _localStream =

- await navigator.mediaDevices.getUserMedia(mediaConstraints);

- _localRenderer.srcObject = _localStream;

-

- switch (sdpSemantics) {

- case 'plan-b':

- _peerConnection!.onAddStream = _onAddStream;

- _peerConnection!.onRemoveStream = _onRemoveStream;

- await _peerConnection!.addStream(_localStream!);

- break;

- case 'unified-plan':

- _peerConnection!.onTrack = _onTrack;

- _peerConnection!.onAddTrack = _onAddTrack;

- _peerConnection!.onRemoveTrack = _onRemoveTrack;

- _localStream!.getTracks().forEach((track) async {

- _senders.add(await _peerConnection!.addTrack(track, _localStream!));

- });

- break;

- }

-

- /*

- await _peerConnection.addTransceiver(

- track: _localStream.getAudioTracks()[0],

- init: RTCRtpTransceiverInit(

- direction: TransceiverDirection.SendRecv, streams: [_localStream]),

- );

- */

- /*

- // ignore: unused_local_variable

- var transceiver = await _peerConnection.addTransceiver(

- track: _localStream.getVideoTracks()[0],

- init: RTCRtpTransceiverInit(

- direction: TransceiverDirection.SendRecv, streams: [_localStream]),

- );

- */

-

- /*

- // Unified-Plan Simulcast

- await _peerConnection.addTransceiver(

- track: _localStream.getVideoTracks()[0],

- init: RTCRtpTransceiverInit(

- direction: TransceiverDirection.SendOnly,

- streams: [_localStream],

- sendEncodings: [

- // for firefox order matters... first high resolution, then scaled resolutions...

- RTCRtpEncoding(

- rid: 'f',

- maxBitrate: 900000,

- numTemporalLayers: 3,

- ),

- RTCRtpEncoding(

- rid: 'h',

- numTemporalLayers: 3,

- maxBitrate: 300000,

- scaleResolutionDownBy: 2.0,

- ),

- RTCRtpEncoding(

- rid: 'q',

- numTemporalLayers: 3,

- maxBitrate: 100000,

- scaleResolutionDownBy: 4.0,

- ),

- ],

- ));

-

- await _peerConnection.addTransceiver(

- kind: RTCRtpMediaType.RTCRtpMediaTypeVideo);

- await _peerConnection.addTransceiver(

- kind: RTCRtpMediaType.RTCRtpMediaTypeVideo);

- await _peerConnection.addTransceiver(

- kind: RTCRtpMediaType.RTCRtpMediaTypeVideo,

- init:

- RTCRtpTransceiverInit(direction: TransceiverDirection.RecvOnly));

- */

- var description = await _peerConnection!.createOffer(offerSdpConstraints);

- var sdp = description.sdp;

- print('sdp = $sdp');

- await _peerConnection!.setLocalDescription(description);

- //change for loopback.

- var sdp_answer = sdp?.replaceAll('setup:actpass', 'setup:active');

- var description_answer = RTCSessionDescription(sdp_answer!, 'answer');

- await _peerConnection!.setRemoteDescription(description_answer);

-

- // _peerConnection!.getStats();

- /* Unfied-Plan replaceTrack

- var stream = await MediaDevices.getDisplayMedia(mediaConstraints);

- _localRenderer.srcObject = _localStream;

- await transceiver.sender.replaceTrack(stream.getVideoTracks()[0]);

- // do re-negotiation ....

- */

- } catch (e) {

- print(e.toString());

- }

- if (!mounted) return;

-

- _timer = Timer.periodic(Duration(seconds: 1), handleStatsReport);

-

- setState(() {

- _inCalling = true;

- });

- }

-

- void _hangUp() async {

- try {

- await _localStream?.dispose();

- await _peerConnection?.close();

- _peerConnection = null;

- _localRenderer.srcObject = null;

- _remoteRenderer.srcObject = null;

- } catch (e) {

- print(e.toString());

- }

- setState(() {

- _inCalling = false;

- });

- _timer?.cancel();

- }

-

- void _sendDtmf() async {

- var rtpSender =

- _senders.firstWhere((element) => element.track?.kind == 'audio');

- var dtmfSender = rtpSender.dtmfSender;

- await dtmfSender.insertDTMF('123#');

- }

-

- @override

- Widget build(BuildContext context) {

- var widgets = [

- Expanded(

- child: RTCVideoView(_localRenderer, mirror: true),

- ),

- Expanded(

- child: RTCVideoView(_remoteRenderer),

- )

- ];

- return Scaffold(

- appBar: AppBar(

- title: Text('LoopBack example'),

- actions: _inCalling

- ? [

- IconButton(

- icon: Icon(Icons.keyboard),

- onPressed: _sendDtmf,

- ),

- ]

- : null,

- ),

- body: OrientationBuilder(

- builder: (context, orientation) {

- return Center(

- child: Container(

- decoration: BoxDecoration(color: Colors.black54),

- child: orientation == Orientation.portrait

- ? Column(

- mainAxisAlignment: MainAxisAlignment.spaceEvenly,

- children: widgets)

- : Row(

- mainAxisAlignment: MainAxisAlignment.spaceEvenly,

- children: widgets),

- ),

- );

- },

- ),

- floatingActionButton: FloatingActionButton(

- onPressed: _inCalling ? _hangUp : _makeCall,

- tooltip: _inCalling ? 'Hangup' : 'Call',

- child: Icon(_inCalling ? Icons.call_end : Icons.phone),

- ),

- );

- }

-}

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_sample_unified_tracks.dart b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_sample_unified_tracks.dart

index b694b8bf3..e5da4834b 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_sample_unified_tracks.dart

+++ b/packages/flutter_webrtc/example/flutter_webrtc_example/lib/src/loopback_sample_unified_tracks.dart

@@ -2,6 +2,7 @@ import 'dart:async';

import 'dart:core';

import 'package:flutter/material.dart';

+import 'package:flutter/services.dart';

import 'package:flutter_webrtc/flutter_webrtc.dart';

class LoopBackSampleUnifiedTracks extends StatefulWidget {

@@ -11,7 +12,20 @@ class LoopBackSampleUnifiedTracks extends StatefulWidget {

_MyAppState createState() => _MyAppState();

}

+const List<String> audioCodecList = [

+ 'OPUS',

+ 'ISAC',

+ 'PCMA',

+ 'PCMU',

+ 'G729'

+];

+const List<String> videoCodecList = ['VP8', 'VP9', 'H264', 'AV1'];

+

class _MyAppState extends State {

+ String audioDropdownValue = audioCodecList.first;

+ String videoDropdownValue = videoCodecList.first;

+ RTCRtpCapabilities? acaps;

+ RTCRtpCapabilities? vcaps;

MediaStream? _localStream;

RTCPeerConnection? _localPeerConnection;

RTCPeerConnection? _remotePeerConnection;

@@ -23,13 +37,21 @@ class _MyAppState extends State {

bool _micOn = false;

bool _cameraOn = false;

bool _speakerOn = false;

+ bool _audioEncrypt = false;

+ bool _videoEncrypt = false;

+ bool _audioDecrypt = false;

+ bool _videoDecrypt = false;

List? _mediaDevicesList;

+ final FrameCryptorFactory _frameCyrptorFactory = frameCryptorFactory;

+ KeyProvider? _keySharedProvider;

+ final Map _frameCyrptors = {};

Timer? _timer;

final _configuration = {

'iceServers': [

{'urls': 'stun:stun.l.google.com:19302'},

],

- 'sdpSemantics': 'unified-plan'

+ 'sdpSemantics': 'unified-plan',

+ 'encodedInsertableStreams': true,

};

final _constraints = {

@@ -39,6 +61,43 @@ class _MyAppState extends State {

],

};

+ final demoRatchetSalt = 'flutter-webrtc-ratchet-salt';

+

+ final aesKey = Uint8List.fromList([

+ 200,

+ 244,

+ 58,

+ 72,

+ 214,

+ 245,

+ 86,

+ 82,

+ 192,

+ 127,

+ 23,

+ 153,

+ 167,

+ 172,

+ 122,

+ 234,

+ 140,

+ 70,

+ 175,

+ 74,

+ 61,

+ 11,

+ 134,

+ 58,

+ 185,

+ 102,

+ 172,

+ 17,

+ 11,

+ 6,

+ 119,

+ 253

+ ]);

+

@override

void initState() {

print('Init State');

@@ -109,52 +168,35 @@ class _MyAppState extends State {

void initLocalConnection() async {

if (_localPeerConnection != null) return;

try {

- _localPeerConnection =

- await createPeerConnection(_configuration, _constraints);

-

- _localPeerConnection!.onSignalingState = _onLocalSignalingState;

- _localPeerConnection!.onIceGatheringState = _onLocalIceGatheringState;

- _localPeerConnection!.onIceConnectionState = _onLocalIceConnectionState;

- _localPeerConnection!.onConnectionState = _onLocalPeerConnectionState;

- _localPeerConnection!.onIceCandidate = _onLocalCandidate;

- _localPeerConnection!.onRenegotiationNeeded = _onLocalRenegotiationNeeded;

+ var pc = await createPeerConnection(_configuration, _constraints);

+

+ pc.onSignalingState = (state) async {

+ var state2 = await pc.getSignalingState();

+ print('local pc: onSignalingState($state), state2($state2)');

+ };

+

+ pc.onIceGatheringState = (state) async {

+ var state2 = await pc.getIceGatheringState();

+ print('local pc: onIceGatheringState($state), state2($state2)');

+ };

+ pc.onIceConnectionState = (state) async {

+ var state2 = await pc.getIceConnectionState();

+ print('local pc: onIceConnectionState($state), state2($state2)');

+ };

+ pc.onConnectionState = (state) async {

+ var state2 = await pc.getConnectionState();

+ print('local pc: onConnectionState($state), state2($state2)');

+ };

+

+ pc.onIceCandidate = _onLocalCandidate;

+ pc.onRenegotiationNeeded = _onLocalRenegotiationNeeded;

+

+ _localPeerConnection = pc;

} catch (e) {

print(e.toString());

}

}

- void _onLocalSignalingState(RTCSignalingState state) {

- print('localSignalingState: $state');

- }

-

- void _onRemoteSignalingState(RTCSignalingState state) {

- print('remoteSignalingState: $state');

- }

-

- void _onLocalIceGatheringState(RTCIceGatheringState state) {

- print('localIceGatheringState: $state');

- }

-

- void _onRemoteIceGatheringState(RTCIceGatheringState state) {

- print('remoteIceGatheringState: $state');

- }

-

- void _onLocalIceConnectionState(RTCIceConnectionState state) {

- print('localIceConnectionState: $state');

- }

-

- void _onRemoteIceConnectionState(RTCIceConnectionState state) {

- print('remoteIceConnectionState: $state');

- }

-

- void _onLocalPeerConnectionState(RTCPeerConnectionState state) {

- print('localPeerConnectionState: $state');

- }

-

- void _onRemotePeerConnectionState(RTCPeerConnectionState state) {

- print('remotePeerConnectionState: $state');

- }

-

void _onLocalCandidate(RTCIceCandidate localCandidate) async {

print('onLocalCandidate: ${localCandidate.candidate}');

try {

@@ -187,33 +229,10 @@ class _MyAppState extends State {

void _onTrack(RTCTrackEvent event) async {

print('onTrack ${event.track.id}');

+

if (event.track.kind == 'video') {

- // onMute/onEnded/onUnMute are not wired up

- // event.track.onEnded = () {

- // print("Ended");

- // setState(() {

- // _remoteRenderer.srcObject = null;

- // });

- // };

- // event.track.onUnMute = () async {

- // print("UnMute");

- // var stream = await createLocalMediaStream(event.track.id!);

- // await stream.addTrack(event.track);

- // setState(() {

- // _remoteRenderer.srcObject = stream;

- // });

- // };

- // event.track.onMute = () {

- // print("OnMute");

- // setState(() {

- // _remoteRenderer.srcObject = null;

- // });

- // };

-

- var stream = await createLocalMediaStream(event.track.id!);

- await stream.addTrack(event.track);

setState(() {

- _remoteRenderer.srcObject = stream;

+ _remoteRenderer.srcObject = event.streams[0];

});

}

}

@@ -231,27 +250,50 @@ class _MyAppState extends State {

initRenderers();

initLocalConnection();

- var acaps = await getRtpSenderCapabilities('audio');

- print('sender audio capabilities: ${acaps.toMap()}');

+ var keyProviderOptions = KeyProviderOptions(

+ sharedKey: true,

+ ratchetSalt: Uint8List.fromList(demoRatchetSalt.codeUnits),

+ ratchetWindowSize: 16,

+ failureTolerance: -1,

+ );

+

+ _keySharedProvider ??=

+ await _frameCyrptorFactory.createDefaultKeyProvider(keyProviderOptions);

+ await _keySharedProvider?.setSharedKey(key: aesKey);

+ acaps = await getRtpSenderCapabilities('audio');

+ print('sender audio capabilities: ${acaps!.toMap()}');

- var vcaps = await getRtpSenderCapabilities('video');

- print('sender video capabilities: ${vcaps.toMap()}');

+ vcaps = await getRtpSenderCapabilities('video');

+ print('sender video capabilities: ${vcaps!.toMap()}');

if (_remotePeerConnection != null) return;

try {

- _remotePeerConnection =

- await createPeerConnection(_configuration, _constraints);

-

- _remotePeerConnection!.onTrack = _onTrack;

- _remotePeerConnection!.onSignalingState = _onRemoteSignalingState;

- _remotePeerConnection!.onIceGatheringState = _onRemoteIceGatheringState;

- _remotePeerConnection!.onIceConnectionState = _onRemoteIceConnectionState;

- _remotePeerConnection!.onConnectionState = _onRemotePeerConnectionState;

- _remotePeerConnection!.onIceCandidate = _onRemoteCandidate;

- _remotePeerConnection!.onRenegotiationNeeded =

- _onRemoteRenegotiationNeeded;

-

+ var pc = await createPeerConnection(_configuration, _constraints);

+

+ pc.onTrack = _onTrack;

+

+ pc.onSignalingState = (state) async {

+ var state2 = await pc.getSignalingState();

+ print('remote pc: onSignalingState($state), state2($state2)');

+ };

+

+ pc.onIceGatheringState = (state) async {

+ var state2 = await pc.getIceGatheringState();

+ print('remote pc: onIceGatheringState($state), state2($state2)');

+ };

+ pc.onIceConnectionState = (state) async {

+ var state2 = await pc.getIceConnectionState();

+ print('remote pc: onIceConnectionState($state), state2($state2)');

+ };

+ pc.onConnectionState = (state) async {

+ var state2 = await pc.getConnectionState();

+ print('remote pc: onConnectionState($state), state2($state2)');

+ };

+

+ pc.onIceCandidate = _onRemoteCandidate;

+ pc.onRenegotiationNeeded = _onRemoteRenegotiationNeeded;

+ _remotePeerConnection = pc;

await _negotiate();

} catch (e) {

print(e.toString());

@@ -274,7 +316,7 @@ class _MyAppState extends State {

if (_remotePeerConnection == null) return;

- var offer = await _localPeerConnection!.createOffer(oaConstraints);

+ var offer = await _localPeerConnection!.createOffer({});

await _localPeerConnection!.setLocalDescription(offer);

var localDescription = await _localPeerConnection!.getLocalDescription();

@@ -286,6 +328,63 @@ class _MyAppState extends State {

await _localPeerConnection!.setRemoteDescription(remoteDescription!);

}

+ void _enableEncryption({bool video = false, bool enabled = true}) async {

+ var senders = await _localPeerConnection?.senders;

+

+ var kind = video ? 'video' : 'audio';

+

+ senders?.forEach((element) async {

+ if (kind != element.track?.kind) return;

+

+ var trackId = element.track?.id;

+ var id = kind + '_' + trackId! + '_sender';

+ if (!_frameCyrptors.containsKey(id)) {

+ var frameCyrptor =

+ await _frameCyrptorFactory.createFrameCryptorForRtpSender(

+ participantId: id,

+ sender: element,

+ algorithm: Algorithm.kAesGcm,

+ keyProvider: _keySharedProvider!);

+ frameCyrptor.onFrameCryptorStateChanged = (participantId, state) =>

+ print('EN onFrameCryptorStateChanged $participantId $state');

+ _frameCyrptors[id] = frameCyrptor;

+ await frameCyrptor.setKeyIndex(0);

+ }

+

+ var _frameCyrptor = _frameCyrptors[id];

+ await _frameCyrptor?.setEnabled(enabled);

+ await _frameCyrptor?.updateCodec(

+ kind == 'video' ? videoDropdownValue : audioDropdownValue);

+ });

+ }

+

+ void _enableDecryption({bool video = false, bool enabled = true}) async {

+ var receivers = await _remotePeerConnection?.receivers;

+ var kind = video ? 'video' : 'audio';

+ receivers?.forEach((element) async {

+ if (kind != element.track?.kind) return;

+ var trackId = element.track?.id;

+ var id = kind + '_' + trackId! + '_receiver';

+ if (!_frameCyrptors.containsKey(id)) {

+ var frameCyrptor =

+ await _frameCyrptorFactory.createFrameCryptorForRtpReceiver(

+ participantId: id,

+ receiver: element,

+ algorithm: Algorithm.kAesGcm,

+ keyProvider: _keySharedProvider!);

+ frameCyrptor.onFrameCryptorStateChanged = (participantId, state) =>

+ print('DE onFrameCryptorStateChanged $participantId $state');

+ _frameCyrptors[id] = frameCyrptor;

+ await frameCyrptor.setKeyIndex(0);

+ }

+

+ var _frameCyrptor = _frameCyrptors[id];

+ await _frameCyrptor?.setEnabled(enabled);

+ await _frameCyrptor?.updateCodec(

+ kind == 'video' ? videoDropdownValue : audioDropdownValue);

+ });

+ }

+

void _hangUp() async {

try {

await _remotePeerConnection?.close();

@@ -300,6 +399,11 @@ class _MyAppState extends State {

});

}

+ void _ratchetKey() async {

+ var newKey = await _keySharedProvider?.ratchetSharedKey(index: 0);

+ print('newKey $newKey');

+ }

+

Map _getMediaConstraints({audio = true, video = true}) {

return {

'audio': audio ? true : false,

@@ -339,26 +443,15 @@ class _MyAppState extends State {

var transceivers = await _localPeerConnection?.getTransceivers();

transceivers?.forEach((transceiver) {

- if (transceiver.sender.track == null) return;

- print('transceiver: ${transceiver.sender.track!.kind!}');

- transceiver.setCodecPreferences([

- RTCRtpCodecCapability(

- mimeType: 'video/VP8',

- clockRate: 90000,

- ),

- RTCRtpCodecCapability(

- mimeType: 'video/H264',

- clockRate: 90000,

- sdpFmtpLine:

- 'level-asymmetry-allowed=1;packetization-mode=0;profile-level-id=42e01f',

- ),

- RTCRtpCodecCapability(

- mimeType: 'video/AV1',

- clockRate: 90000,

- )

- ]);

+ if (transceiver.sender.senderId != _videoSender?.senderId) return;

+ var codecs = vcaps?.codecs

+ ?.where((element) => element.mimeType

+ .toLowerCase()

+ .contains(videoDropdownValue.toLowerCase()))

+ .toList() ??

+ [];

+ transceiver.setCodecPreferences(codecs);

});

-

await _negotiate();

setState(() {
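The new codec-selection logic keeps only the capabilities whose `mimeType` contains the name picked in the dropdown, compared case-insensitively. A minimal C++ sketch of that filter, assuming a hypothetical `CodecCapability` struct standing in for `RTCRtpCodecCapability` (only the MIME type and clock rate are modelled):

```cpp
#include <algorithm>
#include <cctype>
#include <string>
#include <vector>

// Hypothetical stand-in for RTCRtpCodecCapability; not the plugin's real type.
struct CodecCapability {
  std::string mime_type;  // e.g. "video/VP8" or "audio/opus"
  int clock_rate;
};

// Lower-case an ASCII string so the comparison ignores case.
std::string ToLower(std::string s) {
  std::transform(s.begin(), s.end(), s.begin(), [](unsigned char c) {
    return static_cast<char>(std::tolower(c));
  });
  return s;
}

// Keep every capability whose MIME type contains the selected codec name,
// preserving the order in which the capabilities were reported.
std::vector<CodecCapability> FilterCodecs(
    const std::vector<CodecCapability>& caps, const std::string& selected) {
  const std::string needle = ToLower(selected);
  std::vector<CodecCapability> out;
  for (const auto& cap : caps) {
    if (ToLower(cap.mime_type).find(needle) != std::string::npos) {
      out.push_back(cap);
    }
  }
  return out;
}
```

Because the match is a substring test, every capability entry for the chosen codec survives (for example, several `video/H264` entries that differ only in their `sdpFmtpLine` profile), and a codec missing from the reported capabilities yields an empty list, which the W3C WebRTC specification treats as resetting `setCodecPreferences` to the defaults.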

@@ -368,11 +461,23 @@ class _MyAppState extends State {

_timer?.cancel();

_timer = Timer.periodic(Duration(seconds: 1), (timer) async {

- handleStatsReport(timer);

+ //handleStatsReport(timer);

});

}

void _stopVideo() async {

+ _frameCyrptors.removeWhere((key, value) {

+ if (key.startsWith('video')) {

+ value.dispose();

+ return true;

+ }

+ return false;

+ });

+

+ _localStream?.getTracks().forEach((track) async {

+ await track.stop();

+ });

+

await _removeExistingVideoTrack(fromConnection: true);

await _negotiate();

setState(() {

@@ -401,23 +506,29 @@ class _MyAppState extends State {

await _addOrReplaceAudioTracks();

var transceivers = await _localPeerConnection?.getTransceivers();

transceivers?.forEach((transceiver) {

- if (transceiver.sender.track == null) return;

- transceiver.setCodecPreferences([

- RTCRtpCodecCapability(

- mimeType: 'audio/PCMA',

- clockRate: 8000,

- channels: 1,

- )

- ]);

+ if (transceiver.sender.senderId != _audioSender?.senderId) return;

+ var codecs = acaps?.codecs

+ ?.where((element) => element.mimeType

+ .toLowerCase()

+ .contains(audioDropdownValue.toLowerCase()))

+ .toList() ??

+ [];

+ transceiver.setCodecPreferences(codecs);

});

await _negotiate();

-

setState(() {

_micOn = true;

});

}

void _stopAudio() async {

+ _frameCyrptors.removeWhere((key, value) {

+ if (key.startsWith('audio')) {

+ value.dispose();

+ return true;

+ }

+ return false;

+ });

await _removeExistingAudioTrack(fromConnection: true);

await _negotiate();

setState(() {
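Frame cryptors are stored in a map keyed `{kind}_{trackId}_sender` or `{kind}_{trackId}_receiver`, so `_stopVideo` and `_stopAudio` can dispose every cryptor for one media kind by key prefix. A minimal C++ sketch of the same dispose-then-erase pass, assuming a hypothetical `FakeCryptor` that only records whether `Dispose()` ran:

```cpp
#include <cstddef>
#include <map>
#include <string>

// Hypothetical stand-in for a FrameCryptor: Dispose() just flips a flag.
struct FakeCryptor {
  bool* disposed;
  void Dispose() { *disposed = true; }
};

// Build a key the way the sample does: kind + '_' + trackId + '_sender'/'_receiver'.
std::string CryptorKey(const std::string& kind, const std::string& track_id,
                       bool sender) {
  return kind + "_" + track_id + (sender ? "_sender" : "_receiver");
}

// Dispose and erase every cryptor whose key starts with "<kind>_".
// Returns how many entries were removed.
std::size_t DisposeByKind(std::map<std::string, FakeCryptor>& cryptors,
                          const std::string& kind) {
  const std::string prefix = kind + "_";
  std::size_t removed = 0;
  for (auto it = cryptors.begin(); it != cryptors.end();) {
    if (it->first.rfind(prefix, 0) == 0) {  // key starts with prefix
      it->second.Dispose();
      it = cryptors.erase(it);  // erase returns the next valid iterator
      ++removed;
    } else {
      ++it;
    }
  }
  return removed;
}
```

Matching on `kind + "_"` is slightly stricter than the Dart `startsWith('video')`; for the `audio` and `video` keys the sample builds, both select the same entries.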

@@ -548,10 +659,129 @@ class _MyAppState extends State {

Widget build(BuildContext context) {

var widgets = [

Expanded(

- child: RTCVideoView(_localRenderer, mirror: true),

+ child: Container(

+ child: Column(

+ mainAxisAlignment: MainAxisAlignment.spaceEvenly,

+ children: [

+ Row(

+ children: [

+ Text('audio codec:'),

+ DropdownButton(

+ value: audioDropdownValue,

+ icon: const Icon(

+ Icons.arrow_drop_down,

+ color: Colors.blue,

+ ),

+ elevation: 16,

+ style: const TextStyle(color: Colors.blue),

+ underline: Container(

+ height: 2,

+ color: Colors.blueAccent,

+ ),

+ onChanged: (String? value) {

+ // This is called when the user selects an item.

+ setState(() {

+ audioDropdownValue = value!;

+ });

+ },

+ items: audioCodecList

+ .map<DropdownMenuItem<String>>((String value) {

+ return DropdownMenuItem(

+ value: value,

+ child: Text(value),

+ );

+ }).toList(),

+ ),

+ Text('video codec:'),

+ DropdownButton(

+ value: videoDropdownValue,

+ icon: const Icon(

+ Icons.arrow_drop_down,

+ color: Colors.blue,

+ ),

+ elevation: 16,

+ style: const TextStyle(color: Colors.blue),

+ underline: Container(

+ height: 2,

+ color: Colors.blueAccent,

+ ),

+ onChanged: (String? value) {

+ // This is called when the user selects an item.

+ setState(() {

+ videoDropdownValue = value!;

+ });

+ },

+ items: videoCodecList

+ .map<DropdownMenuItem<String>>((String value) {

+ return DropdownMenuItem(

+ value: value,

+ child: Text(value),

+ );

+ }).toList(),

+ ),

+ TextButton(onPressed: _ratchetKey, child: Text('Ratchet Key'))

+ ],

+ ),

+ Row(

+ children: [

+ Text('audio encrypt:'),

+ Switch(

+ value: _audioEncrypt,

+ onChanged: (value) {

+ setState(() {

+ _audioEncrypt = value;

+ _enableEncryption(video: false, enabled: _audioEncrypt);

+ });

+ }),

+ Text('video encrypt:'),

+ Switch(

+ value: _videoEncrypt,

+ onChanged: (value) {

+ setState(() {

+ _videoEncrypt = value;

+ _enableEncryption(video: true, enabled: _videoEncrypt);

+ });

+ })

+ ],

+ ),

+ Expanded(

+ child: RTCVideoView(_localRenderer, mirror: true),

+ ),

+ ],

+ )),

),

Expanded(

- child: RTCVideoView(_remoteRenderer),

+ child: Container(

+ child: Column(

+ mainAxisAlignment: MainAxisAlignment.spaceEvenly,

+ children: [

+ Row(

+ children: [

+ Text('audio decrypt:'),

+ Switch(

+ value: _audioDecrypt,

+ onChanged: (value) {

+ setState(() {

+ _audioDecrypt = value;

+ _enableDecryption(video: false, enabled: _audioDecrypt);

+ });

+ }),

+ Text('video decrypt:'),

+ Switch(

+ value: _videoDecrypt,

+ onChanged: (value) {

+ setState(() {

+ _videoDecrypt = value;

+ _enableDecryption(video: true, enabled: _videoDecrypt);

+ });

+ })

+ ],

+ ),

+ Expanded(

+ child: RTCVideoView(_remoteRenderer),

+ ),

+ ],

+ )),

)

];

return Scaffold(

diff --git a/packages/flutter_webrtc/example/flutter_webrtc_example/pubspec.yaml b/packages/flutter_webrtc/example/flutter_webrtc_example/pubspec.yaml

index 3054f2086..b3cec1407 100644

--- a/packages/flutter_webrtc/example/flutter_webrtc_example/pubspec.yaml

+++ b/packages/flutter_webrtc/example/flutter_webrtc_example/pubspec.yaml

@@ -15,6 +15,8 @@ dependencies:

path: ../../

path_provider: ^2.0.7

path_provider_tizen: ^2.0.2

+ permission_handler: ^10.4.3

+ permission_handler_tizen: ^1.3.0

dev_dependencies:

flutter_test:

diff --git a/packages/flutter_webrtc/tizen/inc/flutter_frame_cryptor.h b/packages/flutter_webrtc/tizen/inc/flutter_frame_cryptor.h

index c7e572832..2a04ac3f5 100755

--- a/packages/flutter_webrtc/tizen/inc/flutter_frame_cryptor.h

+++ b/packages/flutter_webrtc/tizen/inc/flutter_frame_cryptor.h

@@ -49,12 +49,26 @@ class FlutterFrameCryptor {

const EncodableMap& constraints,

std::unique_ptr result);

+ void KeyProviderSetSharedKey(const EncodableMap& constraints,

+ std::unique_ptr result);

+

+ void KeyProviderRatchetSharedKey(const EncodableMap& constraints,

+ std::unique_ptr result);

+

+ void KeyProviderExportSharedKey(const EncodableMap& constraints,

+ std::unique_ptr result);

void KeyProviderSetKey(const EncodableMap& constraints,

std::unique_ptr result);

void KeyProviderRatchetKey(const EncodableMap& constraints,

std::unique_ptr result);

+ void KeyProviderExportKey(const EncodableMap& constraints,

+ std::unique_ptr result);

+

+ void KeyProviderSetSifTrailer(const EncodableMap& constraints,

+ std::unique_ptr result);

+

void KeyProviderDispose(const EncodableMap& constraints,

std::unique_ptr result);

@@ -75,7 +89,7 @@ class FlutterFrameCryptor {

FlutterWebRTCBase* base_;

std::map>

frame_cryptors_;

- std::map>

+ std::map>

frame_cryptor_observers_;

std::map> key_providers_;

};

diff --git a/packages/flutter_webrtc/tizen/inc/flutter_peerconnection.h b/packages/flutter_webrtc/tizen/inc/flutter_peerconnection.h

index a090f23a7..469ed0fa0 100644

--- a/packages/flutter_webrtc/tizen/inc/flutter_peerconnection.h

+++ b/packages/flutter_webrtc/tizen/inc/flutter_peerconnection.h

@@ -170,6 +170,19 @@ class FlutterPeerConnection {

private:

FlutterWebRTCBase* base_;

};

+

+std::string RTCMediaTypeToString(RTCMediaType type);

+

+std::string transceiverDirectionString(RTCRtpTransceiverDirection direction);

+

+const char* iceConnectionStateString(RTCIceConnectionState state);

+

+const char* signalingStateString(RTCSignalingState state);

+

+const char* peerConnectionStateString(RTCPeerConnectionState state);

+

+const char* iceGatheringStateString(RTCIceGatheringState state);

+

} // namespace flutter_webrtc_plugin

#endif // !FLUTTER_WEBRTC_RTC_PEER_CONNECTION_HXX

\ No newline at end of file

diff --git a/packages/flutter_webrtc/tizen/inc/flutter_video_renderer.h b/packages/flutter_webrtc/tizen/inc/flutter_video_renderer.h

index 8ab9e71e3..7eb598ef3 100644

--- a/packages/flutter_webrtc/tizen/inc/flutter_video_renderer.h

+++ b/packages/flutter_webrtc/tizen/inc/flutter_video_renderer.h

@@ -13,9 +13,15 @@ namespace flutter_webrtc_plugin {

using namespace libwebrtc;

class FlutterVideoRenderer

- : public RTCVideoRenderer> {

+ : public RTCVideoRenderer>,

+ public RefCountInterface {

public:

- FlutterVideoRenderer(TextureRegistrar* registrar, BinaryMessenger* messenger);

+ FlutterVideoRenderer() = default;

+ ~FlutterVideoRenderer();

+

+ void initialize(TextureRegistrar* registrar, BinaryMessenger* messenger,

+ std::unique_ptr texture,

+ int64_t texture_id);

virtual const FlutterDesktopPixelBuffer* CopyPixelBuffer(size_t width,

size_t height) const;

@@ -57,15 +63,17 @@ class FlutterVideoRendererManager {

void CreateVideoRendererTexture(std::unique_ptr result);

- void SetMediaStream(int64_t texture_id, const std::string& stream_id,

- const std::string& peerConnectionId);

+ void VideoRendererSetSrcObject(int64_t texture_id,

+ const std::string& stream_id,

+ const std::string& owner_tag,

+ const std::string& track_id);

void VideoRendererDispose(int64_t texture_id,

std::unique_ptr result);

private:

FlutterWebRTCBase* base_;

- std::map> renderers_;

+ std::map> renderers_;

};

} // namespace flutter_webrtc_plugin

diff --git a/packages/flutter_webrtc/tizen/lib/aarch64/libwebrtc.so b/packages/flutter_webrtc/tizen/lib/aarch64/libwebrtc.so

index 4e3ae823a..c1276dbb5 100755

Binary files a/packages/flutter_webrtc/tizen/lib/aarch64/libwebrtc.so and b/packages/flutter_webrtc/tizen/lib/aarch64/libwebrtc.so differ

diff --git a/packages/flutter_webrtc/tizen/lib/armel/libwebrtc.so b/packages/flutter_webrtc/tizen/lib/armel/libwebrtc.so

index 60873f931..14e112f99 100755

Binary files a/packages/flutter_webrtc/tizen/lib/armel/libwebrtc.so and b/packages/flutter_webrtc/tizen/lib/armel/libwebrtc.so differ

diff --git a/packages/flutter_webrtc/tizen/lib/i586/libwebrtc.so b/packages/flutter_webrtc/tizen/lib/i586/libwebrtc.so

index d89a5e258..a0b45ad83 100755

Binary files a/packages/flutter_webrtc/tizen/lib/i586/libwebrtc.so and b/packages/flutter_webrtc/tizen/lib/i586/libwebrtc.so differ

diff --git a/packages/flutter_webrtc/tizen/src/flutter_common.cc b/packages/flutter_webrtc/tizen/src/flutter_common.cc

index 8936fd88e..56e0fb34b 100644

--- a/packages/flutter_webrtc/tizen/src/flutter_common.cc

+++ b/packages/flutter_webrtc/tizen/src/flutter_common.cc

@@ -81,11 +81,12 @@ class EventChannelProxyImpl : public EventChannelProxy {

sink_->Success(event);

}

event_queue_.clear();

+ on_listen_called_ = true;

return nullptr;

},

[&](const EncodableValue* arguments)

-> std::unique_ptr> {

- sink_.reset();

+ on_listen_called_ = false;

return nullptr;

});

@@ -95,7 +96,7 @@ class EventChannelProxyImpl : public EventChannelProxy {

virtual ~EventChannelProxyImpl() {}

void Success(const EncodableValue& event, bool cache_event = true) override {

- if (sink_) {

+ if (on_listen_called_) {

sink_->Success(event);

} else {

if (cache_event) {

@@ -108,6 +109,7 @@ class EventChannelProxyImpl : public EventChannelProxy {

std::unique_ptr channel_;

std::unique_ptr sink_;

std::list event_queue_;

+ bool on_listen_called_ = false;

};

std::unique_ptr EventChannelProxy::Create(
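The `flutter_common.cc` hunks stop resetting `sink_` in the cancel handler. Instead they track listener state with an `on_listen_called_` flag, and events sent while nobody is listening go into `event_queue_`. The sketch below models only that queue-then-flush behaviour. The `Sink` callback and the `QueuedEventChannel` class are hypothetical stand-ins, not the Flutter `EventSink` API.

```cpp
#include <cassert>
#include <functional>
#include <list>
#include <string>
#include <utility>

// Hypothetical stand-in for a Flutter event sink: delivered events are
// handed to this callback. This is NOT the real flutter::EventSink API.
using Sink = std::function<void(const std::string&)>;

// Sketch of the caching behaviour: events sent before the Dart side listens
// are queued and flushed on listen. The sink is kept after cancel, and a
// flag (not a null sink) decides whether events are delivered.
class QueuedEventChannel {
 public:
  explicit QueuedEventChannel(Sink sink) : sink_(std::move(sink)) {}

  // Mirrors the onListen handler: flush queued events, then mark as live.
  void OnListen() {
    for (const auto& event : event_queue_) sink_(event);
    event_queue_.clear();
    on_listen_called_ = true;
  }

  // Mirrors the onCancel handler: stop delivering but keep the sink object.
  void OnCancel() { on_listen_called_ = false; }

  // Deliver immediately when listening; otherwise optionally cache.
  void Success(const std::string& event, bool cache_event = true) {
    if (on_listen_called_) {
      sink_(event);
    } else if (cache_event) {
      event_queue_.push_back(event);
    }
  }

  std::size_t queued() const { return event_queue_.size(); }

 private:
  Sink sink_;
  std::list<std::string> event_queue_;
  bool on_listen_called_ = false;
};
```

Keeping the sink alive and toggling a flag means a later `onListen` can resume delivery without the sink ever being a null pointer that `Success` dereferences.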

diff --git a/packages/flutter_webrtc/tizen/src/flutter_frame_cryptor.cc b/packages/flutter_webrtc/tizen/src/flutter_frame_cryptor.cc

index ec3aeaa0f..8caf7b730 100755

--- a/packages/flutter_webrtc/tizen/src/flutter_frame_cryptor.cc

+++ b/packages/flutter_webrtc/tizen/src/flutter_frame_cryptor.cc

@@ -77,12 +77,27 @@ bool FlutterFrameCryptor::HandleFrameCryptorMethodCall(

} else if (method_name == "frameCryptorFactoryCreateKeyProvider") {

FrameCryptorFactoryCreateKeyProvider(params, std::move(result));

return true;

+ } else if (method_name == "keyProviderSetSharedKey") {

+ KeyProviderSetSharedKey(params, std::move(result));

+ return true;

+ } else if (method_name == "keyProviderRatchetSharedKey") {

+ KeyProviderRatchetSharedKey(params, std::move(result));

+ return true;

+ } else if (method_name == "keyProviderExportSharedKey") {

+ KeyProviderExportSharedKey(params, std::move(result));

+ return true;

} else if (method_name == "keyProviderSetKey") {

KeyProviderSetKey(params, std::move(result));

return true;

} else if (method_name == "keyProviderRatchetKey") {

KeyProviderRatchetKey(params, std::move(result));

return true;

+ } else if (method_name == "keyProviderExportKey") {

+ KeyProviderExportKey(params, std::move(result));

+ return true;

+ } else if (method_name == "keyProviderSetSifTrailer") {

+ KeyProviderSetSifTrailer(params, std::move(result));

+ return true;

} else if (method_name == "keyProviderDispose") {

KeyProviderDispose(params, std::move(result));

return true;
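This hunk adds five key-provider method names to the `HandleFrameCryptorMethodCall` chain: shared-key set, ratchet, and export, plus per-participant export and SIF trailer. The plugin routes them with `if`/`else` comparisons. The sketch below shows the same name-to-handler mapping as a lookup table, which is a swapped-in technique. The handlers are hypothetical placeholders that return a tag instead of calling `KeyProvider*`.

```cpp
#include <functional>
#include <string>
#include <unordered_map>

// Hypothetical handler type: real handlers take params and a result object.
using Handler = std::function<std::string()>;

// Table-driven router over the key-provider method names in this hunk.
// Returns false for unknown names so the caller can try other handlers.
bool HandleKeyProviderCall(const std::string& method_name, std::string* out) {
  static const std::unordered_map<std::string, Handler> kHandlers = {
      {"keyProviderSetSharedKey", [] { return std::string("setShared"); }},
      {"keyProviderRatchetSharedKey",
       [] { return std::string("ratchetShared"); }},
      {"keyProviderExportSharedKey",
       [] { return std::string("exportShared"); }},
      {"keyProviderSetKey", [] { return std::string("setKey"); }},
      {"keyProviderRatchetKey", [] { return std::string("ratchetKey"); }},
      {"keyProviderExportKey", [] { return std::string("exportKey"); }},
      {"keyProviderSetSifTrailer",
       [] { return std::string("setSifTrailer"); }},
      {"keyProviderDispose", [] { return std::string("dispose"); }},
  };
  auto it = kHandlers.find(method_name);
  if (it == kHandlers.end()) return false;  // not a key-provider method
  *out = it->second();
  return true;
}
```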

@@ -145,17 +160,18 @@ void FlutterFrameCryptor::FrameCryptorFactoryCreateFrameCryptor(

}

auto frameCryptor =

libwebrtc::FrameCryptorFactory::frameCryptorFromRtpSender(

- string(participantId), sender, AlgorithmFromInt(algorithm),

- keyProvider);

+ base_->factory_, string(participantId), sender,

+ AlgorithmFromInt(algorithm), keyProvider);

std::string event_channel = "FlutterWebRTC/frameCryptorEvent" + uuid;

- std::unique_ptr observer(

- new FlutterFrameCryptorObserver(base_->messenger_, event_channel));

+ scoped_refptr observer(

+ new RefCountedObject(base_->messenger_,

+ event_channel));

- frameCryptor->RegisterRTCFrameCryptorObserver(observer.get());

+ frameCryptor->RegisterRTCFrameCryptorObserver(observer);

frame_cryptors_[uuid] = frameCryptor;

- frame_cryptor_observers_[uuid] = std::move(observer);

+ frame_cryptor_observers_[uuid] = observer;

EncodableMap params;

params[EncodableValue("frameCryptorId")] = uuid;

@@ -171,18 +187,19 @@ void FlutterFrameCryptor::FrameCryptorFactoryCreateFrameCryptor(

auto keyProvider = key_providers_[keyProviderId];

auto frameCryptor =

libwebrtc::FrameCryptorFactory::frameCryptorFromRtpReceiver(

- string(participantId), receiver, AlgorithmFromInt(algorithm),

- keyProvider);

+ base_->factory_, string(participantId), receiver,

+ AlgorithmFromInt(algorithm), keyProvider);

std::string event_channel = "FlutterWebRTC/frameCryptorEvent" + uuid;

- std::unique_ptr observer(

- new FlutterFrameCryptorObserver(base_->messenger_, event_channel));

+ scoped_refptr observer(

+ new RefCountedObject(base_->messenger_,

+ event_channel));

frameCryptor->RegisterRTCFrameCryptorObserver(observer.get());

frame_cryptors_[uuid] = frameCryptor;

- frame_cryptor_observers_[uuid] = std::move(observer);

+ frame_cryptor_observers_[uuid] = observer;

EncodableMap params;

params[EncodableValue("frameCryptorId")] = uuid;
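In both creation paths the observer changes from a `std::unique_ptr` that the map owns, with a raw pointer handed to the cryptor, to a reference-counted `scoped_refptr` that the cryptor and `frame_cryptor_observers_` can both hold. This reading of the diff suggests the goal is that the observer outlives whichever holder releases it last. The sketch below models that ownership using `std::shared_ptr` in place of libwebrtc's `scoped_refptr`/`RefCountedObject`. `Observer`, `Cryptor`, and `Registry` are hypothetical stand-ins.

```cpp
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-ins; std::shared_ptr approximates scoped_refptr here.
struct Observer {
  std::string channel;
  explicit Observer(std::string c) : channel(std::move(c)) {}
};

struct Cryptor {
  std::shared_ptr<Observer> observer;  // the cryptor keeps its own reference
  void RegisterObserver(std::shared_ptr<Observer> o) {
    observer = std::move(o);
  }
};

struct Registry {
  std::map<std::string, std::shared_ptr<Cryptor>> cryptors;
  std::map<std::string, std::shared_ptr<Observer>> observers;

  void Create(const std::string& uuid) {
    auto cryptor = std::make_shared<Cryptor>();
    auto observer =
        std::make_shared<Observer>("FlutterWebRTC/frameCryptorEvent" + uuid);
    cryptor->RegisterObserver(observer);  // shared with the cryptor
    cryptors[uuid] = cryptor;
    observers[uuid] = observer;  // copied, not moved: two owners remain
  }

  // Erasing the map entry alone does not destroy the observer while the
  // cryptor still references it, so the cryptor never holds a dangling pointer.
  void DropObserverEntry(const std::string& uuid) { observers.erase(uuid); }
};
```

With the old `unique_ptr` layout, erasing the map entry would have freed the observer even though the cryptor still held `observer.get()`. Shared ownership removes that ordering hazard.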

@@ -329,6 +346,9 @@ void FlutterFrameCryptor::FrameCryptorFactoryCreateKeyProvider(

options.ratchet_window_size = ratchetWindowSize;

+ auto failureTolerance = findInt(keyProviderOptions, "failureTolerance");

+ options.failure_tolerance = failureTolerance;

+

auto keyProvider = libwebrtc::KeyProvider::Create(&options);

if (nullptr == keyProvider.get()) {

result->Error("FrameCryptorFactoryCreateKeyProviderFailed",

@@ -342,6 +362,156 @@ void FlutterFrameCryptor::FrameCryptorFactoryCreateKeyProvider(