
Custom Scraper

This page explains how to set up a custom scraper.

My research topic is computer vision, which is only one piece of the computer science puzzle. If the built-in metadata scrapers are not suitable for your research, you can write your own metadata scraper.

A metadata scraper consists of three main functions: preProcess, parsingProcess, and scrapeImpl. The preProcess function usually returns three values: scrapeURL, headers, and enable. The parsingProcess function parses the response from the database API at scrapeURL and assigns the metadata to a paper entity draft, entityDraft. This entityDraft passes through all enabled scrapers and is finally inserted into, or updated in, the Paperlib database. The scrapeImpl function first calls preProcess, then sends the network request, and finally calls parsingProcess.
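To make the data flow concrete, here is a minimal sketch of one entityDraft being handed through several scrapers in turn. The EntityDraft class, runScrapers, and the two toy scrapers are hypothetical and are not Paperlib's API. The sketch assumes that scrapers run one after another in list order and that each scrapeImpl returns the (possibly updated) draft, as the default scrapeImpl does.

```javascript
// Hypothetical stand-in for Paperlib's paper entity draft:
// only the setValue() method used on this page is modelled.
class EntityDraft {
  constructor(fields = {}) {
    Object.assign(this, { title: "", doi: "", tags: "" }, fields);
  }
  setValue(key, value) {
    this[key] = value;
  }
}

// Hypothetical runner: each scraper receives the draft produced by the previous one.
// In Paperlib the final draft would then be inserted into or updated in the database;
// here it is simply returned.
async function runScrapers(scrapers, entityDraft) {
  for (const scraper of scrapers) {
    entityDraft = await scraper.scrapeImpl(entityDraft);
  }
  return entityDraft;
}

// Two toy scrapers that need no network access. Each decides for itself
// whether to act, and returns the draft unchanged when it does not.
const titleScraper = {
  async scrapeImpl(draft) {
    if (draft.doi !== "") draft.setValue("title", "A Paper Found By DOI");
    return draft;
  },
};
const tagScraper = {
  async scrapeImpl(draft) {
    if (draft.title.toLowerCase().includes("paper")) draft.setValue("tags", "paper");
    return draft;
  },
};

runScrapers([titleScraper, tagScraper], new EntityDraft({ doi: "10.1000/xyz" })).then(
  (draft) => console.log(draft.title, "|", draft.tags)
);
```

Note that tagScraper only fires because titleScraper ran first and filled in the title: the order in which scrapers see the draft matters.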

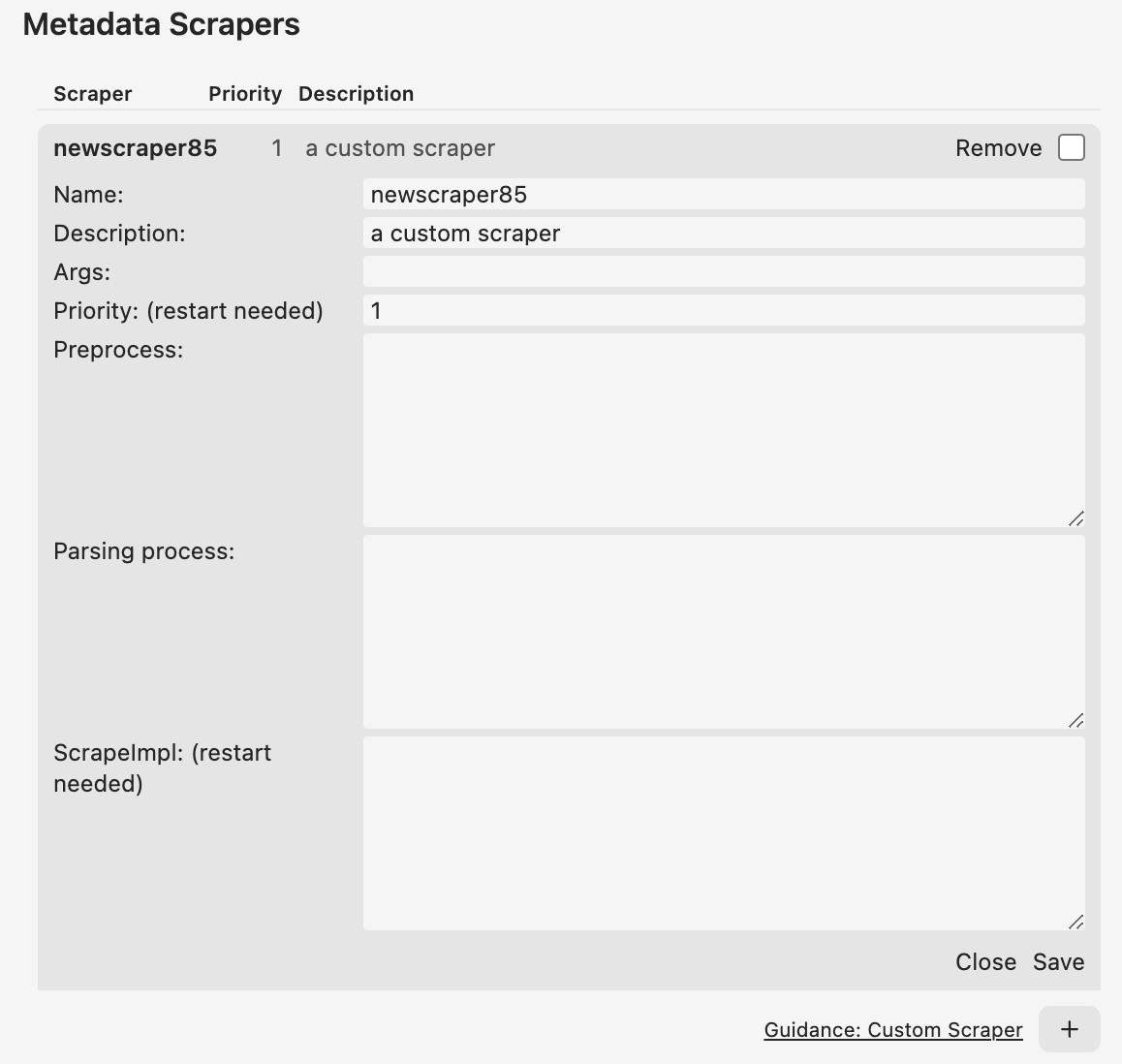

To add a custom scraper, open the preference window, click the scrapers tab, and then click the + button.

The default scrapeImpl function is:

```javascript
const { scrapeURL, headers, enable } = this.preProcess(entityDraft);

if (enable) {
  const agent = this.getProxyAgent();
  let options = {
    headers: headers,
    retry: 0,
    timeout: 5000,
    agent: agent,
  };
  const response = await got(scrapeURL, options);
  return this.parsingProcess(response, entityDraft);
} else {
  return entityDraft;
}
```

Usually, it is unnecessary to modify this function.
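If you do override scrapeImpl, it helps to exercise its control flow offline. The sketch below follows the same steps as the default (preProcess, request, parsingProcess) but takes the request function as a constructor argument. SketchScraper and fakeRequest are hypothetical names; the fake returns a got-like object whose body is a JSON string instead of contacting doi.org, and a plain object stands in for entityDraft.

```javascript
// Hypothetical scraper skeleton. `request` is injected so the flow can run offline;
// the default scrapeImpl calls got() with a proxy agent instead.
class SketchScraper {
  constructor(request) {
    this.request = request;
  }

  preProcess(entityDraft) {
    const enable = entityDraft.doi !== "";
    const scrapeURL = `https://dx.doi.org/${entityDraft.doi}`;
    const headers = { Accept: "application/json" };
    return { scrapeURL, headers, enable };
  }

  parsingProcess(rawResponse, entityDraft) {
    const response = JSON.parse(rawResponse.body);
    entityDraft.title = response.title; // plain object stands in for the draft
    return entityDraft;
  }

  // Same order of steps as the default scrapeImpl.
  async scrapeImpl(entityDraft) {
    const { scrapeURL, headers, enable } = this.preProcess(entityDraft);
    if (!enable) return entityDraft; // a disabled scraper passes the draft through untouched
    const response = await this.request(scrapeURL, { headers, timeout: 5000 });
    return this.parsingProcess(response, entityDraft);
  }
}

// Fake request: records the URL, ignores the options, returns a got-like { body }.
const calls = [];
const fakeRequest = async (url, options) => {
  calls.push(url);
  return { body: JSON.stringify({ title: "Stubbed Title" }) };
};

const scraper = new SketchScraper(fakeRequest);
scraper.scrapeImpl({ doi: "10.1000/xyz", title: "" }).then((d) => console.log(d.title));
```

Injecting the request function keeps the enable/skip branch testable without a proxy or network access.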

Let's use the built-in DOI scraper as an example. Its preProcess function is:

```javascript
enable = entityDraft.doi !== "" && this.preference.get("doiScraper");

const doiID = formatString({
  str: entityDraft.doi,
  removeNewline: true,
  removeWhite: true,
});

scrapeURL = `https://dx.doi.org/${doiID}`;

headers = {
  Accept: "application/json",
};
```

This function first determines whether the scraper should be enabled. Here, enable is true if entityDraft has a non-empty doi property and you have enabled this scraper in the preference window.

After that, we construct the scrapeURL.

Some APIs require specific HTTP headers, so we set them here.

Finally, we send a message to Paperlib saying that your scraper is about to scrape the metadata of this paper.
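The preProcess logic above can be sketched as a pure function, which makes the URL it builds easy to check. normalizeDOI and doiPreProcess are hypothetical names. normalizeDOI simply deletes line breaks and whitespace; that is an assumption about what formatString does with removeNewline and removeWhite here, not its actual implementation. The scraperEnabled flag stands in for this.preference.get("doiScraper").

```javascript
// Hypothetical stand-in for formatString({ removeNewline: true, removeWhite: true }):
// delete line breaks, then delete any remaining whitespace.
function normalizeDOI(doi) {
  return doi.replace(/[\r\n]/g, "").replace(/\s/g, "");
}

// Sketch of the DOI preProcess as a pure function returning the three values.
function doiPreProcess(entityDraft, scraperEnabled) {
  const enable = entityDraft.doi !== "" && scraperEnabled;
  const doiID = normalizeDOI(entityDraft.doi);
  const scrapeURL = `https://dx.doi.org/${doiID}`;
  // Header copied from the DOI scraper above: ask the server for a JSON response.
  const headers = { Accept: "application/json" };
  return { scrapeURL, headers, enable };
}

console.log(doiPreProcess({ doi: " 10.1000/xyz123\n" }, true));
```

For the padded input above, scrapeURL comes out as https://dx.doi.org/10.1000/xyz123, and enable is false whenever the DOI is empty or the scraper is switched off.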

The DOI scraper's parsingProcess function is:

```javascript
const response = JSON.parse(rawResponse.body);

const title = response.title;
const authors = response.author
  .map((author) => {
    return author.given.trim() + " " + author.family.trim();
  })
  .join(", ");
const pubTime = response.published["date-parts"][0][0];

// pubType codes: 0 = journal article, 1 = proceedings article, 2 = anything else
let pubType;
if (response.type === "proceedings-article") {
  pubType = 1;
} else if (response.type === "journal-article") {
  pubType = 0;
} else {
  pubType = 2;
}

const publication = response["container-title"];

entityDraft.setValue("title", title);
entityDraft.setValue("authors", authors);
entityDraft.setValue("pubTime", `${pubTime}`);
entityDraft.setValue("pubType", pubType);
entityDraft.setValue("publication", publication);

// Optional fields are only set when the API returns them.
if (response.volume) {
  entityDraft.setValue("volume", response.volume);
}
if (response.page) {
  entityDraft.setValue("pages", response.page);
}
if (response.publisher) {
  entityDraft.setValue("publisher", response.publisher);
}
```

The parsingProcess is easy to understand: it parses rawResponse and assigns the corresponding values to entityDraft.

You can add console.log(rawResponse) and console.log(entityDraft) to this function to print the structure of these two input variables. The log appears in the developer tools window (Option+Cmd+I).
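Complementing the console.log tip, you can also run a parsingProcess outside Paperlib against a hand-written response and a draft that records every setValue call. Everything here is hypothetical: makeRecordingDraft, the trimmed parseDOIResponse (title, authors, and year only), and the response data, which is made up but shaped like the fields the DOI parser reads. As in the code above, rawResponse.body is assumed to be a JSON string.

```javascript
// Hypothetical draft that records every setValue() call in `assigned`.
function makeRecordingDraft() {
  const assigned = {};
  return {
    assigned,
    setValue(key, value) {
      assigned[key] = value;
    },
  };
}

// A trimmed-down version of the DOI parsingProcess (title, authors, year only).
function parseDOIResponse(rawResponse, entityDraft) {
  const response = JSON.parse(rawResponse.body);
  const authors = response.author
    .map((author) => author.given.trim() + " " + author.family.trim())
    .join(", ");
  entityDraft.setValue("title", response.title);
  entityDraft.setValue("authors", authors);
  entityDraft.setValue("pubTime", `${response.published["date-parts"][0][0]}`);
  return entityDraft;
}

// Made-up raw response: only `body` (a JSON string) is read by the parser.
const rawResponse = {
  body: JSON.stringify({
    title: "An Example Paper",
    author: [
      { given: "Ada ", family: "Lovelace" },
      { given: "Alan", family: " Turing" },
    ],
    published: { "date-parts": [[2021, 5, 1]] },
  }),
};

const draft = parseDOIResponse(rawResponse, makeRecordingDraft());
console.log(draft.assigned);
```

Here the recorded values are title "An Example Paper", authors "Ada Lovelace, Alan Turing" (the stray spaces are trimmed), and pubTime "2021".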

You may need some configurable arguments (args) in your scraper. For example, some database APIs, such as IEEE Xplore, require an API key. You can access the args from your scraper configuration as follows:

```javascript
const ieeeAPIKey = this.preference
  .get("scrapers")
  .find((scraperPref) => scraperPref.name === "ieee").args;
```

If your args value is a stringified JSON object such as {"APIKEY": "xxxxx"}, you can parse it here:

```javascript
const ieeeAPIKey = JSON.parse(
  this.preference.get("scrapers").find((scraperPref) => scraperPref.name === "ieee").args
).APIKEY;
```

This section explains how to use the custom scraper feature to implement an auto-tagger that automatically tags your newly imported papers.

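Add a custom scraper named auto-tagger and set its args to a JSON object that maps keywords to tag names, for example:

```json
{"semi-supervised": "semi-supervised", "segmentation": "segmentation", "detection": "detection"}
```

You can define your own rules in the form {"keyword": "tag name"}.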

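In preProcess, the auto-tagger is enabled only if the paper has no tags yet and the scraper is enabled:

```javascript
enable = !entityDraft.tags && this.getEnable("auto-tagger");
```

In parsingProcess, it reads the keyword-to-tag map from its args and adds a tag for every keyword that appears in the paper title (case-insensitive):

```javascript
const tagMap = JSON.parse(
  this.preference.get("scrapers").find((scraperPref) => scraperPref.name === "auto-tagger").args
);

const title = entityDraft.title.toLowerCase();
const autoTags = [];
for (const [key, tag] of Object.entries(tagMap)) {
  if (title.includes(key.toLowerCase())) {
    autoTags.push(tag);
  }
}

const autoTagsStr = autoTags.join("; ");
entityDraft.setValue("tags", autoTagsStr);
```

Because no network request is needed, scrapeImpl calls parsingProcess directly with an empty response:

```javascript
const { scrapeURL, headers, enable } = this.preProcess(entityDraft);

if (enable) {
  entityDraft = this.parsingProcess("", entityDraft);
}
// Return the (possibly tagged) draft, as the default scrapeImpl does.
return entityDraft;
```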
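The auto-tagger's matching logic can be tried on its own. In this sketch, scraperPrefs is a hypothetical stand-in for this.preference.get("scrapers"), assumed to be a list of objects with name and args fields as the lookup code implies; getScraperArgs and autoTag are hypothetical helpers that reproduce the args lookup and the keyword loop shown above.

```javascript
// Hypothetical stand-in for this.preference.get("scrapers"): `args` holds the
// string typed into each scraper's args field.
const scraperPrefs = [
  { name: "doi", args: "" },
  {
    name: "auto-tagger",
    args: '{"semi-supervised":"semi-supervised", "segmentation":"segmentation", "detection":"detection"}',
  },
];

// Find a scraper's preference entry by name and parse its args as JSON.
function getScraperArgs(prefs, name) {
  const pref = prefs.find((scraperPref) => scraperPref.name === name);
  return JSON.parse(pref.args);
}

// Same matching as the auto-tagger parsingProcess: case-insensitive substring
// search in the title, matched tags joined with "; ".
function autoTag(title, tagMap) {
  const lowerTitle = title.toLowerCase();
  const autoTags = [];
  for (const [key, tag] of Object.entries(tagMap)) {
    if (lowerTitle.includes(key.toLowerCase())) {
      autoTags.push(tag);
    }
  }
  return autoTags.join("; ");
}

const tagMap = getScraperArgs(scraperPrefs, "auto-tagger");
console.log(autoTag("A Semi-Supervised Approach to Segmentation of Street Scenes", tagMap));
```

With the example args, that title yields "semi-supervised; segmentation". Because matching is a plain substring test, a short keyword can also match inside a longer word, so specific keywords work best.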