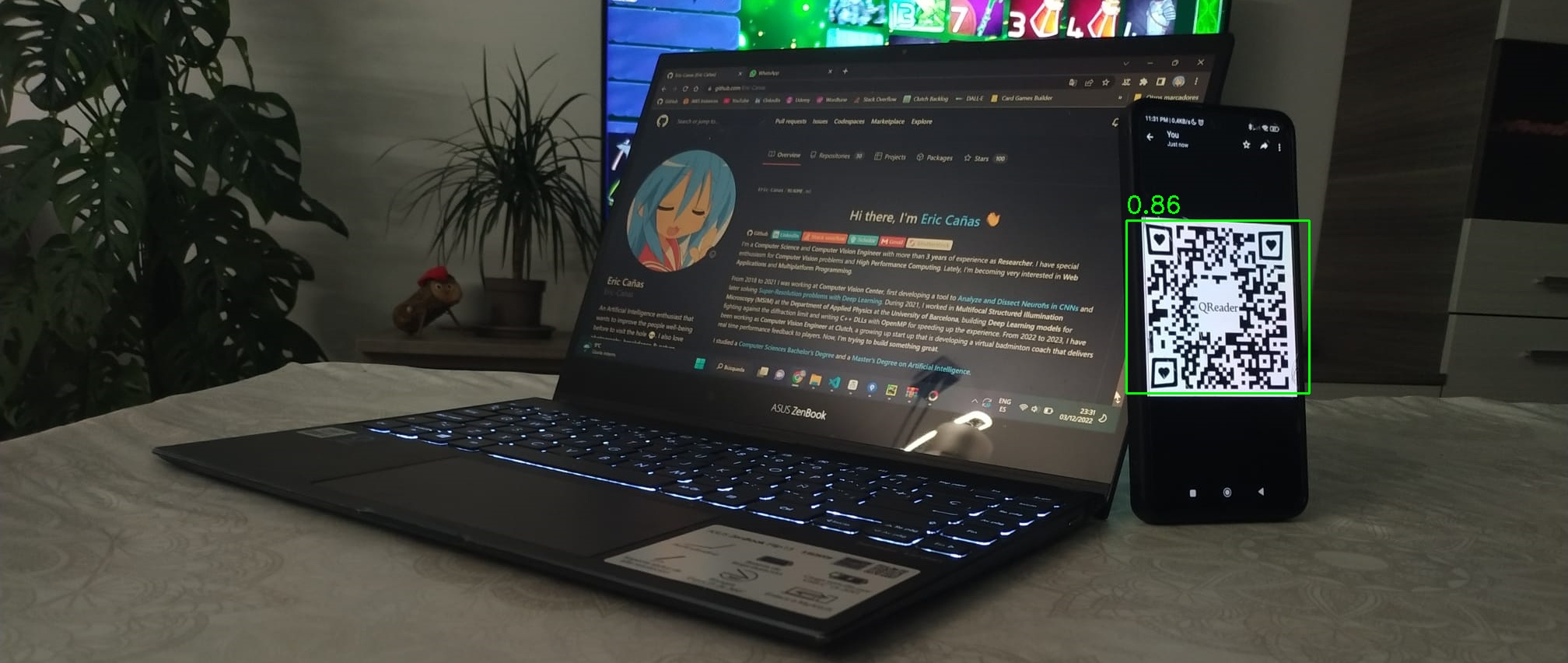

QRDet is a robust QR Detector based on YOLOv8.

QRDet will detect & segment QR codes even in difficult positions or tricky images. If you are looking for a complete QR Detection + Decoding pipeline, take a look at QReader.

To install QRDet, simply run:

```bash
pip install qrdet
```

There is only one function you'll need to call to use QRDet, `detect`:

```python
from qrdet import QRDetector
import cv2

detector = QRDetector(model_size='s')
image = cv2.imread(filename='resources/qreader_test_image.jpeg')
detections = detector.detect(image=image, is_bgr=True)

# Draw the detections
for detection in detections:
    # bbox_xyxy is a float32 array; OpenCV drawing functions need integer pixel coordinates
    x1, y1, x2, y2 = (int(v) for v in detection['bbox_xyxy'])
    confidence = detection['confidence']
    cv2.rectangle(image, (x1, y1), (x2, y2), color=(0, 255, 0), thickness=2)
    cv2.putText(image, f'{confidence:.2f}', (x1, y1 - 10), fontFace=cv2.FONT_HERSHEY_SIMPLEX,
                fontScale=1, color=(0, 255, 0), thickness=2)

# Save the results
cv2.imwrite(filename='resources/qreader_test_image_detections.jpeg', img=image)
```

`QRDetector(model_size = 's', conf_th = 0.5, nms_iou = 0.3, weights_folder = '<qrdet_package>/.model')`

- `model_size`: `"n"|"s"|"m"|"l"`. Size of the model to load. Smaller models are faster, while larger models are more capable in difficult situations. Default: `'s'`.
- `conf_th`: `float`. Confidence threshold for considering a detection valid. Increasing this value reduces false positives, while decreasing it reduces false negatives. Default: `0.5`.
- `nms_iou`: `float`. Intersection over Union (IoU) threshold for Non-Maximum Suppression (NMS), a technique that eliminates redundant bounding boxes for the same object. Overlapping boxes whose IoU exceeds this value are suppressed, so decrease it if you find duplicated detections. Default: `0.3`.
- `weights_folder`: `str`. Folder where the detection model will be downloaded. By default, it points to an internal folder within the package, which ensures it is removed when uninstalling. You may need to change it in environments like AWS Lambda, where only the `/tmp` folder is writable, as reported in #11. Default: `'<qrdet_package>/.model'`.
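To illustrate what `conf_th` and `nms_iou` control, here is a minimal, generic sketch in plain Python of a confidence threshold followed by greedy NMS. It is not QRDet's internal implementation, and the helper names `iou` and `filter_detections` are hypothetical; in this sketch a box is dropped when its IoU with an already kept, higher-scoring box exceeds `nms_iou`.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes in [x1, y1, x2, y2] format."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes, scores, conf_th=0.5, nms_iou=0.3):
    """Hypothetical helper: confidence threshold, then greedy NMS.

    Returns the indices of the kept boxes, highest score first.
    """
    # 1. Confidence threshold: discard candidates scoring below conf_th.
    candidates = [i for i, s in enumerate(scores) if s >= conf_th]
    # 2. Greedy NMS: visit the remaining candidates from highest to lowest score.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept = []
    for i in candidates:
        # Keep a box only if it does not overlap any kept box by more than nms_iou.
        if all(iou(boxes[i], boxes[k]) <= nms_iou for k in kept):
            kept.append(i)
    return kept


# Made-up example: boxes 0 and 1 cover the same object, box 3 has a low score.
boxes = [[10, 10, 110, 110], [15, 12, 112, 108], [200, 200, 260, 260], [300, 300, 320, 320]]
scores = [0.92, 0.85, 0.70, 0.30]
print(filter_detections(boxes, scores))                # [0, 2]
print(filter_detections(boxes, scores, nms_iou=0.95))  # [0, 1, 2]
```

Box 3 is discarded by the confidence threshold, and box 1 (IoU of about 0.89 with box 0) is suppressed as a duplicate unless `nms_iou` is set above that overlap.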

`QRDetector.detect(image, is_bgr = False, **kwargs)`

- `image`: `np.ndarray|'PIL.Image'|'torch.Tensor'|str`. An `np.ndarray` of shape (H, W, 3), a `PIL.Image`, a `Tensor` of shape (1, 3, H, W), or a path/URL to the image to predict. Pass `'screen'` to grab a screenshot.
- `is_bgr`: `bool`. If `True`, the image is expected to be in BGR; otherwise, it is expected to be RGB. Only used when `image` is an `np.ndarray` or `torch.Tensor`. Default: `False`.
- `legacy`: `bool`. If passed as a keyword argument, the output is parsed to be identical to that of 1.x versions. Not recommended. Default: `False`.
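`is_bgr` only tells QRDet which channel order to expect. OpenCV's `cv2.imread` returns BGR images, which is why the usage example passes `is_bgr=True`; alternatively, you could convert the image yourself with `cv2.cvtColor(image, cv2.COLOR_BGR2RGB)` and keep the default `is_bgr=False`. The plain-Python sketch below, using a hypothetical `bgr_to_rgb` helper on a tiny nested-list image, shows what that conversion does: the channel order of every pixel is reversed.

```python
def bgr_to_rgb(image):
    """Hypothetical helper: reverse the channel order of every pixel in an (H, W, 3) nested-list image."""
    return [[pixel[::-1] for pixel in row] for row in image]


# A 1x2 image in BGR order: a pure blue pixel followed by a pure red pixel.
bgr_image = [[[255, 0, 0], [0, 0, 255]]]
rgb_image = bgr_to_rgb(bgr_image)
print(rgb_image)  # [[[0, 0, 255], [255, 0, 0]]]
```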

Returns: `tuple[dict[str, np.ndarray|float|tuple[float|int, float|int]]]`. A tuple of dictionaries, one per detection, each containing the following keys:

| Key | Value Desc. | Value Type | Value Form |
|---|---|---|---|
| `confidence` | Detection confidence | `float` | `conf.` |
| `bbox_xyxy` | Bounding box | `np.ndarray (4)` | `[x1, y1, x2, y2]` |
| `cxcy` | Center of bounding box | `tuple[float, float]` | `(x, y)` |
| `wh` | Bounding box width and height | `tuple[float, float]` | `(w, h)` |
| `polygon_xy` | Precise polygon that segments the QR | `np.ndarray (N, 2)` | `[[x1, y1], [x2, y2], ...]` |
| `quad_xy` | Four-corner polygon that segments the QR | `np.ndarray (4, 2)` | `[[x1, y1], ..., [x4, y4]]` |
| `padded_quad_xy` | `quad_xy` padded to fully cover `polygon_xy` | `np.ndarray (4, 2)` | `[[x1, y1], ..., [x4, y4]]` |
| `image_shape` | Shape of the input image | `tuple[float, float]` | `(h, w)` |

NOTE:

- All `np.ndarray` values are of type `np.float32`.
- All keys (except `confidence` and `image_shape`) have a normalized ('n') version. For example, `bbox_xyxy` represents the bbox of the QR in image coordinates [[0., im_w], [0., im_h]], while `bbox_xyxyn` contains the same bounding box in normalized coordinates [0., 1.].
- `bbox_xyxy[n]` and `polygon_xy[n]` are clipped to `image_shape`, so (after casting to integers) you can use them for indexing without further bounds checks.
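The relationships between these keys can be sketched in plain Python: `cxcy` and `wh` follow from `bbox_xyxy`, the normalized version divides by the image width and height, and clipping keeps coordinates inside `image_shape`. The helpers `bbox_center_size`, `clip_bbox`, `normalize_bbox` and `crop` are hypothetical; QRDet already returns these values, so this is only an illustration of what they mean, not the library's code. Because the arrays are `np.float32`, coordinates are cast to integers before slicing; with a NumPy image the crop would be `image[y1:y2, x1:x2]`.

```python
def bbox_center_size(bbox_xyxy):
    """Center (cx, cy) and size (w, h) of an [x1, y1, x2, y2] box, as in cxcy and wh."""
    x1, y1, x2, y2 = (float(v) for v in bbox_xyxy)
    return ((x1 + x2) / 2, (y1 + y2) / 2), (x2 - x1, y2 - y1)


def clip_bbox(bbox_xyxy, image_shape):
    """Clip an [x1, y1, x2, y2] box to an image of shape (h, w)."""
    h, w = float(image_shape[0]), float(image_shape[1])
    x1, y1, x2, y2 = (float(v) for v in bbox_xyxy)
    return [min(max(x1, 0.0), w), min(max(y1, 0.0), h),
            min(max(x2, 0.0), w), min(max(y2, 0.0), h)]


def normalize_bbox(bbox_xyxy, image_shape):
    """Express an absolute [x1, y1, x2, y2] box in [0, 1] coordinates (cf. bbox_xyxyn)."""
    h, w = float(image_shape[0]), float(image_shape[1])
    x1, y1, x2, y2 = (float(v) for v in bbox_xyxy)
    return [x1 / w, y1 / h, x2 / w, y2 / h]


def crop(image, bbox_xyxy):
    """Crop a row-major nested-list image; float coordinates are truncated to ints first."""
    x1, y1, x2, y2 = (int(v) for v in bbox_xyxy)
    return [row[x1:x2] for row in image[y1:y2]]


# Made-up values: a 4x6 image whose pixel at (row r, col c) is 10 * r + c.
image_shape = (4, 6)  # (h, w), as in image_shape
image = [[10 * r + c for c in range(6)] for r in range(4)]

bbox = clip_bbox([-2.0, 1.0, 3.0, 9.0], image_shape)
print(bbox)                               # [0.0, 1.0, 3.0, 4.0]
print(normalize_bbox(bbox, image_shape))  # [0.0, 0.25, 0.5, 1.0]
print(bbox_center_size(bbox))             # ((1.5, 2.5), (3.0, 3.0))
print(crop(image, bbox))                  # [[10, 11, 12], [20, 21, 22], [30, 31, 32]]
```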

This library is based on the following projects:

- YOLOv8 model for object segmentation.

- QuadrilateralFitter for fitting four-corner polygons from noisy segmentation outputs.