diff --git a/.DS_Store b/.DS_Store

deleted file mode 100644

index 03ce2149..00000000

Binary files a/.DS_Store and /dev/null differ

diff --git a/.github/FUNDING.yml b/.github/FUNDING.yml

new file mode 100644

index 00000000..d68b17e1

--- /dev/null

+++ b/.github/FUNDING.yml

@@ -0,0 +1,12 @@

+# These are supported funding model platforms

+

+github: # Replace with up to 4 GitHub Sponsors-enabled usernames e.g., [user1, user2]

+patreon: # Replace with a single Patreon username

+open_collective: # Replace with a single Open Collective username

+ko_fi: # Replace with a single Ko-fi username

+tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel

+community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry

+liberapay: # Replace with a single Liberapay username

+issuehunt: # Replace with a single IssueHunt username

+otechie: # Replace with a single Otechie username

+custom: ['https://www.paypal.me/Udayraj123/','https://www.buymeacoffee.com/Udayraj123']

diff --git a/.github/ISSUE_TEMPLATE/bug_report.md b/.github/ISSUE_TEMPLATE/bug_report.md

new file mode 100644

index 00000000..408ab293

--- /dev/null

+++ b/.github/ISSUE_TEMPLATE/bug_report.md

@@ -0,0 +1,34 @@

+---

+name: Bug report

+about: Create a report to help us improve

+title: "[Bug]"

+labels: ''

+assignees: ''

+

+---

+

+**Describe the bug**

+A clear and concise description of what the bug is.

+

+**To Reproduce**

+Steps to reproduce the behavior:

+1. Use sample '...'

+2. Command(s) used '....'

+3. See error

+

+**Expected behavior**

+A clear and concise description of what you expected to happen.

+

+**Screenshots**

+If applicable, add screenshots to help explain your problem.

+

+**Desktop (please complete the following information):**

+ - OS: [e.g. MacOS, Linux, Windows]

+ - Python version

+ - OpenCV version

+

+

+**Additional context**

+Add any other context about the problem here.

+

+Error Stack trace. Sample images used, etc

diff --git a/.github/ISSUE_TEMPLATE/feature_request.md b/.github/ISSUE_TEMPLATE/feature_request.md

new file mode 100644

index 00000000..604341ba

--- /dev/null

+++ b/.github/ISSUE_TEMPLATE/feature_request.md

@@ -0,0 +1,20 @@

+---

+name: Feature request

+about: Suggest an idea/enhancement for this project

+title: "[Feature]"

+labels: enhancement

+assignees: ''

+

+---

+

+**Is your feature request related to a problem? Please describe.**

+A clear and concise description of what the problem is. Ex. I think implementing [...] will help everyone.

+

+**Describe the solution you'd like**

+A clear and concise description of what you want to happen.

+

+**Describe alternatives you've considered**

+A clear and concise description of any alternative solutions or features you've considered.

+

+**Additional context**

+Add any other context or screenshots about the feature request here.

diff --git a/.github/pre-commit.yml b/.github/pre-commit.yml

new file mode 100644

index 00000000..d6b9d9a6

--- /dev/null

+++ b/.github/pre-commit.yml

@@ -0,0 +1,13 @@

+name: Pre-Commit Hook

+

+on: [push, pull_request]

+

+jobs:

+ pre-commit:

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v3

+ - uses: actions/setup-python@v3

+ with:

+ python-version: '3.11'

+ - uses: pre-commit/action@v3.0.0

diff --git a/.gitignore b/.gitignore

index 2778cf2c..a7d4046b 100644

--- a/.gitignore

+++ b/.gitignore

@@ -1,12 +1,14 @@

-**/__pycache__

-**/CheckedOMRs

-**/ignore

-**/.DS_Store

-OMRChecker.wiki/

+# Any directory starting with a dot

+**/\.*/

+# Except .github

+!.github/

+

# Everything in inputs/ and outputs/

inputs/*

outputs/*

-# Except *.json and OMR_Files/

-# !inputs/OMR_Files/

-# !inputs/*.json

-# !inputs/omr_marker.jpg

\ No newline at end of file

+

+# Misc

+**/.DS_Store

+**/__pycache__

+venv/

+OMRChecker.wiki/

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

new file mode 100644

index 00000000..2daa2af8

--- /dev/null

+++ b/.pre-commit-config.yaml

@@ -0,0 +1,59 @@

+exclude: "__snapshots__/.*$"

+default_install_hook_types: [pre-commit, pre-push]

+repos:

+ - repo: https://github.com/pre-commit/pre-commit-hooks

+ rev: v4.4.0

+ hooks:

+ - id: check-yaml

+ stages: [commit]

+ - id: check-added-large-files

+ args: ['--maxkb=300']

+ fail_fast: false

+ stages: [commit]

+ - id: pretty-format-json

+ args: ['--autofix', '--no-sort-keys']

+ - id: end-of-file-fixer

+ exclude_types: ["csv", "json"]

+ stages: [commit]

+ - id: trailing-whitespace

+ stages: [commit]

+ - repo: https://github.com/pycqa/isort

+ rev: 5.12.0

+ hooks:

+ - id: isort

+ args: ["--profile", "black"]

+ stages: [commit]

+ - repo: https://github.com/psf/black

+ rev: 23.3.0

+ hooks:

+ - id: black

+ fail_fast: true

+ stages: [commit]

+ - repo: https://github.com/pycqa/flake8

+ rev: 6.0.0

+ hooks:

+ - id: flake8

+ args:

+ - "--ignore=E501,W503,E203,E741,F541" # Line too long, Line break occurred before a binary operator, Whitespace before ':'

+ fail_fast: true

+ stages: [commit]

+ - repo: local

+ hooks:

+ - id: pytest-on-commit

+ name: Running single sample test

+ entry: python3 -m pytest -rfpsxEX --disable-warnings --verbose -k sample1

+ language: system

+ pass_filenames: false

+ always_run: true

+ fail_fast: true

+ stages: [commit]

+ - repo: local

+ hooks:

+ - id: pytest-on-push

+ name: Running all tests before push...

+ entry: python3 -m pytest -rfpsxEX --disable-warnings --verbose --durations=3

+ language: system

+ pass_filenames: false

+ always_run: true

+ fail_fast: true

+ stages: [push]

diff --git a/.pylintrc b/.pylintrc

new file mode 100644

index 00000000..405ce2e7

--- /dev/null

+++ b/.pylintrc

@@ -0,0 +1,43 @@

+[BASIC]

+# Regular expression matching correct variable names. Overrides variable-naming-style.

+# snake_case with single letter regex -

+variable-rgx=[a-z0-9_]{1,30}$

+

+# Good variable names which should always be accepted, separated by a comma.

+good-names=x,y,pt

+

+[MESSAGES CONTROL]

+

+# Disable the message, report, category or checker with the given id(s). You

+# can either give multiple identifiers separated by comma (,) or put this

+# option multiple times (only on the command line, not in the configuration

+# file where it should appear only once). You can also use "--disable=all" to

+# disable everything first and then reenable specific checks. For example, if

+# you want to run only the similarities checker, you can use "--disable=all

+# --enable=similarities". If you want to run only the classes checker, but have

+# no Warning level messages displayed, use "--disable=all --enable=classes

+# --disable=W".

+disable=import-error,

+ unresolved-import,

+ too-few-public-methods,

+ missing-docstring,

+ relative-beyond-top-level,

+ too-many-instance-attributes,

+ bad-continuation,

+ no-member

+

+# Note: bad-continuation is a false positive showing bug in pylint

+# https://github.com/psf/black/issues/48

+

+

+[REPORTS]

+# Set the output format. Available formats are text, parseable, colorized, json

+# and msvs (visual studio). You can also give a reporter class, e.g.

+# mypackage.mymodule.MyReporterClass.

+output-format=text

+

+# Tells whether to display a full report or only the messages.

+reports=no

+

+# Activate the evaluation score.

+score=yes

diff --git a/CODE_OF_CONDUCT.md b/CODE_OF_CONDUCT.md

new file mode 100644

index 00000000..5a01f7a6

--- /dev/null

+++ b/CODE_OF_CONDUCT.md

@@ -0,0 +1,133 @@

+

+# Contributor Covenant Code of Conduct

+

+## Our Pledge

+

+We as members, contributors, and leaders pledge to make participation in our

+community a harassment-free experience for everyone, regardless of age, body

+size, visible or invisible disability, ethnicity, sex characteristics, gender

+identity and expression, level of experience, education, socio-economic status,

+nationality, personal appearance, race, caste, color, religion, or sexual

+identity and orientation.

+

+We pledge to act and interact in ways that contribute to an open, welcoming,

+diverse, inclusive, and healthy community.

+

+## Our Standards

+

+Examples of behavior that contributes to a positive environment for our

+community include:

+

+* Demonstrating empathy and kindness toward other people

+* Being respectful of differing opinions, viewpoints, and experiences

+* Giving and gracefully accepting constructive feedback

+* Accepting responsibility and apologizing to those affected by our mistakes,

+ and learning from the experience

+* Focusing on what is best not just for us as individuals, but for the overall

+ community

+

+Examples of unacceptable behavior include:

+

+* The use of sexualized language or imagery, and sexual attention or advances of

+ any kind

+* Trolling, insulting or derogatory comments, and personal or political attacks

+* Public or private harassment

+* Publishing others' private information, such as a physical or email address,

+ without their explicit permission

+* Other conduct which could reasonably be considered inappropriate in a

+ professional setting

+

+## Enforcement Responsibilities

+

+Community leaders are responsible for clarifying and enforcing our standards of

+acceptable behavior and will take appropriate and fair corrective action in

+response to any behavior that they deem inappropriate, threatening, offensive,

+or harmful.

+

+Community leaders have the right and responsibility to remove, edit, or reject

+comments, commits, code, wiki edits, issues, and other contributions that are

+not aligned to this Code of Conduct, and will communicate reasons for moderation

+decisions when appropriate.

+

+## Scope

+

+This Code of Conduct applies within all community spaces, and also applies when

+an individual is officially representing the community in public spaces.

+Examples of representing our community include using an official email address,

+posting via an official social media account, or acting as an appointed

+representative at an online or offline event.

+

+## Enforcement

+

+Instances of abusive, harassing, or otherwise unacceptable behavior may be

+reported to the community leaders responsible for enforcement at

+[INSERT CONTACT METHOD].

+All complaints will be reviewed and investigated promptly and fairly.

+

+All community leaders are obligated to respect the privacy and security of the

+reporter of any incident.

+

+## Enforcement Guidelines

+

+Community leaders will follow these Community Impact Guidelines in determining

+the consequences for any action they deem in violation of this Code of Conduct:

+

+### 1. Correction

+

+**Community Impact**: Use of inappropriate language or other behavior deemed

+unprofessional or unwelcome in the community.

+

+**Consequence**: A private, written warning from community leaders, providing

+clarity around the nature of the violation and an explanation of why the

+behavior was inappropriate. A public apology may be requested.

+

+### 2. Warning

+

+**Community Impact**: A violation through a single incident or series of

+actions.

+

+**Consequence**: A warning with consequences for continued behavior. No

+interaction with the people involved, including unsolicited interaction with

+those enforcing the Code of Conduct, for a specified period of time. This

+includes avoiding interactions in community spaces as well as external channels

+like social media. Violating these terms may lead to a temporary or permanent

+ban.

+

+### 3. Temporary Ban

+

+**Community Impact**: A serious violation of community standards, including

+sustained inappropriate behavior.

+

+**Consequence**: A temporary ban from any sort of interaction or public

+communication with the community for a specified period of time. No public or

+private interaction with the people involved, including unsolicited interaction

+with those enforcing the Code of Conduct, is allowed during this period.

+Violating these terms may lead to a permanent ban.

+

+### 4. Permanent Ban

+

+**Community Impact**: Demonstrating a pattern of violation of community

+standards, including sustained inappropriate behavior, harassment of an

+individual, or aggression toward or disparagement of classes of individuals.

+

+**Consequence**: A permanent ban from any sort of public interaction within the

+community.

+

+## Attribution

+

+This Code of Conduct is adapted from the [Contributor Covenant][homepage],

+version 2.1, available at

+[https://www.contributor-covenant.org/version/2/1/code_of_conduct.html][v2.1].

+

+Community Impact Guidelines were inspired by

+[Mozilla's code of conduct enforcement ladder][Mozilla CoC].

+

+For answers to common questions about this code of conduct, see the FAQ at

+[https://www.contributor-covenant.org/faq][FAQ]. Translations are available at

+[https://www.contributor-covenant.org/translations][translations].

+

+[homepage]: https://www.contributor-covenant.org

+[v2.1]: https://www.contributor-covenant.org/version/2/1/code_of_conduct.html

+[Mozilla CoC]: https://github.com/mozilla/diversity

+[FAQ]: https://www.contributor-covenant.org/faq

+[translations]: https://www.contributor-covenant.org/translations

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

new file mode 100644

index 00000000..dbf459d2

--- /dev/null

+++ b/CONTRIBUTING.md

@@ -0,0 +1,32 @@

+# How to contribute

+So you want to write code and get it landed in the official OMRChecker repository?

+First, fork our repository into your own GitHub account, and create a local clone of it as described in the installation instructions.

+The latter will be used to get new features implemented or bugs fixed.

+

+Once done and you have the code locally on the disk, you can get started. We advise you to not work directly on the master branch,

+but to create a separate branch for each issue you are working on. That way you can easily switch between different work,

+and you can update each one for the latest changes on the upstream master individually.

+

+

+# Writing Code

+For writing the code just follow the [Pep8 Python style](https://peps.python.org/pep-0008/) guide, If there is something unclear about the style, just look at existing code which might help you to understand it better.

+

+Also, try to use commits with [conventional messages](https://www.conventionalcommits.org/en/v1.0.0/#summary).

+

+

+# Code Formatting

+Before committing your code, make sure to run the following command to format your code according to the PEP8 style guide:

+```.sh

+pip install -r requirements.dev.txt && pre-commit install

+```

+

+Run `pre-commit` before committing your changes:

+```.sh

+git add .

+pre-commit run -a

+```

+

+# Where to contribute from

+

+- You can pickup any open [issues](https://github.com/Udayraj123/OMRChecker/issues) to solve.

+- You can also check out the [ideas list](https://github.com/users/Udayraj123/projects/2/views/1)

diff --git a/Contributors.md b/Contributors.md

new file mode 100644

index 00000000..3f95b245

--- /dev/null

+++ b/Contributors.md

@@ -0,0 +1,22 @@

+# Contributors

+

+- [Udayraj123](https://github.com/Udayraj123)

+- [leongwaikay](https://github.com/leongwaikay)

+- [deepakgouda](https://github.com/deepakgouda)

+- [apurva91](https://github.com/apurva91)

+- [sparsh2706](https://github.com/sparsh2706)

+- [namit2saxena](https://github.com/namit2saxena)

+- [Harsh-Kapoorr](https://github.com/Harsh-Kapoorr)

+- [Sandeep-1507](https://github.com/Sandeep-1507)

+- [SpyzzVVarun](https://github.com/SpyzzVVarun)

+- [asc249](https://github.com/asc249)

+- [05Alston](https://github.com/05Alston)

+- [Antibodyy](https://github.com/Antibodyy)

+- [infinity1729](https://github.com/infinity1729)

+- [Rohan-G](https://github.com/Rohan-G)

+- [UjjwalMahar](https://github.com/UjjwalMahar)

+- [Kurtsley](https://github.com/Kurtsley)

+- [gaursagar21](https://github.com/gaursagar21)

+- [aayushibansal2001](https://github.com/aayushibansal2001)

+- [ShamanthVallem](https://github.com/ShamanthVallem)

+- [rudrapsc](https://github.com/rudrapsc)

diff --git a/LICENSE b/LICENSE

index fbbea73a..3942f30c 100644

--- a/LICENSE

+++ b/LICENSE

@@ -1,621 +1,22 @@

- GNU GENERAL PUBLIC LICENSE

- Version 3, 29 June 2007

-

- Copyright (C) 2007 Free Software Foundation, Inc.

- Everyone is permitted to copy and distribute verbatim copies

- of this license document, but changing it is not allowed.

-

- Preamble

-

- The GNU General Public License is a free, copyleft license for

-software and other kinds of works.

-

- The licenses for most software and other practical works are designed

-to take away your freedom to share and change the works. By contrast,

-the GNU General Public License is intended to guarantee your freedom to

-share and change all versions of a program--to make sure it remains free

-software for all its users. We, the Free Software Foundation, use the

-GNU General Public License for most of our software; it applies also to

-any other work released this way by its authors. You can apply it to

-your programs, too.

-

- When we speak of free software, we are referring to freedom, not

-price. Our General Public Licenses are designed to make sure that you

-have the freedom to distribute copies of free software (and charge for

-them if you wish), that you receive source code or can get it if you

-want it, that you can change the software or use pieces of it in new

-free programs, and that you know you can do these things.

-

- To protect your rights, we need to prevent others from denying you

-these rights or asking you to surrender the rights. Therefore, you have

-certain responsibilities if you distribute copies of the software, or if

-you modify it: responsibilities to respect the freedom of others.

-

- For example, if you distribute copies of such a program, whether

-gratis or for a fee, you must pass on to the recipients the same

-freedoms that you received. You must make sure that they, too, receive

-or can get the source code. And you must show them these terms so they

-know their rights.

-

- Developers that use the GNU GPL protect your rights with two steps:

-(1) assert copyright on the software, and (2) offer you this License

-giving you legal permission to copy, distribute and/or modify it.

-

- For the developers' and authors' protection, the GPL clearly explains

-that there is no warranty for this free software. For both users' and

-authors' sake, the GPL requires that modified versions be marked as

-changed, so that their problems will not be attributed erroneously to

-authors of previous versions.

-

- Some devices are designed to deny users access to install or run

-modified versions of the software inside them, although the manufacturer

-can do so. This is fundamentally incompatible with the aim of

-protecting users' freedom to change the software. The systematic

-pattern of such abuse occurs in the area of products for individuals to

-use, which is precisely where it is most unacceptable. Therefore, we

-have designed this version of the GPL to prohibit the practice for those

-products. If such problems arise substantially in other domains, we

-stand ready to extend this provision to those domains in future versions

-of the GPL, as needed to protect the freedom of users.

-

- Finally, every program is threatened constantly by software patents.

-States should not allow patents to restrict development and use of

-software on general-purpose computers, but in those that do, we wish to

-avoid the special danger that patents applied to a free program could

-make it effectively proprietary. To prevent this, the GPL assures that

-patents cannot be used to render the program non-free.

-

- The precise terms and conditions for copying, distribution and

-modification follow.

-

- TERMS AND CONDITIONS

-

- 0. Definitions.

-

- "This License" refers to version 3 of the GNU General Public License.

-

- "Copyright" also means copyright-like laws that apply to other kinds of

-works, such as semiconductor masks.

-

- "The Program" refers to any copyrightable work licensed under this

-License. Each licensee is addressed as "you". "Licensees" and

-"recipients" may be individuals or organizations.

-

- To "modify" a work means to copy from or adapt all or part of the work

-in a fashion requiring copyright permission, other than the making of an

-exact copy. The resulting work is called a "modified version" of the

-earlier work or a work "based on" the earlier work.

-

- A "covered work" means either the unmodified Program or a work based

-on the Program.

-

- To "propagate" a work means to do anything with it that, without

-permission, would make you directly or secondarily liable for

-infringement under applicable copyright law, except executing it on a

-computer or modifying a private copy. Propagation includes copying,

-distribution (with or without modification), making available to the

-public, and in some countries other activities as well.

-

- To "convey" a work means any kind of propagation that enables other

-parties to make or receive copies. Mere interaction with a user through

-a computer network, with no transfer of a copy, is not conveying.

-

- An interactive user interface displays "Appropriate Legal Notices"

-to the extent that it includes a convenient and prominently visible

-feature that (1) displays an appropriate copyright notice, and (2)

-tells the user that there is no warranty for the work (except to the

-extent that warranties are provided), that licensees may convey the

-work under this License, and how to view a copy of this License. If

-the interface presents a list of user commands or options, such as a

-menu, a prominent item in the list meets this criterion.

-

- 1. Source Code.

-

- The "source code" for a work means the preferred form of the work

-for making modifications to it. "Object code" means any non-source

-form of a work.

-

- A "Standard Interface" means an interface that either is an official

-standard defined by a recognized standards body, or, in the case of

-interfaces specified for a particular programming language, one that

-is widely used among developers working in that language.

-

- The "System Libraries" of an executable work include anything, other

-than the work as a whole, that (a) is included in the normal form of

-packaging a Major Component, but which is not part of that Major

-Component, and (b) serves only to enable use of the work with that

-Major Component, or to implement a Standard Interface for which an

-implementation is available to the public in source code form. A

-"Major Component", in this context, means a major essential component

-(kernel, window system, and so on) of the specific operating system

-(if any) on which the executable work runs, or a compiler used to

-produce the work, or an object code interpreter used to run it.

-

- The "Corresponding Source" for a work in object code form means all

-the source code needed to generate, install, and (for an executable

-work) run the object code and to modify the work, including scripts to

-control those activities. However, it does not include the work's

-System Libraries, or general-purpose tools or generally available free

-programs which are used unmodified in performing those activities but

-which are not part of the work. For example, Corresponding Source

-includes interface definition files associated with source files for

-the work, and the source code for shared libraries and dynamically

-linked subprograms that the work is specifically designed to require,

-such as by intimate data communication or control flow between those

-subprograms and other parts of the work.

-

- The Corresponding Source need not include anything that users

-can regenerate automatically from other parts of the Corresponding

-Source.

-

- The Corresponding Source for a work in source code form is that

-same work.

-

- 2. Basic Permissions.

-

- All rights granted under this License are granted for the term of

-copyright on the Program, and are irrevocable provided the stated

-conditions are met. This License explicitly affirms your unlimited

-permission to run the unmodified Program. The output from running a

-covered work is covered by this License only if the output, given its

-content, constitutes a covered work. This License acknowledges your

-rights of fair use or other equivalent, as provided by copyright law.

-

- You may make, run and propagate covered works that you do not

-convey, without conditions so long as your license otherwise remains

-in force. You may convey covered works to others for the sole purpose

-of having them make modifications exclusively for you, or provide you

-with facilities for running those works, provided that you comply with

-the terms of this License in conveying all material for which you do

-not control copyright. Those thus making or running the covered works

-for you must do so exclusively on your behalf, under your direction

-and control, on terms that prohibit them from making any copies of

-your copyrighted material outside their relationship with you.

-

- Conveying under any other circumstances is permitted solely under

-the conditions stated below. Sublicensing is not allowed; section 10

-makes it unnecessary.

-

- 3. Protecting Users' Legal Rights From Anti-Circumvention Law.

-

- No covered work shall be deemed part of an effective technological

-measure under any applicable law fulfilling obligations under article

-11 of the WIPO copyright treaty adopted on 20 December 1996, or

-similar laws prohibiting or restricting circumvention of such

-measures.

-

- When you convey a covered work, you waive any legal power to forbid

-circumvention of technological measures to the extent such circumvention

-is effected by exercising rights under this License with respect to

-the covered work, and you disclaim any intention to limit operation or

-modification of the work as a means of enforcing, against the work's

-users, your or third parties' legal rights to forbid circumvention of

-technological measures.

-

- 4. Conveying Verbatim Copies.

-

- You may convey verbatim copies of the Program's source code as you

-receive it, in any medium, provided that you conspicuously and

-appropriately publish on each copy an appropriate copyright notice;

-keep intact all notices stating that this License and any

-non-permissive terms added in accord with section 7 apply to the code;

-keep intact all notices of the absence of any warranty; and give all

-recipients a copy of this License along with the Program.

-

- You may charge any price or no price for each copy that you convey,

-and you may offer support or warranty protection for a fee.

-

- 5. Conveying Modified Source Versions.

-

- You may convey a work based on the Program, or the modifications to

-produce it from the Program, in the form of source code under the

-terms of section 4, provided that you also meet all of these conditions:

-

- a) The work must carry prominent notices stating that you modified

- it, and giving a relevant date.

-

- b) The work must carry prominent notices stating that it is

- released under this License and any conditions added under section

- 7. This requirement modifies the requirement in section 4 to

- "keep intact all notices".

-

- c) You must license the entire work, as a whole, under this

- License to anyone who comes into possession of a copy. This

- License will therefore apply, along with any applicable section 7

- additional terms, to the whole of the work, and all its parts,

- regardless of how they are packaged. This License gives no

- permission to license the work in any other way, but it does not

- invalidate such permission if you have separately received it.

-

- d) If the work has interactive user interfaces, each must display

- Appropriate Legal Notices; however, if the Program has interactive

- interfaces that do not display Appropriate Legal Notices, your

- work need not make them do so.

-

- A compilation of a covered work with other separate and independent

-works, which are not by their nature extensions of the covered work,

-and which are not combined with it such as to form a larger program,

-in or on a volume of a storage or distribution medium, is called an

-"aggregate" if the compilation and its resulting copyright are not

-used to limit the access or legal rights of the compilation's users

-beyond what the individual works permit. Inclusion of a covered work

-in an aggregate does not cause this License to apply to the other

-parts of the aggregate.

-

- 6. Conveying Non-Source Forms.

-

- You may convey a covered work in object code form under the terms

-of sections 4 and 5, provided that you also convey the

-machine-readable Corresponding Source under the terms of this License,

-in one of these ways:

-

- a) Convey the object code in, or embodied in, a physical product

- (including a physical distribution medium), accompanied by the

- Corresponding Source fixed on a durable physical medium

- customarily used for software interchange.

-

- b) Convey the object code in, or embodied in, a physical product

- (including a physical distribution medium), accompanied by a

- written offer, valid for at least three years and valid for as

- long as you offer spare parts or customer support for that product

- model, to give anyone who possesses the object code either (1) a

- copy of the Corresponding Source for all the software in the

- product that is covered by this License, on a durable physical

- medium customarily used for software interchange, for a price no

- more than your reasonable cost of physically performing this

- conveying of source, or (2) access to copy the

- Corresponding Source from a network server at no charge.

-

- c) Convey individual copies of the object code with a copy of the

- written offer to provide the Corresponding Source. This

- alternative is allowed only occasionally and noncommercially, and

- only if you received the object code with such an offer, in accord

- with subsection 6b.

-

- d) Convey the object code by offering access from a designated

- place (gratis or for a charge), and offer equivalent access to the

- Corresponding Source in the same way through the same place at no

- further charge. You need not require recipients to copy the

- Corresponding Source along with the object code. If the place to

- copy the object code is a network server, the Corresponding Source

- may be on a different server (operated by you or a third party)

- that supports equivalent copying facilities, provided you maintain

- clear directions next to the object code saying where to find the

- Corresponding Source. Regardless of what server hosts the

- Corresponding Source, you remain obligated to ensure that it is

- available for as long as needed to satisfy these requirements.

-

- e) Convey the object code using peer-to-peer transmission, provided

- you inform other peers where the object code and Corresponding

- Source of the work are being offered to the general public at no

- charge under subsection 6d.

-

- A separable portion of the object code, whose source code is excluded

-from the Corresponding Source as a System Library, need not be

-included in conveying the object code work.

-

- A "User Product" is either (1) a "consumer product", which means any

-tangible personal property which is normally used for personal, family,

-or household purposes, or (2) anything designed or sold for incorporation

-into a dwelling. In determining whether a product is a consumer product,

-doubtful cases shall be resolved in favor of coverage. For a particular

-product received by a particular user, "normally used" refers to a

-typical or common use of that class of product, regardless of the status

-of the particular user or of the way in which the particular user

-actually uses, or expects or is expected to use, the product. A product

-is a consumer product regardless of whether the product has substantial

-commercial, industrial or non-consumer uses, unless such uses represent

-the only significant mode of use of the product.

-

- "Installation Information" for a User Product means any methods,

-procedures, authorization keys, or other information required to install

-and execute modified versions of a covered work in that User Product from

-a modified version of its Corresponding Source. The information must

-suffice to ensure that the continued functioning of the modified object

-code is in no case prevented or interfered with solely because

-modification has been made.

-

- If you convey an object code work under this section in, or with, or

-specifically for use in, a User Product, and the conveying occurs as

-part of a transaction in which the right of possession and use of the

-User Product is transferred to the recipient in perpetuity or for a

-fixed term (regardless of how the transaction is characterized), the

-Corresponding Source conveyed under this section must be accompanied

-by the Installation Information. But this requirement does not apply

-if neither you nor any third party retains the ability to install

-modified object code on the User Product (for example, the work has

-been installed in ROM).

-

- The requirement to provide Installation Information does not include a

-requirement to continue to provide support service, warranty, or updates

-for a work that has been modified or installed by the recipient, or for

-the User Product in which it has been modified or installed. Access to a

-network may be denied when the modification itself materially and

-adversely affects the operation of the network or violates the rules and

-protocols for communication across the network.

-

- Corresponding Source conveyed, and Installation Information provided,

-in accord with this section must be in a format that is publicly

-documented (and with an implementation available to the public in

-source code form), and must require no special password or key for

-unpacking, reading or copying.

-

- 7. Additional Terms.

-

- "Additional permissions" are terms that supplement the terms of this

-License by making exceptions from one or more of its conditions.

-Additional permissions that are applicable to the entire Program shall

-be treated as though they were included in this License, to the extent

-that they are valid under applicable law. If additional permissions

-apply only to part of the Program, that part may be used separately

-under those permissions, but the entire Program remains governed by

-this License without regard to the additional permissions.

-

- When you convey a copy of a covered work, you may at your option

-remove any additional permissions from that copy, or from any part of

-it. (Additional permissions may be written to require their own

-removal in certain cases when you modify the work.) You may place

-additional permissions on material, added by you to a covered work,

-for which you have or can give appropriate copyright permission.

-

- Notwithstanding any other provision of this License, for material you

-add to a covered work, you may (if authorized by the copyright holders of

-that material) supplement the terms of this License with terms:

-

- a) Disclaiming warranty or limiting liability differently from the

- terms of sections 15 and 16 of this License; or

-

- b) Requiring preservation of specified reasonable legal notices or

- author attributions in that material or in the Appropriate Legal

- Notices displayed by works containing it; or

-

- c) Prohibiting misrepresentation of the origin of that material, or

- requiring that modified versions of such material be marked in

- reasonable ways as different from the original version; or

-

- d) Limiting the use for publicity purposes of names of licensors or

- authors of the material; or

-

- e) Declining to grant rights under trademark law for use of some

- trade names, trademarks, or service marks; or

-

- f) Requiring indemnification of licensors and authors of that

- material by anyone who conveys the material (or modified versions of

- it) with contractual assumptions of liability to the recipient, for

- any liability that these contractual assumptions directly impose on

- those licensors and authors.

-

- All other non-permissive additional terms are considered "further

-restrictions" within the meaning of section 10. If the Program as you

-received it, or any part of it, contains a notice stating that it is

-governed by this License along with a term that is a further

-restriction, you may remove that term. If a license document contains

-a further restriction but permits relicensing or conveying under this

-License, you may add to a covered work material governed by the terms

-of that license document, provided that the further restriction does

-not survive such relicensing or conveying.

-

- If you add terms to a covered work in accord with this section, you

-must place, in the relevant source files, a statement of the

-additional terms that apply to those files, or a notice indicating

-where to find the applicable terms.

-

- Additional terms, permissive or non-permissive, may be stated in the

-form of a separately written license, or stated as exceptions;

-the above requirements apply either way.

-

- 8. Termination.

-

- You may not propagate or modify a covered work except as expressly

-provided under this License. Any attempt otherwise to propagate or

-modify it is void, and will automatically terminate your rights under

-this License (including any patent licenses granted under the third

-paragraph of section 11).

-

- However, if you cease all violation of this License, then your

-license from a particular copyright holder is reinstated (a)

-provisionally, unless and until the copyright holder explicitly and

-finally terminates your license, and (b) permanently, if the copyright

-holder fails to notify you of the violation by some reasonable means

-prior to 60 days after the cessation.

-

- Moreover, your license from a particular copyright holder is

-reinstated permanently if the copyright holder notifies you of the

-violation by some reasonable means, this is the first time you have

-received notice of violation of this License (for any work) from that

-copyright holder, and you cure the violation prior to 30 days after

-your receipt of the notice.

-

- Termination of your rights under this section does not terminate the

-licenses of parties who have received copies or rights from you under

-this License. If your rights have been terminated and not permanently

-reinstated, you do not qualify to receive new licenses for the same

-material under section 10.

-

- 9. Acceptance Not Required for Having Copies.

-

- You are not required to accept this License in order to receive or

-run a copy of the Program. Ancillary propagation of a covered work

-occurring solely as a consequence of using peer-to-peer transmission

-to receive a copy likewise does not require acceptance. However,

-nothing other than this License grants you permission to propagate or

-modify any covered work. These actions infringe copyright if you do

-not accept this License. Therefore, by modifying or propagating a

-covered work, you indicate your acceptance of this License to do so.

-

- 10. Automatic Licensing of Downstream Recipients.

-

- Each time you convey a covered work, the recipient automatically

-receives a license from the original licensors, to run, modify and

-propagate that work, subject to this License. You are not responsible

-for enforcing compliance by third parties with this License.

-

- An "entity transaction" is a transaction transferring control of an

-organization, or substantially all assets of one, or subdividing an

-organization, or merging organizations. If propagation of a covered

-work results from an entity transaction, each party to that

-transaction who receives a copy of the work also receives whatever

-licenses to the work the party's predecessor in interest had or could

-give under the previous paragraph, plus a right to possession of the

-Corresponding Source of the work from the predecessor in interest, if

-the predecessor has it or can get it with reasonable efforts.

-

- You may not impose any further restrictions on the exercise of the

-rights granted or affirmed under this License. For example, you may

-not impose a license fee, royalty, or other charge for exercise of

-rights granted under this License, and you may not initiate litigation

-(including a cross-claim or counterclaim in a lawsuit) alleging that

-any patent claim is infringed by making, using, selling, offering for

-sale, or importing the Program or any portion of it.

-

- 11. Patents.

-

- A "contributor" is a copyright holder who authorizes use under this

-License of the Program or a work on which the Program is based. The

-work thus licensed is called the contributor's "contributor version".

-

- A contributor's "essential patent claims" are all patent claims

-owned or controlled by the contributor, whether already acquired or

-hereafter acquired, that would be infringed by some manner, permitted

-by this License, of making, using, or selling its contributor version,

-but do not include claims that would be infringed only as a

-consequence of further modification of the contributor version. For

-purposes of this definition, "control" includes the right to grant

-patent sublicenses in a manner consistent with the requirements of

-this License.

-

- Each contributor grants you a non-exclusive, worldwide, royalty-free

-patent license under the contributor's essential patent claims, to

-make, use, sell, offer for sale, import and otherwise run, modify and

-propagate the contents of its contributor version.

-

- In the following three paragraphs, a "patent license" is any express

-agreement or commitment, however denominated, not to enforce a patent

-(such as an express permission to practice a patent or covenant not to

-sue for patent infringement). To "grant" such a patent license to a

-party means to make such an agreement or commitment not to enforce a

-patent against the party.

-

- If you convey a covered work, knowingly relying on a patent license,

-and the Corresponding Source of the work is not available for anyone

-to copy, free of charge and under the terms of this License, through a

-publicly available network server or other readily accessible means,

-then you must either (1) cause the Corresponding Source to be so

-available, or (2) arrange to deprive yourself of the benefit of the

-patent license for this particular work, or (3) arrange, in a manner

-consistent with the requirements of this License, to extend the patent

-license to downstream recipients. "Knowingly relying" means you have

-actual knowledge that, but for the patent license, your conveying the

-covered work in a country, or your recipient's use of the covered work

-in a country, would infringe one or more identifiable patents in that

-country that you have reason to believe are valid.

-

- If, pursuant to or in connection with a single transaction or

-arrangement, you convey, or propagate by procuring conveyance of, a

-covered work, and grant a patent license to some of the parties

-receiving the covered work authorizing them to use, propagate, modify

-or convey a specific copy of the covered work, then the patent license

-you grant is automatically extended to all recipients of the covered

-work and works based on it.

-

- A patent license is "discriminatory" if it does not include within

-the scope of its coverage, prohibits the exercise of, or is

-conditioned on the non-exercise of one or more of the rights that are

-specifically granted under this License. You may not convey a covered

-work if you are a party to an arrangement with a third party that is

-in the business of distributing software, under which you make payment

-to the third party based on the extent of your activity of conveying

-the work, and under which the third party grants, to any of the

-parties who would receive the covered work from you, a discriminatory

-patent license (a) in connection with copies of the covered work

-conveyed by you (or copies made from those copies), or (b) primarily

-for and in connection with specific products or compilations that

-contain the covered work, unless you entered into that arrangement,

-or that patent license was granted, prior to 28 March 2007.

-

- Nothing in this License shall be construed as excluding or limiting

-any implied license or other defenses to infringement that may

-otherwise be available to you under applicable patent law.

-

- 12. No Surrender of Others' Freedom.

-

- If conditions are imposed on you (whether by court order, agreement or

-otherwise) that contradict the conditions of this License, they do not

-excuse you from the conditions of this License. If you cannot convey a

-covered work so as to satisfy simultaneously your obligations under this

-License and any other pertinent obligations, then as a consequence you may

-not convey it at all. For example, if you agree to terms that obligate you

-to collect a royalty for further conveying from those to whom you convey

-the Program, the only way you could satisfy both those terms and this

-License would be to refrain entirely from conveying the Program.

-

- 13. Use with the GNU Affero General Public License.

-

- Notwithstanding any other provision of this License, you have

-permission to link or combine any covered work with a work licensed

-under version 3 of the GNU Affero General Public License into a single

-combined work, and to convey the resulting work. The terms of this

-License will continue to apply to the part which is the covered work,

-but the special requirements of the GNU Affero General Public License,

-section 13, concerning interaction through a network will apply to the

-combination as such.

-

- 14. Revised Versions of this License.

-

- The Free Software Foundation may publish revised and/or new versions of

-the GNU General Public License from time to time. Such new versions will

-be similar in spirit to the present version, but may differ in detail to

-address new problems or concerns.

-

- Each version is given a distinguishing version number. If the

-Program specifies that a certain numbered version of the GNU General

-Public License "or any later version" applies to it, you have the

-option of following the terms and conditions either of that numbered

-version or of any later version published by the Free Software

-Foundation. If the Program does not specify a version number of the

-GNU General Public License, you may choose any version ever published

-by the Free Software Foundation.

-

- If the Program specifies that a proxy can decide which future

-versions of the GNU General Public License can be used, that proxy's

-public statement of acceptance of a version permanently authorizes you

-to choose that version for the Program.

-

- Later license versions may give you additional or different

-permissions. However, no additional obligations are imposed on any

-author or copyright holder as a result of your choosing to follow a

-later version.

-

- 15. Disclaimer of Warranty.

-

- THERE IS NO WARRANTY FOR THE PROGRAM, TO THE EXTENT PERMITTED BY

-APPLICABLE LAW. EXCEPT WHEN OTHERWISE STATED IN WRITING THE COPYRIGHT

-HOLDERS AND/OR OTHER PARTIES PROVIDE THE PROGRAM "AS IS" WITHOUT WARRANTY

-OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, BUT NOT LIMITED TO,

-THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

-PURPOSE. THE ENTIRE RISK AS TO THE QUALITY AND PERFORMANCE OF THE PROGRAM

-IS WITH YOU. SHOULD THE PROGRAM PROVE DEFECTIVE, YOU ASSUME THE COST OF

-ALL NECESSARY SERVICING, REPAIR OR CORRECTION.

-

- 16. Limitation of Liability.

-

- IN NO EVENT UNLESS REQUIRED BY APPLICABLE LAW OR AGREED TO IN WRITING

-WILL ANY COPYRIGHT HOLDER, OR ANY OTHER PARTY WHO MODIFIES AND/OR CONVEYS

-THE PROGRAM AS PERMITTED ABOVE, BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY

-GENERAL, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE

-USE OR INABILITY TO USE THE PROGRAM (INCLUDING BUT NOT LIMITED TO LOSS OF

-DATA OR DATA BEING RENDERED INACCURATE OR LOSSES SUSTAINED BY YOU OR THIRD

-PARTIES OR A FAILURE OF THE PROGRAM TO OPERATE WITH ANY OTHER PROGRAMS),

-EVEN IF SUCH HOLDER OR OTHER PARTY HAS BEEN ADVISED OF THE POSSIBILITY OF

-SUCH DAMAGES.

-

- 17. Interpretation of Sections 15 and 16.

-

- If the disclaimer of warranty and limitation of liability provided

-above cannot be given local legal effect according to their terms,

-reviewing courts shall apply local law that most closely approximates

-an absolute waiver of all civil liability in connection with the

-Program, unless a warranty or assumption of liability accompanies a

-copy of the Program in return for a fee.

-

- END OF TERMS AND CONDITIONS

\ No newline at end of file

+MIT License

+

+Copyright (c) 2024-present Udayraj Deshmukh and other contributors

+

+Permission is hereby granted, free of charge, to any person obtaining

+a copy of this software and associated documentation files (the

+"Software"), to deal in the Software without restriction, including

+without limitation the rights to use, copy, modify, merge, publish,

+distribute, sublicense, and/or sell copies of the Software, and to

+permit persons to whom the Software is furnished to do so, subject to

+the following conditions:

+

+The above copyright notice and this permission notice shall be

+included in all copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

+EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF

+MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

+NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE

+LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION

+OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION

+WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

diff --git a/README.md b/README.md

index 8afb795e..279aaca8 100644

--- a/README.md

+++ b/README.md

@@ -1,60 +1,83 @@

# OMR Checker

-Grade exams fast and accurately using a scanner 🖨 or your phone 🤳.

-[](http://hits.dwyl.io/udayraj123/OMRchecker)

-[](https://github.com/Udayraj123/OMRChecker/wiki/TODOs)

+Read OMR sheets fast and accurately using a scanner 🖨 or your phone 🤳.

+

+## What is OMR?

+

+OMR stands for Optical Mark Recognition, used to detect and interpret human-marked data on documents. OMR refers to the process of reading and evaluating OMR sheets, commonly used in exams, surveys, and other forms.

+

+#### **Quick Links**

+

+- [Installation](#getting-started)

+- [User Guide](https://github.com/Udayraj123/OMRChecker/wiki)

+- [Contributor Guide](https://github.com/Udayraj123/OMRChecker/blob/master/CONTRIBUTING.md)

+- [Project Ideas List](https://github.com/users/Udayraj123/projects/2/views/1)

+

+

+

+[](https://github.com/Udayraj123/OMRChecker/pull/new/master)

[](https://github.com/Udayraj123/OMRChecker/pulls?q=is%3Aclosed)

[](https://GitHub.com/Udayraj123/OMRChecker/issues?q=is%3Aissue+is%3Aclosed)

-[](https://GitHub.com/Udayraj123/OMRChecker/graphs/contributors/)

+[](https://github.com/Udayraj123/OMRChecker/issues/5)

+

+

[](https://GitHub.com/Udayraj123/OMRChecker/stargazers/)

-[](https://github.com/Udayraj123/OMRChecker/pull/new/master)

+[](https://hits.seeyoufarm.com)

[](https://discord.gg/qFv2Vqf)

-[](https://github.com/Udayraj123/OMRChecker/issues/5)

-

-

-#### **TLDR;** Jump to [Getting Started](#getting-started).

+

## 🎯 Features

-A full-fledged OMR checking software that can read and evaluate OMR sheets scanned at any angle and having any color. Support is also provided for a customisable marking scheme with section-wise marking, bonus questions, etc.

+A full-fledged OMR checking software that can read and evaluate OMR sheets scanned at any angle and having any color.

+

+| Specs ![]() |

|   ![]() |

+| :--------------------- | :--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| 💯 **Accurate** | Currently nearly 100% accurate on good quality document scans; and about 90% accurate on mobile images. |

+| 💪🏿 **Robust** | Supports low resolution, xeroxed sheets. See [**Robustness**](https://github.com/Udayraj123/OMRChecker/wiki/Robustness) for more. |

+| ⏩ **Fast** | Current processing speed without any optimization is 200 OMRs/minute. |

+| ✅ **Customizable** | [Easily apply](https://github.com/Udayraj123/OMRChecker/wiki/User-Guide) to custom OMR layouts, surveys, etc. |

+| 📊 **Visually Rich** | [Get insights](https://github.com/Udayraj123/OMRChecker/wiki/Rich-Visuals) to configure and debug easily. |

+| 🎈 **Lightweight** | Very minimal core code size. |

+| 🏫 **Large Scale** | Tested on a large scale at [Technothlon](https://en.wikipedia.org/wiki/Technothlon). |

+| 👩🏿💻 **Dev Friendly** | [Pylinted](http://pylint.pycqa.org/) and [Black formatted](https://github.com/psf/black) code. Also has a [developer community](https://discord.gg/qFv2Vqf) on discord. |

-| Specs |    |

-|:----------------:|-----------------------------------------------------------------------------------------------------------------------------------|

-| 💯 **Accurate** | Currently nearly 100% accurate on good quality document scans; and about 90% accurate on mobile images. |

-| 💪🏿 **Robust** | Supports low resolution, xeroxed sheets. See [**Robustness**](https://github.com/Udayraj123/OMRChecker/wiki/Robustness) for more. |

-| ⏩ **Fast** | Current processing speed without any optimization is 200 OMRs/minute. |

-| ✅ **Extensible** | [**Easily apply**](https://github.com/Udayraj123/OMRChecker/wiki/User-Guide) to different OMR layouts, surveys, etc. |

-| 📊 **Visually Rich Outputs** | [Get insights](https://github.com/Udayraj123/OMRChecker/wiki/Rich-Visuals) to configure and debug easily. |

-| 🎈 **Extremely lightweight** | Core code size is **less than 500 KB**(Samples excluded). |

-| 🏫 **Large Scale** | Used on tens of thousands of OMRs at [Technothlon](https://www.facebook.com/technothlon.techniche). |

-| 👩🏿💻 **Dev Friendly** | [**Well documented**](https://github.com/Udayraj123/OMRChecker/wiki/) repository based on python and openCV with [an active discussion group](https://discord.gg/qFv2Vqf). |

+Note: For solving interesting challenges, developers can check out [**TODOs**](https://github.com/Udayraj123/OMRChecker/wiki/TODOs).

-Note: For solving live challenges, developers can checkout [**TODOs**](https://github.com/Udayraj123/OMRChecker/wiki/TODOs).

-See all details in [Project Wiki](https://github.com/Udayraj123/OMRChecker/wiki/).

+See the complete guide and details at [Project Wiki](https://github.com/Udayraj123/OMRChecker/wiki/).

+

## 💡 What can OMRChecker do for me?

-Once you configure the OMR layout, just throw images of the sheets at the software; and you'll get back the graded responses in an excel sheet!

+

+Once you configure the OMR layout, just throw images of the sheets at the software; and you'll get back the marked responses in an excel sheet!

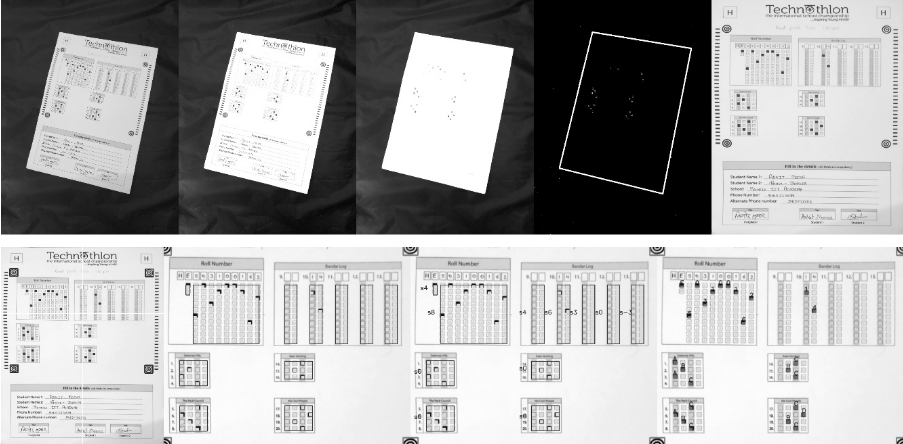

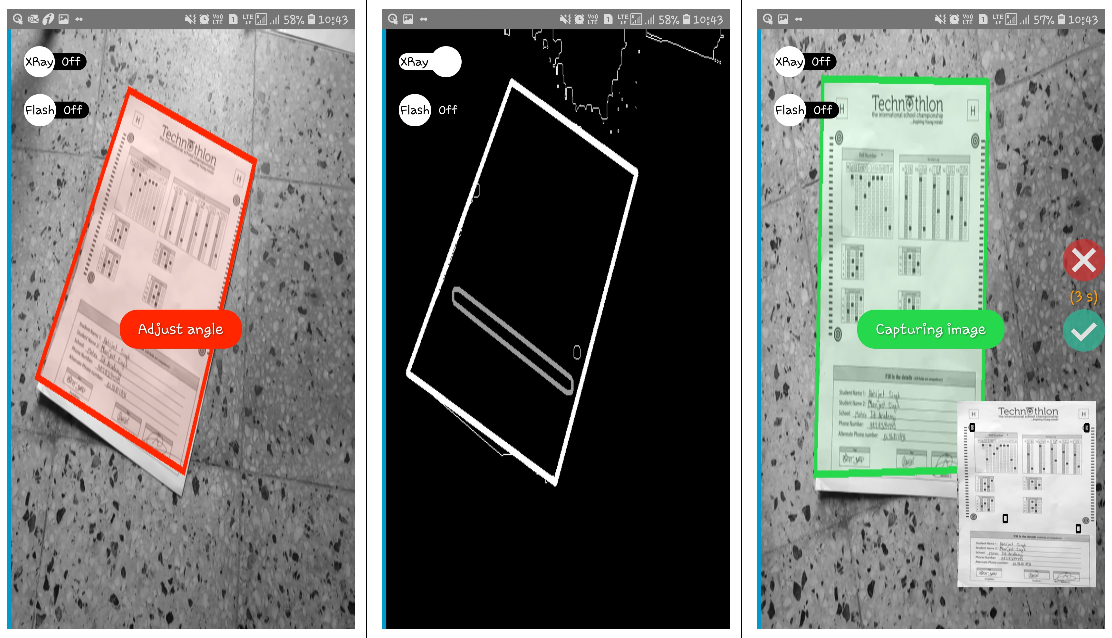

Images can be taken from various angles as shown below-

+

|

+| :--------------------- | :--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| 💯 **Accurate** | Currently nearly 100% accurate on good quality document scans; and about 90% accurate on mobile images. |

+| 💪🏿 **Robust** | Supports low resolution, xeroxed sheets. See [**Robustness**](https://github.com/Udayraj123/OMRChecker/wiki/Robustness) for more. |

+| ⏩ **Fast** | Current processing speed without any optimization is 200 OMRs/minute. |

+| ✅ **Customizable** | [Easily apply](https://github.com/Udayraj123/OMRChecker/wiki/User-Guide) to custom OMR layouts, surveys, etc. |

+| 📊 **Visually Rich** | [Get insights](https://github.com/Udayraj123/OMRChecker/wiki/Rich-Visuals) to configure and debug easily. |

+| 🎈 **Lightweight** | Very minimal core code size. |

+| 🏫 **Large Scale** | Tested on a large scale at [Technothlon](https://en.wikipedia.org/wiki/Technothlon). |

+| 👩🏿💻 **Dev Friendly** | [Pylinted](http://pylint.pycqa.org/) and [Black formatted](https://github.com/psf/black) code. Also has a [developer community](https://discord.gg/qFv2Vqf) on discord. |

-| Specs |    |

-|:----------------:|-----------------------------------------------------------------------------------------------------------------------------------|

-| 💯 **Accurate** | Currently nearly 100% accurate on good quality document scans; and about 90% accurate on mobile images. |

-| 💪🏿 **Robust** | Supports low resolution, xeroxed sheets. See [**Robustness**](https://github.com/Udayraj123/OMRChecker/wiki/Robustness) for more. |

-| ⏩ **Fast** | Current processing speed without any optimization is 200 OMRs/minute. |

-| ✅ **Extensible** | [**Easily apply**](https://github.com/Udayraj123/OMRChecker/wiki/User-Guide) to different OMR layouts, surveys, etc. |

-| 📊 **Visually Rich Outputs** | [Get insights](https://github.com/Udayraj123/OMRChecker/wiki/Rich-Visuals) to configure and debug easily. |

-| 🎈 **Extremely lightweight** | Core code size is **less than 500 KB**(Samples excluded). |

-| 🏫 **Large Scale** | Used on tens of thousands of OMRs at [Technothlon](https://www.facebook.com/technothlon.techniche). |

-| 👩🏿💻 **Dev Friendly** | [**Well documented**](https://github.com/Udayraj123/OMRChecker/wiki/) repository based on python and openCV with [an active discussion group](https://discord.gg/qFv2Vqf). |

+Note: For solving interesting challenges, developers can check out [**TODOs**](https://github.com/Udayraj123/OMRChecker/wiki/TODOs).

-Note: For solving live challenges, developers can checkout [**TODOs**](https://github.com/Udayraj123/OMRChecker/wiki/TODOs).

-See all details in [Project Wiki](https://github.com/Udayraj123/OMRChecker/wiki/).

+See the complete guide and details at [Project Wiki](https://github.com/Udayraj123/OMRChecker/wiki/).

+

## 💡 What can OMRChecker do for me?

-Once you configure the OMR layout, just throw images of the sheets at the software; and you'll get back the graded responses in an excel sheet!

+

+Once you configure the OMR layout, just throw images of the sheets at the software; and you'll get back the marked responses in an excel sheet!

Images can be taken from various angles as shown below-

+

-### Code in action on images taken by scanner:

+### Code in action on images taken by scanner:

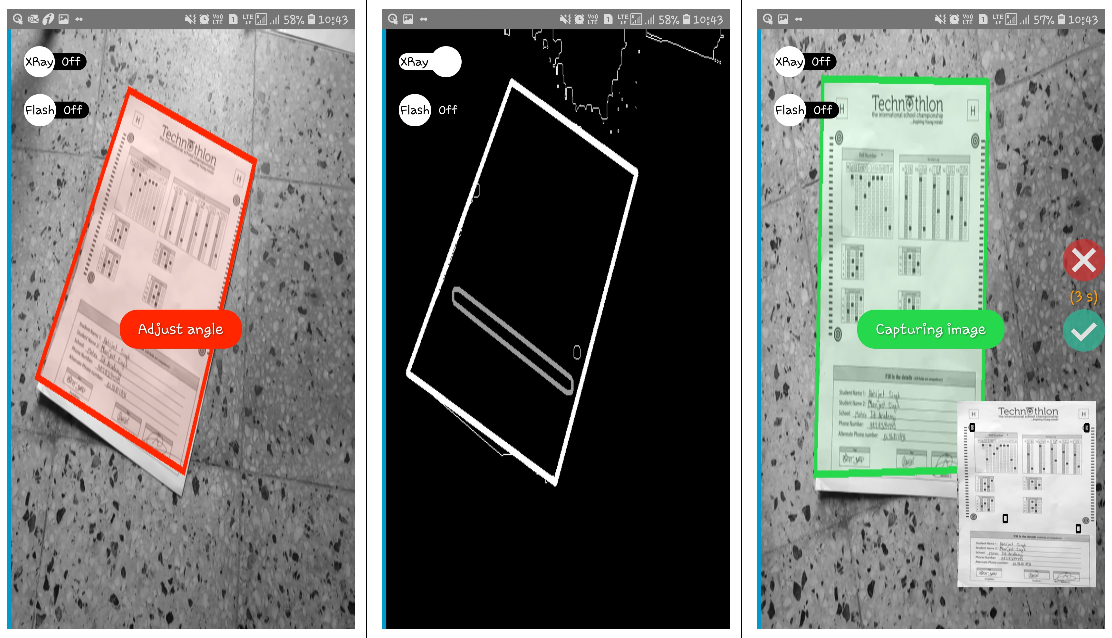

+

-### Code in action on images taken by a mobile phone:

+### Code in action on images taken by a mobile phone:

+

-See step by step processing of any OMR sheet:

+## Visuals

+

+### Processing steps

+

+See step-by-step processing of any OMR sheet:

+

@@ -63,7 +86,9 @@ See step by step processing of any OMR sheet:

*Note: This image is generated by the code itself!*

@@ -63,7 +86,9 @@ See step by step processing of any OMR sheet:

*Note: This image is generated by the code itself!*

-Output: A CSV sheet containing the detected responses and evaluated scores:

+### Output

+

+Get a CSV sheet containing the detected responses and evaluated scores:

@@ -71,29 +96,65 @@ Output: A CSV sheet containing the detected responses and evaluated scores:

-#### There are many visuals in the wiki. [Check them out!](https://github.com/Udayraj123/OMRChecker/wiki/Rich-Visuals)

+We now support [colored outputs](https://github.com/Udayraj123/OMRChecker/wiki/%5Bv2%5D-About-Evaluation) as well. Here's a sample output on another image -

+

+

+  +

+

+

+

+

+#### There are many more visuals in the wiki. Check them out [here!](https://github.com/Udayraj123/OMRChecker/wiki/Rich-Visuals)

## Getting started

+

-### Operating System

-Although windows is supported, **Linux** is recommended for a bug-free experience.

+**Operating system:** OSX or Linux is recommended although Windows is also supported.

-### 1. Install dependencies

-

+### 1. Install global dependencies

+

+

+

+To check if python3 and pip is already installed:

+

+```bash

+python3 --version

+python3 -m pip --version

+```

+

+

+ Install Python3

+

+To install python3 follow instructions [here](https://www.python.org/downloads/)

+

+To install pip - follow instructions [here](https://pip.pypa.io/en/stable/installation/)

+

+

+

+Install OpenCV

+

+**Any installation method is fine.**

+

+Recommended:

-_Note: To get a copy button for below commands, use [CodeCopy Chrome](https://chrome.google.com/webstore/detail/codecopy/fkbfebkcoelajmhanocgppanfoojcdmg) | [CodeCopy Firefox](https://addons.mozilla.org/en-US/firefox/addon/codecopy/)._

```bash

python3 -m pip install --user --upgrade pip

python3 -m pip install --user opencv-python

python3 -m pip install --user opencv-contrib-python

```

+

More details on pip install openCV [here](https://www.pyimagesearch.com/2018/09/19/pip-install-opencv/).

-> **Note:** On a fresh computer some of the libraries may get missing in above pip install.

+

+

+

+

+Extra steps(for Linux users only)

+

+Installing missing libraries(if any):

+

+On a fresh computer, some of the libraries may get missing in event after a successful pip install. Install them using following commands[(ref)](https://www.pyimagesearch.com/2018/05/28/ubuntu-18-04-how-to-install-opencv/):

-Install them using the [following commands](https://www.pyimagesearch.com/2018/05/28/ubuntu-18-04-how-to-install-opencv/):

-Windows users may skip this step.

```bash

sudo apt-get install -y build-essential cmake unzip pkg-config

sudo apt-get install -y libjpeg-dev libpng-dev libtiff-dev

@@ -101,126 +162,199 @@ sudo apt-get install -y libavcodec-dev libavformat-dev libswscale-dev libv4l-dev

sudo apt-get install -y libatlas-base-dev gfortran

```

-### 2. Clone the repo

+

+

+### 2. Install project dependencies

+

+Clone the repo

+

```bash

-# Shallow clone - takes latest code with minimal size

-git clone https://github.com/Udayraj123/OMRChecker --depth=1

+git clone https://github.com/Udayraj123/OMRChecker

+cd OMRChecker/

```

-Note: Contributors should take a full clone(without the --depth flag).

-#### Install other requirements

-

+Install pip requirements

```bash

-cd OMRChecker/

python3 -m pip install --user -r requirements.txt

```

-> **Note:** If you face a distutils error, use `--ignore-installed` flag in above command.

-

-### 3. Run the code

+_**Note:** If you face a distutils error in pip, use `--ignore-installed` flag in above command._

-1. Put your data in inputs folder. You can copy sample data as shown below:

- ```bash

- # Note: you may remove previous inputs if any with `mv inputs/* ~/.trash`

- cp -r ./samples/sample1 inputs/

- ```

- _Note: Change the number N in sampleN to see more examples_

-2. Run OMRChecker:

- **` python3 main.py `**

+

-These samples demonstrate different ways OMRChecker can be used.

+### 3. Run the code

-#### Running it on your own OMR Sheets

+1. First copy and examine the sample data to know how to structure your inputs:

+ ```bash

+ cp -r ./samples/sample1 inputs/

+ # Note: you may remove previous inputs (if any) with `mv inputs/* ~/.trash`

+ # Change the number N in sampleN to see more examples

+ ```

+2. Run OMRChecker:

+ ```bash

+ python3 main.py

+ ```

+

+Alternatively you can also use `python3 main.py -i ./samples/sample1`.

+

+Each example in the samples folder demonstrates different ways in which OMRChecker can be used.

+

+### Common Issues

+

+

+

+ 1. [Windows] ERROR: Could not open requirements file

+

+Command: python3 -m pip install --user -r requirements.txt

+

+ Link to Solution: #54

+

+

+

+2. [Linux] ERROR: No module named pip

+

+Command: python3 -m pip install --user --upgrade pip

+

+ Link to Solution: #70

+

+

+## OMRChecker for custom OMR Sheets

+

+1. First, [create your own template.json](https://github.com/Udayraj123/OMRChecker/wiki/User-Guide).

+2. Configure the tuning parameters.

+3. Run OMRChecker with appropriate arguments (See full usage).

+

+

+## Full Usage

-1. First [create your own template.json](https://github.com/Udayraj123/OMRChecker/wiki/User-Guide).

-2. Open `globals.py` and check the tuning parameters.

-

-3. Run OMRChecker with appropriate arguments.

- #### Full Usage

- ```

- python3 main.py [--setLayout] [--noCropping] [--autoAlign] [--inputDir dir1] [--outputDir dir1] [--template path/to/template.json]

- ```

- Explanation for the arguments:

+```

+python3 main.py [--setLayout] [--inputDir dir1] [--outputDir dir1]

+```

- `--setLayout`: Set up OMR template layout - modify your json file and run again until the template is set.

+Explanation for the arguments:

- `--autoAlign`: (experimental) Enables automatic template alignment - use if the scans show slight misalignments.

+`--setLayout`: Set up OMR template layout - modify your json file and run again until the template is set.

- `--noCropping`: Disables page contour detection - used when page boundary is not visible e.g. document scanner.

+`--inputDir`: Specify an input directory.

- `--inputDir`: Specify an input directory.

+`--outputDir`: Specify an output directory.

- `--outputDir`: Specify an output directory.

+

+

+ Deprecation logs

+

- `--template`: Specify a default template if no template file in input directories.

+- The old `--noCropping` flag has been replaced with the 'CropPage' plugin in "preProcessors" of the template.json(see [samples](https://github.com/Udayraj123/OMRChecker/tree/master/samples)).

+- The `--autoAlign` flag is deprecated due to low performance on a generic OMR sheet

+- The `--template` flag is deprecated and instead it's recommended to keep the template file at the parent folder containing folders of different images

+

-## 💡 Why is this software free?

+## FAQ

+

+

+

+Why is this software free?

+

-The idea for this project began at Technothlon, which is a non-profit international school championship. After seeing it work fabulously at such a large scale, we decided to share this simple and powerful tool with the world to perhaps help revamp OMR checking processes and help greatly reduce the tediousness of the work involved.

+This project was born out of a student-led organization called as [Technothlon](https://technothlon.techniche.org.in). It is a logic-based international school championship organized by students of IIT Guwahati. Being a non-profit organization, and after seeing it work fabulously at such a large scale we decided to share this tool with the world. The OMR checking processes still involves so much tediousness which we aim to reduce dramatically.

-And we believe in the power of open source! Currently, OMRChecker is in its initial stage where only developers can use it. We hope to see it become more user-friendly and even more robust with exposure to different inputs from you!

+We believe in the power of open source! Currently, OMRChecker is in an intermediate stage where only developers can use it. We hope to see it become more user-friendly as well as robust from exposure to different inputs from you all!

[](https://github.com/ellerbrock/open-source-badges/)

-### Can I use this code in my work?

+

+

+

+

+Can I use this code in my (public) work?

+

+

+OMRChecker can be forked and modified. You are encouraged to play with it and we would love to see your own projects in action!

+

+It is published under the [MIT license](https://github.com/Udayraj123/OMRChecker/blob/master/LICENSE).

+

+

+

+

+

+What are the ways to contribute?

+

+

+

+

+- Join the developer community on [Discord](https://discord.gg/qFv2Vqf) to fix [issues](https://github.com/Udayraj123/OMRChecker/issues) with OMRChecker.

+

+- If this project saved you large costs on OMR Software licenses, or saved efforts to make one. Consider donating an amount of your choice(donate section).

-OMRChecker can be forked and modified. **You are encouraged to play with it and we would love to see your own projects in action!** The only requirement is **disclose usage** of this software in your code. It is published under the [**GPLv3 license**](https://github.com/Udayraj123/OMRChecker/blob/master/LICENSE)

+

+

+

-## Credits

-_A Huge thanks to :_

-_The creative master **Adrian Rosebrock** for his blog :_ https://pyimagesearch.com

+

-_The legendary **Harrison** aka sentdex for his [video tutorials](https://www.youtube.com/watch?v=Z78zbnLlPUA&list=PLQVvvaa0QuDdttJXlLtAJxJetJcqmqlQq)._

+## Credits

-_And the james bond of computer vision **Satya Mallic** for his blog:_ https://www.learnopencv.com

+_A Huge thanks to:_

+_**Adrian Rosebrock** for his exemplary blog:_ https://pyimagesearch.com

-_And many other amazing people over the globe without whom this project would never have completed. Thank you!_

+_**Harrison Kinsley** aka sentdex for his [video tutorials](https://www.youtube.com/watch?v=Z78zbnLlPUA&list=PLQVvvaa0QuDdttJXlLtAJxJetJcqmqlQq) and many other resources._

-> _This project is dedicated to [Technothlon](https://www.facebook.com/technothlon.techniche) where the idea of making such solution was conceived. Technothlon is a logic-based examination organized by students of IIT Guwahati._

+_**Satya Mallic** for his resourceful blog:_ https://www.learnopencv.com

-

-## License

-```

-Copyright © 2019 Udayraj Deshmukh

-OMRChecker : Grade exams fast and accurately using a scanner 🖨 or your phone 🤳

-This is free software, and you are welcome to redistribute it under certain conditions;

-```

-For more details see [](https://github.com/Udayraj123/OMRChecker/blob/master/LICENSE)

-

## Related Projects

-Here's a sneak peak of the [Android OMR Helper App(WIP)](https://github.com/Udayraj123/AndroidOMRHelper):

+

+Here's a snapshot of the [Android OMR Helper App (archived)](https://github.com/Udayraj123/AndroidOMRHelper):

+

-  +

+

-

-### Other ways you can contribute:

-- Help OMRChecker cross 560 stars ⭐ to become #1 ([Currently #4](https://github.com/topics/omr)).

-Current stars: [](https://GitHub.com/Udayraj123/OMRChecker/stargazers/)

+## Stargazers over time

-- [Buy Me A Coffee ☕](https://www.buymeacoffee.com/Udayraj123) - To keep my brain juices flowing and help me create more such projects 💡

+[](https://starchart.cc/Udayraj123/OMRChecker)

-- If this project saved you large costs on OMR Software licenses, or saved efforts to make one. [](https://www.paypal.me/Udayraj123/500)

+---

-

-

-

+Made with ❤️ by Awesome Contributors

+

+

+  +

+

+---

+

+### License

+

+[](https://github.com/Udayraj123/OMRChecker/blob/master/LICENSE)

+

+For more details see [LICENSE](https://github.com/Udayraj123/OMRChecker/blob/master/LICENSE).

+

+### Donate

+

+

+

+

+---

+

+### License

+

+[](https://github.com/Udayraj123/OMRChecker/blob/master/LICENSE)

+

+For more details see [LICENSE](https://github.com/Udayraj123/OMRChecker/blob/master/LICENSE).

+

+### Donate

+

+ [](https://www.paypal.me/Udayraj123/500)

+